Data-Inclusive Open Source AI: Building a Fair and Collaborative Future

Artificial IntelligenceData-Inclusive Open Source AI: Building a Fair and Collaborative Future

:warning: WARNING: This content was generated using Generative AI. While efforts have been made to ensure accuracy and coherence, readers should approach the material with critical thinking and verify important information from authoritative sources.

Table of Contents

- Data-Inclusive Open Source AI: Building a Fair and Collaborative Future

- Introduction: The Convergence of Open Source and AI

- Chapter 1: The Critical Role of Data in AI Development

- Chapter 2: Ethical Implications of Data Access and Sharing in AI

- Chapter 3: Case Studies of Successful Open Source AI with Transparent Data Practices

- Chapter 4: Implementing Inclusive Data Policies in AI Initiatives

- Chapter 5: Future Scenarios and Potential Impacts of Data-Inclusive Open Source AI

- Conclusion: Charting the Path Forward for Data-Inclusive Open Source AI

Introduction: The Convergence of Open Source and AI

The Open Source Movement: A Brief History

From Software to AI: Expanding Open Source Principles

The journey from open source software to open source AI represents a significant evolution in the application of open source principles. As an expert who has witnessed and contributed to this transformation, I can attest to the profound impact this shift has had on the technology landscape, particularly in the realm of artificial intelligence.

The open source movement, which began in the software development world, was founded on principles of transparency, collaboration, and community-driven innovation. These principles have proven to be remarkably adaptable and valuable as we've moved into the era of AI development. The expansion of open source principles to AI has been both natural and necessary, driven by the increasing complexity and societal impact of AI systems.

- Transparency: Just as open source software allows for code inspection, open source AI aims to make algorithms and models transparent and auditable.

- Collaboration: The collaborative nature of open source projects has been crucial in accelerating AI development and fostering innovation.

- Accessibility: Open source AI democratises access to advanced technologies, enabling wider participation in AI development and application.

- Reproducibility: Open source principles in AI promote reproducibility of results, a critical factor in scientific and technological advancement.

However, the expansion of open source principles to AI has also introduced new challenges and considerations. Unlike traditional software, AI systems are heavily dependent on data, which adds layers of complexity in terms of privacy, ethics, and intellectual property. This dependency on data is a crucial factor that necessitates the inclusion of data considerations in any comprehensive definition of open source AI.

The transition from open source software to open source AI is not just a technological shift, but a paradigm change that requires us to rethink our approach to openness, collaboration, and innovation in the context of data-driven systems.

As we've seen in my consultancy work with government bodies and technology leaders, the application of open source principles to AI has far-reaching implications. It affects not only how AI systems are developed but also how they are deployed, governed, and integrated into society. The expansion of these principles to AI necessitates a more holistic approach that considers the entire AI ecosystem, including data, algorithms, models, and the infrastructure that supports them.

One of the most significant challenges in this expansion has been reconciling the open source ethos with the often proprietary nature of large-scale datasets used in AI training. This tension underscores the importance of including data considerations in the Open Source AI Definition (OSAID). Without addressing the role of data, any definition of open source AI risks being incomplete and potentially misleading.

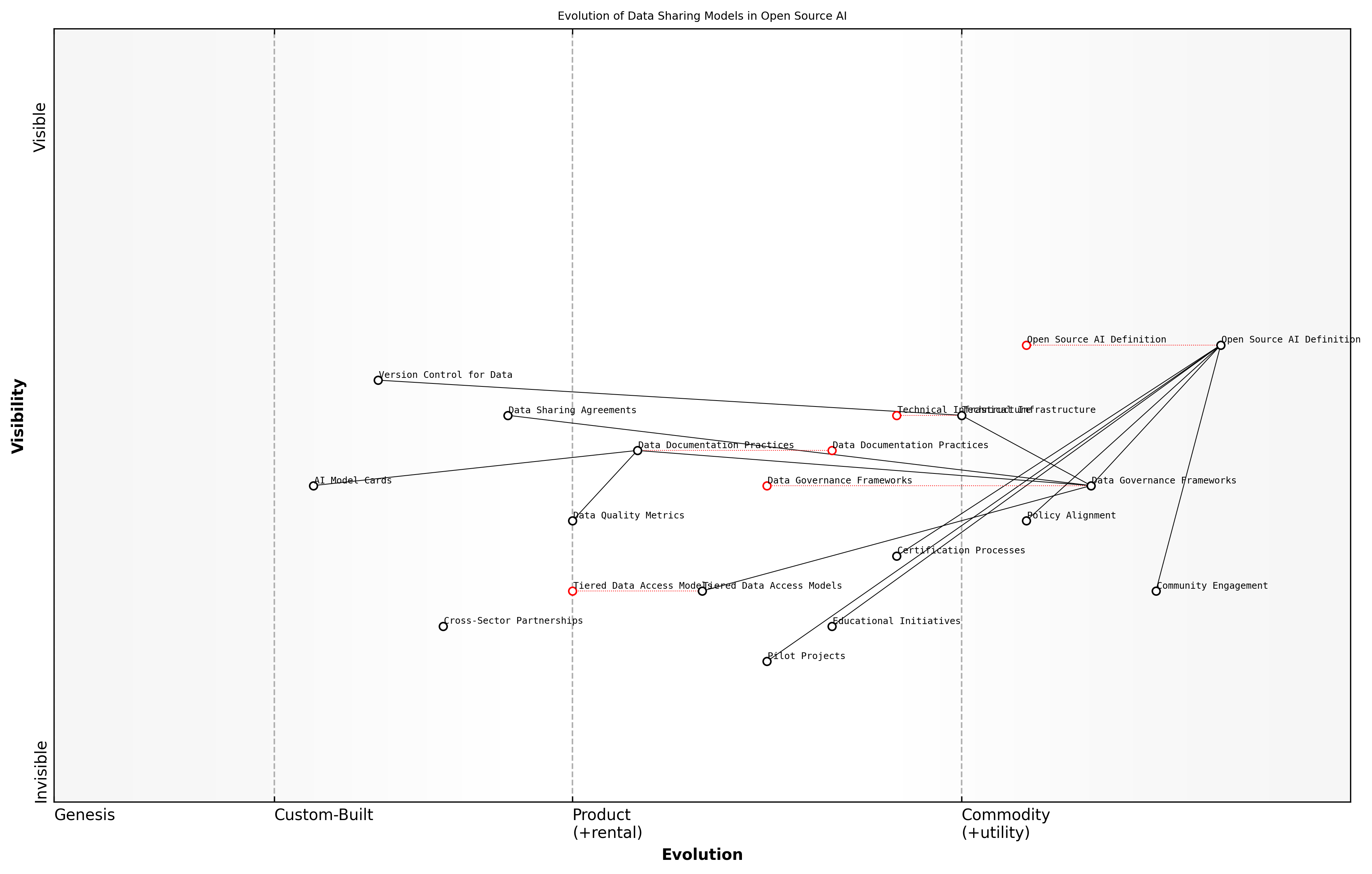

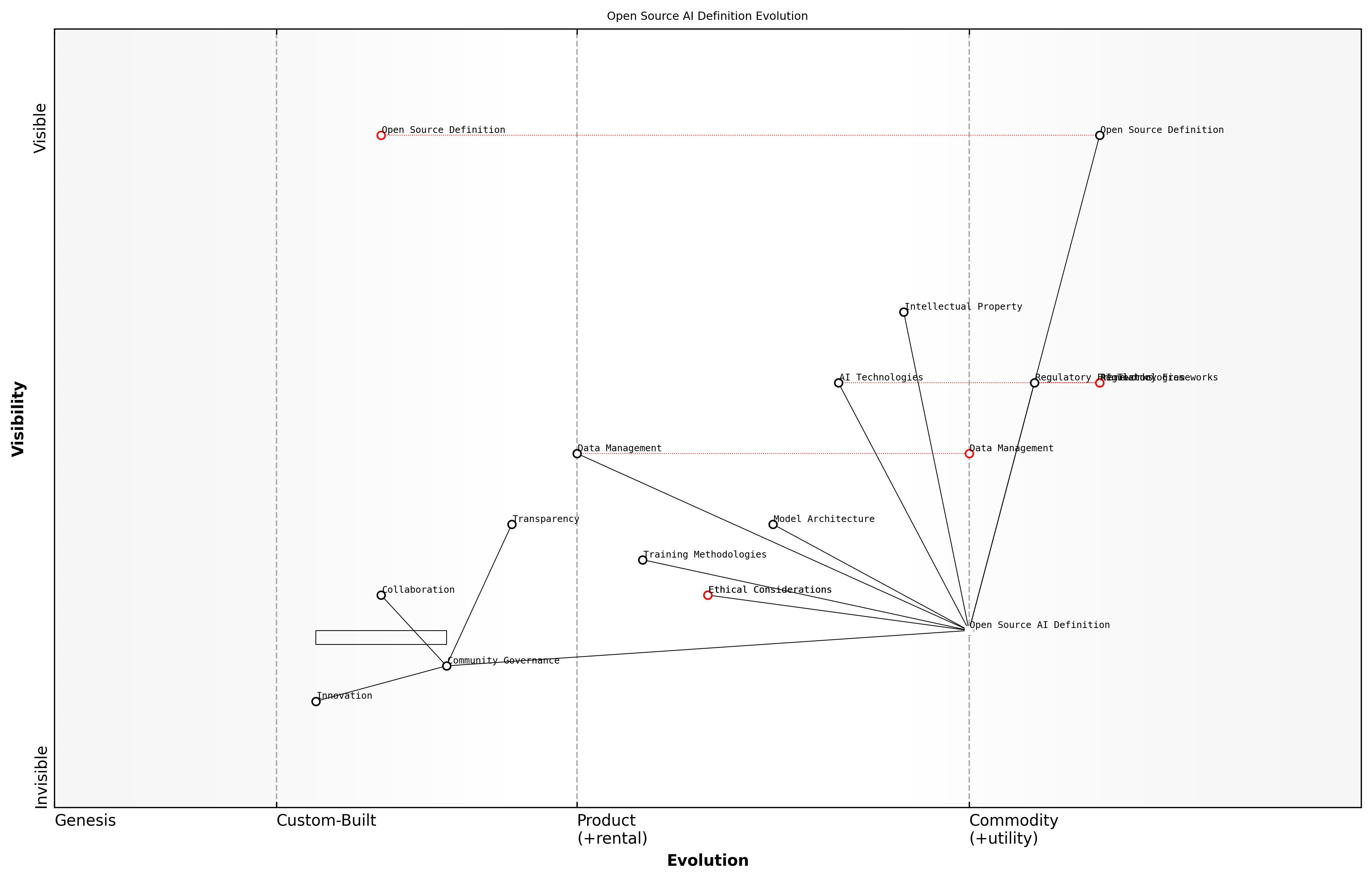

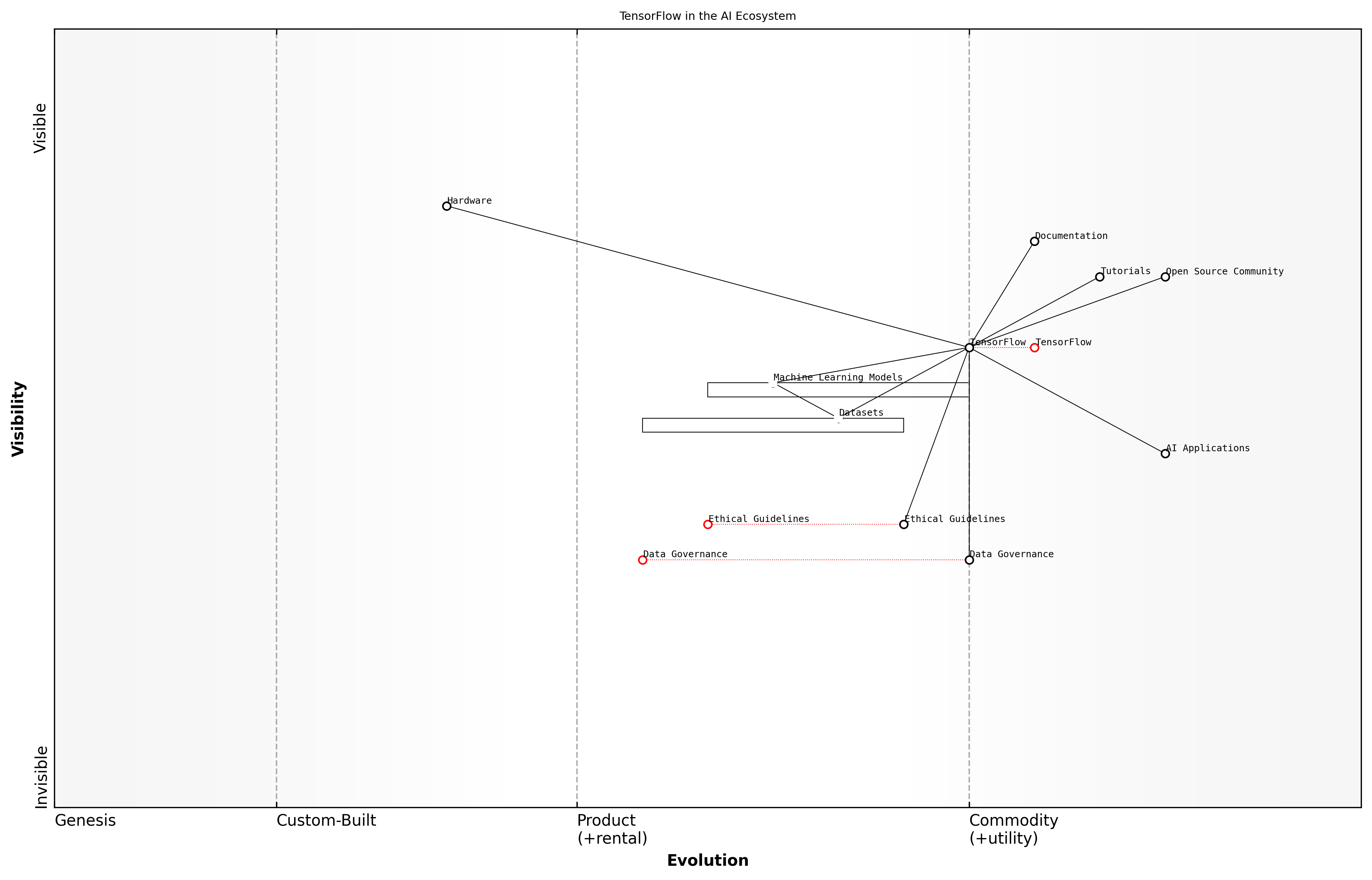

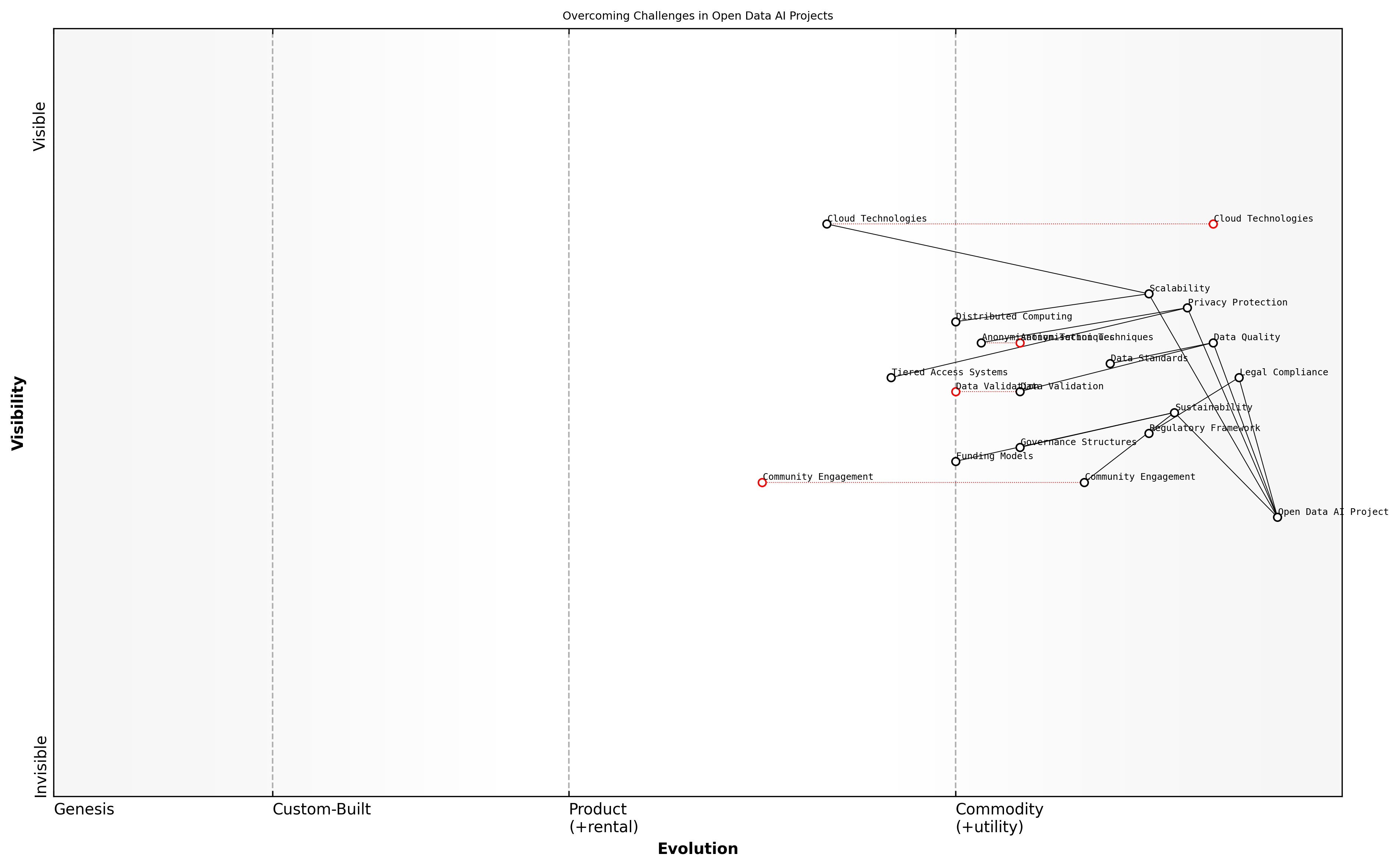

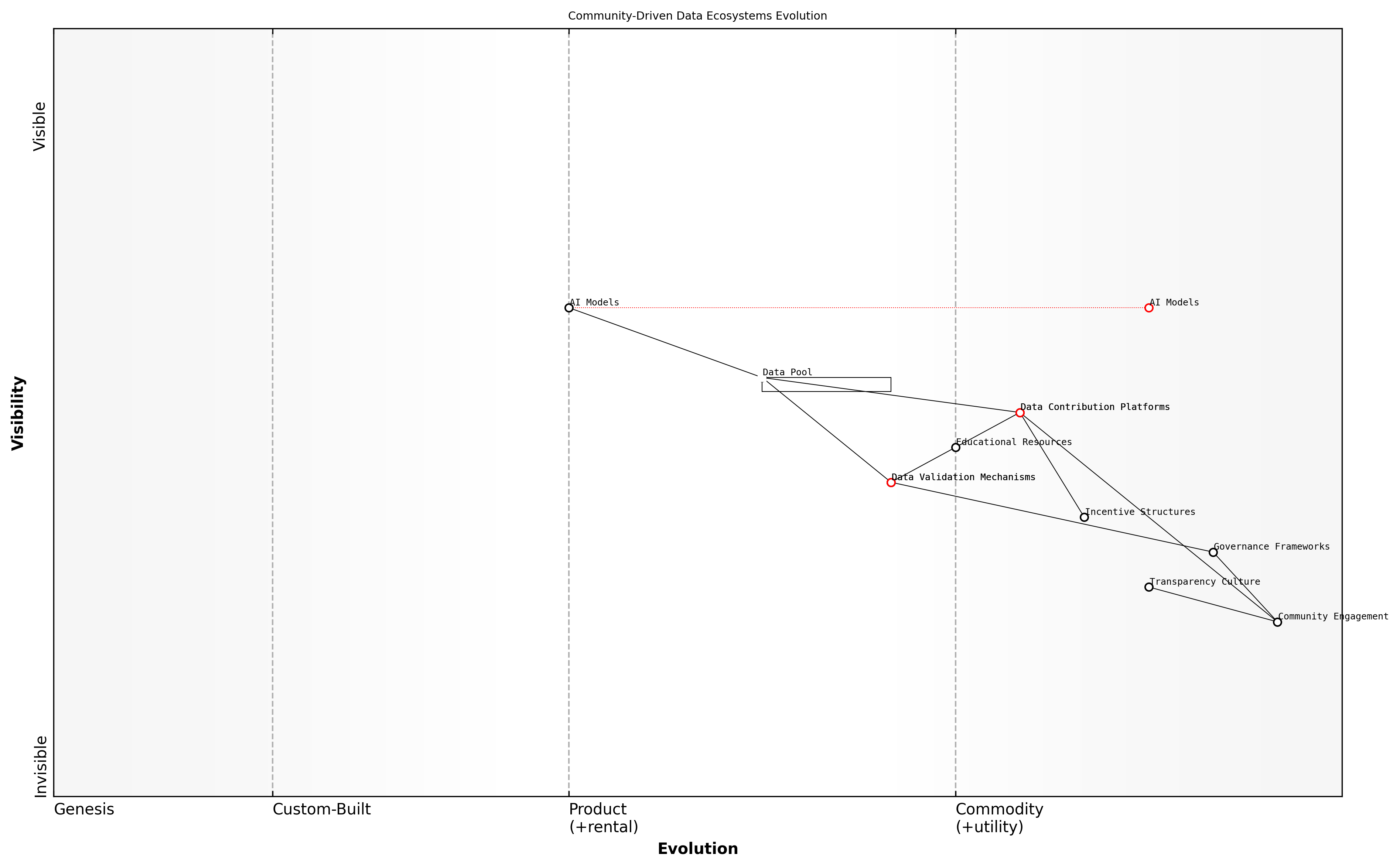

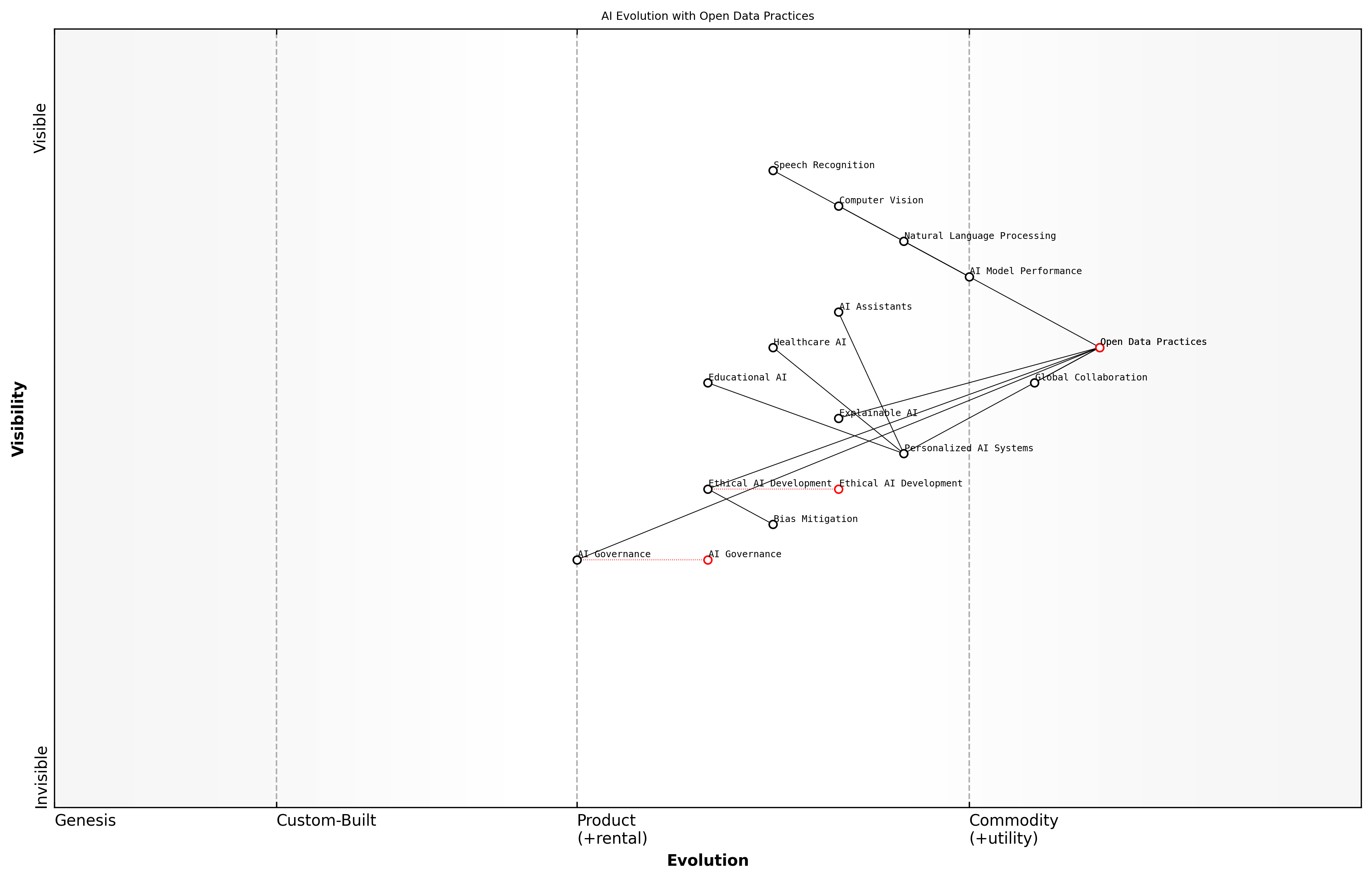

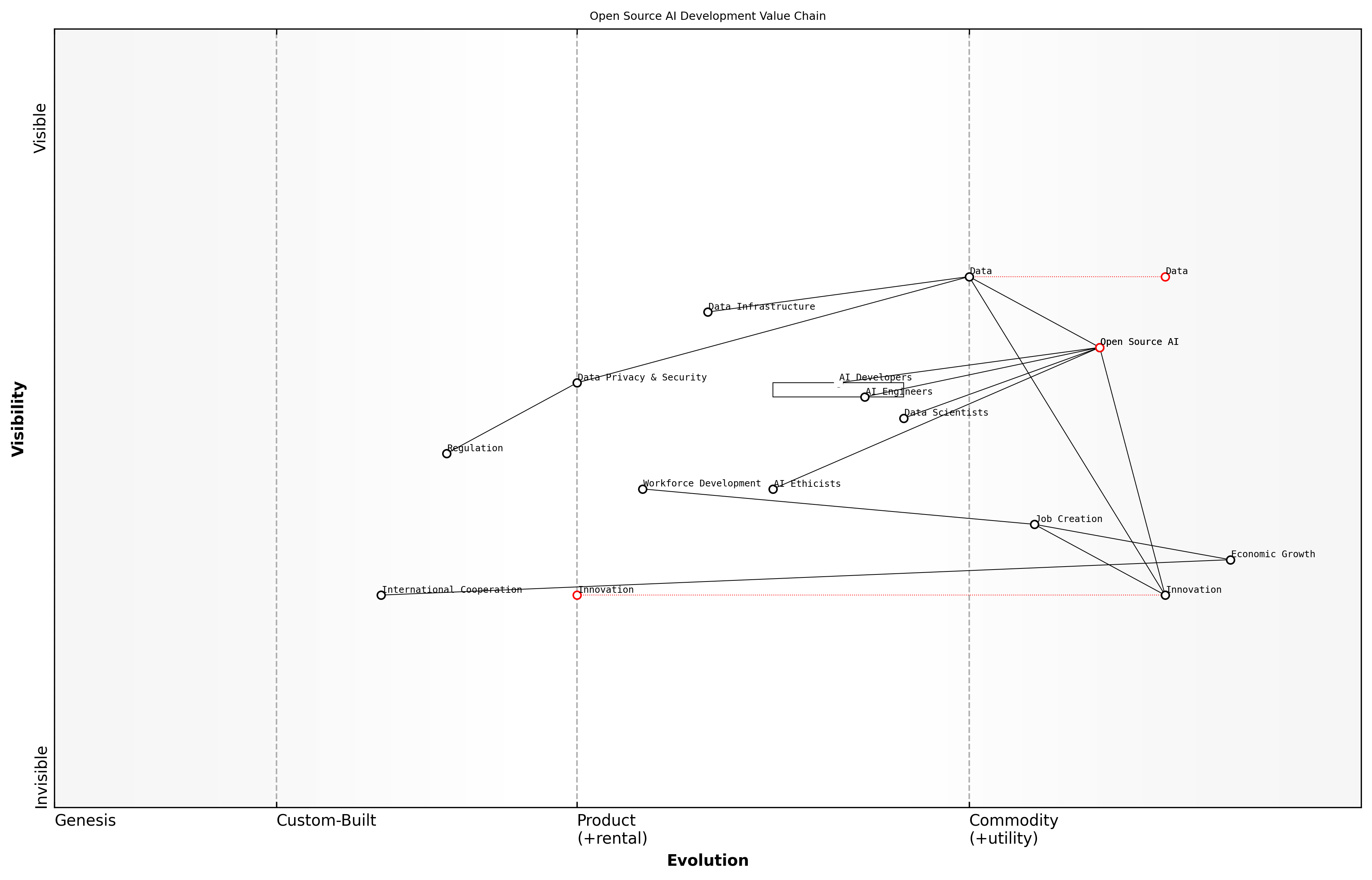

Wardley Map Assessment

This map represents a critical juncture in the evolution of open source practices from software to AI. It highlights the need for organisations to adapt their strategies, focusing on ethical AI development, robust governance, and advanced data management while maintaining the core principles of open source. Success in this transition will require balancing rapid innovation with responsible development practices, potentially reshaping the entire open source ecosystem.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_01_english_From Software to AI: Expanding Open Source Principles.md)

The expansion of open source principles to AI has also brought to the forefront issues of bias, fairness, and accountability. These concerns, while present in traditional software development, take on new dimensions in the context of AI due to the potential for automated decision-making systems to impact individuals and society at large. Addressing these issues requires not just open algorithms, but also transparent and well-documented datasets, further emphasising the need for data inclusion in the OSAID.

Open source AI is not just about sharing code; it's about fostering an ecosystem of transparency, collaboration, and responsible innovation that encompasses all aspects of AI development, including data.

As we continue to navigate this expansion of open source principles into the AI domain, it is crucial that we adapt our definitions, practices, and governance models to reflect the unique characteristics and requirements of AI systems. This includes recognising the central role of data and ensuring that our approach to open source AI is comprehensive, ethical, and aligned with the broader goals of technological advancement and societal benefit.

The Rise of AI and Its Impact on Open Source

The rise of Artificial Intelligence (AI) has been nothing short of revolutionary, fundamentally altering the landscape of technology and, by extension, the open source movement. As an expert who has witnessed this transformation firsthand, I can attest to the profound impact AI has had on open source principles, practices, and communities.

The advent of AI, particularly machine learning and deep learning technologies, has ushered in a new era of software development. These technologies rely heavily on vast amounts of data and complex algorithms, presenting unique challenges and opportunities for the open source community. The traditional open source model, which primarily focused on sharing source code, has been compelled to evolve to accommodate the data-centric nature of AI.

- Increased demand for open datasets

- Development of open source AI frameworks and libraries

- Emergence of AI-specific open source licences

- Growing emphasis on reproducibility and transparency in AI research

One of the most significant impacts of AI on open source has been the exponential growth in the development and sharing of open source AI tools and frameworks. Projects like TensorFlow, PyTorch, and scikit-learn have become cornerstones of AI development, embodying the open source ethos while pushing the boundaries of what's possible with freely available software.

The convergence of AI and open source has democratised access to cutting-edge technology, enabling individuals and organisations of all sizes to contribute to and benefit from AI advancements.

However, this convergence has also brought to light new challenges. The data-intensive nature of AI has raised questions about data privacy, ownership, and the ethical implications of sharing datasets. These concerns have prompted the open source community to grapple with complex issues that extend beyond traditional software licensing.

Moreover, the rise of AI has highlighted the limitations of existing open source definitions and licences when applied to AI systems. The intricate relationship between AI models, training data, and the resulting outputs has necessitated a re-evaluation of what it means for an AI system to be truly 'open source'.

- Challenges in defining openness for AI models

- Debates over the inclusion of training data in open source AI definitions

- Concerns about the potential misuse of open source AI technologies

- The need for new governance models for AI-driven open source projects

The impact of AI on open source has also extended to the very culture of open source communities. The collaborative nature of AI development, often requiring diverse expertise and substantial computational resources, has fostered new models of cooperation and resource sharing within the open source ecosystem.

The fusion of AI and open source principles has catalysed a new era of innovation, where openness and collaboration are not just ideals, but necessities for advancing the field.

As we look to the future, it's clear that the relationship between AI and open source will continue to evolve. The challenges and opportunities presented by this convergence will shape the next generation of open source initiatives, potentially redefining the very concept of openness in the digital age.

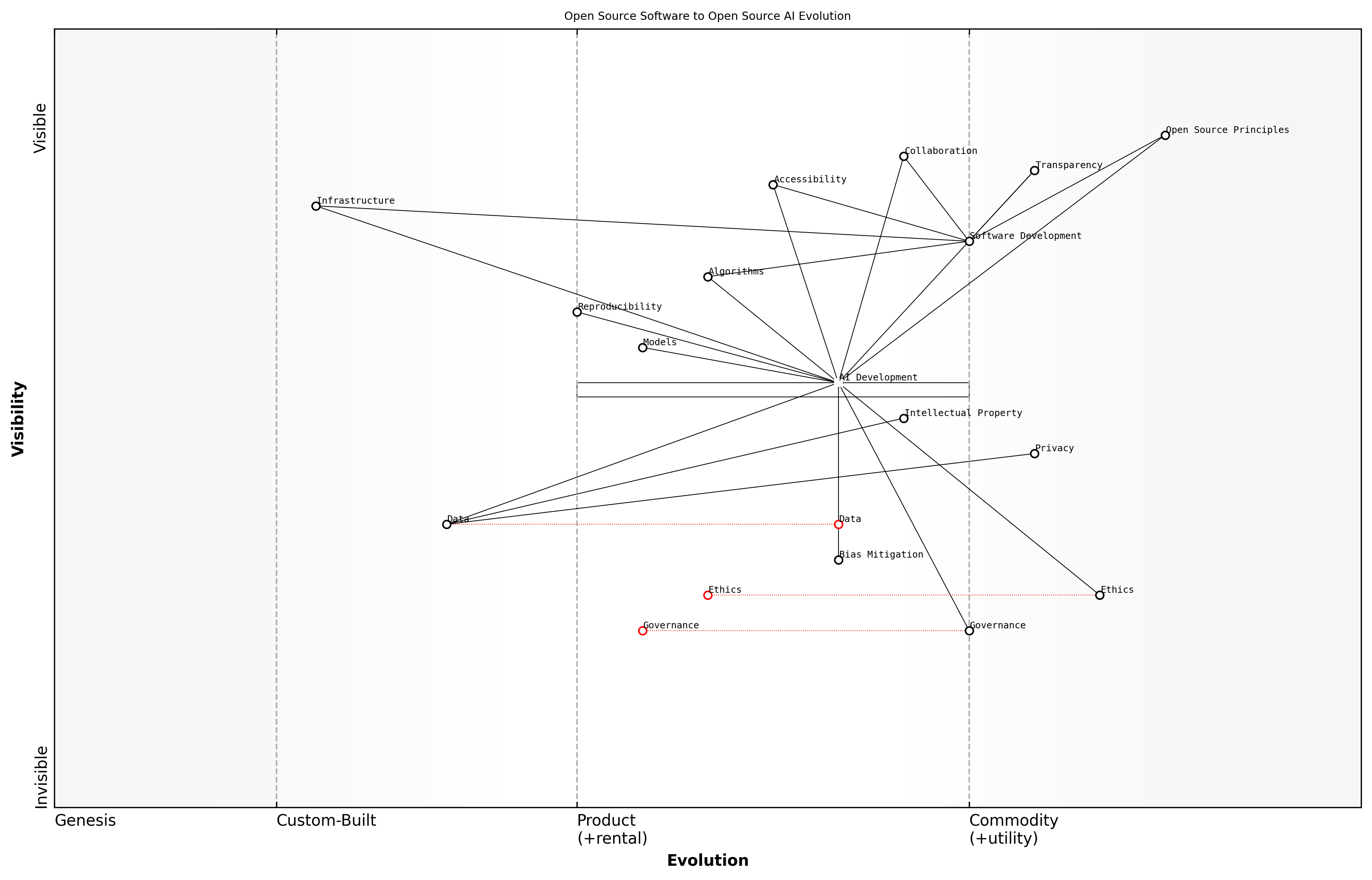

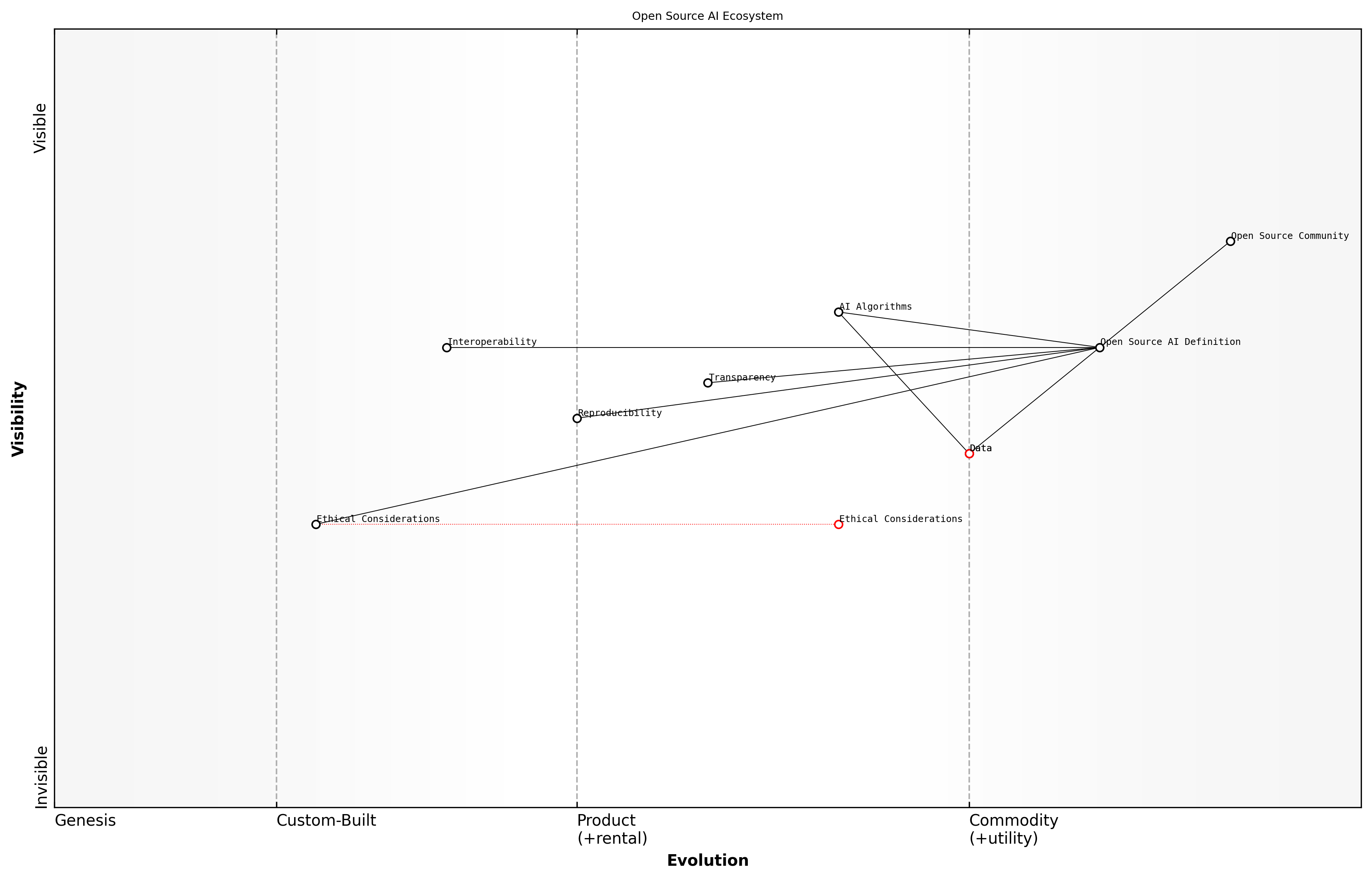

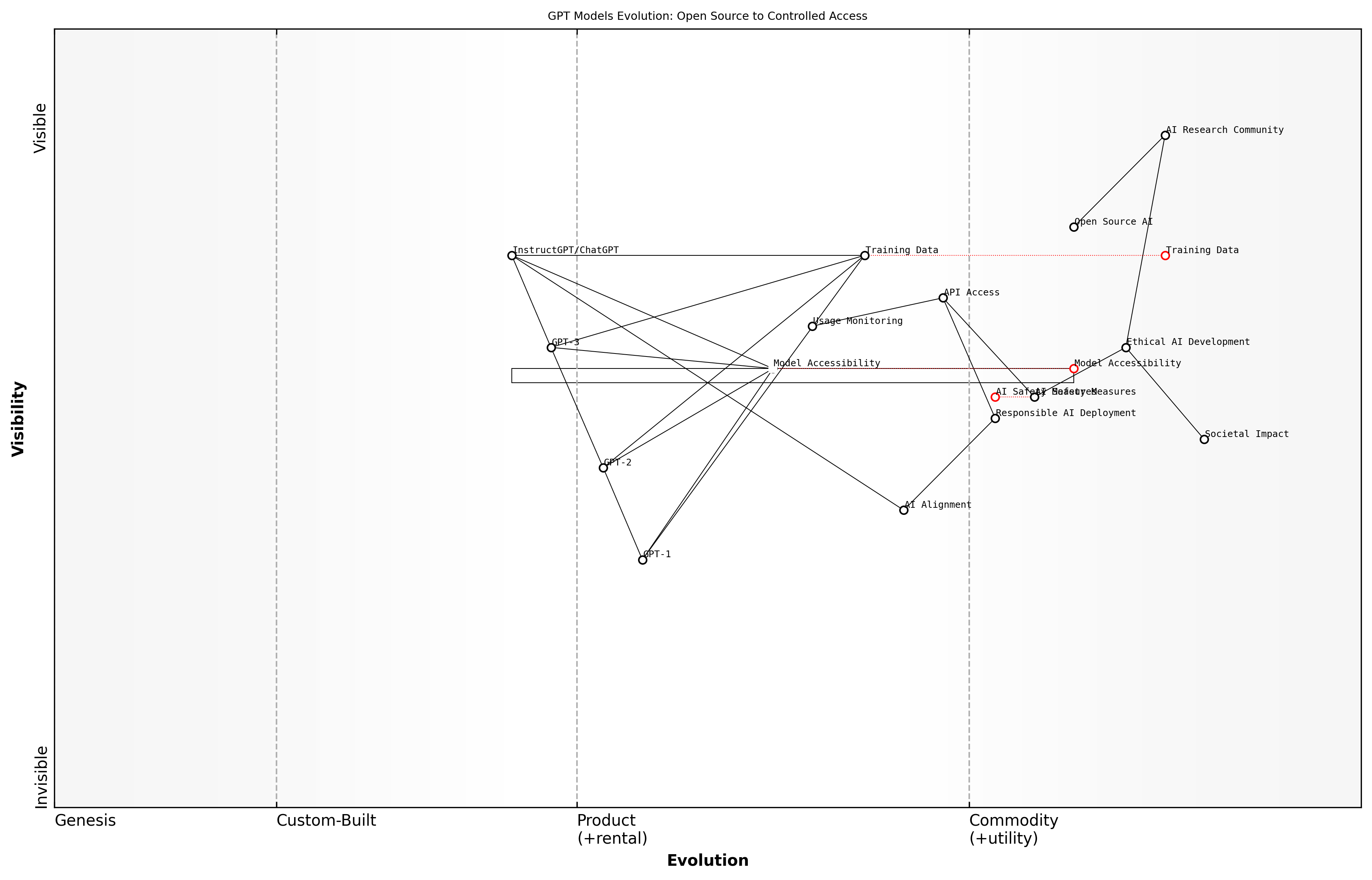

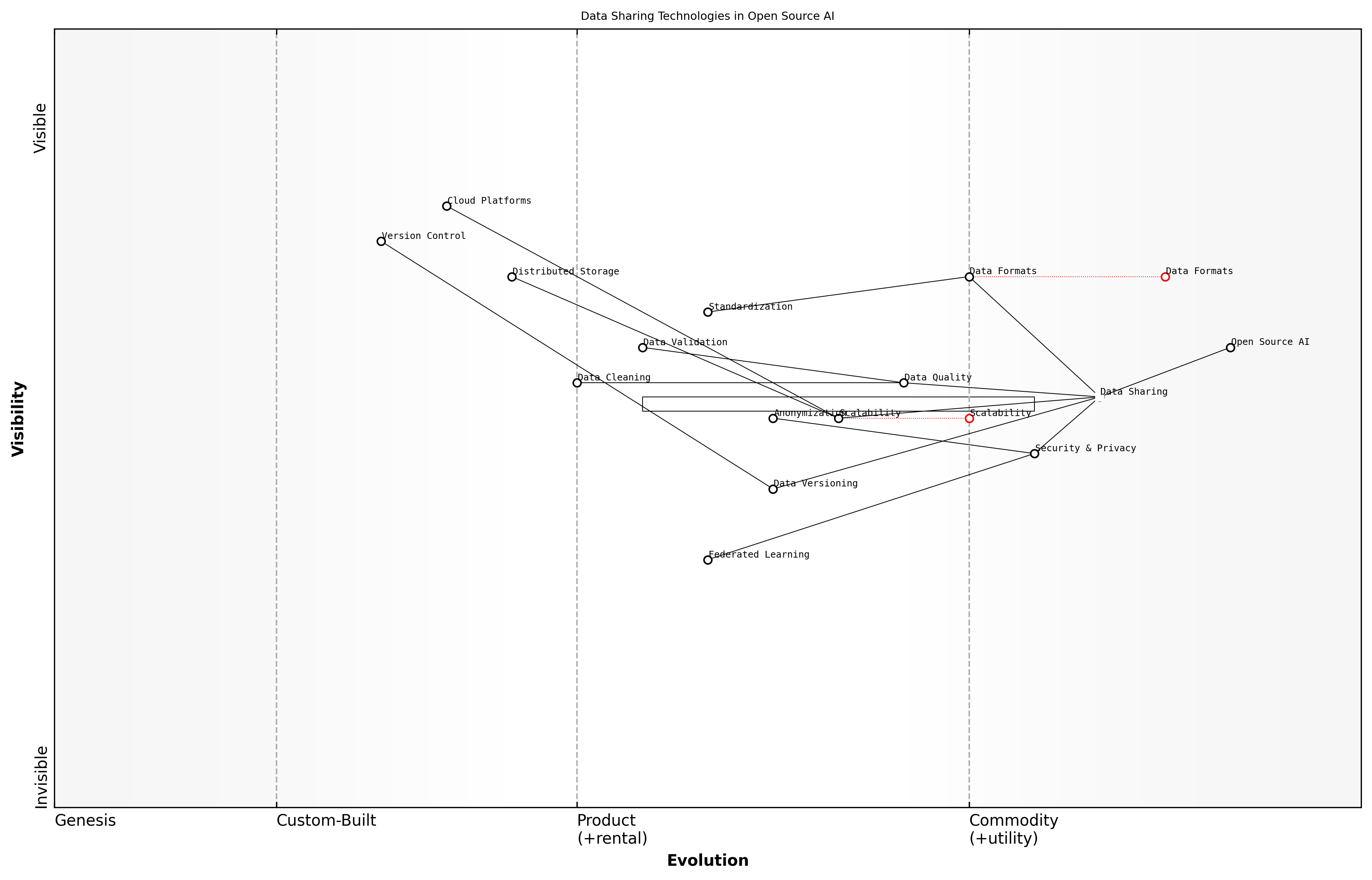

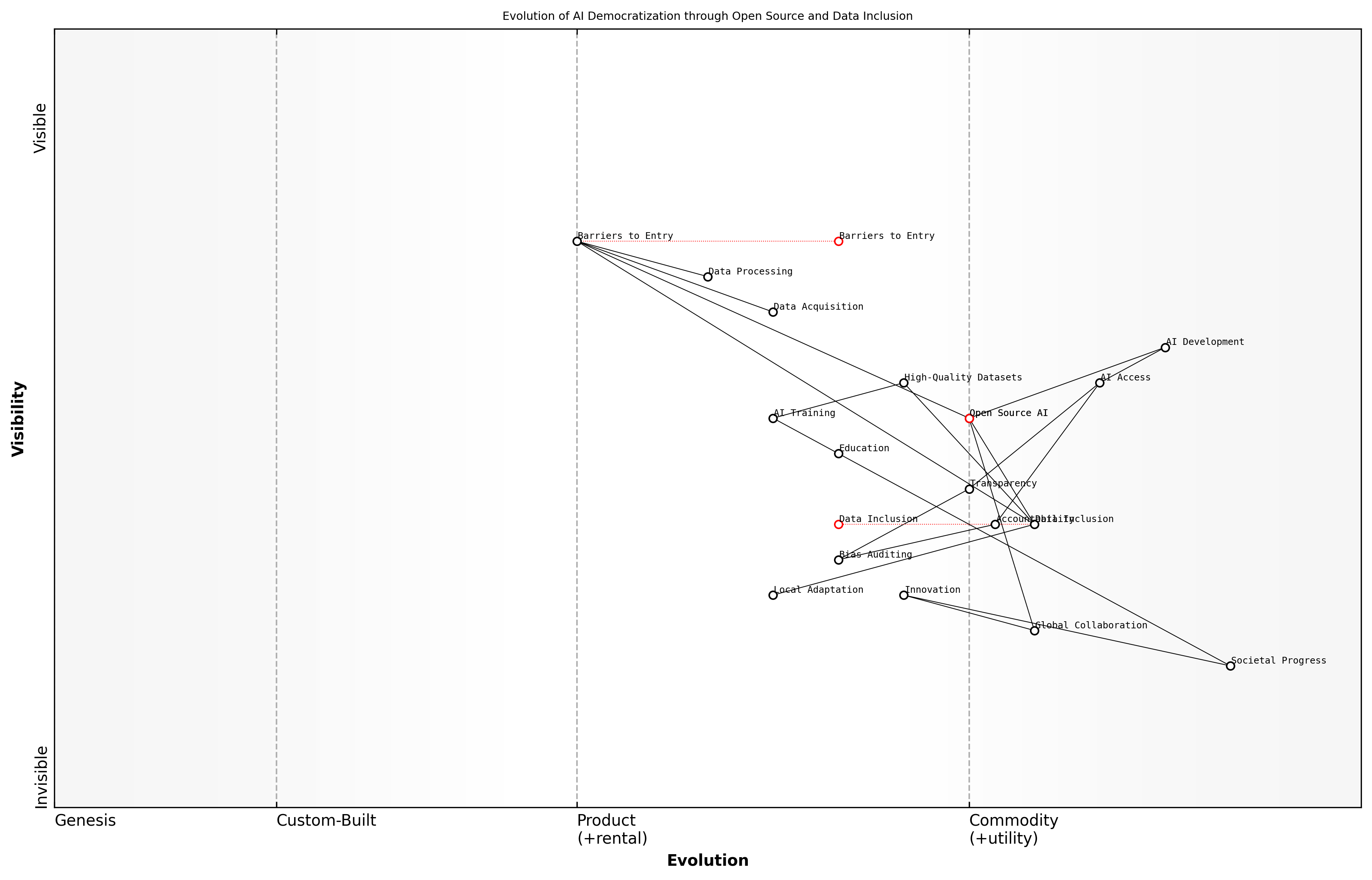

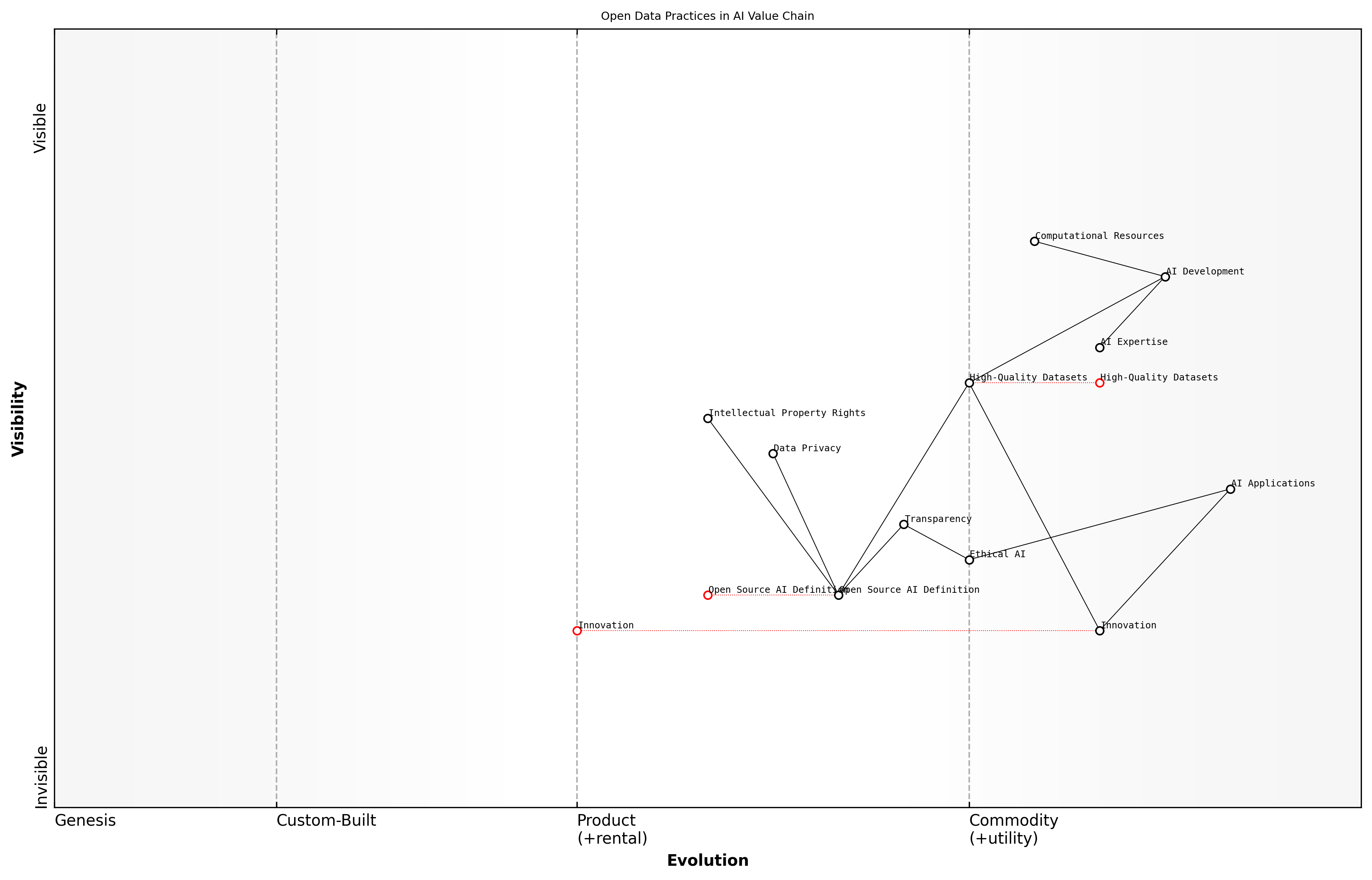

Wardley Map Assessment

The map reveals a dynamic shift in the open source landscape driven by AI advancements. While leveraging existing strengths in community and technology, there's a critical need to develop new capabilities in AI governance, ethics, and collaborative development. Organisations should prioritise adapting to data-centric open source models while proactively addressing emerging challenges in AI ethics and governance. The future success in this domain will depend on balancing innovation with responsible AI development practices.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_02_english_The Rise of AI and Its Impact on Open Source.md)

In conclusion, the rise of AI has profoundly impacted the open source movement, challenging traditional notions of openness, fostering new collaborative models, and necessitating the development of new frameworks and definitions. As we navigate this new landscape, it is imperative that we critically examine and adapt our open source practices to ensure they remain relevant and effective in the age of AI.

The Open Source Initiative (OSI) and AI

OSI's Role in Shaping Open Source Definitions

The Open Source Initiative (OSI) has played a pivotal role in shaping and maintaining the definition of open source since its inception in 1998. As an authoritative body in the open source community, OSI's influence extends far beyond software, now reaching into the realm of artificial intelligence. This evolution reflects the organisation's commitment to adapting its principles to emerging technologies while maintaining the core values of openness, collaboration, and transparency.

OSI's primary contribution to the open source movement has been the creation and stewardship of the Open Source Definition (OSD). This definition serves as the foundation for determining whether a software licence can be considered truly open source. The OSD's ten criteria encompass crucial aspects such as free redistribution, access to source code, and the ability to create derived works. These principles have been instrumental in fostering a robust ecosystem of open source software and have significantly influenced the development of collaborative technologies.

The Open Source Definition has been the cornerstone of the open source movement, providing a clear and unambiguous standard that has enabled the growth of a global community of developers and users.

As artificial intelligence has emerged as a transformative technology, OSI has recognised the need to extend its purview to encompass AI systems. This expansion is not without challenges, as AI introduces unique considerations that were not present in traditional software development. The complexity of AI systems, particularly in terms of their reliance on vast datasets and sophisticated algorithms, necessitates a re-evaluation of what 'openness' means in this context.

- Adapting the concept of source code access to include AI models and architectures

- Addressing the role of training data in AI system development and deployment

- Considering the ethical implications of AI transparency and accountability

- Balancing intellectual property concerns with the principles of openness

OSI's approach to defining open source AI has been characterised by careful deliberation and community engagement. The organisation has initiated discussions with AI researchers, developers, and ethicists to understand the unique challenges posed by AI systems. These consultations have highlighted the critical importance of data in AI development, leading to debates about whether and how data should be incorporated into the open source definition for AI.

The release candidate Open Source AI Definition (OSAID) represents a significant milestone in OSI's efforts to adapt its principles to the AI landscape. This definition aims to provide a framework for evaluating the openness of AI systems, taking into account not only the code and algorithms but also the data and models that are integral to their functioning. However, the current iteration of OSAID has sparked debate within the community, particularly regarding the extent to which data should be included in the definition.

The challenge we face is to create a definition that captures the essence of openness in AI whilst acknowledging the complexities and sensitivities surrounding data. It's a delicate balance, but one that is crucial for the future of open source AI.

OSI's role in shaping the open source definition for AI is not merely about creating a set of criteria. It is about fostering a culture of openness, collaboration, and ethical consideration in the development of AI technologies. By engaging with diverse stakeholders and carefully considering the implications of various approaches, OSI is working to ensure that the principles of open source can be meaningfully applied to AI systems.

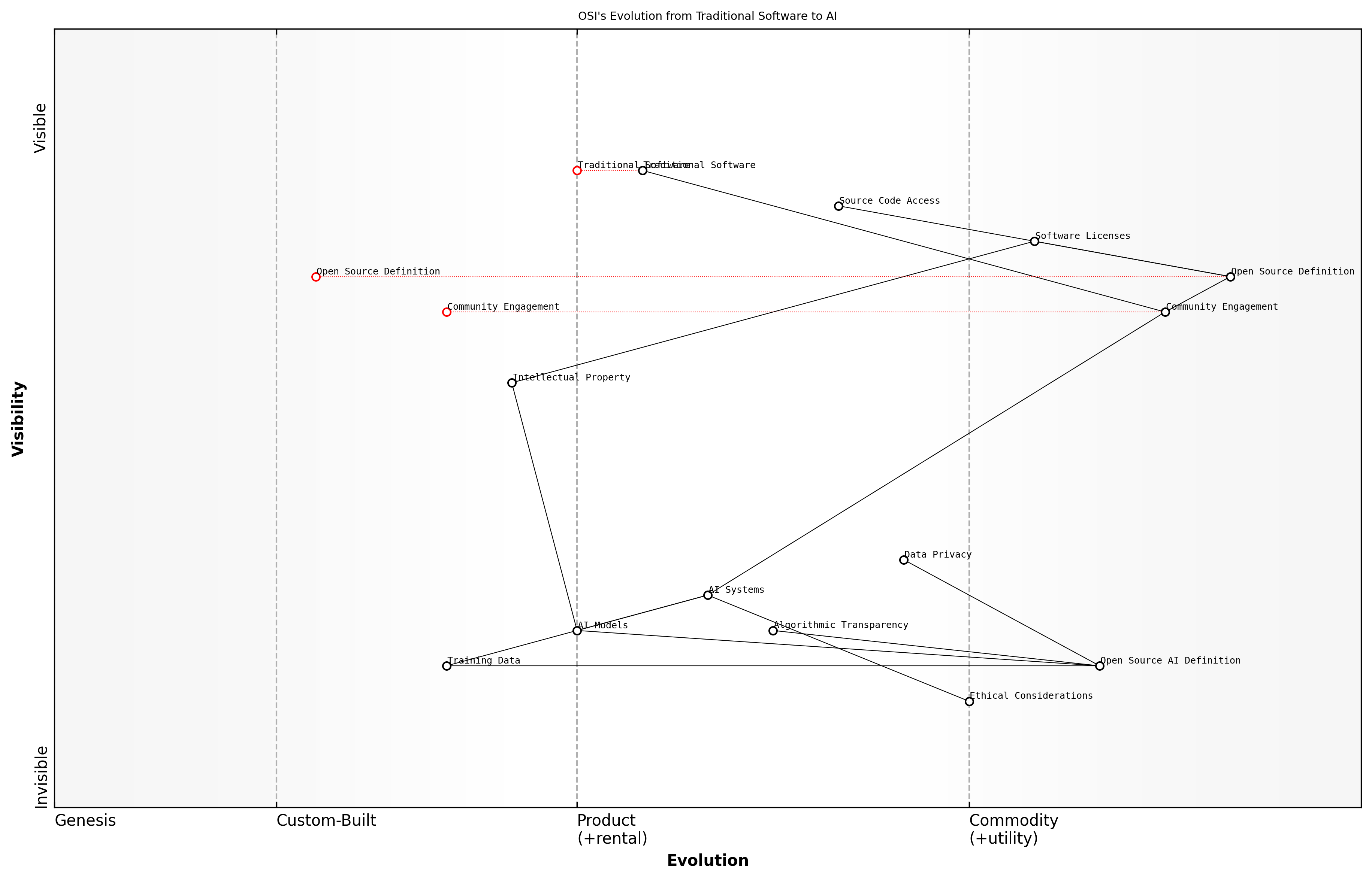

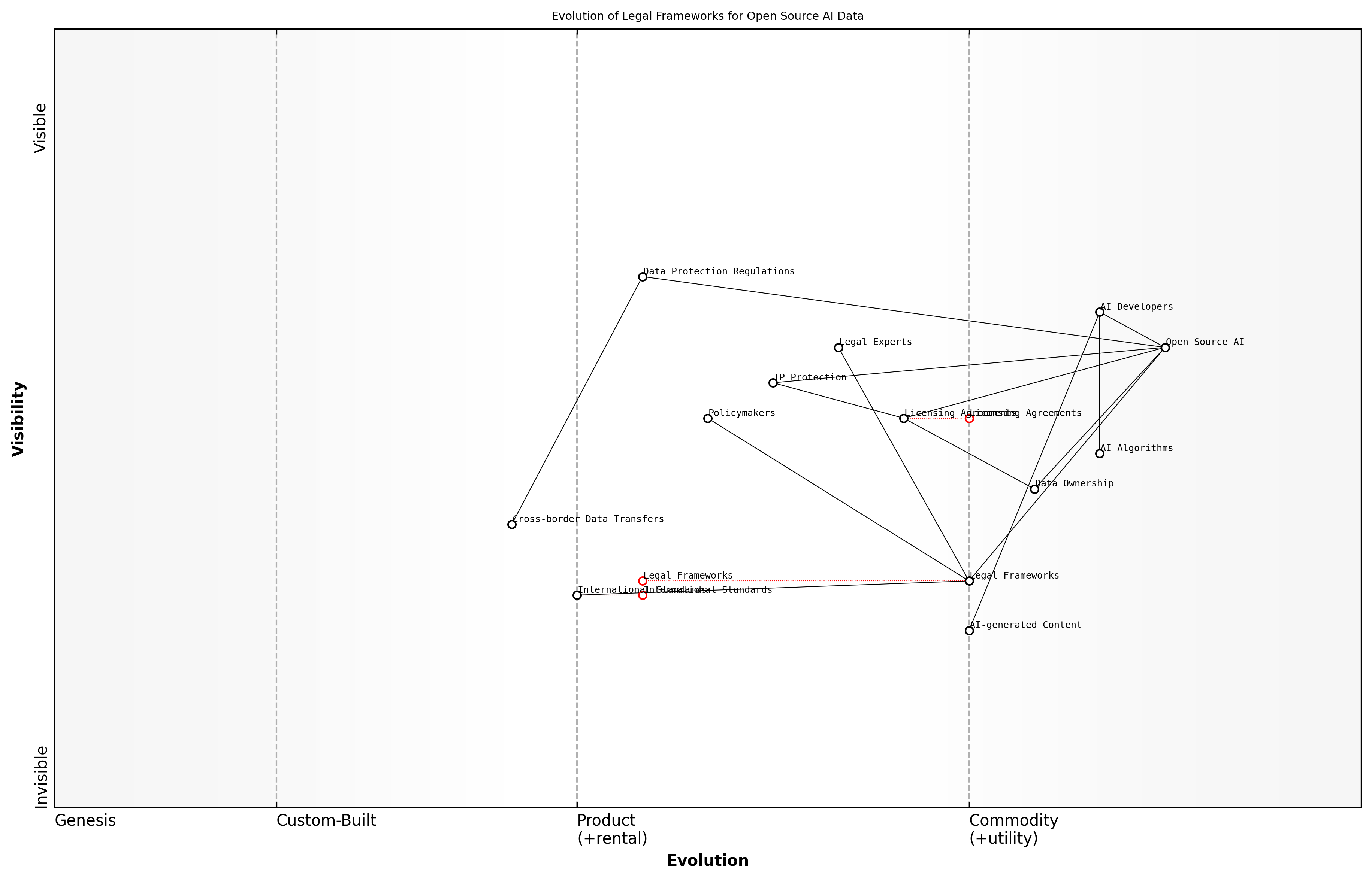

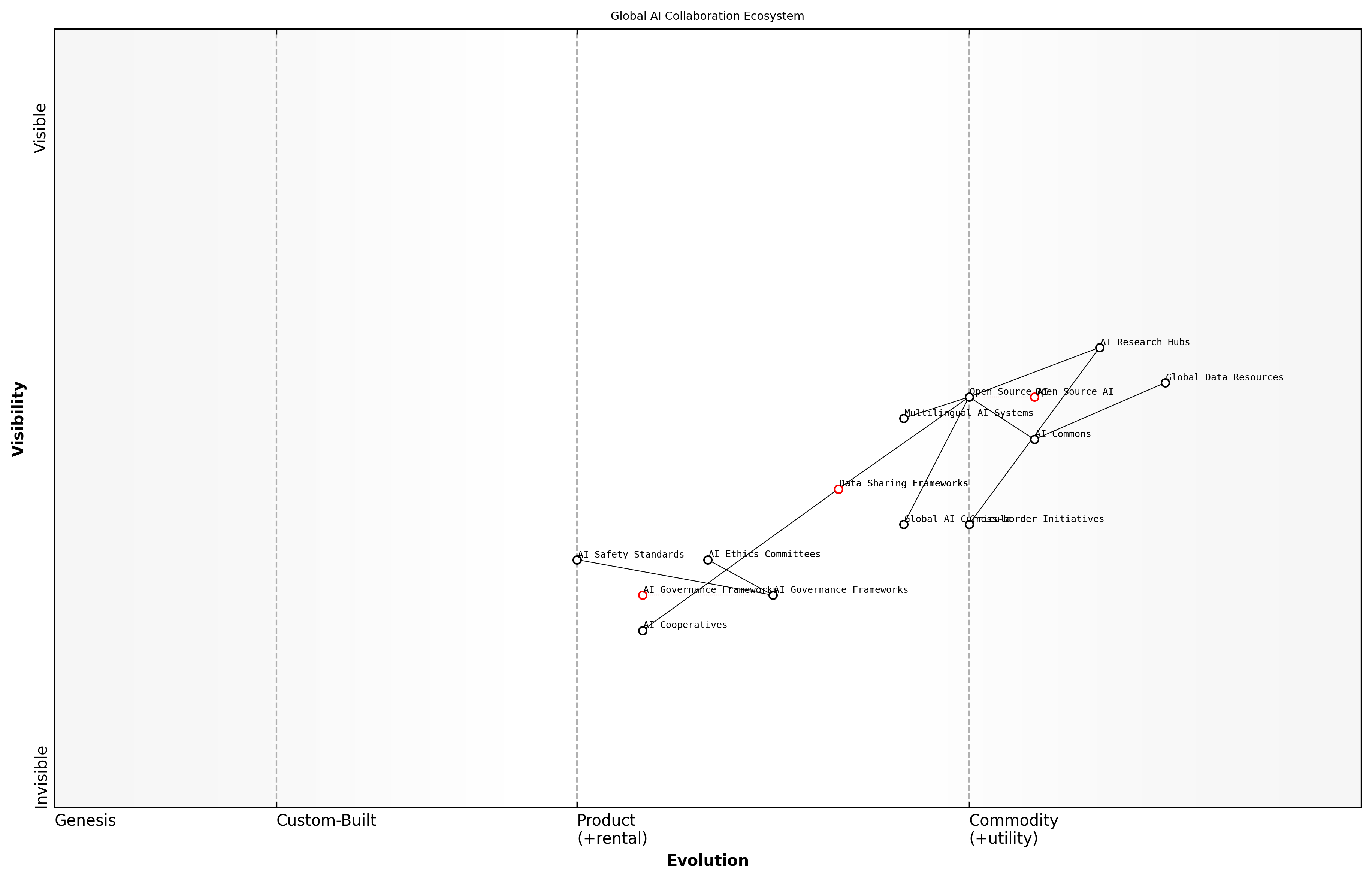

Wardley Map Assessment

OSI is at a critical juncture, needing to rapidly evolve its core definitions and practices to encompass AI technologies. The organization has a strong foundation in traditional open source but must quickly build capabilities in AI governance, ethics, and community engagement to remain relevant and influential. Prioritizing the development of an Open Source AI Definition and fostering partnerships in the AI ecosystem will be crucial for success. The integration of ethical considerations and data privacy into open source AI practices presents both a challenge and an opportunity for OSI to lead in shaping the future of open and responsible AI development.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_03_english_OSI's Role in Shaping Open Source Definitions.md)

As OSI continues to refine its approach to open source AI, it faces the challenge of balancing the need for specificity with the desire for broad applicability. The organisation must navigate complex issues such as data privacy, algorithmic transparency, and the potential for AI systems to evolve in ways that may not be fully predictable at the time of their initial release. These considerations underscore the importance of OSI's ongoing efforts to adapt and expand its definitions to meet the needs of a rapidly evolving technological landscape.

In conclusion, OSI's role in shaping open source definitions has been crucial to the growth and success of the open source movement. As the organisation extends its focus to AI, it carries forward a legacy of promoting openness and collaboration. The challenges posed by AI are significant, but they also present an opportunity for OSI to reaffirm and evolve its core principles, ensuring that the benefits of open source can be fully realised in the age of artificial intelligence.

The Need for an Open Source AI Definition (OSAID)

As artificial intelligence (AI) continues to revolutionise industries and reshape societies, the need for a comprehensive Open Source AI Definition (OSAID) has become increasingly apparent. The Open Source Initiative (OSI), long recognised as the steward of the Open Source Definition (OSD) for software, finds itself at a critical juncture where its expertise and leadership are essential in navigating the complex landscape of AI development and deployment.

The convergence of open source principles and AI technologies presents unique challenges and opportunities that necessitate a dedicated framework. The OSAID aims to address these by providing clear guidelines and standards for what constitutes 'open source' in the context of AI systems. This definition is crucial for several reasons:

- Ensuring transparency and accountability in AI development

- Promoting collaboration and knowledge sharing within the AI community

- Addressing ethical concerns and potential biases in AI systems

- Facilitating the democratisation of AI technologies

- Establishing a common language and set of expectations for open source AI projects

The complexity of AI systems, which often involve not just software but also complex models, algorithms, and vast datasets, requires a nuanced approach to openness. Traditional open source software definitions, while valuable, do not fully capture the multifaceted nature of AI systems. The OSAID must consider aspects such as model architecture, training methodologies, and crucially, the role of data in AI development and deployment.

The open source movement has always been about more than just code. With AI, we're entering a new frontier where the principles of openness and collaboration are more important than ever. The OSAID is not just a definition; it's a roadmap for ethical and transparent AI development.

One of the key challenges in developing the OSAID is striking the right balance between openness and the protection of intellectual property and sensitive data. AI systems often rely on proprietary datasets or models that may have commercial value or contain personal information. The OSAID must provide guidance on how to navigate these complexities while still adhering to the core principles of open source.

Moreover, the OSAID needs to address the unique ethical considerations that arise in AI development. This includes issues such as algorithmic bias, fairness, and the potential for AI systems to be used in ways that infringe on privacy or human rights. By incorporating these ethical dimensions, the OSAID can help ensure that open source AI projects are not only technically sound but also socially responsible.

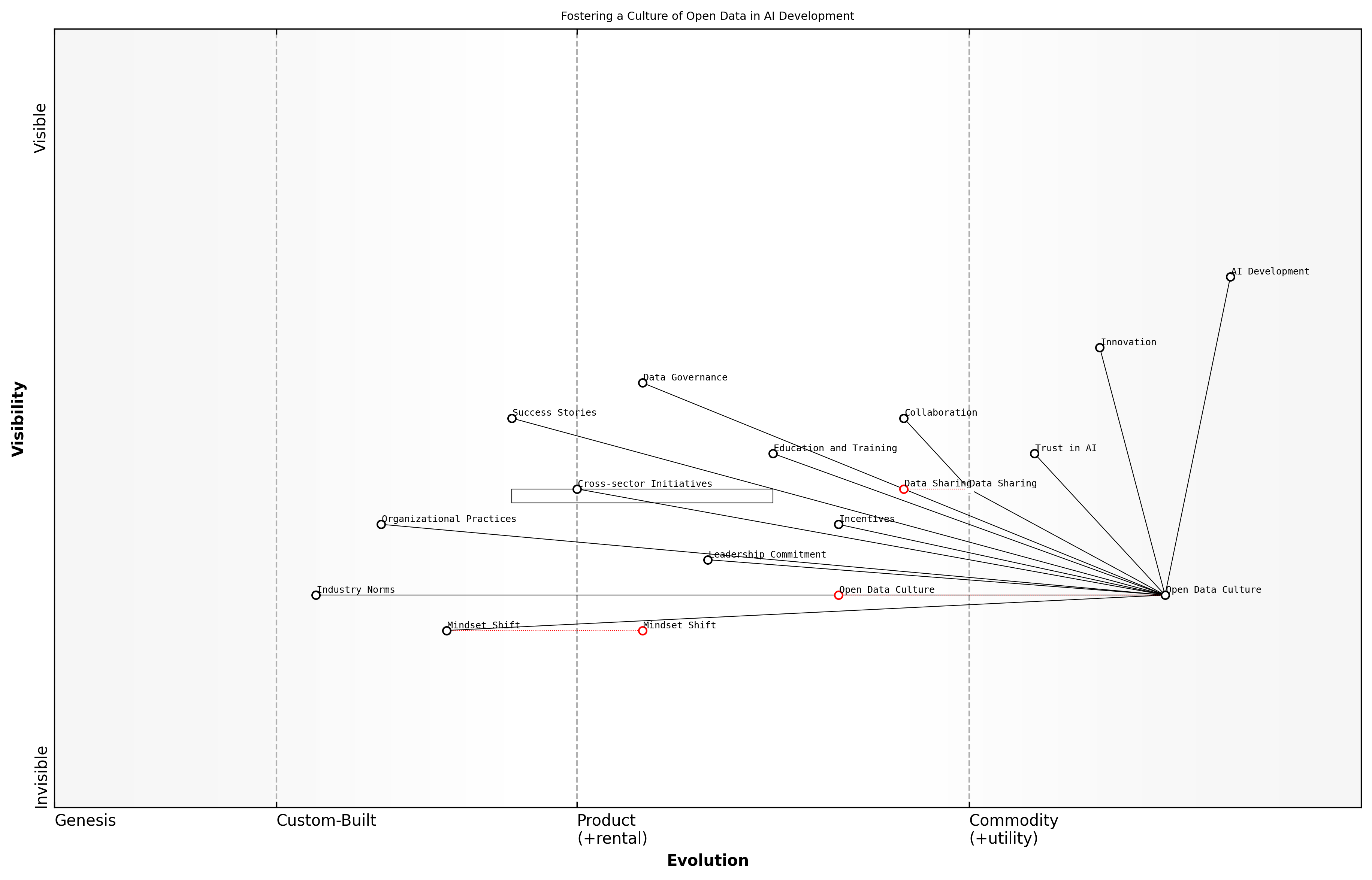

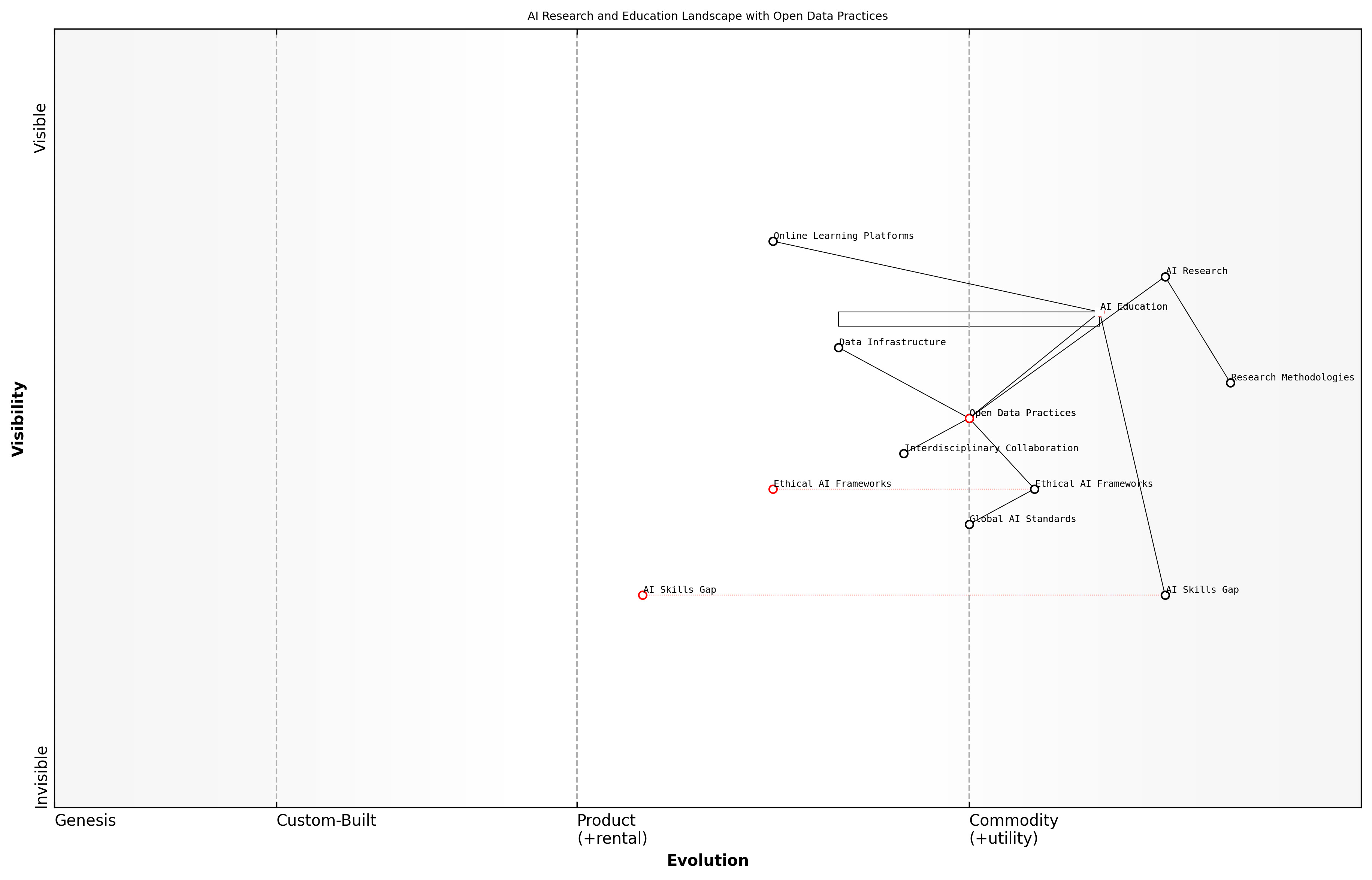

Wardley Map Assessment

The Open Source AI Definition evolution represents a critical juncture in the development of AI technologies. By leveraging existing open source principles and focusing on ethical considerations and community governance, there's a significant opportunity to shape the future of AI development in a more open, transparent, and responsible manner. Success will require careful navigation of rapidly evolving technologies, regulatory landscapes, and community dynamics, with a strong emphasis on building robust governance structures and ethical frameworks.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_04_english_The Need for an Open Source AI Definition (OSAID).md)

The development of the OSAID also presents an opportunity to foster greater collaboration between different stakeholders in the AI ecosystem. This includes researchers, developers, policymakers, and end-users. By providing a common framework and set of expectations, the OSAID can facilitate more effective communication and cooperation across these diverse groups.

Furthermore, the OSAID has the potential to accelerate innovation in AI by lowering barriers to entry and promoting the sharing of knowledge and resources. This is particularly important in a field where access to large-scale computing resources and extensive datasets can often determine the pace of progress.

Open source has been a catalyst for innovation in software development. With a well-crafted OSAID, we have the opportunity to unleash the same collaborative power in the realm of AI, potentially leading to breakthroughs that benefit all of humanity.

However, the creation of the OSAID is not without its challenges. One of the primary hurdles is the rapid pace of AI development, which means that any definition must be flexible enough to accommodate new technologies and methodologies as they emerge. The OSAID must strike a delicate balance between providing clear guidelines and remaining adaptable to future innovations.

Another significant consideration is the global nature of AI development. The OSAID must be applicable across different legal and regulatory frameworks, taking into account varying approaches to data protection, intellectual property, and AI governance around the world. This international perspective is crucial for ensuring the widespread adoption and relevance of the OSAID.

- Defining clear criteria for what constitutes 'open' in AI systems

- Addressing the role of data in open source AI projects

- Incorporating ethical considerations and safeguards

- Ensuring compatibility with existing legal and regulatory frameworks

- Providing guidance on licensing and intellectual property issues specific to AI

- Establishing mechanisms for community governance and contribution to AI projects

In conclusion, the need for an Open Source AI Definition is clear and pressing. As AI continues to play an increasingly central role in our societies and economies, having a robust framework for open source AI development is essential. The OSAID has the potential to shape the future of AI in a way that promotes innovation, collaboration, and ethical considerations. It is a crucial step towards ensuring that the benefits of AI are widely shared and that its development aligns with the values of transparency, accountability, and community-driven progress that have long been the hallmarks of the open source movement.

The Current Release Candidate OSAID: An Overview

The Open Source Initiative (OSI) has taken a significant step towards addressing the evolving landscape of artificial intelligence by proposing a release candidate for the Open Source AI Definition (OSAID). This initiative represents a crucial juncture in the convergence of open source principles and AI technologies, aiming to establish a framework that ensures transparency, accessibility, and ethical considerations in AI development.

The release candidate OSAID, as it currently stands, encompasses several key principles that reflect the OSI's commitment to fostering an open and collaborative AI ecosystem. These principles are designed to extend the ethos of open source software to the realm of AI, addressing the unique challenges and opportunities presented by this rapidly evolving field.

- Transparency of AI models and algorithms

- Reproducibility of AI systems

- Interoperability and portability

- Non-discrimination in access and use

- Ethical considerations in AI development and deployment

While these principles form a solid foundation for open source AI, it is crucial to note that the current release candidate OSAID has a significant omission: the explicit inclusion of data as a fundamental component of open source AI. This oversight presents a critical gap in the definition, given the inextricable link between AI systems and the data upon which they are built and trained.

The current OSAID release candidate represents a commendable step towards open source AI, but without explicit inclusion of data, it falls short of addressing the full spectrum of openness required in AI development.

The absence of data considerations in the OSAID has far-reaching implications. AI models, no matter how sophisticated or transparently designed, are fundamentally shaped by the data they are trained on. The quality, diversity, and ethical sourcing of this data are as crucial to the performance and fairness of AI systems as the algorithms themselves. By not addressing data openness, the current OSAID risks creating a definition of open source AI that is incomplete and potentially misleading.

Furthermore, the lack of data inclusion in the OSAID could lead to scenarios where AI systems are considered 'open source' despite being trained on proprietary or inaccessible datasets. This situation would severely limit the reproducibility and verifiability of AI systems, two core tenets of the open source philosophy. It could also perpetuate existing biases and inequalities in AI development, as access to high-quality, diverse datasets would remain a significant barrier to entry for many researchers and developers.

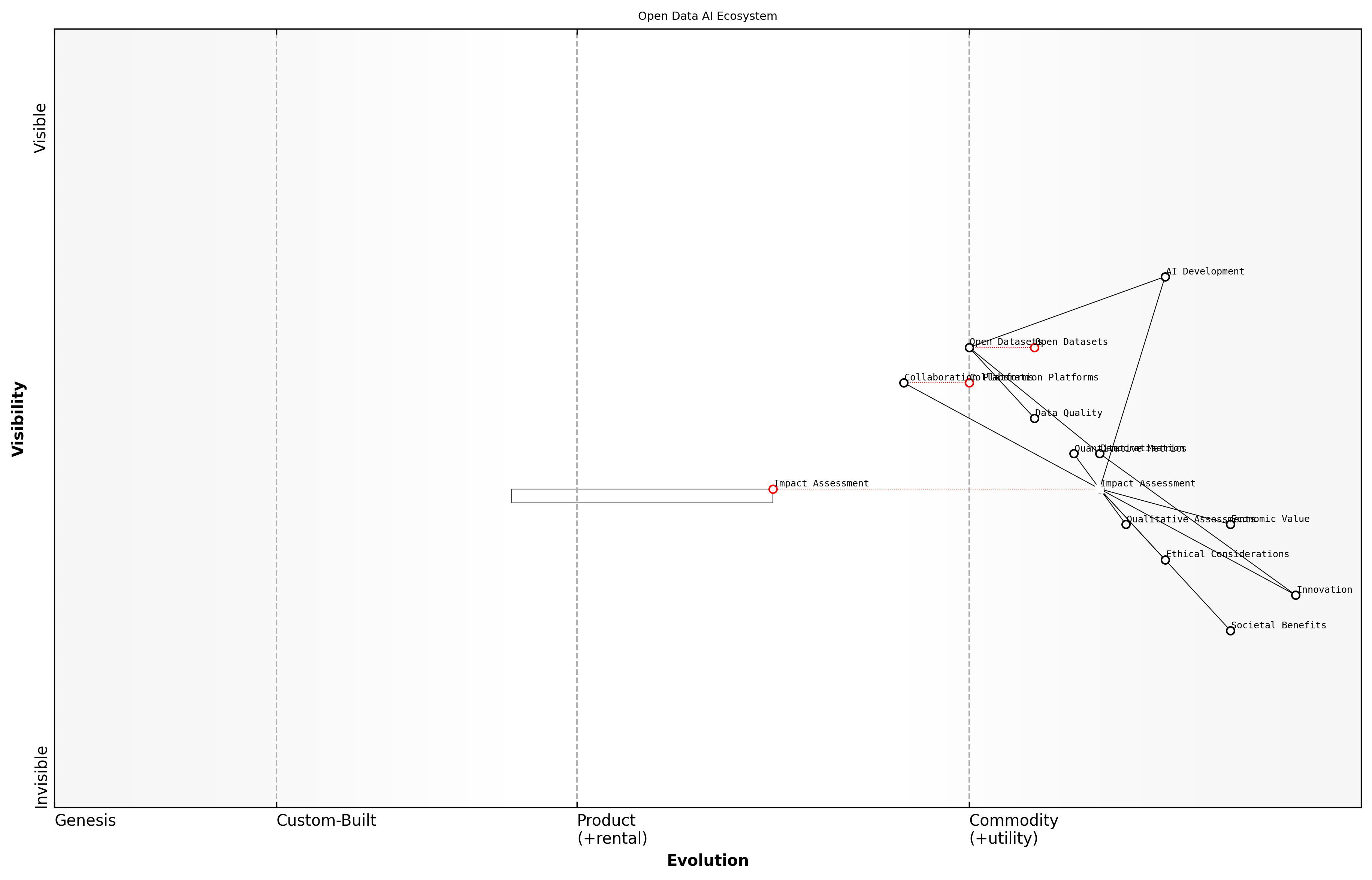

Wardley Map Assessment

The Open Source AI Ecosystem map reveals a mature technical foundation with emerging focus on ethical and transparent AI development. The strategic positioning of Ethical Considerations as a genesis component presents a significant opportunity for leadership and differentiation in the industry. To capitalise on this, organisations should prioritise the development of ethical AI frameworks and practices, while continuing to advance technical capabilities in areas such as interoperability and reproducibility. The strong open source community provides a solid base for collaborative innovation, but careful attention must be paid to potential bottlenecks in the Open Source AI Definition and Data components. By addressing these challenges and leveraging the identified opportunities, stakeholders can contribute to a more robust, ethical, and innovative AI ecosystem.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_05_english_The Current Release Candidate OSAID: An Overview.md)

The OSI's release candidate OSAID, while a step in the right direction, must evolve to explicitly include data as a core component of open source AI. This inclusion would ensure that the definition aligns with the realities of AI development and the principles of openness, transparency, and accessibility that the open source movement has long championed.

For open source AI to truly fulfil its potential, we must recognise that openness in algorithms without openness in data is like having a car without fuel – technically complete, but practically useless.

As the AI community and stakeholders provide feedback on this release candidate, it is imperative that the conversation focuses not only on refining the existing principles but also on addressing the critical gap of data inclusion. The future of open source AI depends on a holistic definition that recognises the symbiotic relationship between algorithms and data, ensuring that both are subject to the principles of openness and accessibility that have made open source software such a transformative force in the digital world.

Chapter 1: The Critical Role of Data in AI Development

Understanding AI's Dependence on Data

The Data-Algorithm Symbiosis in AI

In the realm of artificial intelligence, the relationship between data and algorithms is not merely complementary; it is fundamentally symbiotic. This intricate interplay forms the bedrock of AI systems, driving their capabilities, limitations, and potential for innovation. As we delve into the critical role of data in AI development, it is imperative to understand that algorithms, no matter how sophisticated, are essentially inert without the lifeblood of data flowing through them.

At its core, AI is a data-driven technology. The algorithms that power AI systems are designed to learn patterns, make predictions, and generate insights based on the data they are fed. This learning process, whether supervised, unsupervised, or reinforced, is entirely dependent on the quality, quantity, and diversity of the data available. It is this symbiotic relationship that enables AI to evolve from simple rule-based systems to complex, adaptive entities capable of tackling intricate real-world problems.

Data is the fuel that powers the AI engine. Without high-quality, diverse data, even the most advanced AI algorithms are rendered impotent.

The symbiosis between data and algorithms in AI manifests in several critical ways:

- Learning and Adaptation: AI algorithms use data to learn and adapt their behaviour. This process of continuous refinement based on new data inputs is what gives AI its power to improve over time.

- Pattern Recognition: The ability of AI to identify complex patterns within vast datasets is a direct result of the synergy between sophisticated algorithms and comprehensive data.

- Predictive Capabilities: The accuracy of AI predictions is intrinsically linked to the breadth and depth of historical data available for analysis.

- Feature Extraction: AI algorithms can automatically identify relevant features within datasets, but this capability is only as good as the data provided.

- Model Generalisation: The capacity of AI models to perform well on unseen data is heavily influenced by the diversity and representativeness of the training data.

The implications of this symbiosis extend far beyond technical considerations. They touch upon fundamental issues of AI ethics, fairness, and transparency. When we consider the inclusion of data in the Open Source AI Definition (OSAID), we must recognise that algorithms without open access to the data they were trained on are essentially black boxes. This opacity can lead to issues of bias, lack of accountability, and limited reproducibility – all of which run counter to the principles of open source.

Moreover, the data-algorithm symbiosis in AI underscores the need for a holistic approach to open source AI. Simply making algorithms available without corresponding datasets is akin to providing a car without fuel – the potential for movement exists, but the means to realise it are absent. This reality challenges us to rethink our approach to open source in the AI context, pushing us towards a more comprehensive definition that encompasses both the algorithmic and data components of AI systems.

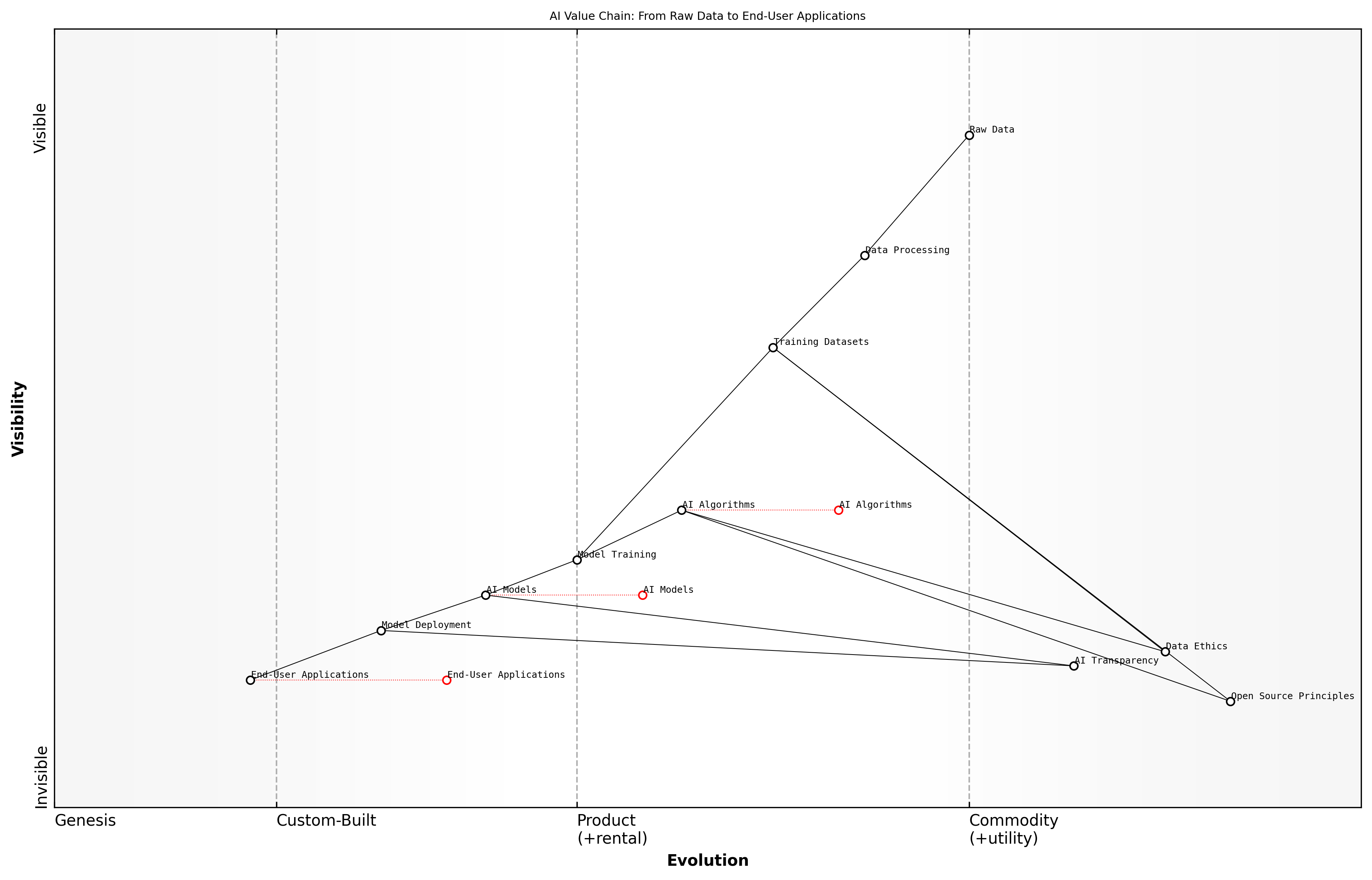

Wardley Map Assessment

This map reveals a maturing AI industry with increasing emphasis on ethical considerations and open source principles. To succeed, organisations must excel in data management and algorithm development while also prioritising transparency and ethical practices. The key to long-term success lies in balancing proprietary advantages with open collaboration and maintaining a strong focus on end-user needs and trust.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_06_english_The Data-Algorithm Symbiosis in AI.md)

As we progress through this chapter, we will explore in greater depth how this symbiotic relationship shapes the development, deployment, and governance of AI systems. We will examine the challenges and opportunities it presents for the open source community, and why a data-inclusive approach to OSAID is not just beneficial, but essential for the future of ethical, transparent, and innovative AI development.

The future of AI lies not just in better algorithms, but in the synergistic combination of advanced algorithms with rich, diverse, and ethically sourced datasets. This is the frontier that open source AI must boldly explore.

Quality, Quantity, and Diversity: The Data Trifecta

In the realm of artificial intelligence, data serves as the lifeblood that powers the cognitive capabilities of AI systems. The efficacy and reliability of AI models are intrinsically linked to the characteristics of the data used to train them. This critical relationship forms what I refer to as the 'Data Trifecta' – a triumvirate of quality, quantity, and diversity that collectively determine the robustness and applicability of AI systems.

Quality, the first pillar of the Data Trifecta, is paramount in ensuring the accuracy and reliability of AI models. High-quality data is characterised by its accuracy, completeness, consistency, and timeliness. In my experience advising government bodies on AI implementation, I've observed that the use of poor-quality data can lead to erroneous predictions and decisions, potentially resulting in significant societal and economic consequences.

The quality of your data determines the upper limit of your AI's performance. No amount of algorithmic sophistication can compensate for fundamentally flawed or inaccurate data.

Quantity, the second pillar, is equally crucial. Large datasets provide AI models with more examples to learn from, enabling them to identify patterns and relationships with greater precision. However, it's important to note that quantity alone is not sufficient; it must be balanced with quality and diversity. In my work with public sector organisations, I've seen how the pursuit of large datasets without proper quality controls can lead to the amplification of biases and errors.

- Increased statistical power and reduced overfitting

- Better generalisation to unseen data

- Improved handling of edge cases and rare events

- Enhanced ability to learn complex patterns and relationships

Diversity, the third pillar of the Data Trifecta, is perhaps the most overlooked yet critically important aspect. A diverse dataset ensures that AI models can generalise well across different scenarios and populations. It helps mitigate biases and ensures fairness in AI decision-making processes. In my consultancy work, I've emphasised the importance of data diversity to ensure AI systems serve all segments of society equitably.

Diversity in data is not just about ethical considerations; it's about building AI systems that are truly intelligent and adaptable to the complexities of our world.

The interplay between these three pillars is complex and nuanced. For instance, increasing the quantity of data without maintaining quality can dilute the overall effectiveness of the dataset. Similarly, a high-quality but homogeneous dataset may lead to AI systems that perform exceptionally well in limited contexts but fail when faced with diverse real-world scenarios.

In the context of open source AI, the Data Trifecta takes on even greater significance. Open source initiatives have the potential to democratise access to high-quality, large-scale, and diverse datasets. This is particularly crucial for researchers, smaller organisations, and public sector entities that may not have the resources to compile comprehensive datasets independently.

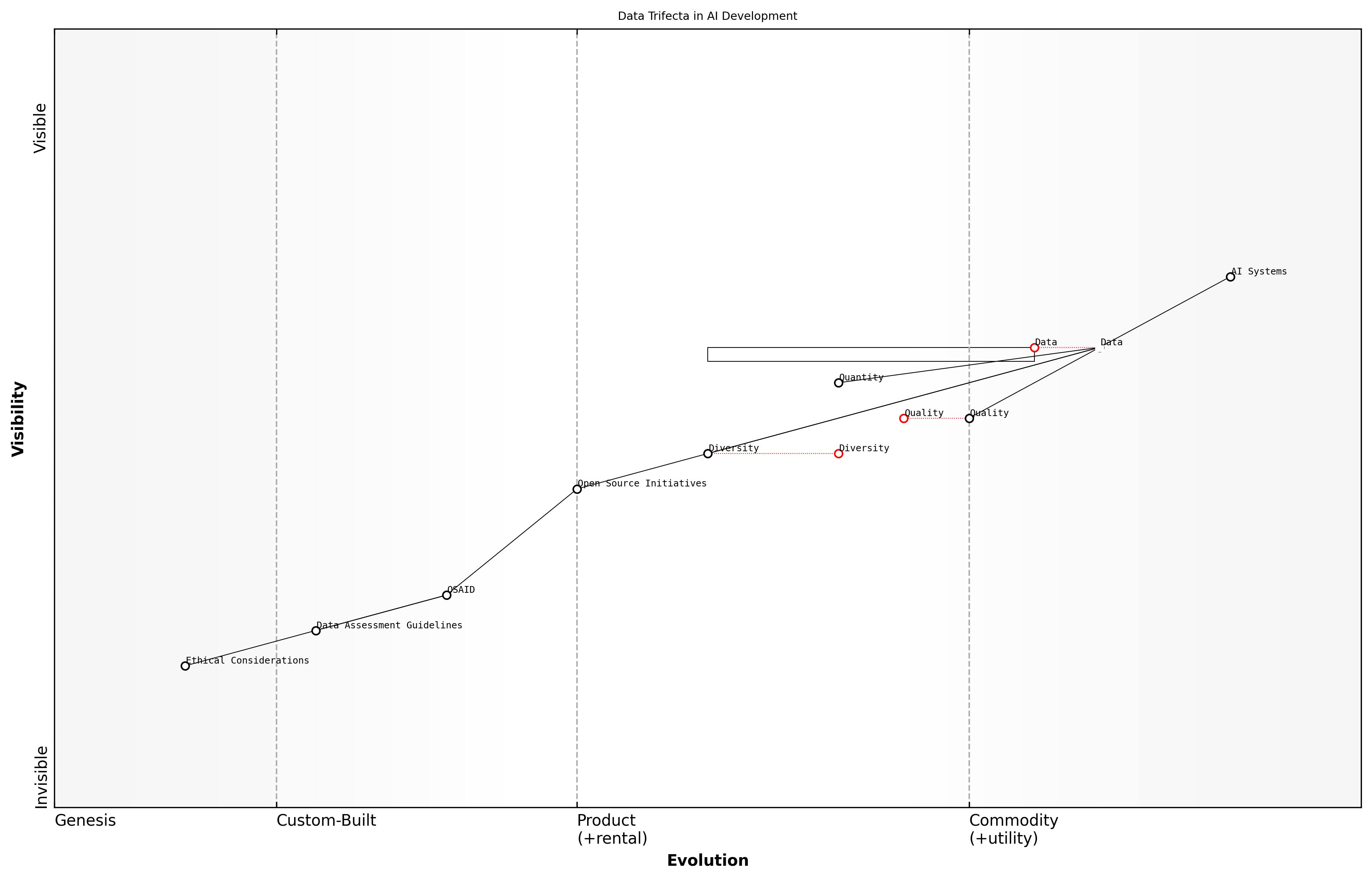

Wardley Map Assessment

This Wardley Map reveals a strategic landscape where the success of AI systems is deeply tied to the quality, quantity, and diversity of data, underpinned by ethical considerations and open source initiatives. The key strategic imperative is to evolve capabilities in data diversity and ethical AI development, while leveraging open source collaboration to accelerate progress and ensure broad societal benefit. Organisations that can effectively balance these elements while driving innovation in AI systems will be well-positioned for future success in this rapidly evolving field.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_07_english_Quality, Quantity, and Diversity: The Data Trifecta.md)

The Open Source Initiative's (OSI) release candidate for the Open Source AI Definition (OSAID) must explicitly address the Data Trifecta to ensure the development of robust, fair, and widely applicable AI systems. By incorporating guidelines for data quality assessment, quantity benchmarks, and diversity requirements, the OSAID can set a new standard for responsible and effective AI development.

As we move forward in the era of data-driven AI, it is imperative that we recognise the Data Trifecta not just as a theoretical concept, but as a practical framework for guiding the development and deployment of AI systems. By embracing this approach within the open source community, we can foster an ecosystem that produces AI technologies that are not only powerful and efficient but also ethical, inclusive, and truly beneficial to society as a whole.

The Limitations of AI Without Open Data

As an expert in the field of AI and open source initiatives, I can unequivocally state that the limitations of AI systems without access to open data are profound and far-reaching. The absence of open data in AI development creates a cascade of challenges that significantly hamper the potential of AI technologies and their ability to serve society at large.

One of the most critical limitations of AI without open data is the inherent bias and lack of diversity in training datasets. Proprietary datasets, often collected and curated by a small subset of organisations or individuals, inevitably reflect the biases and limitations of their creators. This narrow perspective can lead to AI systems that perpetuate and even amplify existing societal biases, particularly in sensitive areas such as facial recognition, natural language processing, and decision-making algorithms.

The quality of an AI system is only as good as the data it's trained on. Without open, diverse datasets, we risk creating AI that reflects a limited worldview, potentially exacerbating societal inequalities rather than solving them.

Another significant limitation is the barrier to innovation and scientific progress. When data is not openly available, it creates a monopolistic environment where only a select few organisations with access to large proprietary datasets can effectively develop and improve AI systems. This concentration of power not only stifles competition but also slows down the overall pace of AI advancement. Open data, on the other hand, allows for collaborative efforts, peer review, and the rapid iteration of ideas that are crucial for pushing the boundaries of AI capabilities.

- Reduced ability to validate and reproduce AI research findings

- Limited cross-domain applications due to data silos

- Increased risk of overfitting and poor generalisation in AI models

- Difficulty in addressing ethical concerns and building public trust

- Barriers to entry for smaller organisations and researchers in AI development

The lack of open data also poses significant challenges in terms of transparency and accountability. Without access to the underlying data, it becomes nearly impossible for external parties to audit AI systems for fairness, safety, and compliance with ethical standards. This opacity can lead to a lack of trust in AI technologies, particularly in high-stakes applications such as healthcare, criminal justice, and financial services.

Furthermore, the absence of open data severely limits the ability to address global challenges that require collaborative efforts. Climate change, pandemic response, and sustainable development are just a few examples of areas where AI could make significant contributions, but only if researchers and developers worldwide have access to comprehensive, diverse datasets.

Open data in AI is not just about technological advancement; it's about creating a more equitable, transparent, and collaborative approach to solving some of humanity's most pressing challenges.

It's also worth noting that the limitations of AI without open data extend to the realm of education and skill development. Without access to real-world, diverse datasets, aspiring AI practitioners and researchers are limited in their ability to learn, experiment, and develop the skills necessary to advance the field. This creates a talent bottleneck that further concentrates AI expertise within a small number of well-resourced organisations.

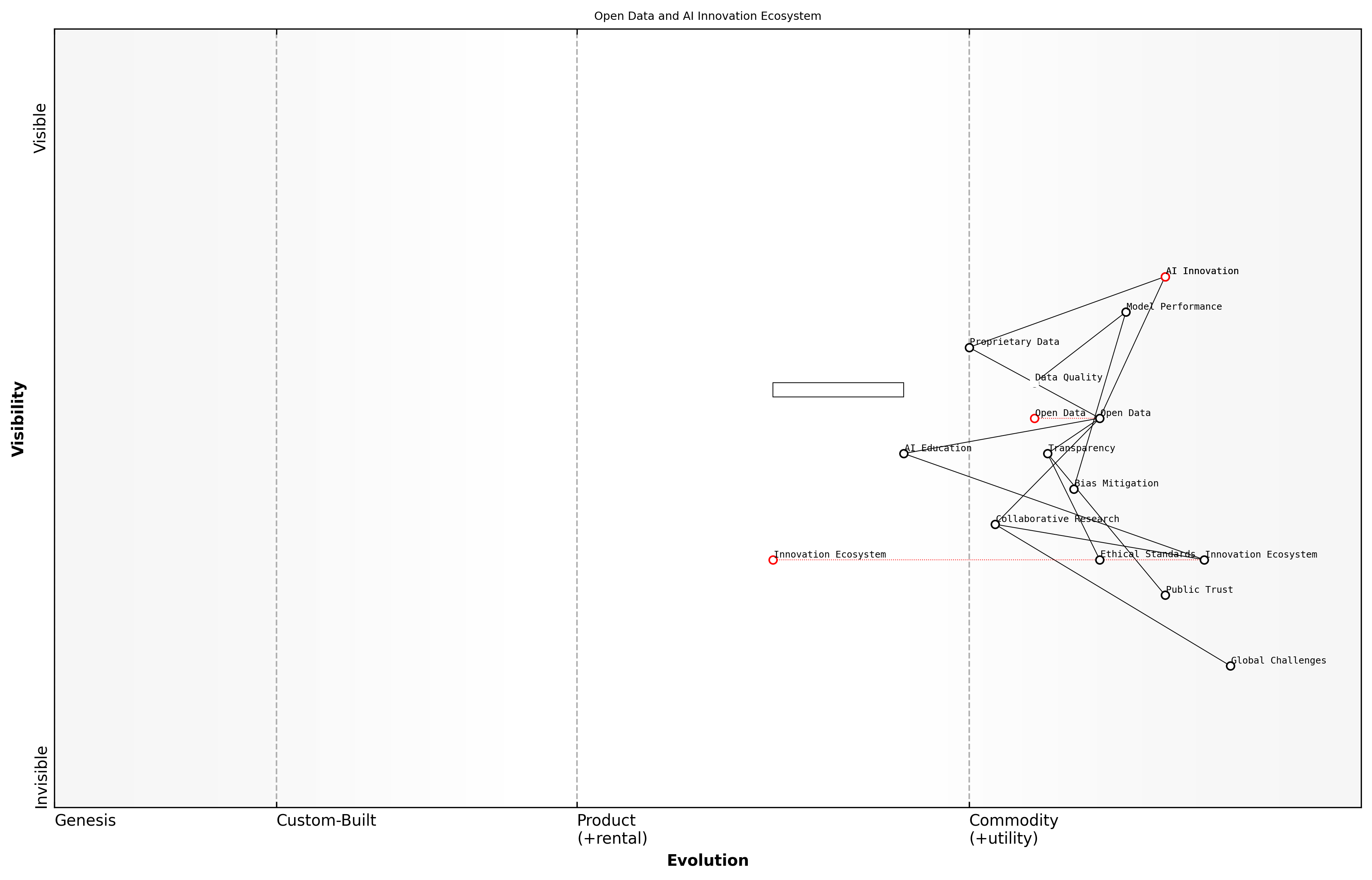

Wardley Map Assessment

This Wardley Map reveals a forward-thinking AI innovation ecosystem that prioritises openness, ethics, and societal impact. The strategic focus on Open Data and Transparency positions the ecosystem well for sustainable growth and public acceptance. However, addressing capability gaps in AI Education and Data Quality is crucial for realising the full potential of this ecosystem. The integration of Ethical Standards and focus on Bias Mitigation provide a strong foundation for building Public Trust. To maintain a competitive edge, organisations within this ecosystem should invest in collaborative research, contribute to open data initiatives, and continuously innovate in AI technologies while adhering to ethical principles. The ecosystem's success will largely depend on its ability to balance rapid innovation with responsible development practices that address Global Challenges and benefit Society as a whole.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_08_english_The Limitations of AI Without Open Data.md)

In conclusion, the limitations of AI without open data are multifaceted and deeply impactful. They range from technical constraints on model performance and generalisability to broader societal issues of fairness, transparency, and equitable access to AI technologies. As we continue to develop and refine the Open Source AI Definition (OSAID), it is imperative that we recognise data as an integral component of AI systems, not just as a separate entity. Only by embracing open data practices can we unlock the full potential of AI and ensure its benefits are widely and fairly distributed across society.

The Evolution of Open Source in AI

From Open Source Software to Open Data

The evolution of open source in artificial intelligence (AI) represents a paradigm shift that extends far beyond the realm of software development. As an expert who has witnessed and contributed to this transformation, I can attest to the profound impact this evolution has had on the AI landscape. The journey from open source software to open data is not merely a technological progression; it's a fundamental reimagining of how we approach AI development and deployment.

The open source movement, which began in the software domain, laid the groundwork for collaborative development and knowledge sharing. This ethos of openness and transparency quickly found resonance in the AI community, where the complexity and scale of challenges demanded collective effort. However, as AI systems became more sophisticated, it became increasingly clear that software alone was insufficient. The critical role of data in training and refining AI models emerged as a central concern.

The shift from open source software to open data in AI is not just an evolution, but a revolution. It's redefining the very foundations of how we create, validate, and deploy AI systems.

This transition to open data in AI has been driven by several key factors:

- Recognition of data as a critical resource: As AI models grew in complexity, the quality and quantity of training data became paramount. Open source software without access to robust datasets was akin to having a powerful engine without fuel.

- Democratisation of AI development: Open data initiatives have lowered the barriers to entry for AI research and development, enabling a broader range of participants to contribute to and benefit from AI advancements.

- Addressing bias and fairness: The availability of diverse, open datasets has become crucial in tackling issues of bias and ensuring fairness in AI systems, a concern that extends beyond the capabilities of software alone.

- Reproducibility and transparency: Open data practices have enhanced the ability to reproduce and validate AI research, fostering greater trust and accountability in the field.

- Collaborative problem-solving: Open data has facilitated unprecedented collaboration on complex AI challenges, from healthcare to climate change, enabling researchers and practitioners to pool resources and insights.

The evolution towards open data in AI has not been without challenges. Issues of data privacy, intellectual property rights, and the potential for misuse have necessitated careful consideration and the development of new ethical frameworks and governance models. However, the benefits of this shift have been undeniable, catalysing innovation and accelerating the pace of AI advancement.

As we stand at this juncture, it's clear that the future of open source AI is inextricably linked to open data. The Open Source Initiative's (OSI) release candidate for the Open Source AI Definition (OSAID) must reflect this reality. By including data as a fundamental component, the OSAID can ensure that it remains relevant and impactful in shaping the future of AI development.

Open source AI without open data is like a library without books. It's the synergy between open software and open data that will drive the next wave of AI innovation and ensure its benefits are widely accessible and ethically grounded.

The journey from open source software to open data in AI is not just a historical progression; it's an ongoing process that continues to shape the landscape of AI research and application. As we move forward, the integration of open data principles into the fabric of open source AI will be crucial in addressing the complex challenges and opportunities that lie ahead.

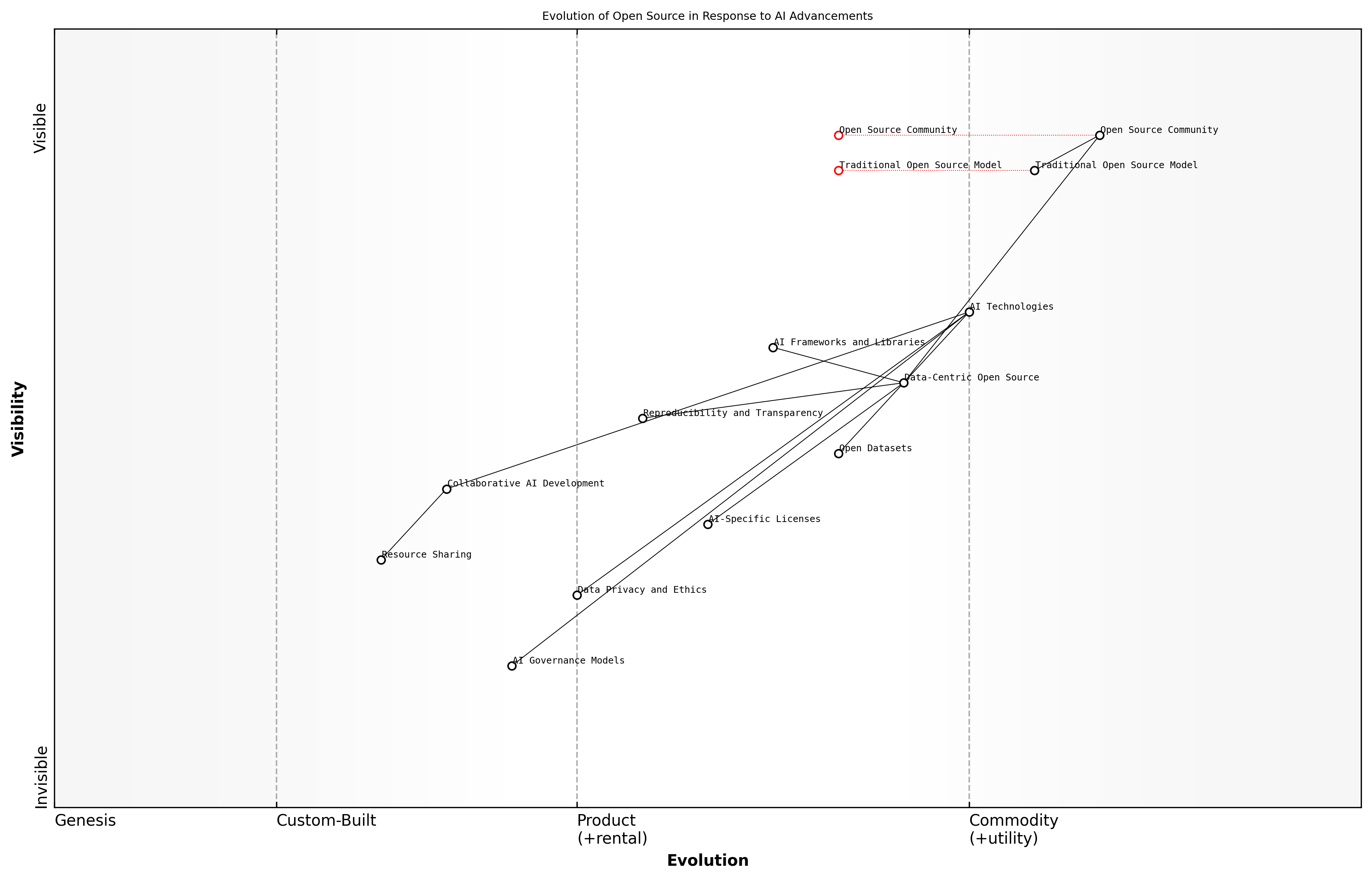

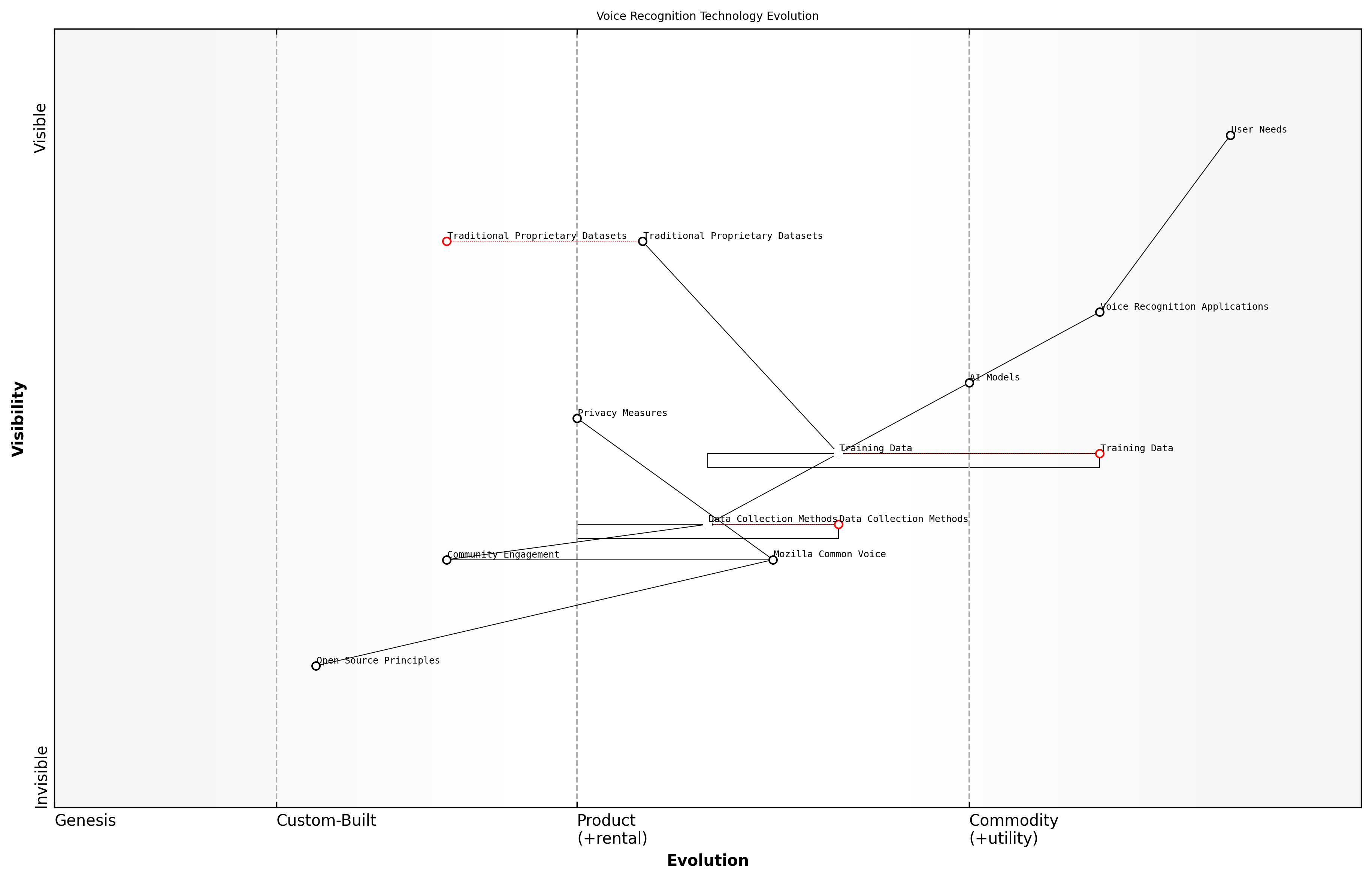

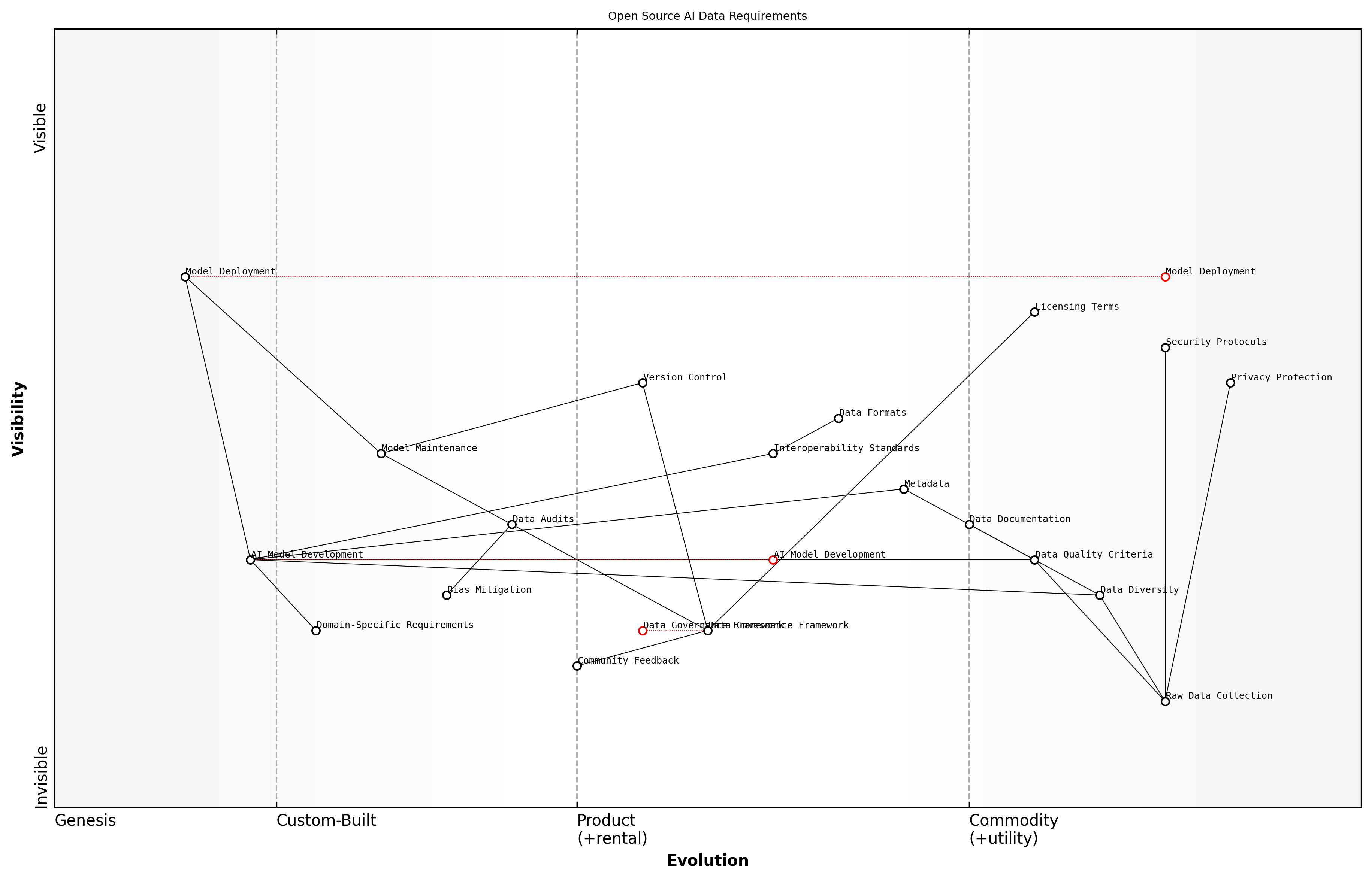

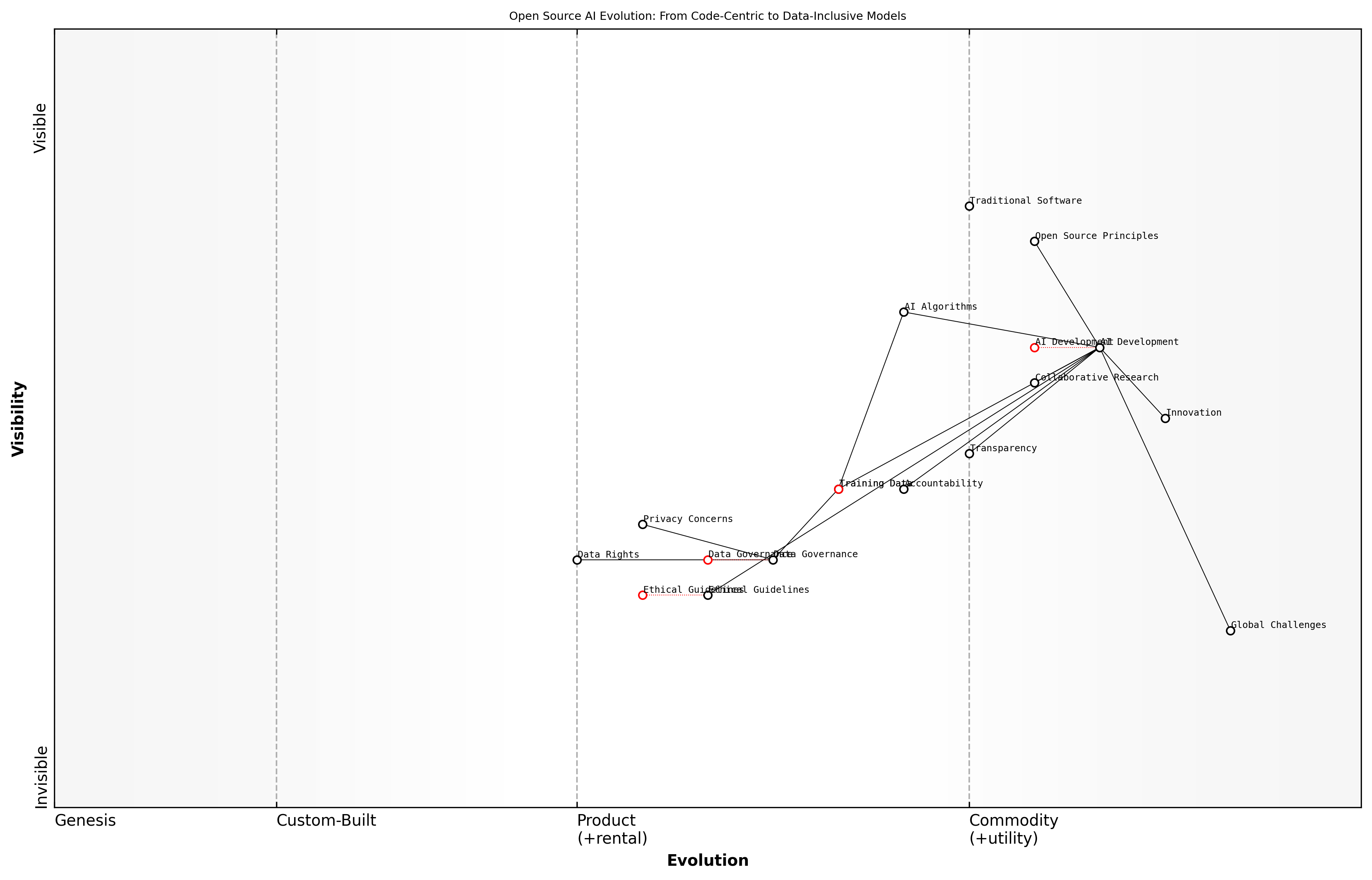

Wardley Map Assessment

This Wardley Map reveals a strategic shift in AI development from a focus on open source software to the critical role of open data. It highlights the need for balancing rapid technological advancement with ethical considerations and governance. The key strategic imperatives are to evolve data management practices, accelerate the development of ethical frameworks and governance models, and foster a collaborative, transparent AI ecosystem. Success in this landscape will require a multifaceted approach that addresses technical innovation, ethical responsibility, and industry-wide cooperation.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_09_english_From Open Source Software to Open Data.md)

In conclusion, the evolution from open source software to open data in AI represents a fundamental shift in how we approach the development and deployment of AI systems. This transition underscores the critical importance of including data considerations in the OSI's Open Source AI Definition. By embracing this evolution, we can ensure that the principles of openness, collaboration, and innovation that have driven the open source movement continue to shape the future of AI in a way that is inclusive, ethical, and transformative.

The Gap in Current Open Source AI Definitions

As we delve into the evolution of open source in AI, it becomes increasingly apparent that there is a significant gap in current open source AI definitions. This gap, centred around the inclusion of data, represents a critical oversight that threatens to undermine the very principles of openness and collaboration that the open source movement was built upon. As an expert who has advised numerous government bodies and technology leaders on AI policy, I can attest to the far-reaching implications of this definitional shortcoming.

The current landscape of open source AI definitions primarily focuses on the accessibility and transparency of algorithms and models. While this is undoubtedly crucial, it overlooks a fundamental component of AI systems: the data upon which these models are trained and operate. This oversight creates a paradoxical situation where an AI system can be considered 'open source' even if its core training data remains proprietary or inaccessible.

An open source AI without open data is like a car without fuel – technically complete, but practically useless.

This gap in definition has several profound implications:

- Limited Reproducibility: Without access to the training data, it becomes virtually impossible to reproduce the results of an AI system, a cornerstone of scientific and technological progress.

- Restricted Innovation: The inability to access or understand the underlying data hampers the ability of researchers and developers to build upon existing AI models, potentially stifling innovation.

- Opacity in Decision-Making: When the data driving AI decisions is hidden, it becomes challenging to audit these systems for bias, fairness, and ethical considerations.

- Perpetuation of Data Monopolies: By not mandating data openness, current definitions inadvertently support the concentration of valuable data in the hands of a few large corporations or institutions.

The root of this definitional gap can be traced back to the origins of the open source movement in software development. Traditional software often operated on static, predefined datasets, making the source code the primary focus of openness. However, AI systems are fundamentally different. They are dynamic, learning entities whose behaviour is as much a product of their training data as their algorithmic structure.

This shift in paradigm necessitates a corresponding evolution in our understanding and definition of 'open source' in the context of AI. We must expand our conception to encompass not just the code that powers AI systems, but also the data that shapes their understanding and decision-making processes.

In the realm of AI, data is not just an input – it's an integral part of the system's architecture and functionality.

Addressing this gap requires a multifaceted approach. It involves not only updating formal definitions but also fostering a cultural shift in how we perceive and value data in the AI ecosystem. This shift must be reflected in policy, in industry practices, and in the ethos of the AI research community.

From my experience working with government agencies on AI initiatives, I've observed firsthand the challenges that arise when data is not given equal consideration to algorithms in open source projects. Public sector AI initiatives, in particular, stand to benefit enormously from a more inclusive definition that mandates data openness, as it would enhance transparency, facilitate cross-agency collaboration, and build public trust.

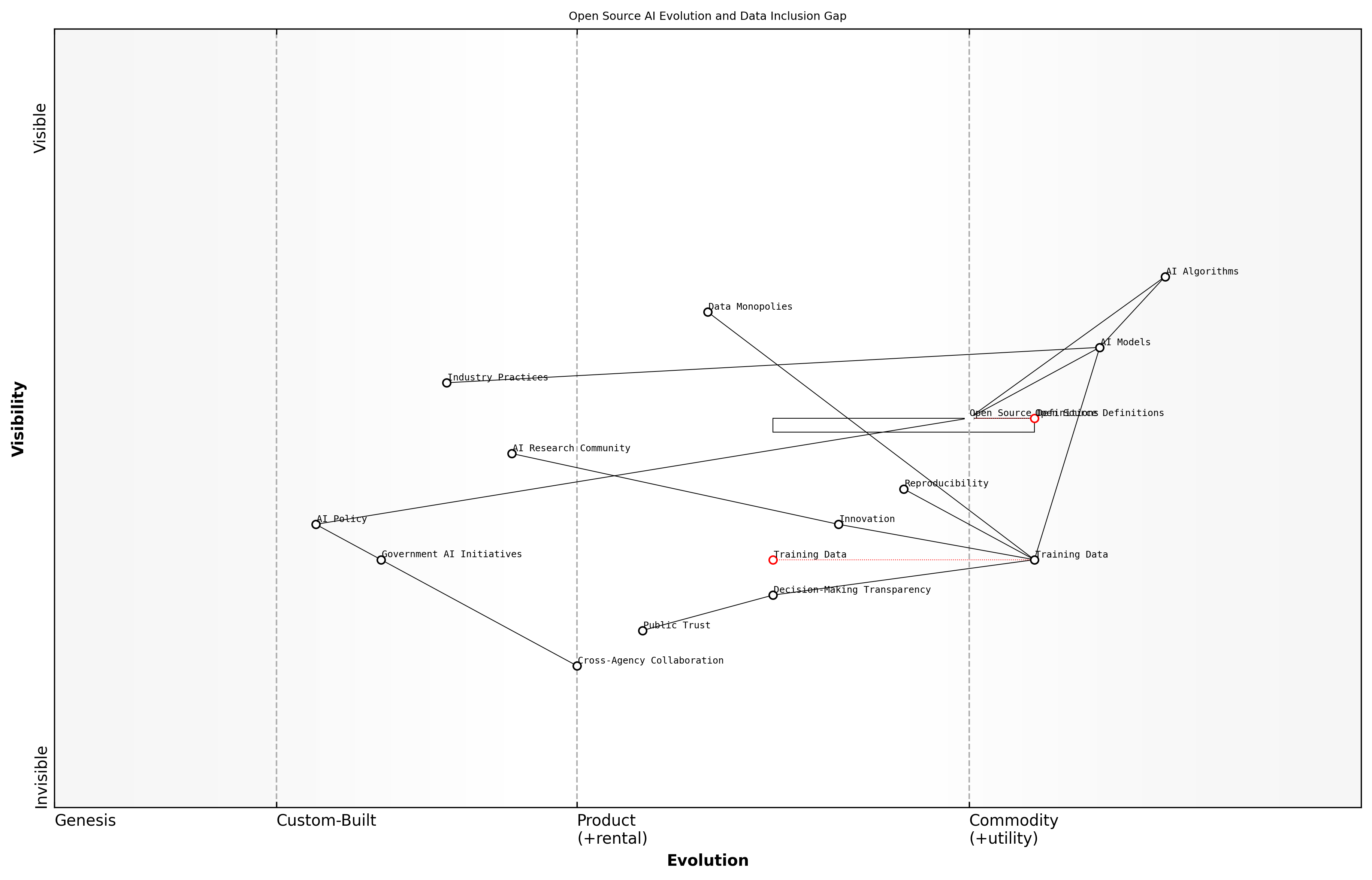

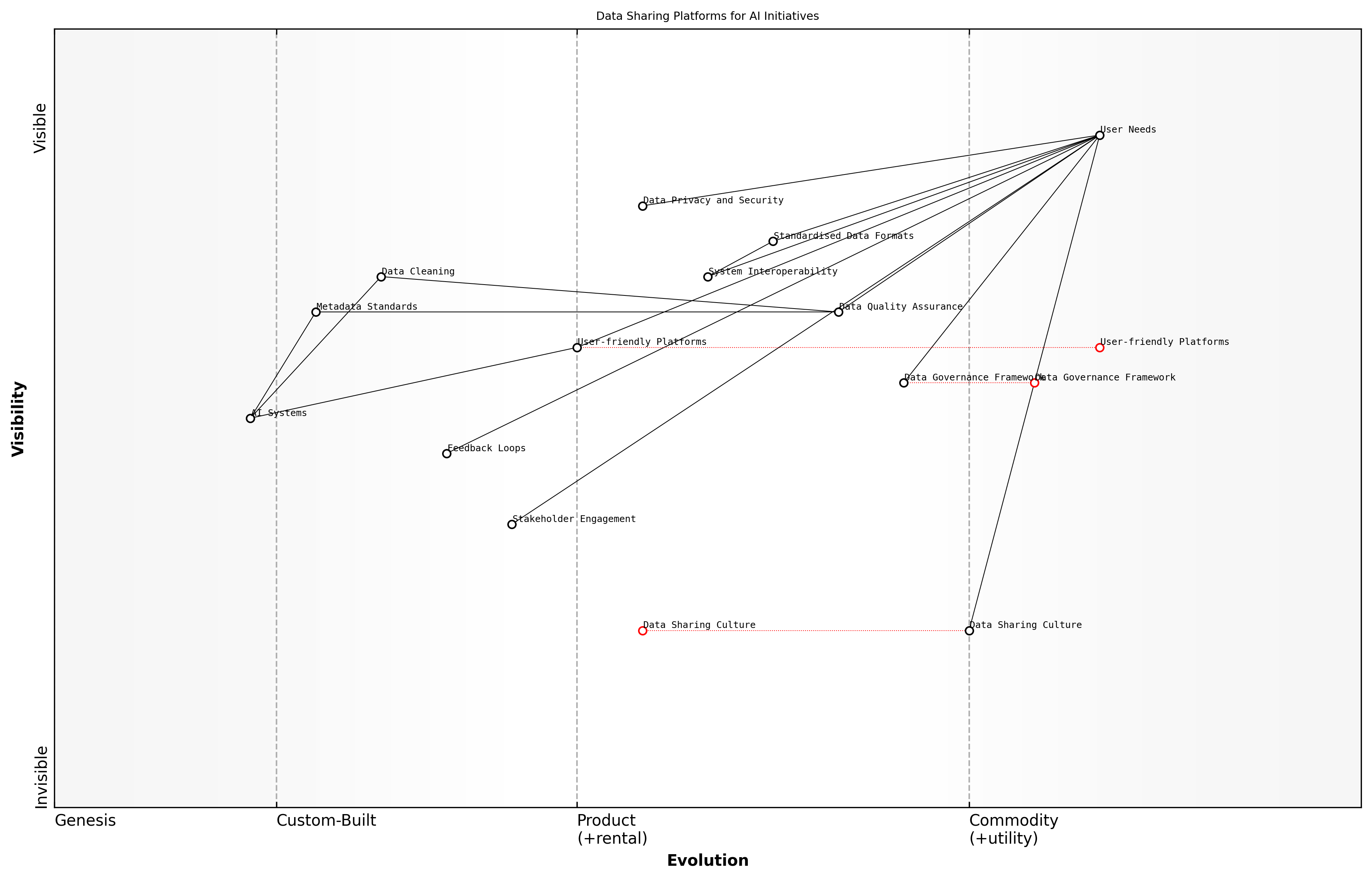

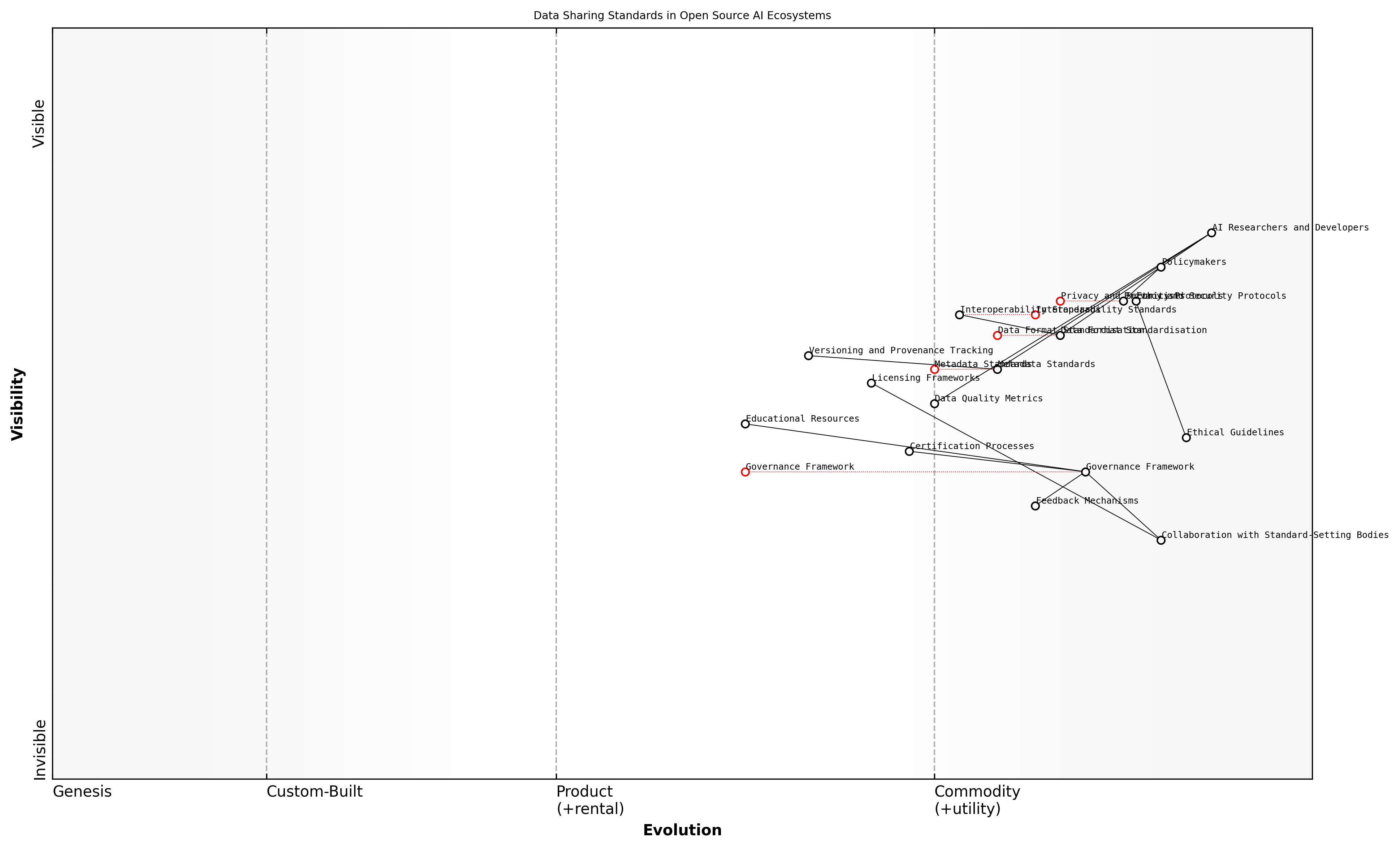

Wardley Map Assessment

This map reveals a strategic imperative to address the gap in current open source AI definitions, particularly concerning data inclusivity. The evolution of open source definitions and training data practices will be crucial in shaping a more transparent, innovative, and trustworthy AI ecosystem. Key actions should focus on standardising open source practices, ensuring data diversity, and fostering collaboration across sectors to counter potential data monopolies and build public trust in AI systems.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_10_english_The Gap in Current Open Source AI Definitions.md)

As we move forward, it is imperative that we bridge this gap in current open source AI definitions. By explicitly including data as a core component of what constitutes 'open source' in AI, we can ensure that the principles of openness, collaboration, and innovation that have driven the success of open source software are fully realised in the AI domain. This inclusive approach will not only accelerate AI development but also promote a more equitable and transparent AI ecosystem that benefits society as a whole.

Why Data Inclusion is Non-Negotiable for OSAID

As we delve into the critical importance of data inclusion in the Open Source AI Definition (OSAID), it becomes evident that this aspect is not merely a desirable feature but an absolute necessity. The evolution of open source in AI has brought us to a pivotal juncture where the traditional focus on algorithms and code is no longer sufficient to ensure true openness and accessibility in AI development.

The non-negotiable nature of data inclusion in OSAID stems from several fundamental factors that are intrinsic to the nature of AI and its development process:

- Data as the Lifeblood of AI: AI systems are fundamentally data-driven. Without access to high-quality, diverse datasets, even the most sophisticated algorithms remain theoretical constructs with limited practical application.

- Reproducibility and Verification: Open source principles emphasise the ability to reproduce and verify results. In AI, this is impossible without access to the training data used to develop models.

- Bias Mitigation and Fairness: Scrutiny of training data is crucial for identifying and mitigating biases in AI systems, a key ethical consideration that cannot be addressed through code alone.

- Democratisation of AI Development: True democratisation of AI requires not just open algorithms but also open data, enabling a wider range of participants to contribute to and benefit from AI advancements.

- Transparency and Accountability: As AI systems increasingly impact critical aspects of society, transparency in both algorithms and data becomes essential for ensuring accountability and building public trust.

Open source AI without open data is like a car without fuel – it may look impressive, but it won't take us anywhere.

The current landscape of AI development often sees a disconnect between open source algorithms and the proprietary datasets used to train them. This creates a significant barrier to entry for many potential contributors and limits the ability of the wider community to fully understand, validate, and improve upon existing AI models.

Moreover, the exclusion of data from open source AI definitions perpetuates a power imbalance in the AI ecosystem. Large corporations and institutions with access to vast proprietary datasets gain an insurmountable advantage, stifling innovation and diversity in AI development. This runs counter to the core principles of open source, which aim to level the playing field and foster collaborative innovation.

Wardley Map Assessment

This map represents a forward-thinking approach to open source AI development, recognising the critical importance of ethical considerations and governance alongside technical excellence. The strategic focus should be on accelerating the evolution of components like AI Governance and Data Transparency while maintaining leadership in AI Algorithms and Training Data. This balanced approach will likely lead to more sustainable, societally beneficial AI development and provide a strong competitive advantage in an increasingly ethics-conscious market.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_11_english_Why Data Inclusion is Non-Negotiable for OSAID.md)

The inclusion of data in OSAID is also crucial for addressing the growing concerns around AI ethics and governance. Without transparent access to training data, it becomes nearly impossible to conduct thorough audits of AI systems, assess their fairness, or identify potential biases. This lack of transparency can lead to the deployment of AI systems that perpetuate or even exacerbate existing societal inequalities.

In the realm of AI, data transparency is not just about openness; it's about responsibility, accountability, and the ethical development of technologies that will shape our future.

Furthermore, the rapid advancement of AI technologies, particularly in areas such as deep learning and natural language processing, has heightened the importance of data quality and diversity. These sophisticated models require vast amounts of high-quality, diverse data to achieve optimal performance and generalisability. By including data requirements in OSAID, we can foster an ecosystem that prioritises the creation and sharing of such datasets, ultimately leading to more robust and reliable AI systems.

It's important to acknowledge that including data in OSAID does come with challenges, particularly around privacy, intellectual property, and data protection regulations. However, these challenges are not insurmountable and should be viewed as opportunities to develop innovative solutions that balance openness with necessary protections. The AI community has already begun to explore approaches such as federated learning, differential privacy, and synthetic data generation, which could provide pathways to data inclusion while addressing these concerns.

- Develop clear guidelines for data sharing that respect privacy and intellectual property rights

- Encourage the creation of open, high-quality datasets for AI research and development

- Promote the use of privacy-preserving technologies in data sharing for AI

- Establish standards for data documentation and provenance in open source AI projects

- Foster collaboration between legal experts, ethicists, and AI practitioners to address data-related challenges

In conclusion, the inclusion of data in OSAID is non-negotiable because it is fundamental to realising the full potential of open source AI. It is essential for ensuring transparency, fairness, and accountability in AI systems, fostering innovation and collaboration, and ultimately creating AI technologies that are truly open, accessible, and beneficial to society as a whole. As we move forward, it is imperative that the AI community, policymakers, and other stakeholders work together to overcome the challenges and establish a robust framework for data inclusion in open source AI initiatives.

Chapter 2: Ethical Implications of Data Access and Sharing in AI

The Ethics of AI Data Practices

Privacy Concerns in Data Sharing

As we delve into the ethics of AI data practices, privacy concerns in data sharing emerge as a paramount issue that demands our utmost attention. The exponential growth of AI technologies, coupled with the increasing volume and granularity of data collected, has amplified the potential for privacy breaches and misuse of personal information. This subsection explores the multifaceted nature of privacy concerns in the context of open source AI and data sharing, drawing from my extensive experience advising government bodies and technology leaders on these critical matters.

At the heart of the privacy debate lies the tension between the need for vast amounts of data to train and improve AI systems and the fundamental right of individuals to maintain control over their personal information. This dichotomy is particularly pronounced in the realm of open source AI, where the ethos of transparency and collaboration often collides with the imperative to protect sensitive data.

The challenge we face is not whether to share data, but how to share it responsibly while safeguarding individual privacy. This is the cornerstone of ethical AI development in the open source community.

One of the primary concerns in data sharing for AI development is the risk of re-identification. Even when data is anonymised, the sophisticated nature of AI algorithms can often piece together disparate data points to reconstruct individual identities. This risk is exacerbated in the open source context, where data may be widely distributed and combined with other datasets in unforeseen ways.

- Inadvertent disclosure of sensitive personal information

- Potential for data to be used for purposes beyond its original intent

- Challenges in obtaining meaningful consent for data use in AI training

- Difficulty in ensuring data security across diverse open source platforms

Another critical aspect of privacy in data sharing is the concept of data sovereignty. This is particularly relevant in the context of cross-border data flows, where different jurisdictions may have varying levels of data protection regulations. As an expert who has advised on international data governance frameworks, I can attest to the complexity of navigating these waters, especially when it comes to open source AI initiatives that often transcend national boundaries.

The General Data Protection Regulation (GDPR) in the European Union has set a high bar for data protection, influencing global standards. However, its implementation in the context of open source AI development presents unique challenges. For instance, the right to erasure ('right to be forgotten') can be particularly problematic when data has been widely distributed and incorporated into AI models.

In the age of AI, privacy is not just about protecting data; it's about preserving human autonomy and dignity in the face of increasingly powerful predictive technologies.

To address these privacy concerns, the open source AI community must adopt a proactive approach to privacy protection. This includes implementing privacy-by-design principles, developing robust anonymisation techniques, and creating transparent data governance frameworks. My work with various government agencies has shown that successful privacy protection in open source AI requires a combination of technical solutions, policy frameworks, and cultural shifts within the developer community.

- Implement differential privacy techniques to add noise to datasets

- Develop federated learning approaches to keep data localised

- Create clear data usage agreements and consent mechanisms

- Establish ethical review boards for open source AI projects

- Promote education and awareness about privacy risks in AI development

It's crucial to recognise that privacy concerns in data sharing are not static; they evolve with technological advancements and societal expectations. As such, the Open Source Initiative's AI Definition must be flexible enough to accommodate these changing dynamics while providing a robust framework for privacy protection.

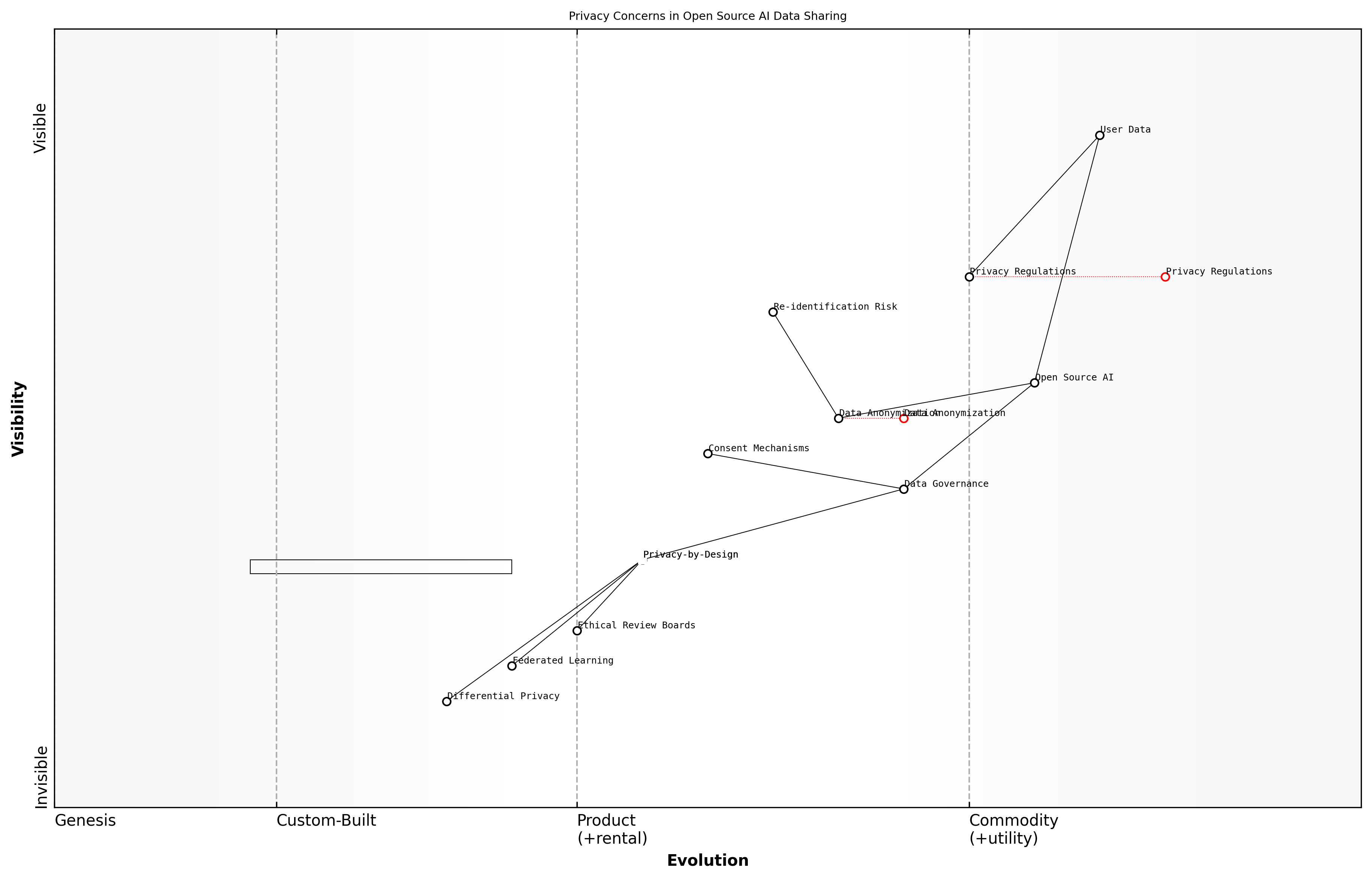

Wardley Map Assessment

The map reveals a strategic imperative to balance open source AI innovation with robust privacy protections. Organisations must evolve from traditional anonymisation techniques to more advanced, integrated privacy-preserving approaches. Success will depend on proactively adopting Privacy-by-Design principles, investing in emerging technologies like Federated Learning and Differential Privacy, and fostering a culture of ethical AI development. The future competitive advantage lies in the ability to innovate rapidly while maintaining the highest standards of data privacy and user trust.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_12_english_Privacy Concerns in Data Sharing.md)

In conclusion, addressing privacy concerns in data sharing is not just an ethical imperative but a practical necessity for the sustainable development of open source AI. By integrating strong privacy protections into the fabric of open source AI initiatives, we can foster trust, encourage participation, and ultimately realise the full potential of collaborative AI development. As we move forward, it is incumbent upon us as leaders in this field to champion privacy-preserving technologies and practices, ensuring that the benefits of open source AI are realised without compromising individual rights and freedoms.

Bias and Fairness in AI Datasets

As we delve deeper into the ethical implications of data access and sharing in AI, one of the most critical and complex issues we encounter is the presence of bias in AI datasets and the subsequent challenge of ensuring fairness in AI systems. This topic is not merely an academic concern but a pressing real-world issue with far-reaching consequences for individuals, communities, and society at large.

Bias in AI datasets is a multifaceted problem that stems from various sources, including historical inequalities, underrepresentation of certain groups, and flawed data collection methodologies. These biases, when embedded in AI systems, can lead to discriminatory outcomes, perpetuate existing societal inequalities, and even create new forms of digital discrimination. As a seasoned expert who has advised numerous government bodies on AI ethics, I can attest to the gravity of this issue and its potential to undermine public trust in AI technologies.

The algorithms we create are only as good as the data we feed them. If that data is biased, incomplete, or unrepresentative, we risk creating AI systems that perpetuate and amplify societal inequalities rather than mitigating them.

One of the primary challenges in addressing bias in AI datasets is the inherent complexity of identifying and quantifying bias. Bias can manifest in subtle ways, often reflecting deep-seated societal prejudices that may not be immediately apparent. For instance, in my work with a large public sector organisation, we uncovered bias in a dataset used for automated decision-making in social services. The dataset, while seemingly comprehensive, significantly underrepresented certain ethnic minorities and socioeconomic groups, leading to potentially unfair outcomes in resource allocation.

- Demographic bias: Underrepresentation or misrepresentation of certain groups based on race, gender, age, or other protected characteristics.

- Sampling bias: Flaws in data collection methods that result in non-representative samples.

- Historical bias: Perpetuation of past discriminatory practices through historical data.

- Measurement bias: Inconsistencies or errors in how data is measured or recorded across different groups.

- Aggregation bias: Loss of important nuances when data is combined or generalised.

Addressing these biases requires a multifaceted approach that combines technical solutions with ethical considerations and policy frameworks. From a technical standpoint, we need robust methodologies for bias detection and mitigation. This includes advanced statistical techniques, fairness-aware machine learning algorithms, and comprehensive data auditing processes. However, technical solutions alone are insufficient.

Equally crucial is the need for diverse and inclusive teams in AI development. My experience has consistently shown that diverse teams are better equipped to identify potential biases and develop more equitable solutions. This diversity should extend beyond just the development team to include stakeholders, domain experts, and representatives from potentially affected communities.

Fairness in AI is not just about algorithms and datasets; it's about ensuring that the development process itself is inclusive, transparent, and accountable to the communities it serves.

Furthermore, we must recognise that fairness in AI is not a one-size-fits-all concept. Different contexts and applications may require different notions of fairness. For instance, in a project I led for a government education department, we had to carefully consider various fairness metrics - such as demographic parity, equal opportunity, and individual fairness - to ensure that an AI-driven student placement system was equitable across diverse student populations.

The inclusion of data considerations in the Open Source AI Definition (OSAID) presents a unique opportunity to address these challenges at a fundamental level. By mandating transparency in dataset composition, documentation of data collection methodologies, and clear guidelines for bias detection and mitigation, we can create a framework that promotes fairness from the ground up.

- Mandatory bias audits for datasets used in open source AI projects

- Clear documentation requirements for data provenance and collection methodologies

- Guidelines for diverse and inclusive data collection practices

- Frameworks for ongoing monitoring and updating of datasets to address evolving biases

- Community-driven processes for identifying and addressing potential biases

However, it's crucial to acknowledge that achieving perfect fairness is often an aspirational goal rather than a fully attainable reality. The complexity of human societies and the nuances of fairness mean that we must approach this challenge with humility and a commitment to continuous improvement.

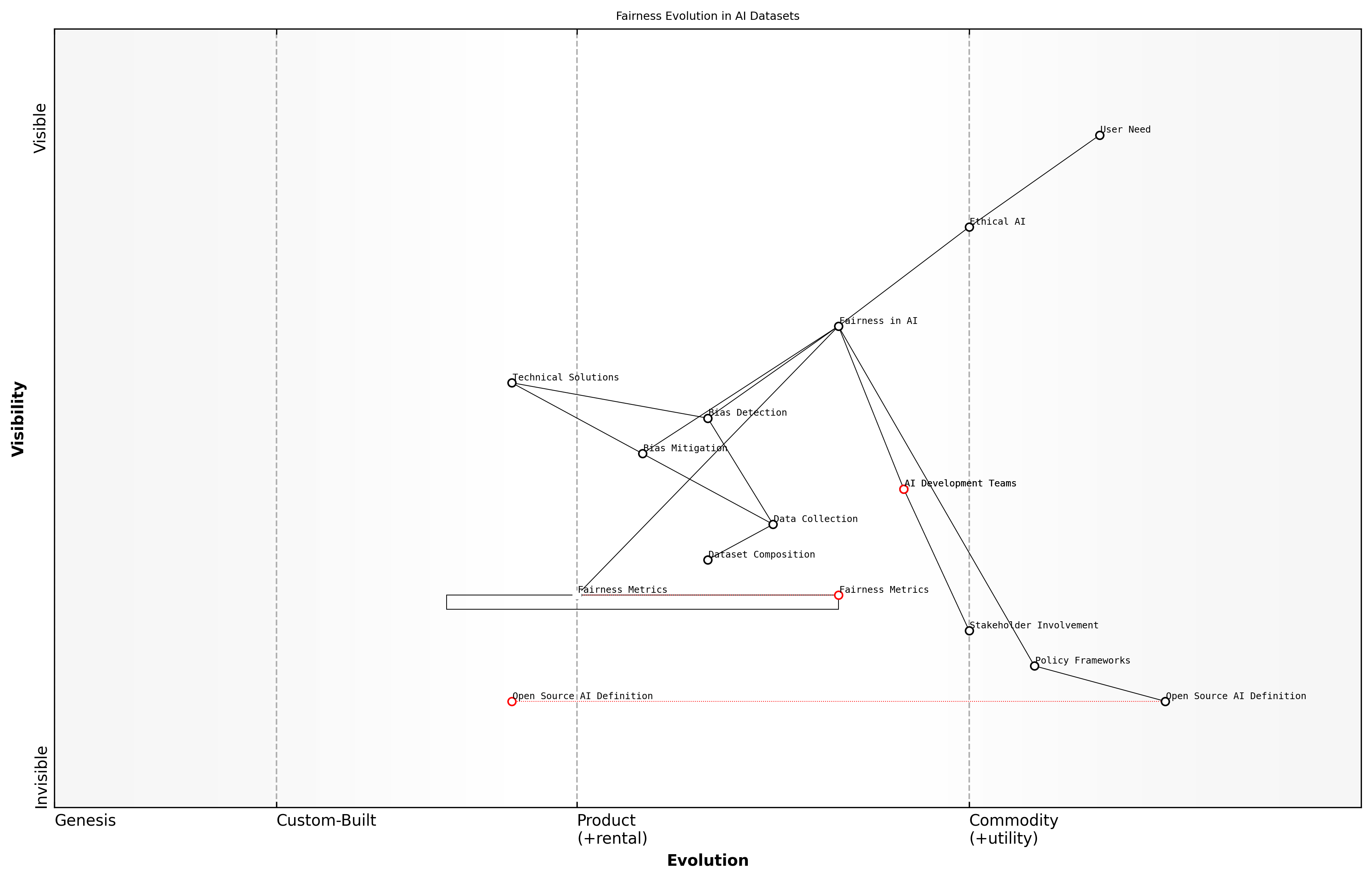

Wardley Map Assessment

This map reveals a strategic landscape where ethical considerations and fairness are becoming central to AI development. Organisations that can effectively integrate technical solutions, policy frameworks, and stakeholder involvement while leading in areas like fairness metrics and open source AI definitions will be well-positioned to dominate the ethical AI market. The key to success lies in balancing rapid technical innovation with thoughtful policy development and inclusive practices.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_13_english_Bias and Fairness in AI Datasets.md)

In conclusion, addressing bias and ensuring fairness in AI datasets is not just an ethical imperative but a crucial factor in building AI systems that are trustworthy, effective, and beneficial to society as a whole. By incorporating robust data practices into the OSAID, we can set a new standard for ethical AI development that prioritises fairness and inclusivity. This approach not only mitigates the risks associated with biased AI but also unlocks the full potential of AI to address societal challenges and promote equality.

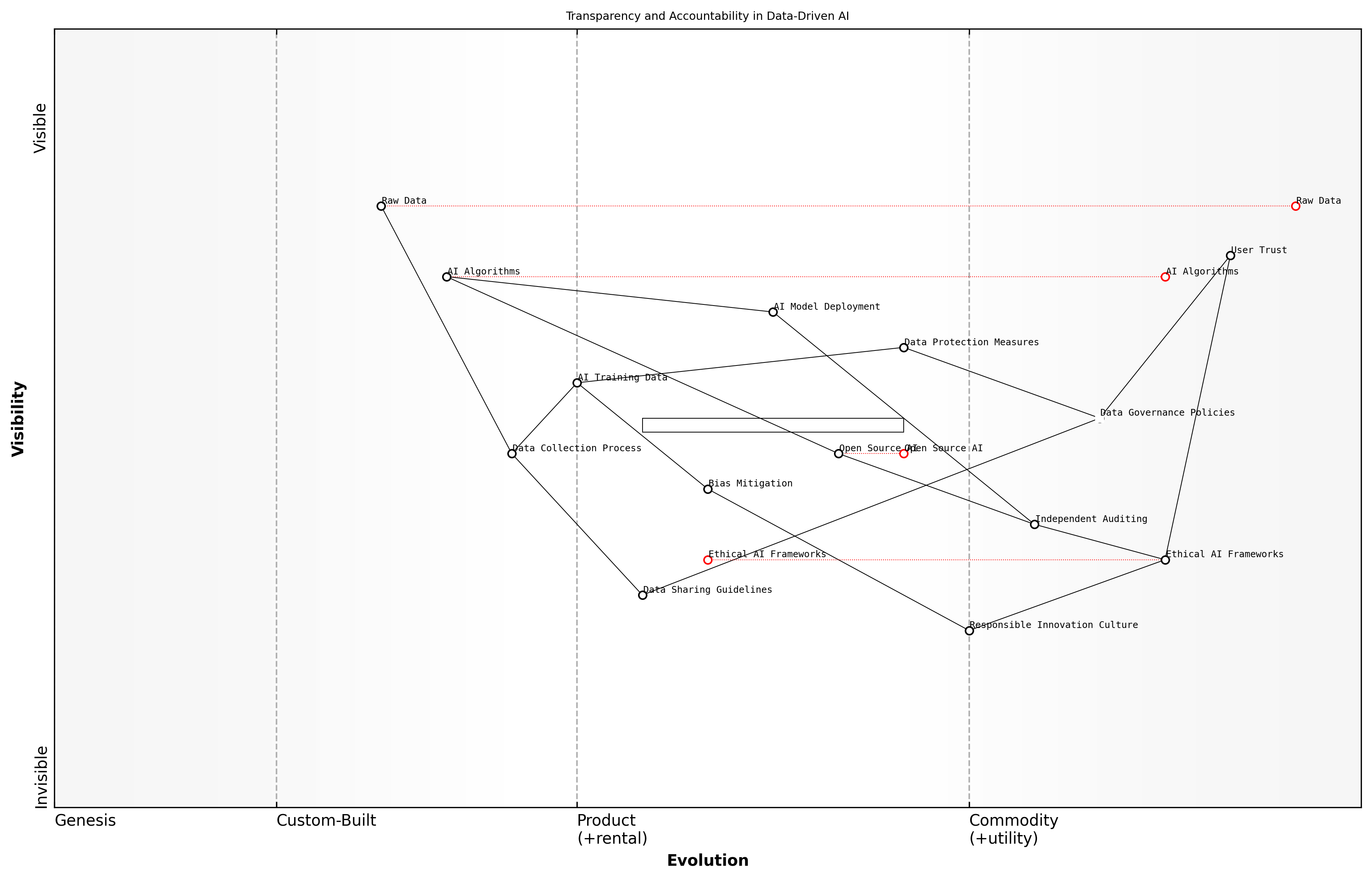

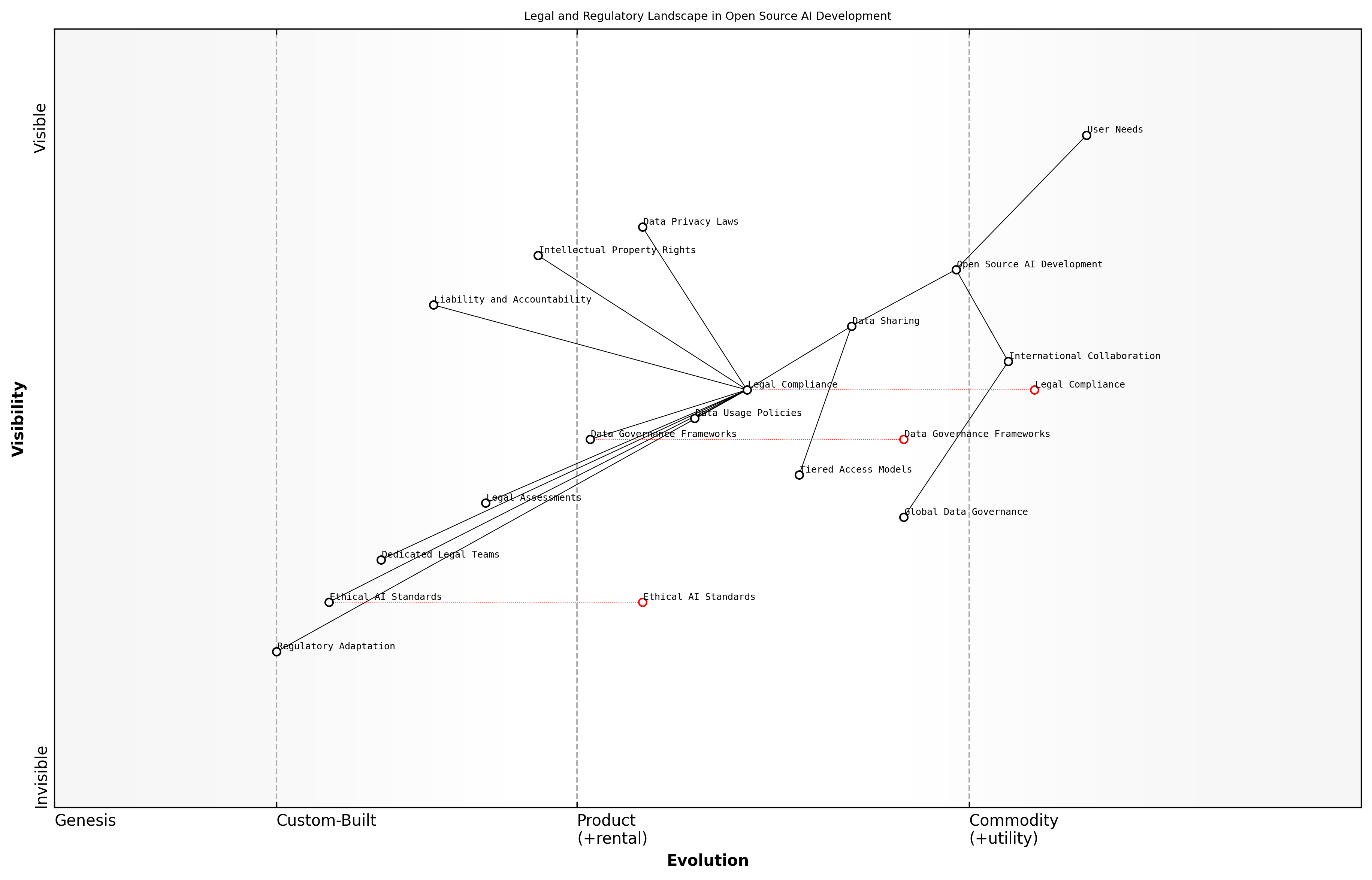

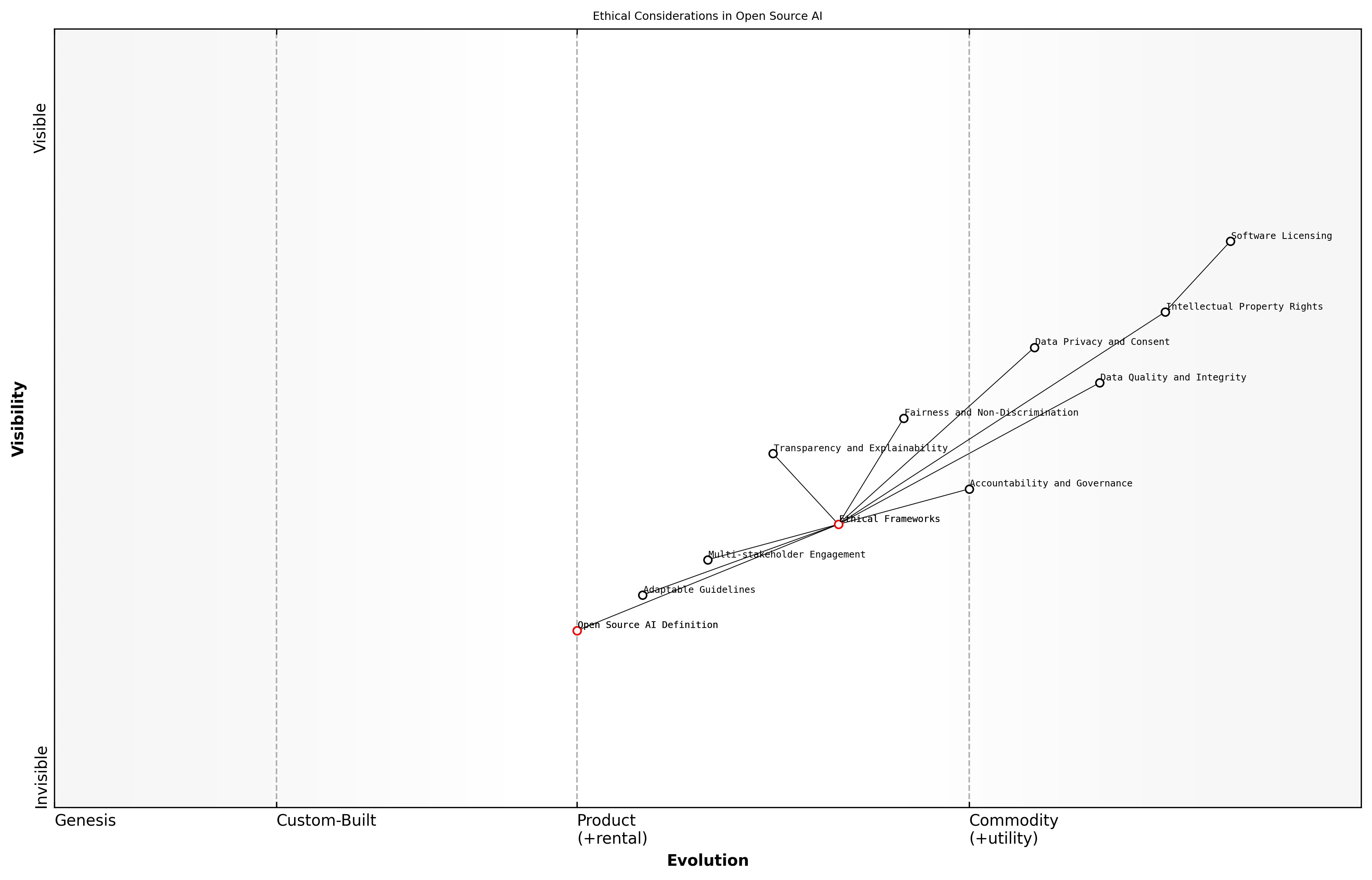

Transparency and Accountability in Data-Driven AI

In the rapidly evolving landscape of artificial intelligence, transparency and accountability have emerged as critical pillars for ensuring ethical and responsible development and deployment of AI systems. As an expert in this field, I can attest that these principles are particularly crucial when it comes to data-driven AI, where the quality, provenance, and handling of data directly impact the outcomes and societal implications of AI applications.

Transparency in data-driven AI refers to the openness and clarity surrounding the data used to train and operate AI systems. This encompasses not only the raw data itself but also the processes of data collection, curation, and preprocessing. Accountability, on the other hand, pertains to the responsibility of AI developers, deployers, and users to ensure that their systems are fair, unbiased, and aligned with ethical standards and societal values.

Transparency without accountability is just window dressing. True ethical AI requires both - the willingness to be open about our data and processes, and the commitment to take responsibility for their impacts.

The imperative for transparency and accountability in data-driven AI is multifaceted. Firstly, it allows for scrutiny and validation of AI systems, enabling researchers, policymakers, and the public to understand how these systems arrive at their decisions or predictions. This is particularly crucial in high-stakes domains such as healthcare, criminal justice, and financial services, where AI-driven decisions can have profound impacts on individuals' lives.

- Enables independent auditing and verification of AI systems

- Facilitates the identification and mitigation of biases in training data

- Supports informed consent and user trust in AI applications

- Enhances the ability to trace and address unintended consequences

- Promotes continuous improvement and refinement of AI models

In the context of open source AI, transparency and accountability take on additional dimensions. The open source ethos inherently aligns with transparency, as it encourages the sharing of code, algorithms, and ideally, the data used to train AI models. However, the inclusion of data in open source AI definitions, such as the OSI's OSAID, presents both opportunities and challenges.

On one hand, including data in open source AI definitions can significantly enhance transparency and accountability. It allows for comprehensive review of the entire AI pipeline, from data collection to model deployment. This level of openness can lead to more robust and fair AI systems, as it enables a diverse community of researchers and practitioners to identify and address potential issues in both the data and the algorithms.

Open source AI without open data is like a car without fuel. It may look impressive, but it won't take us where we need to go in terms of truly transparent and accountable AI systems.

However, the inclusion of data in open source AI definitions also raises complex ethical and practical considerations. Privacy concerns, data ownership rights, and the potential for misuse of sensitive information must be carefully balanced against the benefits of transparency. This necessitates the development of nuanced frameworks and guidelines for responsible data sharing in open source AI contexts.

- Implementing robust anonymisation and data protection measures

- Establishing clear data governance policies and access controls

- Developing ethical guidelines for data collection and usage in AI

- Creating mechanisms for ongoing monitoring and auditing of data-driven AI systems

- Fostering a culture of responsible innovation in the AI community

As we navigate these challenges, it's crucial to recognise that transparency and accountability in data-driven AI are not static goals, but ongoing processes. They require continuous engagement, adaptation, and collaboration among diverse stakeholders, including AI developers, policymakers, ethicists, and representatives from affected communities.

Wardley Map Assessment

This Wardley Map reveals a strategic focus on building user trust through ethical AI development and robust governance. The organisation is well-positioned to lead in transparent and accountable AI, but must address key capability gaps and potential misalignments. By prioritising ethical frameworks, independent auditing, and responsible innovation, while also advancing technical capabilities, the organisation can establish a strong competitive advantage in the rapidly evolving AI landscape.

[View full Wardley Map report](markdown/wardley_map_reports/wardley_map_report_14_english_Transparency and Accountability in Data-Driven AI.md)

In conclusion, the inclusion of data in open source AI definitions, such as the OSI's OSAID, is a critical step towards enhancing transparency and accountability in AI systems. It presents an opportunity to set new standards for ethical AI development and deployment, fostering trust and ensuring that the benefits of AI are realised responsibly and equitably across society. As we move forward, it is imperative that we continue to refine our approaches to transparency and accountability, always keeping in mind the profound impact that data-driven AI can have on individuals and communities worldwide.

Balancing Openness and Protection

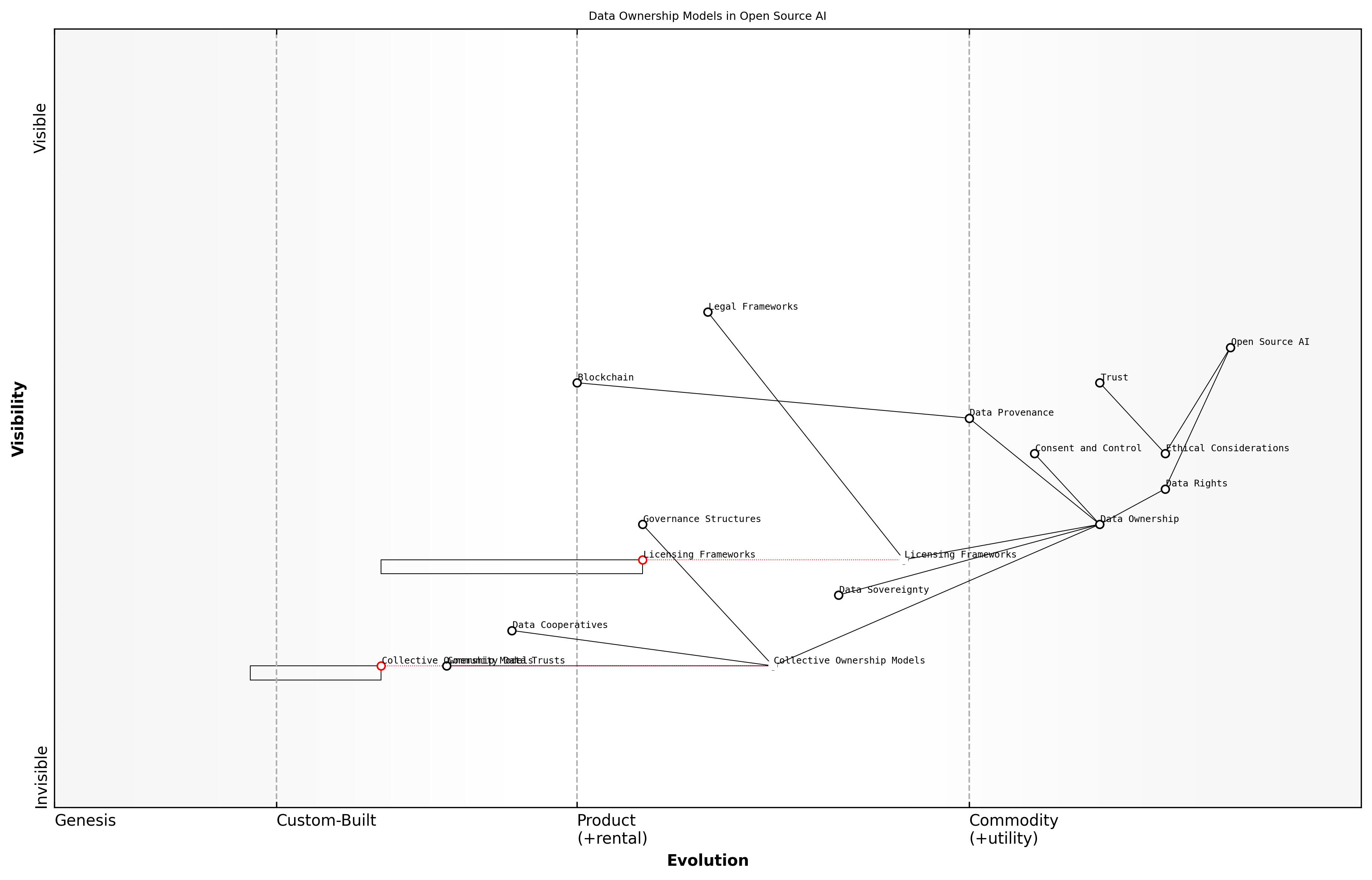

Data Rights and Ownership in Open Source AI

In the realm of open source AI, the question of data rights and ownership stands as a critical and complex issue that demands careful consideration. As we navigate the intersection of open source principles and AI development, it becomes increasingly apparent that traditional notions of intellectual property and data ownership must be re-examined and adapted to this new paradigm.

The fundamental tension in open source AI lies in balancing the ethos of openness and collaboration with the need to protect sensitive data and respect individual privacy. This balance is crucial not only for ethical reasons but also for fostering trust and encouraging participation in open source AI initiatives.

The challenge we face is not whether to share data, but how to share it responsibly and ethically while preserving the spirit of open source.

To address this challenge, we must consider several key aspects of data rights and ownership in the context of open source AI:

- Data Provenance and Attribution

- Consent and Control

- Licensing Frameworks

- Data Sovereignty

- Collective Ownership Models

Data Provenance and Attribution: In open source AI projects, it is crucial to establish clear mechanisms for tracking the origin and lineage of data. This not only ensures proper credit is given to data contributors but also helps in maintaining the integrity and quality of datasets. Implementing robust data provenance systems allows for transparency and accountability, which are essential in building trust within the open source community.

Consent and Control: A fundamental principle in data rights is the concept of informed consent. In open source AI, this translates to giving data subjects (individuals whose data is being used) the ability to understand how their data will be used and the option to control its usage. This may involve implementing granular permissions systems that allow individuals to specify which parts of their data can be used and for what purposes.

Licensing Frameworks: The development of specialised licensing frameworks for AI data is an area that requires significant attention. While software licensing models like GPL and MIT have been adapted for AI code, data licensing remains a more complex issue. There is a need for licences that can accommodate the unique characteristics of AI datasets, including their dynamic nature and potential for bias.

We need to evolve our licensing frameworks to reflect the nuanced nature of AI data, balancing openness with responsible use and ethical considerations.

Data Sovereignty: In an increasingly globalised AI landscape, the concept of data sovereignty becomes particularly relevant. This involves recognising and respecting the rights of nations, communities, or indigenous groups to maintain control over data that pertains to their people, culture, or natural resources. Open source AI initiatives must be sensitive to these concerns and develop protocols that honour data sovereignty principles.

Collective Ownership Models: The collaborative nature of open source AI development calls for innovative approaches to data ownership. Collective ownership models, where data is held in trust for the benefit of the community, could provide a framework for balancing individual rights with the collective good. These models could include data cooperatives or community data trusts that manage and govern the use of shared datasets.

Wardley Map Assessment

This Wardley Map reveals a dynamic and evolving landscape of data ownership in open source AI. The strategic focus should be on balancing innovation with ethical considerations, fostering trust, and developing new models of collective ownership. Key areas for investment include data provenance, governance structures, and emerging ownership models. The ability to navigate the complex interplay between technical capabilities, legal frameworks, and community trust will be crucial for success in this ecosystem.