Open Source as a Competitive Weapon: Strategy, Community and Business Insight

Table of Contents

- Open Source as a Competitive Weapon: Strategy, Community and Business Insight

- Introduction: The Rise of Open Source as a Board‑Level Asset

- Chapter 1: Strategic Frameworks and Cross‑Disciplinary Foundations

- Chapter 2: Building and Governing High‑Impact Communities

- Sample CONTRIBUTING.md

- Sample dashboard configuration - Continuous iteration based on data

- Sample RACI escalation snippet - Maintaining a positive community culture

- Sample integration module definition

- Example GitLab CI job for licence scanning - Defensive publishing and patent strategies

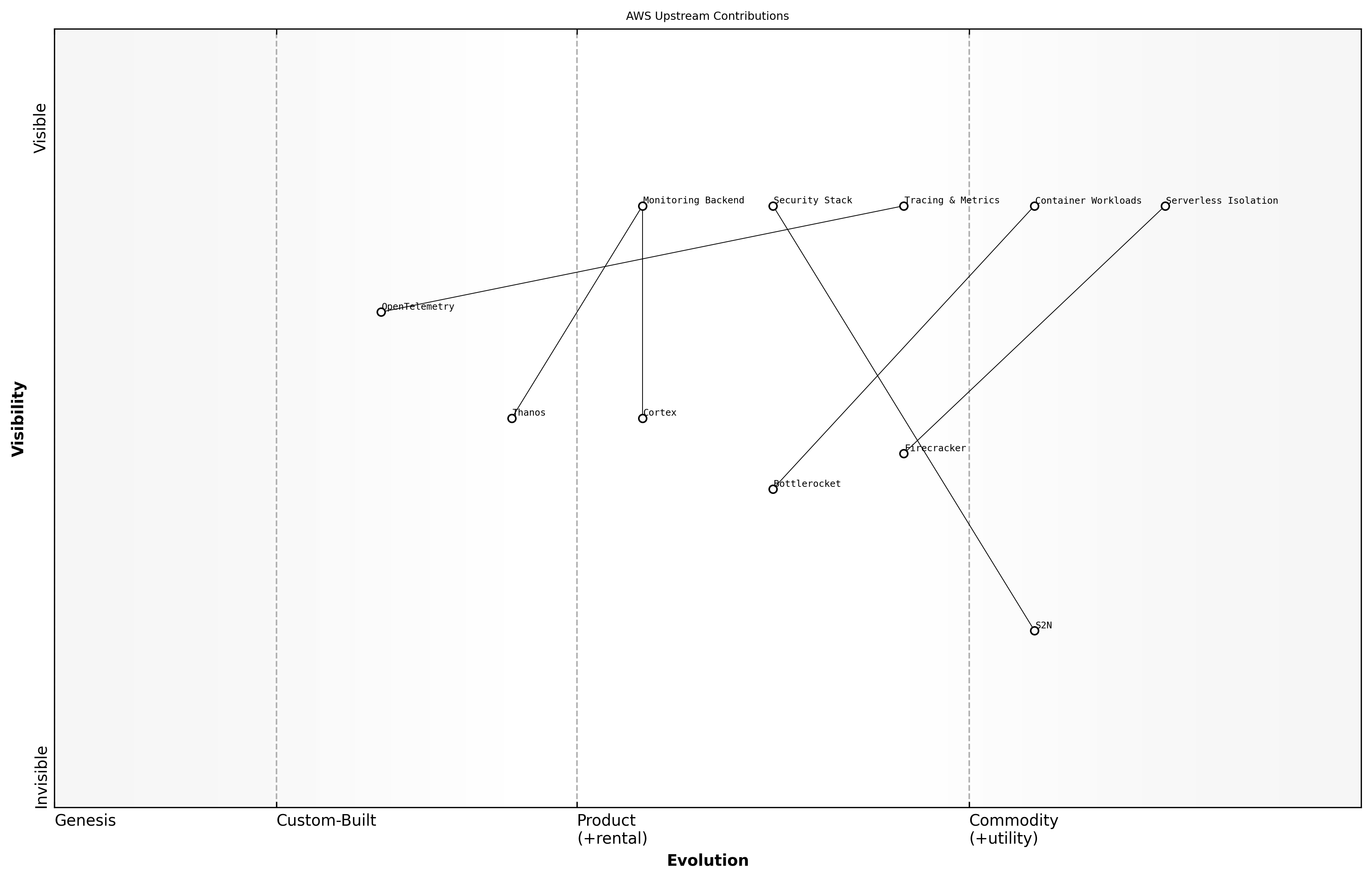

- Sample tracking of AWS open source contributions - Balancing competition and collaboration - Analysing ecosystem influence

- Sample KPI configuration for community dashboard - Dashboard design patterns - Customisation examples

- Strategic Context

- Open Source Opportunity

- Evidence

- Proposed Actions

- Expected Outcomes - Stakeholder engagement strategies - Measuring and celebrating wins

- Sample announcement in team channel

Introduction: The Rise of Open Source as a Board‑Level Asset

From Hobby to Strategy

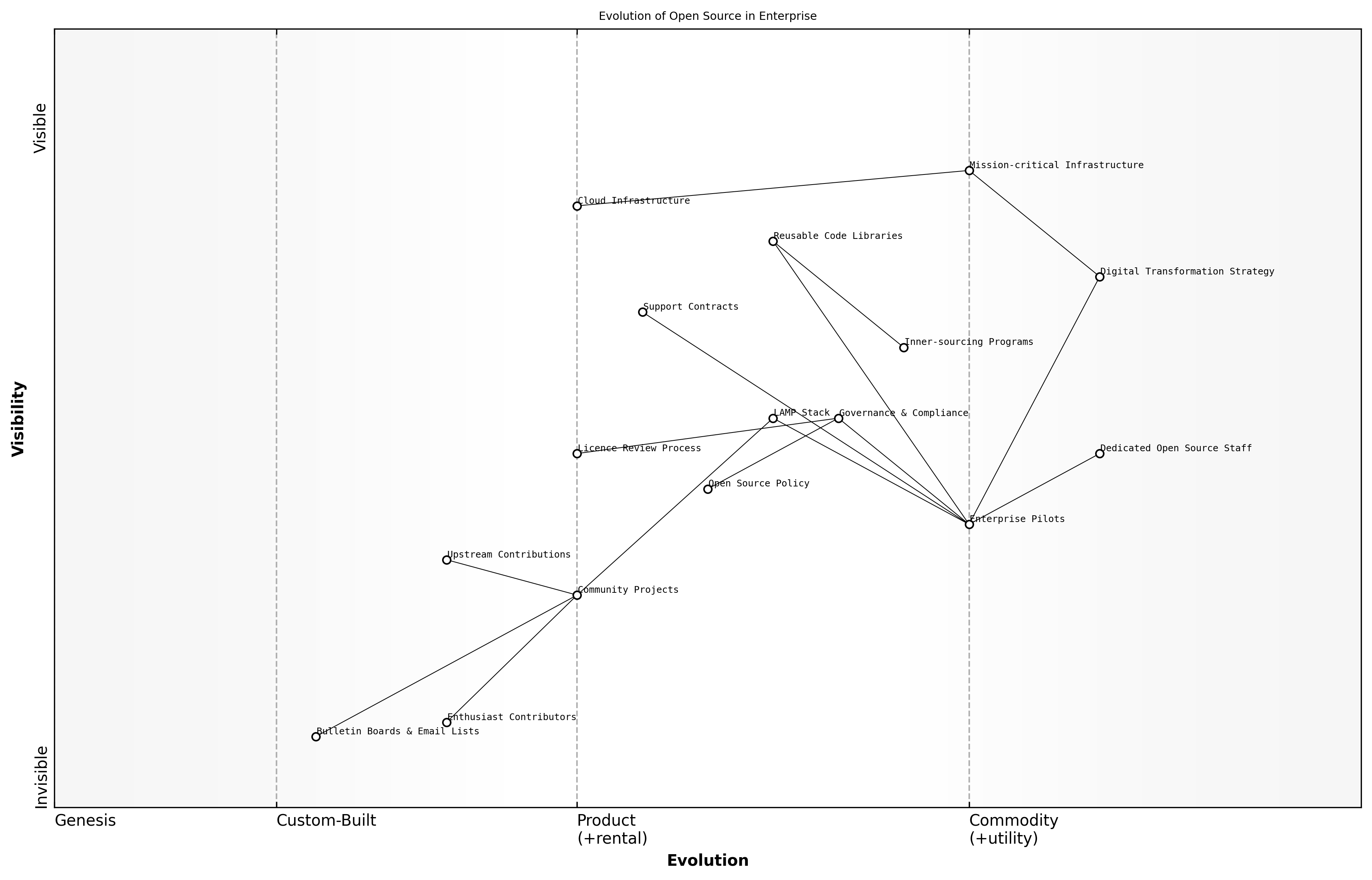

The evolution of open source in enterprise

Open source began as a passion project among academics and enthusiasts, rooted in the belief that software should be freely shared, studied and improved collaboratively. Early communities formed around bulletin boards and email lists, driven by curiosity rather than commercial gain. Code releases lacked formal support structures, and projects were maintained informally by individuals volunteering their spare time.

Over time, pioneering enterprises recognised the potential to reduce costs and accelerate development cycles by leveraging these community‑driven innovations. The rise of the LAMP stack in the early 2000s demonstrated how open source components could underpin mission‑critical infrastructure. Organisations began to experiment with internal deployments, assigning dedicated staff to evaluate stability, performance and security.

As these pilots matured, the narrative shifted from mere cost‑saving to value creation. Open source projects became conduits for external expertise, tapping into global contributor networks to enhance features and fix bugs at unprecedented speed. Enterprises invested in upstream contributions, recognising that participating actively in communities yielded reputational and technical dividends.

This phase ushered in formal governance and compliance practices. Procurement teams established open source policies, legal departments built licence review processes, and dedicated support contracts emerged from specialist vendors. Programmes such as inner‑sourcing extended open source principles to internal teams, fostering cross‑department collaboration and reusable code libraries.

- Cost optimisation through reuse of battle‑tested components

- Accelerated innovation via community contributions

- Mitigation of vendor lock‑in and proprietary risk

- Access to a broader talent pool and skills ecosystem

- Enhanced security through transparent code review

Boardrooms began to view open source not as a technical curiosity but as a strategic lever for digital transformation. It aligns with broader imperatives such as interoperability, sovereign capability and rapid response to evolving threats. By embedding open source into enterprise strategy, organisations unlock agility and resilience at scale.

“What was once a cottage industry has become mission‑critical,” says a senior government official.

This journey from hobby to strategy sets the stage for the frameworks and governance models that follow. Understanding this evolution is crucial for leaders seeking to harness open source as a sustained competitive weapon.

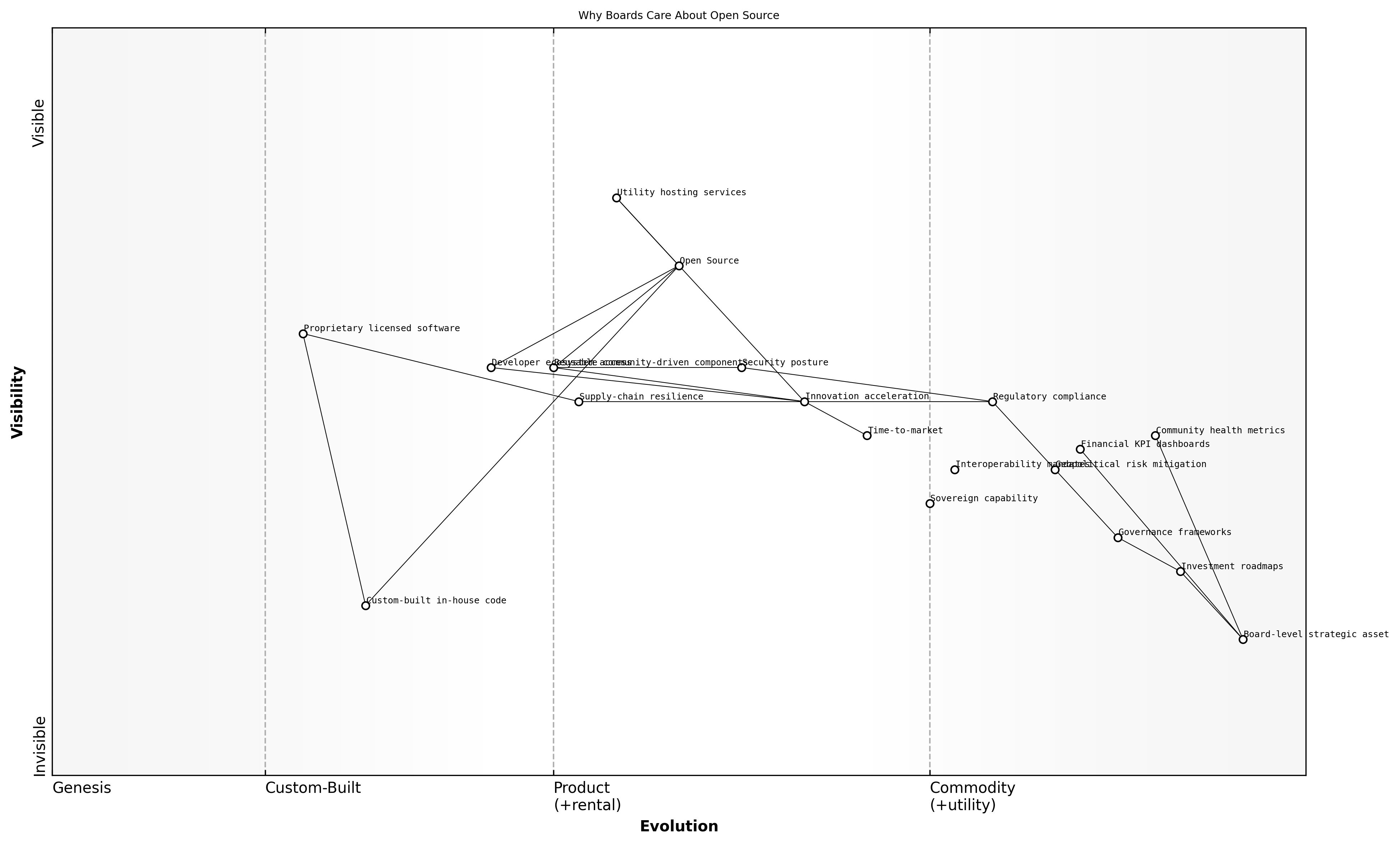

Why boards now care about open source

Board‑level executives are increasingly attuned to open source as a strategic asset rather than a niche technical concern. As organisations navigate rapid digital transformation, the transparent, collaborative nature of open source offers distinctive advantages that resonate with the highest‑level strategic imperatives.

Beyond cost savings, boards recognise open source as a lever for accelerating innovation, de‑risking supplier relationships, and fostering greater organisational agility. The shift from pilot projects to enterprise‑wide adoption has elevated open source to a boardroom priority.

- Strategic agility through reusable, community‑driven components

- Cost optimisation via avoidance of proprietary licence fees

- Enhanced security posture with transparent, peer‑reviewed code

- Supply‑chain resilience and reduced vendor lock‑in risk

- Access to global talent and a vibrant developer ecosystem

- Accelerated time‑to‑market by leveraging upstream innovations

- Alignment with sovereign capability and interoperability mandates

These drivers align tightly with board‑level concerns such as regulatory compliance, geopolitical risk, and sustainable digital strategy. By embedding open source within governance frameworks and investment roadmaps, organisations mitigate long‑term risks and unlock new avenues for competitive differentiation.

“Open source is no longer an IT project but a strategic imperative,” says a senior government official.

Measuring the impact of open source initiatives requires new metrics that speak to executive audiences. Boards now expect dashboards that track community health alongside financial KPIs, ensuring transparency in both technical progress and business value.

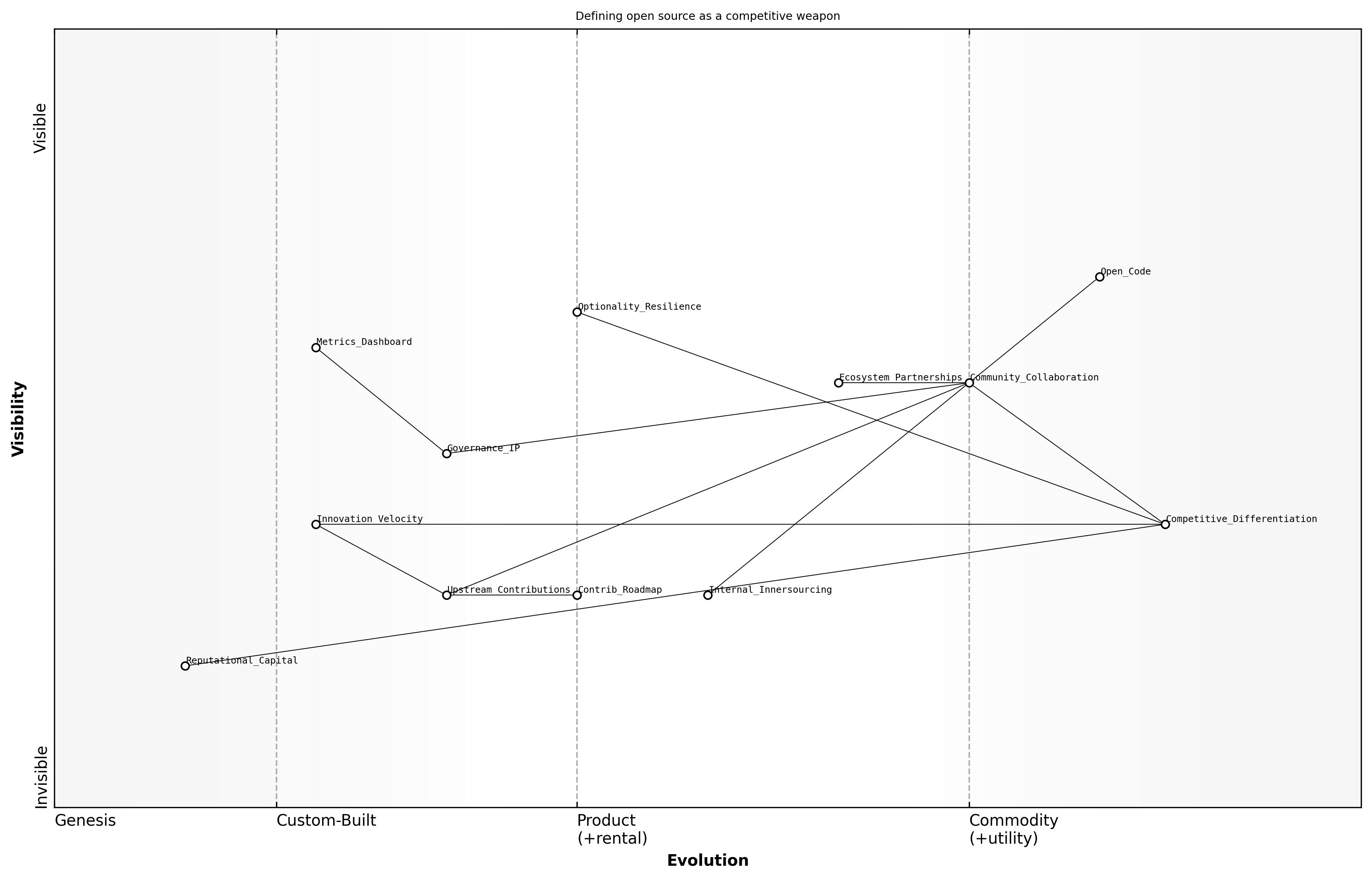

Defining open source as a competitive weapon

Having traced the journey from hobbyist origins to board‑level strategy, we now define open source as a competitive weapon: a deliberate approach in which organisations harness transparency, community collaboration and shared innovation to shape markets, accelerate delivery and exert strategic influence in digital ecosystems.

- Strategic differentiation through customisable, open technologies that competitors cannot easily replicate

- Ecosystem leverage by galvanising global communities to co‑create features, fix defects and influence roadmaps

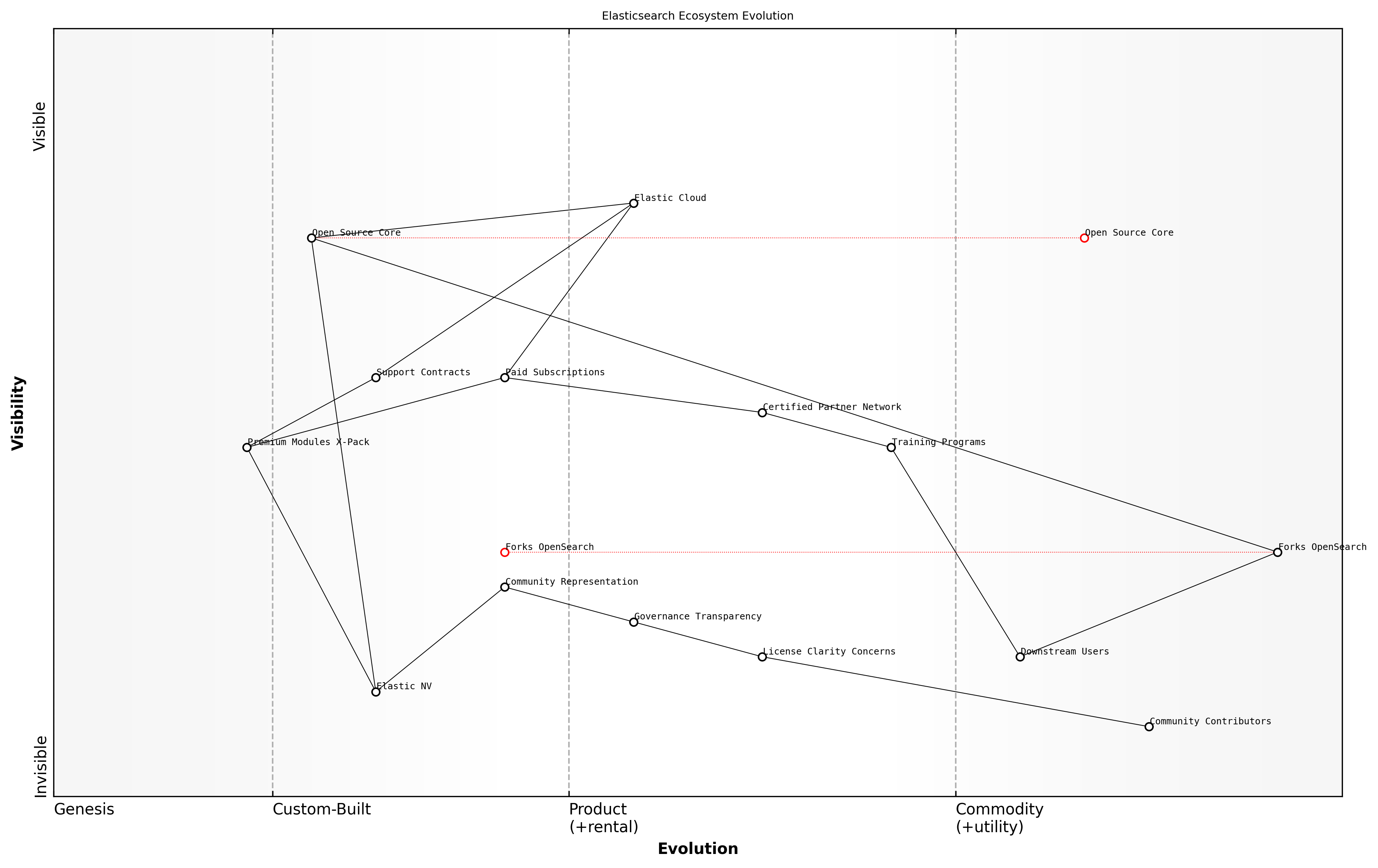

- Optionality and resilience via avoidance of single‑vendor lock‑in and the freedom to fork or self‑host

- Innovation velocity driven by upstream contributions and continuous peer‑review feedback loops

- Reputational capital earned by visible leadership and stewardship of critical open projects

This concept aligns with core principles of open source as a public good and commons management. By treating open code as an asset to be orchestrated rather than a cost to be controlled, organisations unlock network effects, drive supply‑side scale and foster competitive advantage. They move beyond consumption towards active ecosystem leadership.

“Open source becomes a competitive weapon when organisations shift from mere usage to orchestrating and shaping the ecosystem,” says a leading expert in the field.

- Establish an inner‑sourcing programme to mirror external community practices within the enterprise

- Define a contributory roadmap that directs internal investments towards high‑impact upstream projects

- Implement governance and IP policies that balance openness with security and compliance

- Develop metrics dashboards that blend community health indicators with business KPIs

- Cultivate ecosystem partnerships and alliance structures to defend against competitive encroachment

By operationalising open source as a competitive weapon, boards can steer sustainable digital transformation, balancing strategic risk with long‑term value creation and ensuring that open innovation remains a continual source of differentiation.

Key Themes and Structure of This Book

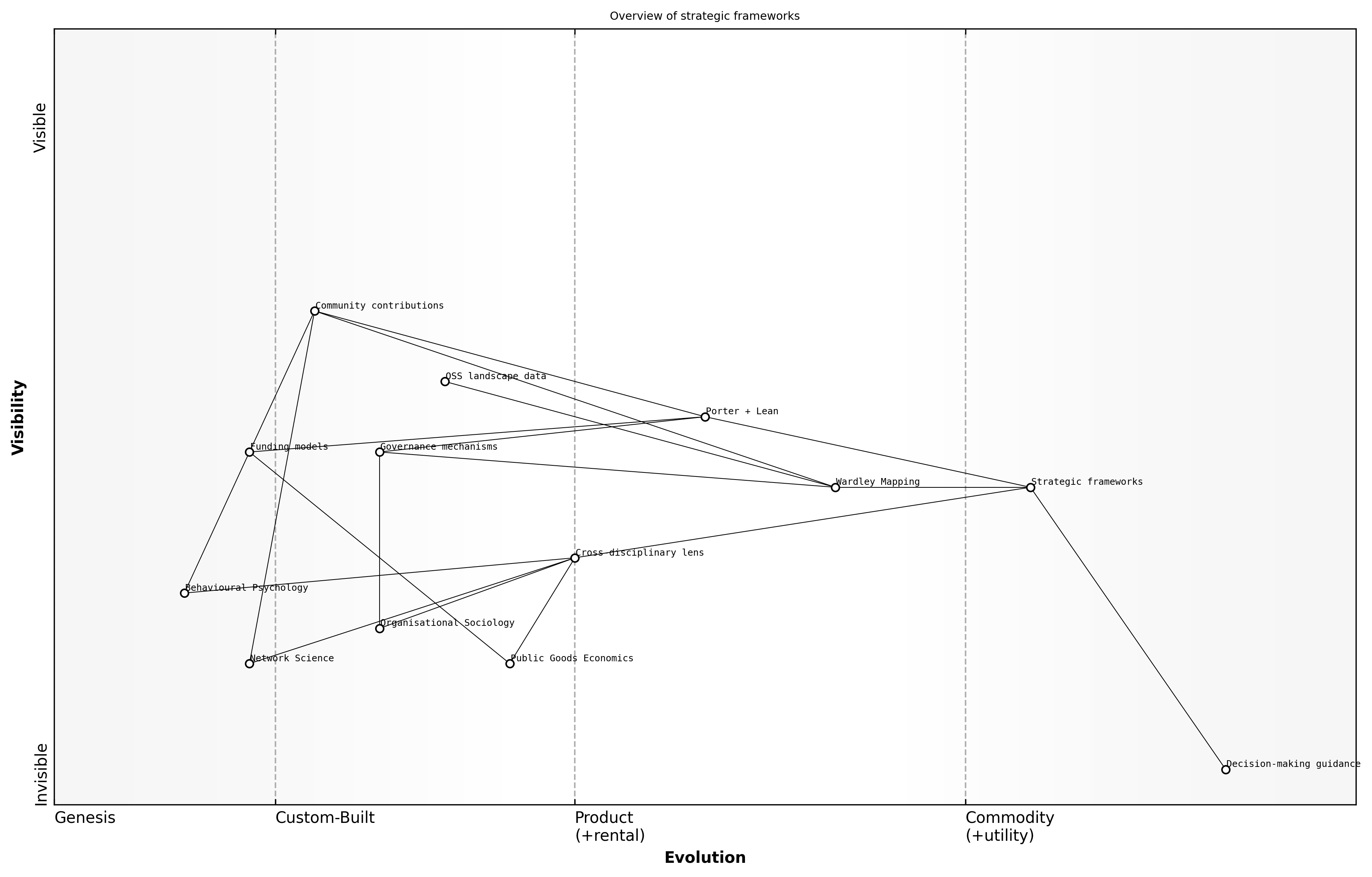

Overview of strategic frameworks

Strategic frameworks provide a common language for boards and leadership teams to understand the unique dynamics of open source. They guide decision‑making by revealing where to invest, how to differentiate and when to collaborate or compete.

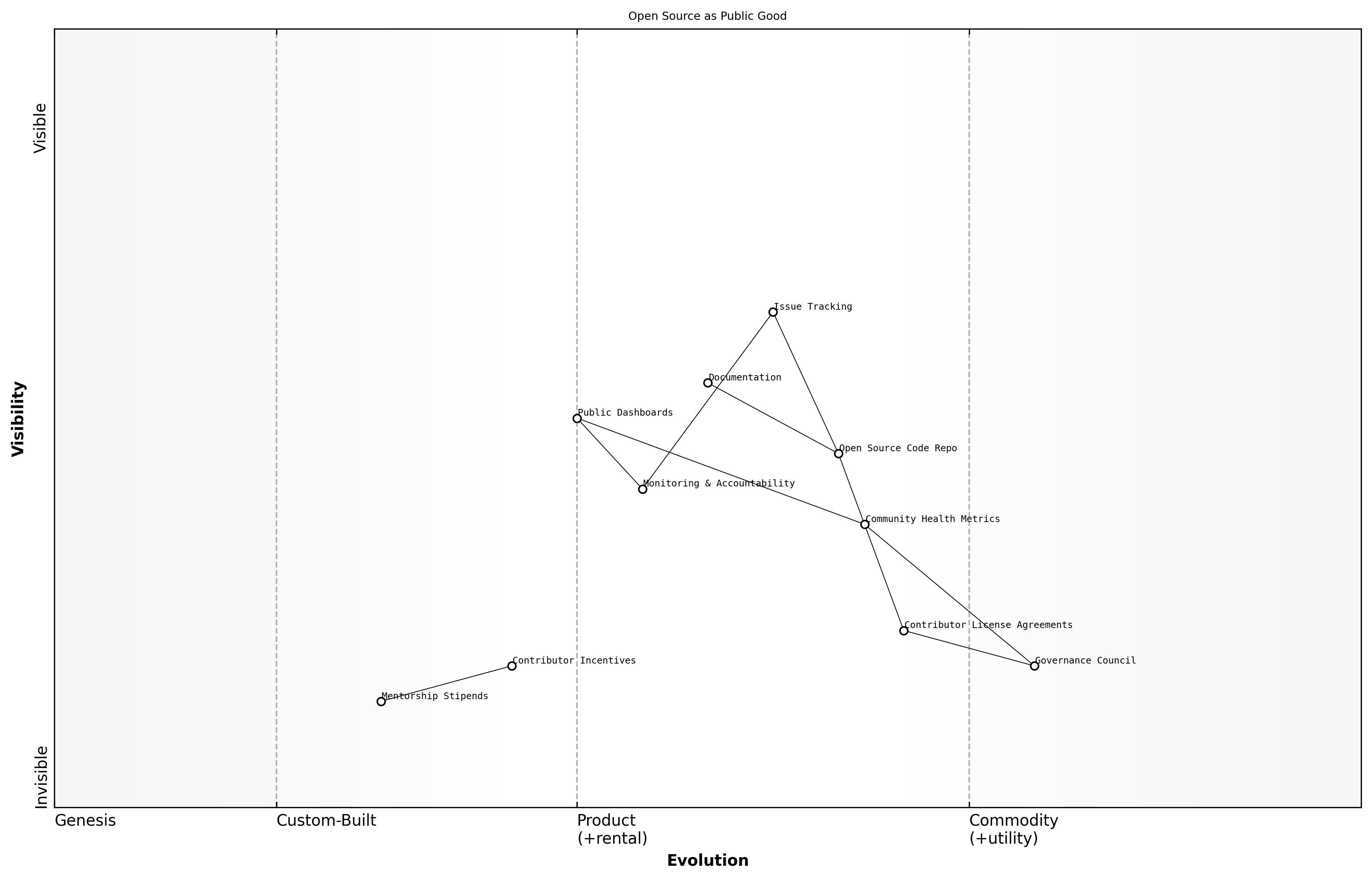

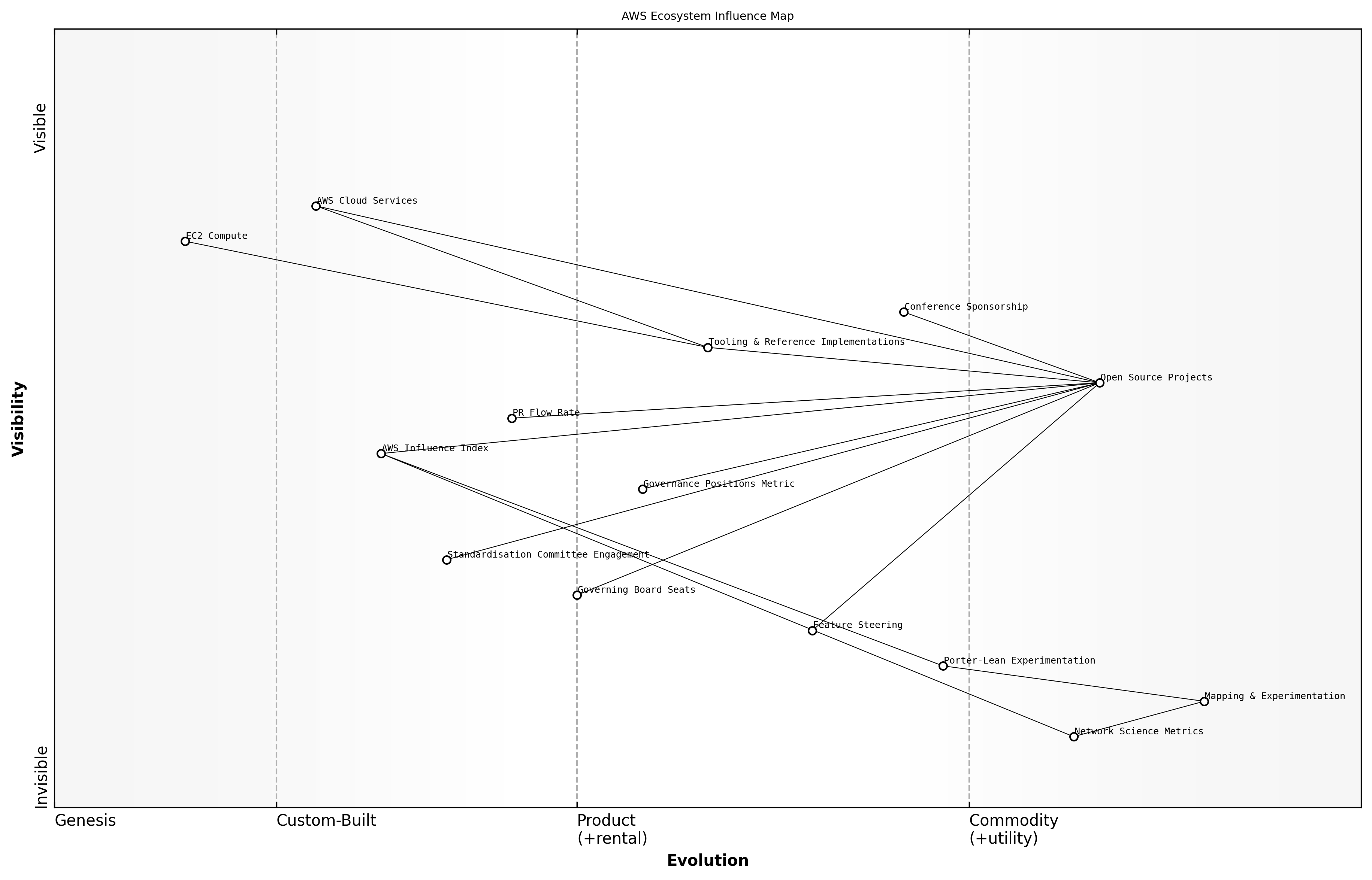

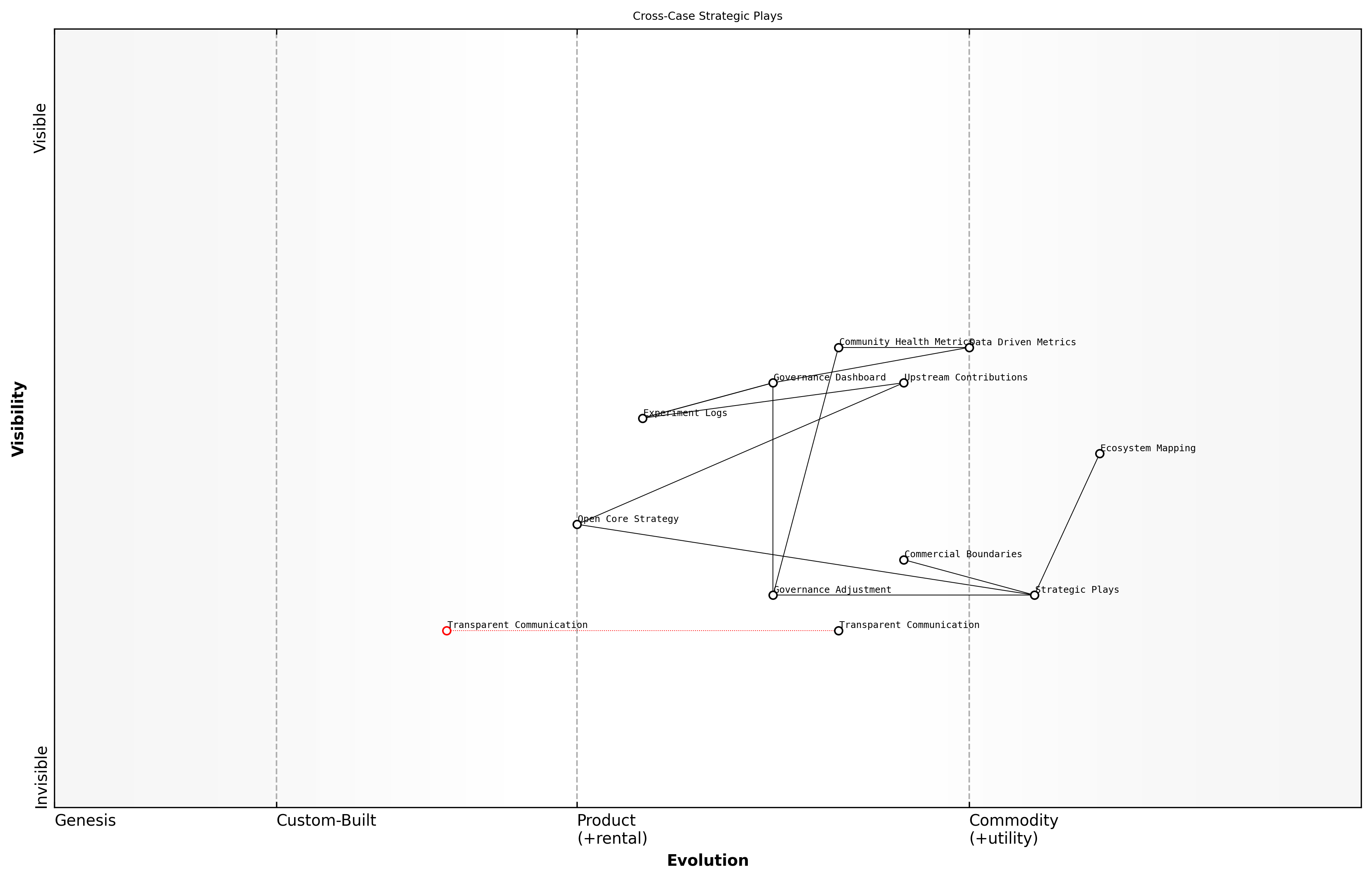

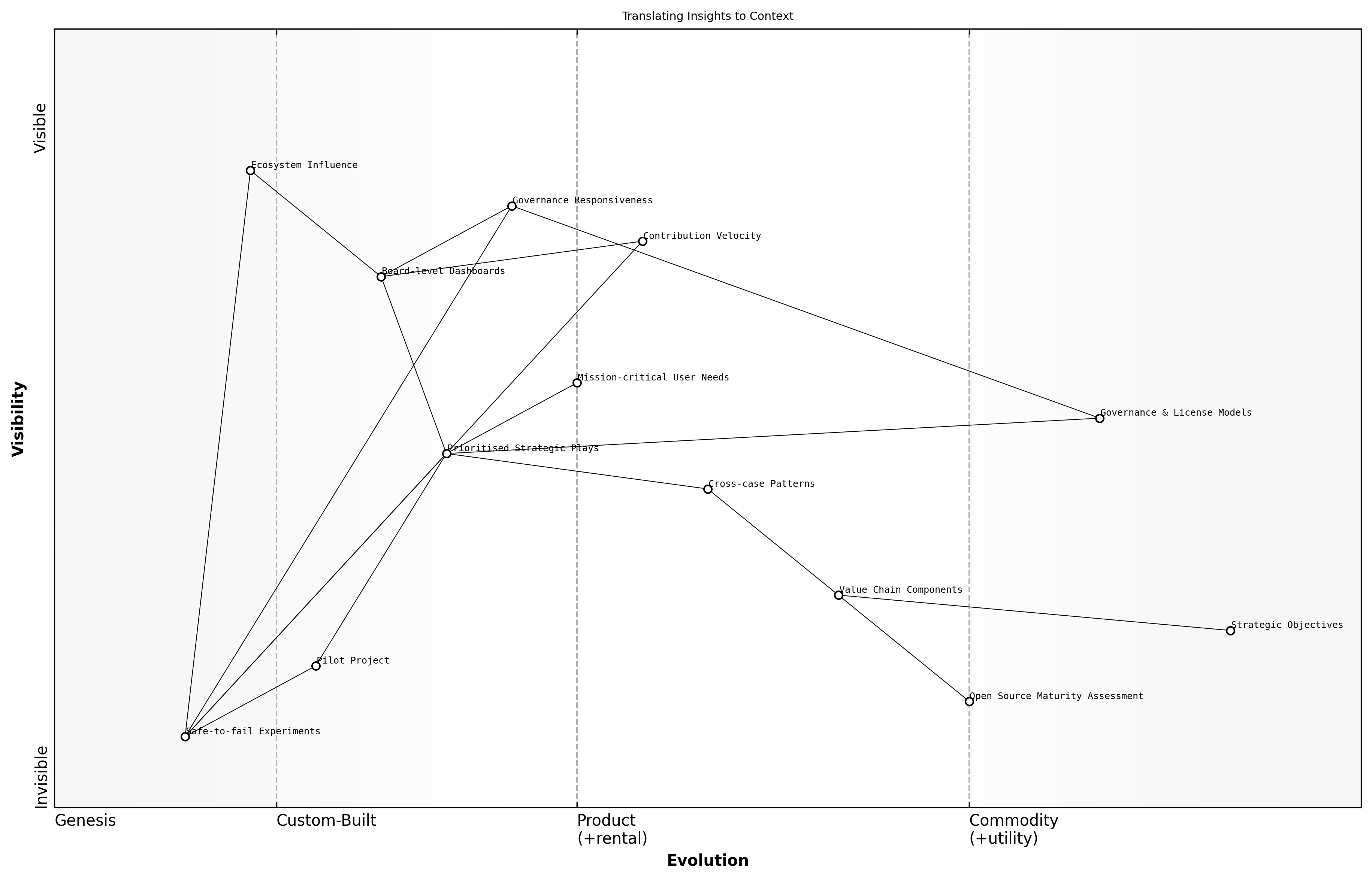

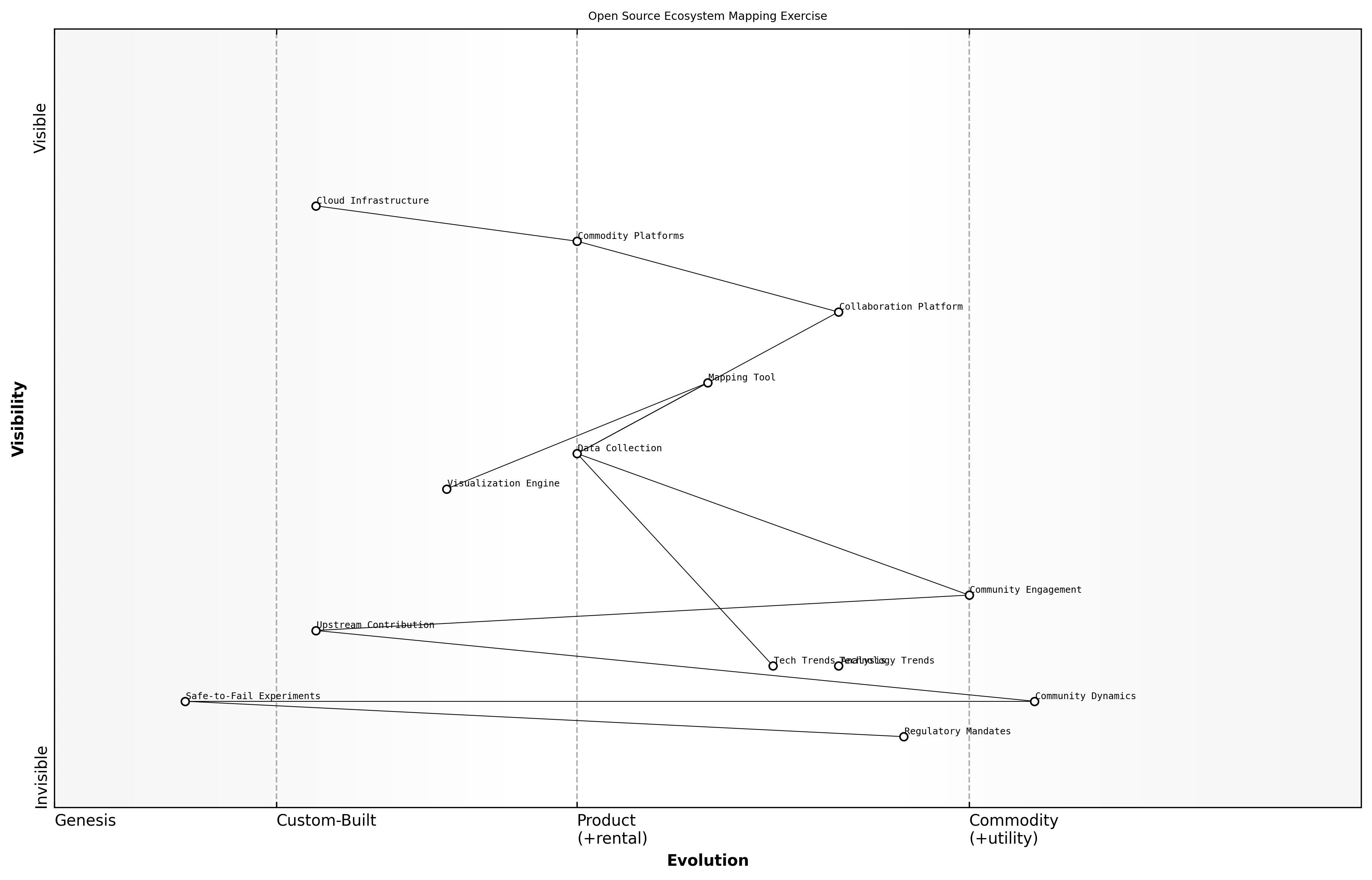

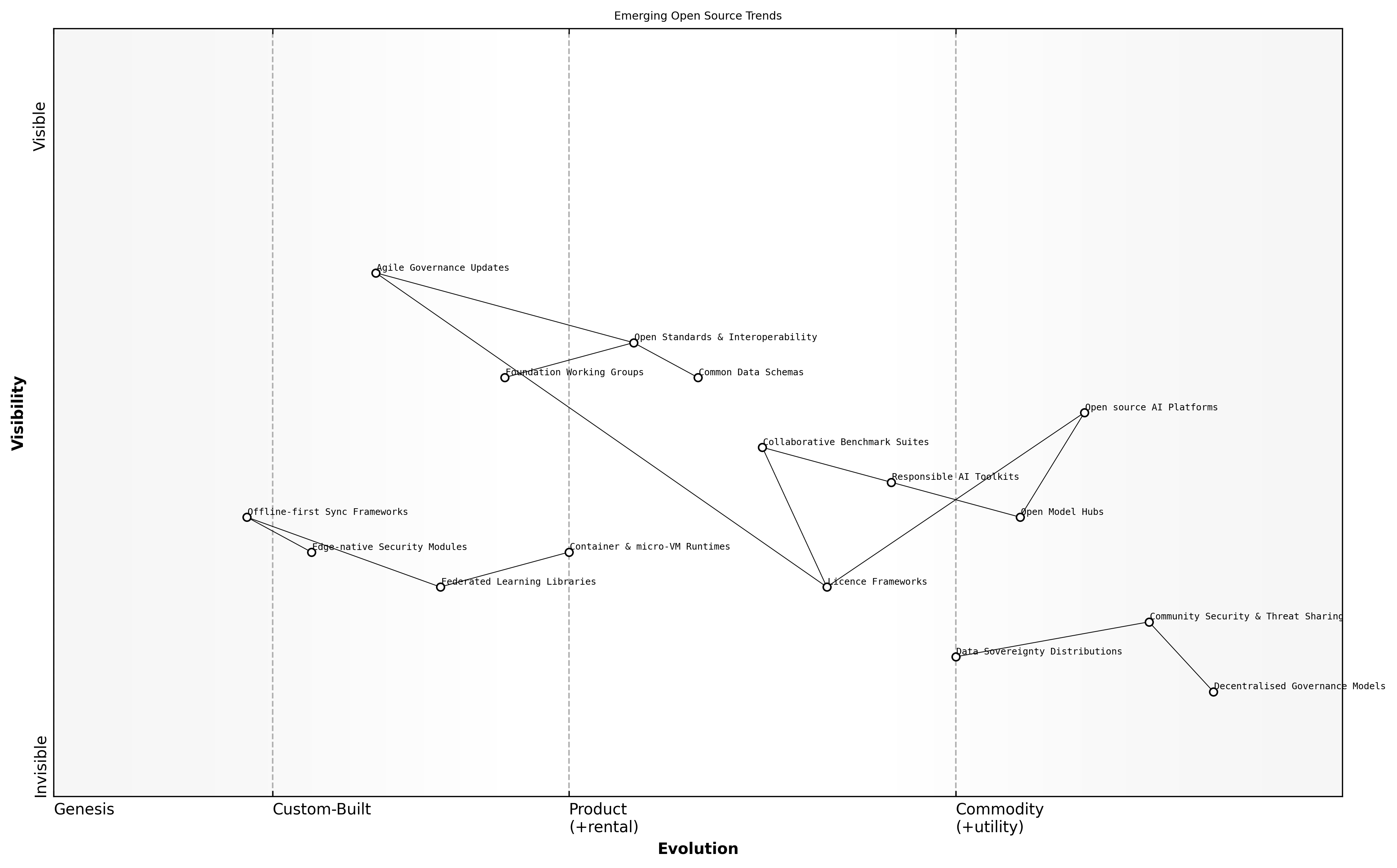

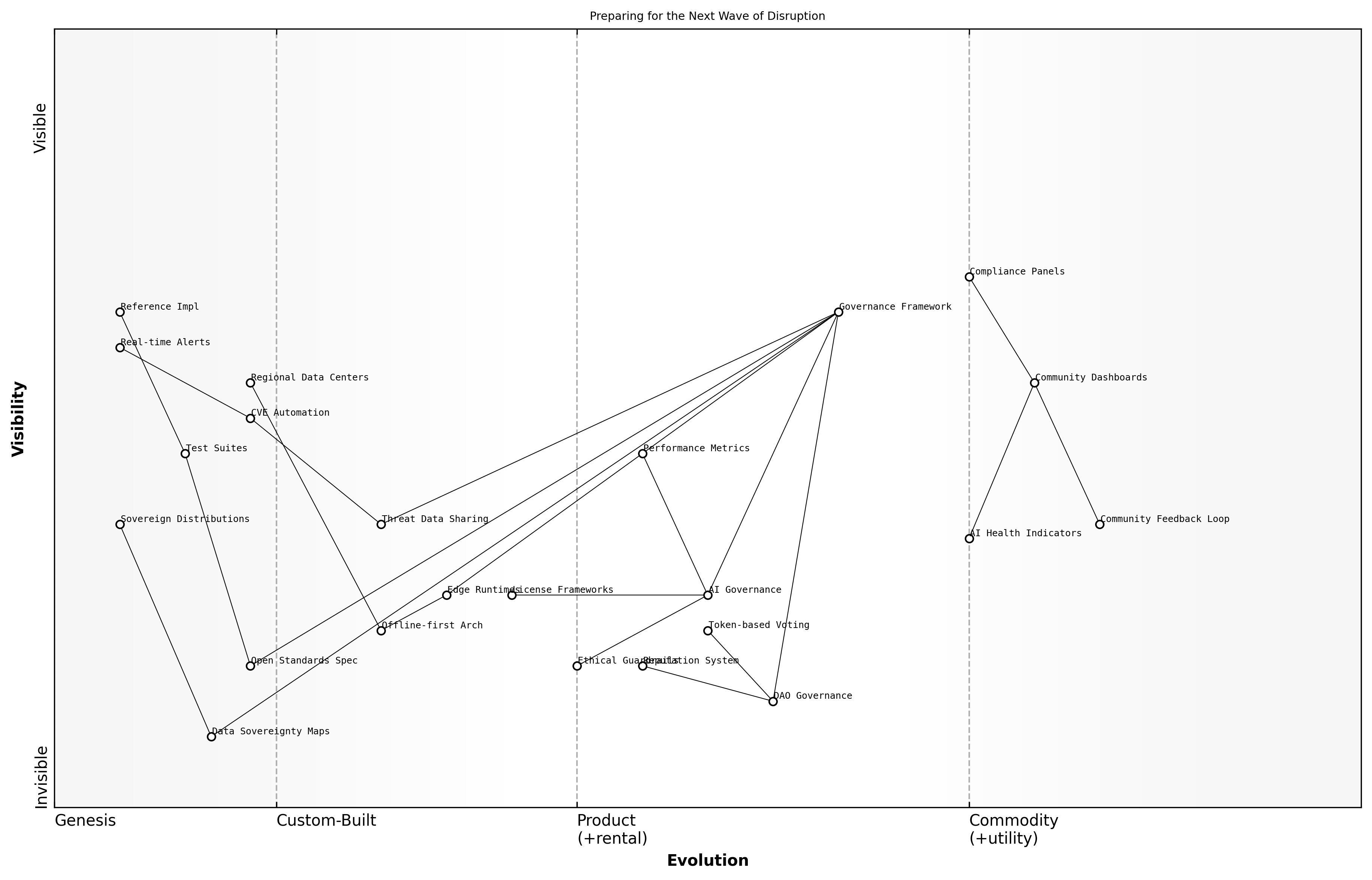

- Wardley Mapping: visualising the open source landscape, value chain stages and evolution trajectories

- Porter’s Five Forces meets Lean Startup: analysing competitive pressure and adopting iterative experimentation

- Cross‑disciplinary lenses: integrating economic public‑goods theory, organisational sociology, network science and behavioural psychology

Wardley Mapping offers a visual representation of an organisation’s components—from genesis to commodity—and their user needs. By plotting open source projects within this map, leaders can identify strategic plays such as commoditisation, customisation and ecosystem orchestration. This framework helps prioritise investments in upstream contributions or in‑house development based on movement along the evolution axis.

Combining Porter’s Five Forces with Lean Startup principles creates a powerful hybrid. The Five Forces model highlights supplier power, barriers to entry and competitive rivalry within open source communities, while Lean Startup emphasises rapid hypothesis testing, minimum viable engagements and validated learning. Together they inform when to launch new open source initiatives, how to structure governance and how to iterate on features based on community feedback.

Cross‑disciplinary lenses enrich these strategic perspectives. Public‑goods economics reveals how shared infrastructure can be sustained and funded. Organisational sociology uncovers governance tensions and decision‑making norms. Network science maps contributor relationships and dependency graphs. Behavioural psychology guides incentive design and motivation strategies to attract and retain talent.

“Strategic frameworks allow us to see patterns and make deliberate plays based on community velocity and ecosystem positioning,” says a leading expert in the field.

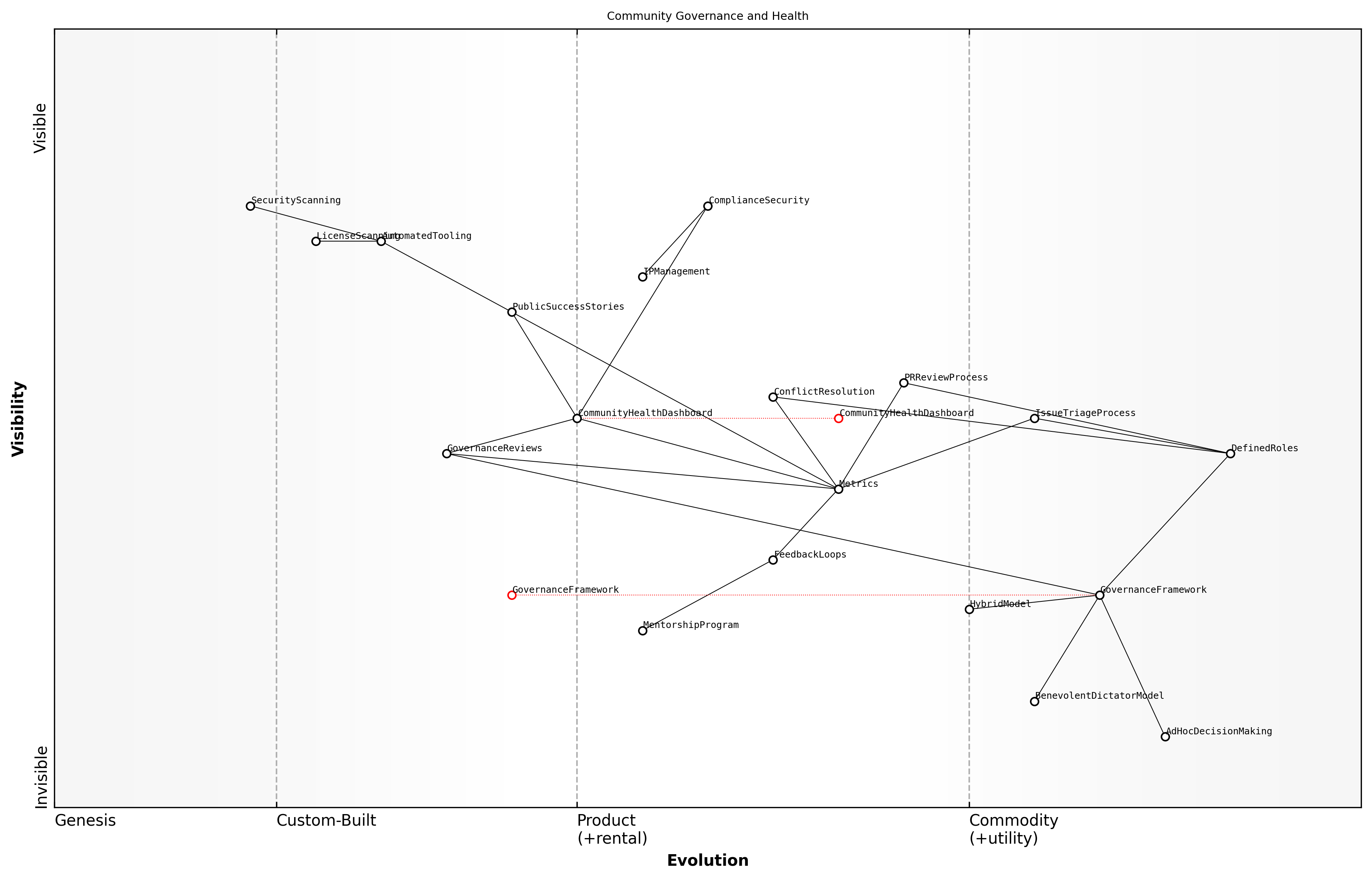

Community governance and health

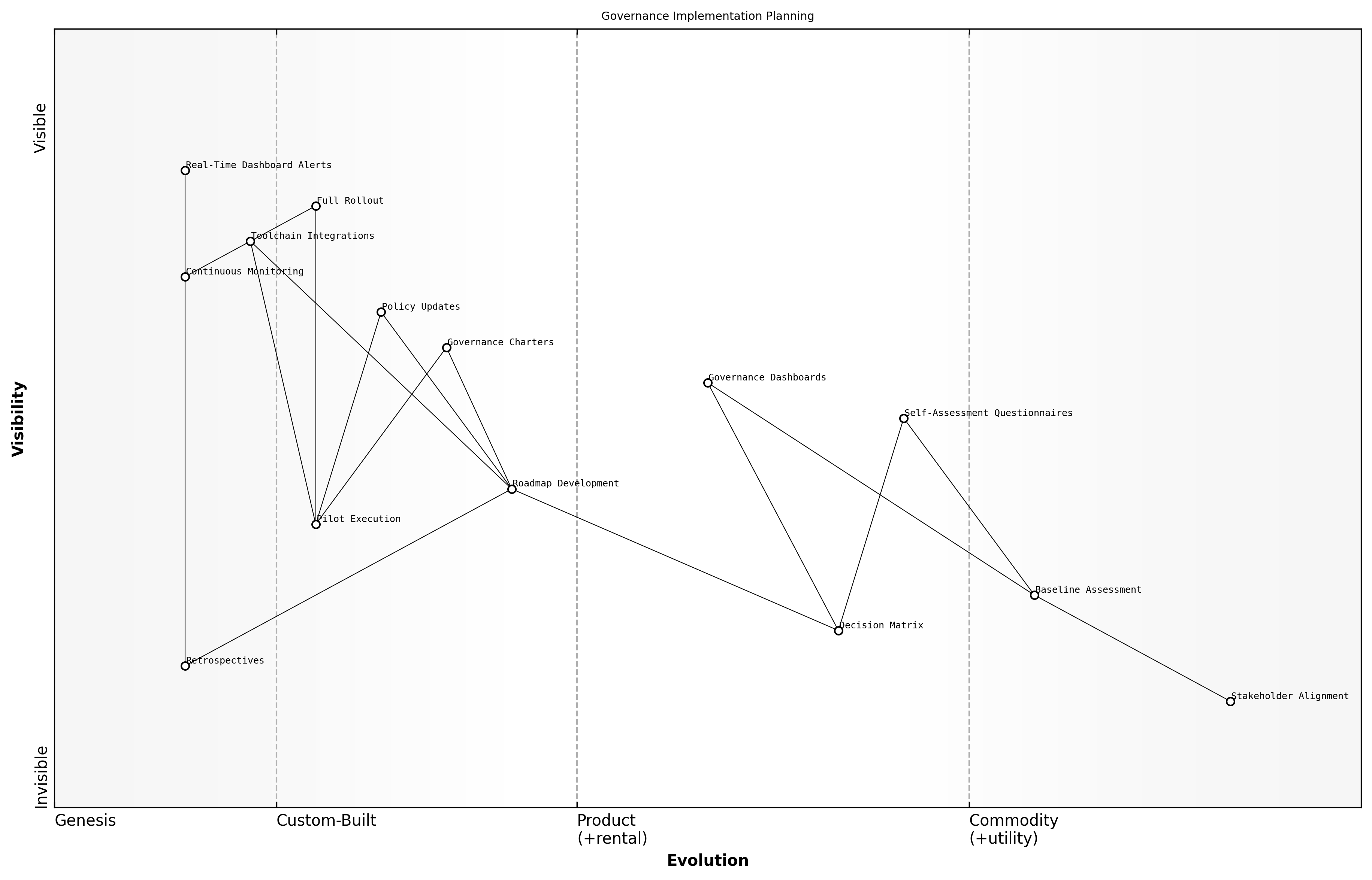

Community governance and health represent the heartbeat of any open source initiative, bridging strategic objectives with the on‑the‑ground contributor experience. A well‑governed community ensures transparency, fosters trust and aligns diverse stakeholders towards common goals. For boards and executives, these dynamics translate directly into risk mitigation, innovation velocity and reputational capital.

- Transparent decision pathways and clear governance charters

- Defined roles and responsibilities for maintainers, contributors and users

- Robust processes for issue triage, pull request review and conflict resolution

- Metrics that balance technical progress with community vitality

- Regular feedback loops between leadership and contributors

Selecting an appropriate governance model—whether benevolent dictator, foundation‑led or hybrid—establishes the structural foundation for healthy community engagement. Governance frameworks must evolve alongside the project lifecycle, enabling scalability without compromising agility. Effective governance also ensures alignment with enterprise policies around compliance, security and intellectual property management.

Building a community health dashboard converts abstract metrics into actionable insights for both technologists and boardroom audiences. Dashboards should present a balanced scorecard, combining quantitative indicators—such as contributor growth, issue resolution times and retention rates—with qualitative signals like sentiment analysis and community feedback summaries. This enables continuous iteration and strategic investment decisions.
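As a minimal sketch of the quantitative half of such a scorecard, the Python below computes contributor growth, retention and median issue resolution time from two periods of activity data. The `Issue` record, the function names and the sample data are hypothetical. In practice the inputs would come from your forge's API or an analytics export, and qualitative signals such as sentiment would sit alongside these numbers.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class Issue:
    """Hypothetical issue record; closed is None while the issue is still open."""
    opened: date
    closed: Optional[date] = None


def contributor_growth(previous: set, current: set) -> float:
    """Relative change in active contributors between two periods."""
    if not previous:
        raise ValueError("previous period has no contributors to compare against")
    return (len(current) - len(previous)) / len(previous)


def retention_rate(previous: set, current: set) -> float:
    """Share of last period's contributors who were active again this period."""
    if not previous:
        raise ValueError("previous period has no contributors to retain")
    return len(previous & current) / len(previous)


def median_resolution_days(issues: list) -> Optional[float]:
    """Median days from opening to closing; open issues are ignored."""
    durations = [(i.closed - i.opened).days for i in issues if i.closed is not None]
    return median(durations) if durations else None


def scorecard(previous: set, current: set, issues: list) -> dict:
    """Bundle the indicators into one row for a dashboard."""
    return {
        "contributor_growth": contributor_growth(previous, current),
        "retention_rate": retention_rate(previous, current),
        "median_resolution_days": median_resolution_days(issues),
    }


if __name__ == "__main__":
    q1 = {"ana", "ben", "chen", "dee"}
    q2 = {"ana", "chen", "eli", "fay", "gus"}
    issues = [
        Issue(date(2024, 1, 1), date(2024, 1, 4)),
        Issue(date(2024, 1, 2), date(2024, 1, 12)),
        Issue(date(2024, 1, 5)),  # still open, so excluded from the median
        Issue(date(2024, 1, 6), date(2024, 1, 8)),
    ]
    print(scorecard(q1, q2, issues))
    # {'contributor_growth': 0.25, 'retention_rate': 0.5, 'median_resolution_days': 3}
```

Growth and retention are reported separately on purpose. A project can add contributors while losing most of its established ones, and a single growth figure would hide that.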

“Monitoring community health ensures strategic alignment with enterprise goals,” says a senior government official.

- Define and track leading and lagging community indicators

- Establish regular governance reviews and retrospectives

- Leverage automated tooling for licence compliance and security scanning

- Foster mentorship programmes to onboard new contributors

- Celebrate milestones and publicise success stories to sustain motivation
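Automated licence checks usually come down to comparing each dependency's declared licence against an organisational policy. The sketch below assumes the dependencies have already been resolved to SPDX licence identifiers, for example by a software composition analysis tool, and sorts them into allowed, needs‑review and unknown buckets. The policy sets are hypothetical examples and are not legal advice. Compound SPDX expressions such as `MIT OR Apache-2.0` are deliberately left as unknown so that a human can assess them.

```python
from typing import Optional

# Hypothetical policy: pre-approved permissive licences, and copyleft or
# weak-copyleft licences that need legal review before use.
ALLOWED = {"MIT", "Apache-2.0", "BSD-2-Clause", "BSD-3-Clause", "ISC"}
NEEDS_REVIEW = {"GPL-2.0-only", "GPL-3.0-only", "AGPL-3.0-only", "LGPL-2.1-only", "MPL-2.0"}


def classify(licence: Optional[str]) -> str:
    """Map one SPDX identifier (or None if undeclared) to a policy bucket."""
    if licence in ALLOWED:
        return "allowed"
    if licence in NEEDS_REVIEW:
        return "review"
    return "unknown"  # undeclared, unrecognised or compound expressions


def scan(dependencies: dict) -> dict:
    """Group dependency names by policy bucket, sorted by name for stable output."""
    report = {"allowed": [], "review": [], "unknown": []}
    for name, licence in sorted(dependencies.items(), key=lambda item: item[0]):
        report[classify(licence)].append(name)
    return report


def passes_gate(report: dict) -> bool:
    """A CI job could fail the build when anything needs review or is unknown."""
    return not report["review"] and not report["unknown"]


if __name__ == "__main__":
    deps = {  # hypothetical dependency names
        "lib-http": "Apache-2.0",
        "lib-json": "MIT",
        "lib-pdf": "AGPL-3.0-only",
        "lib-legacy": None,
    }
    report = scan(deps)
    print(report)
    print("gate passed:", passes_gate(report))
```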

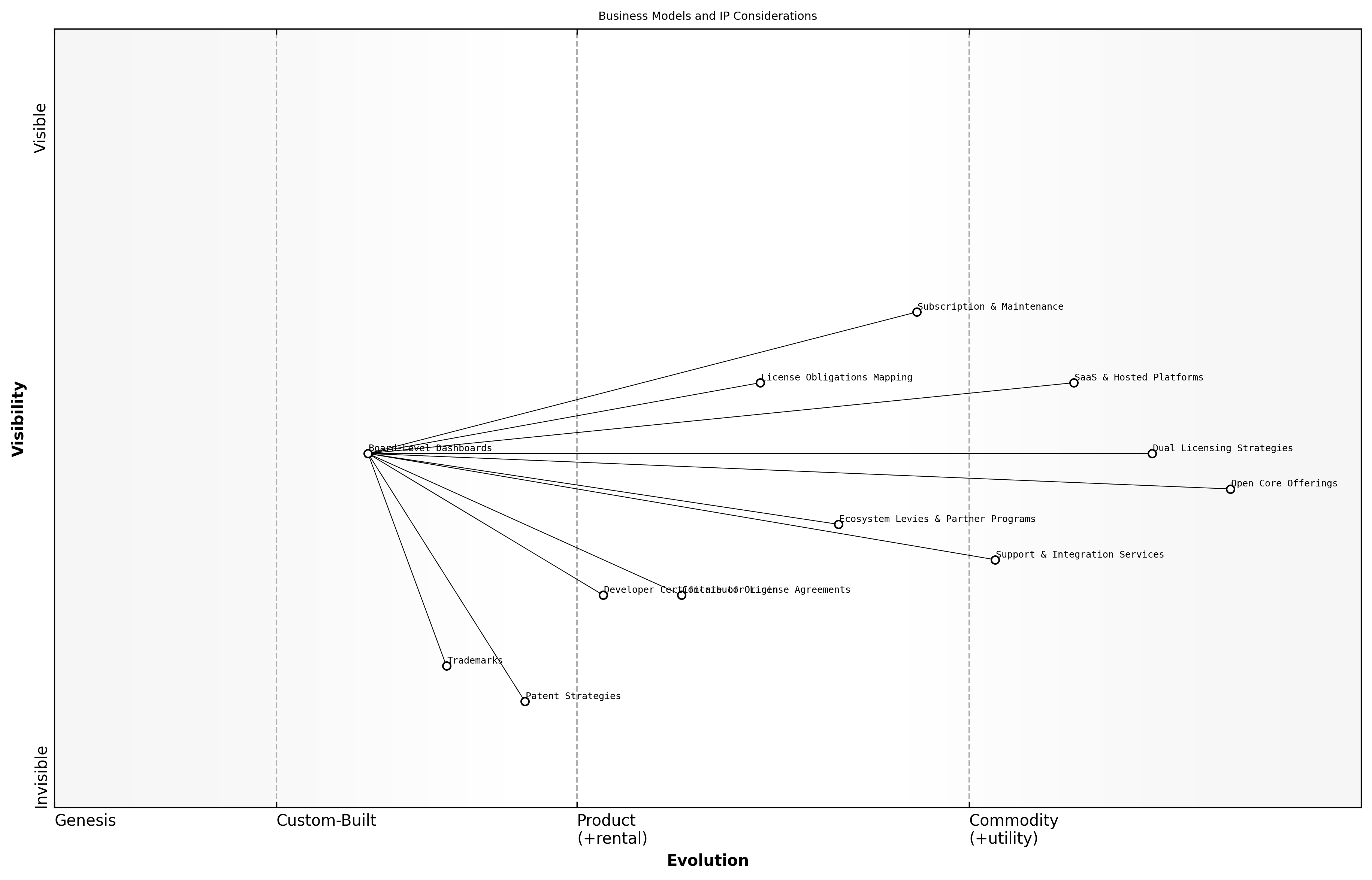

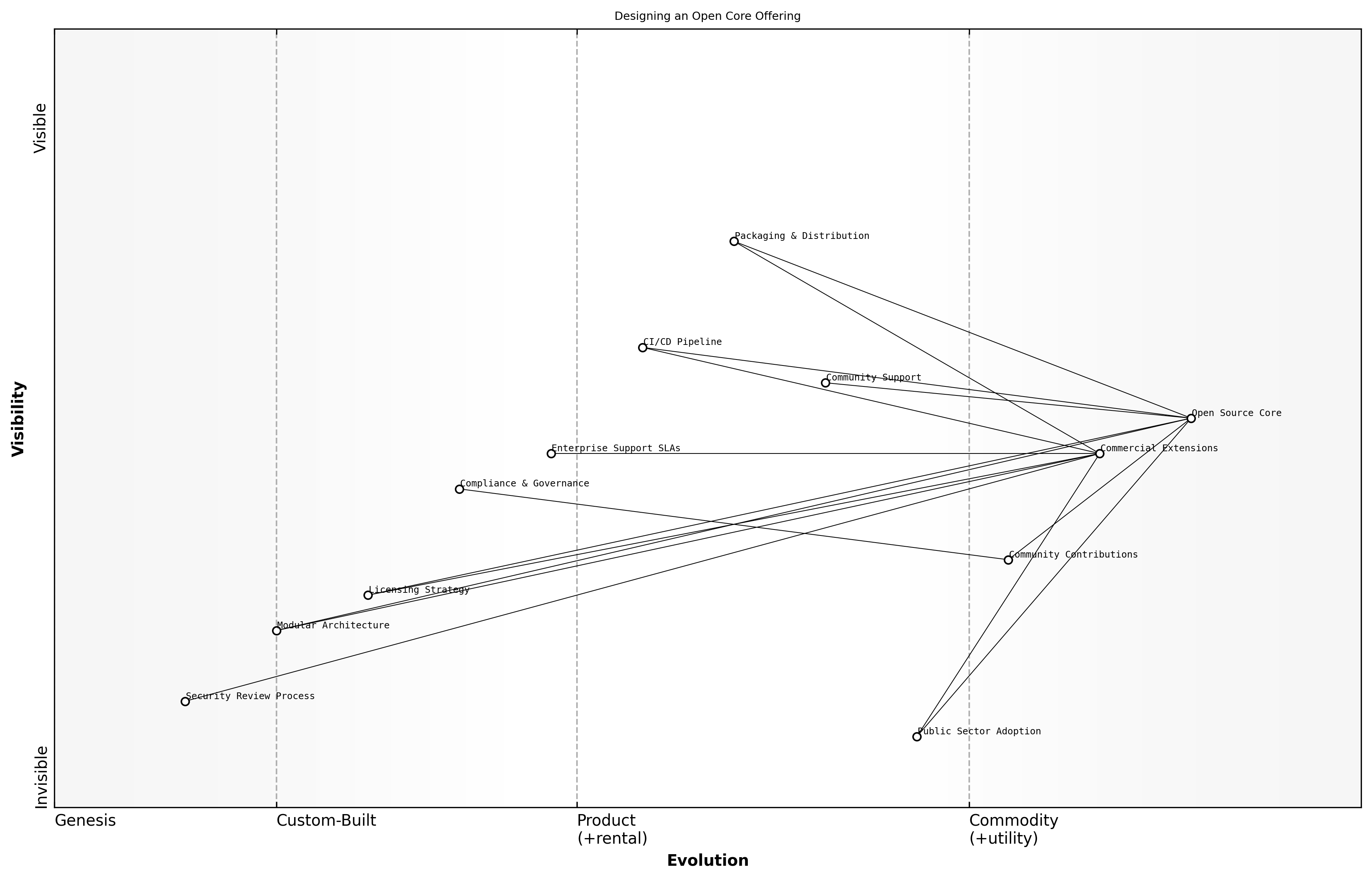

Business models and IP considerations

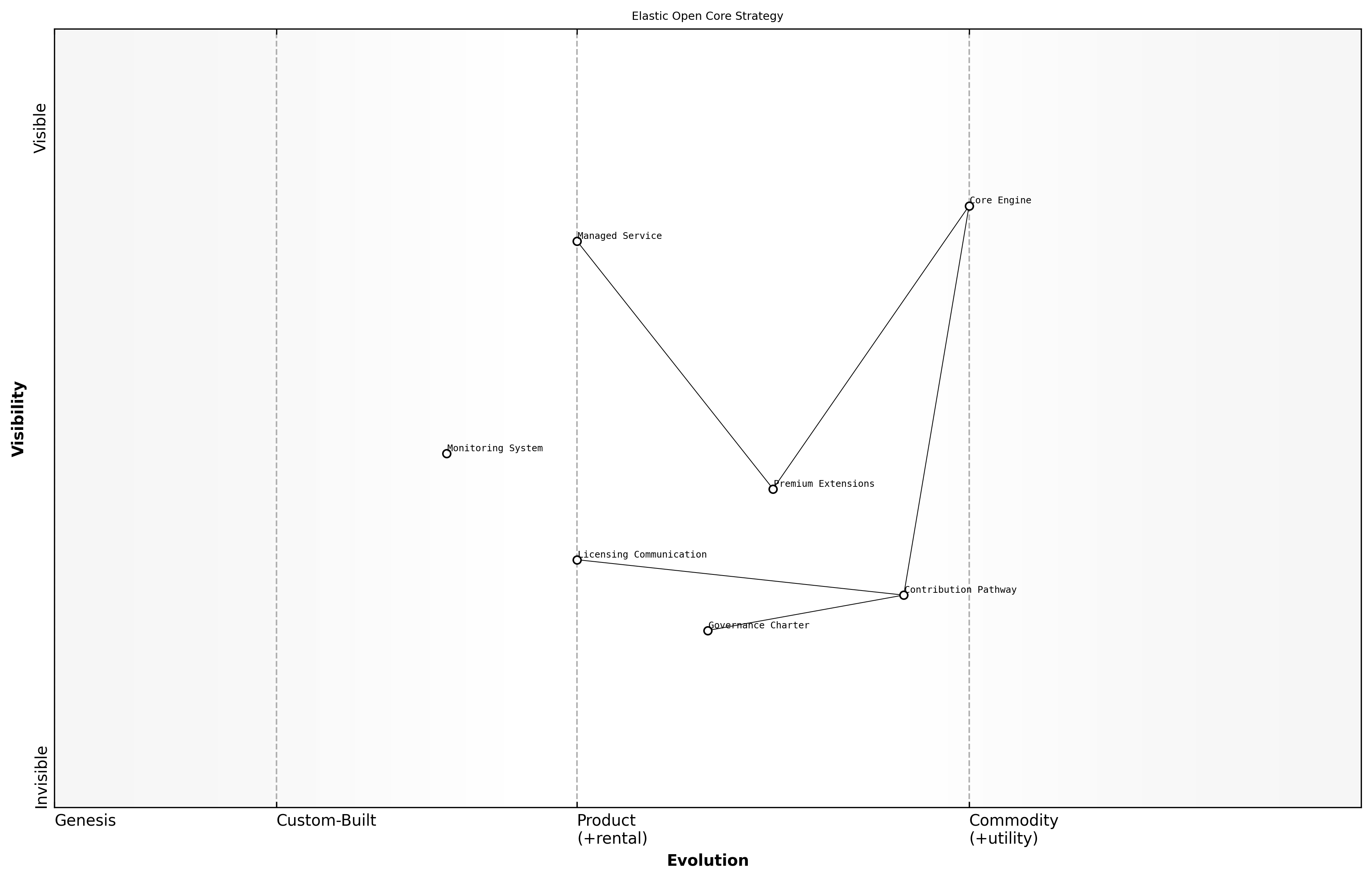

Business models and IP considerations represent the bridge between open source strategy and sustainable value capture. In the context of digital transformation, organisations must design revenue mechanisms that complement community dynamics and implement legal frameworks that preserve trust, minimise risk and align with board‑level objectives.

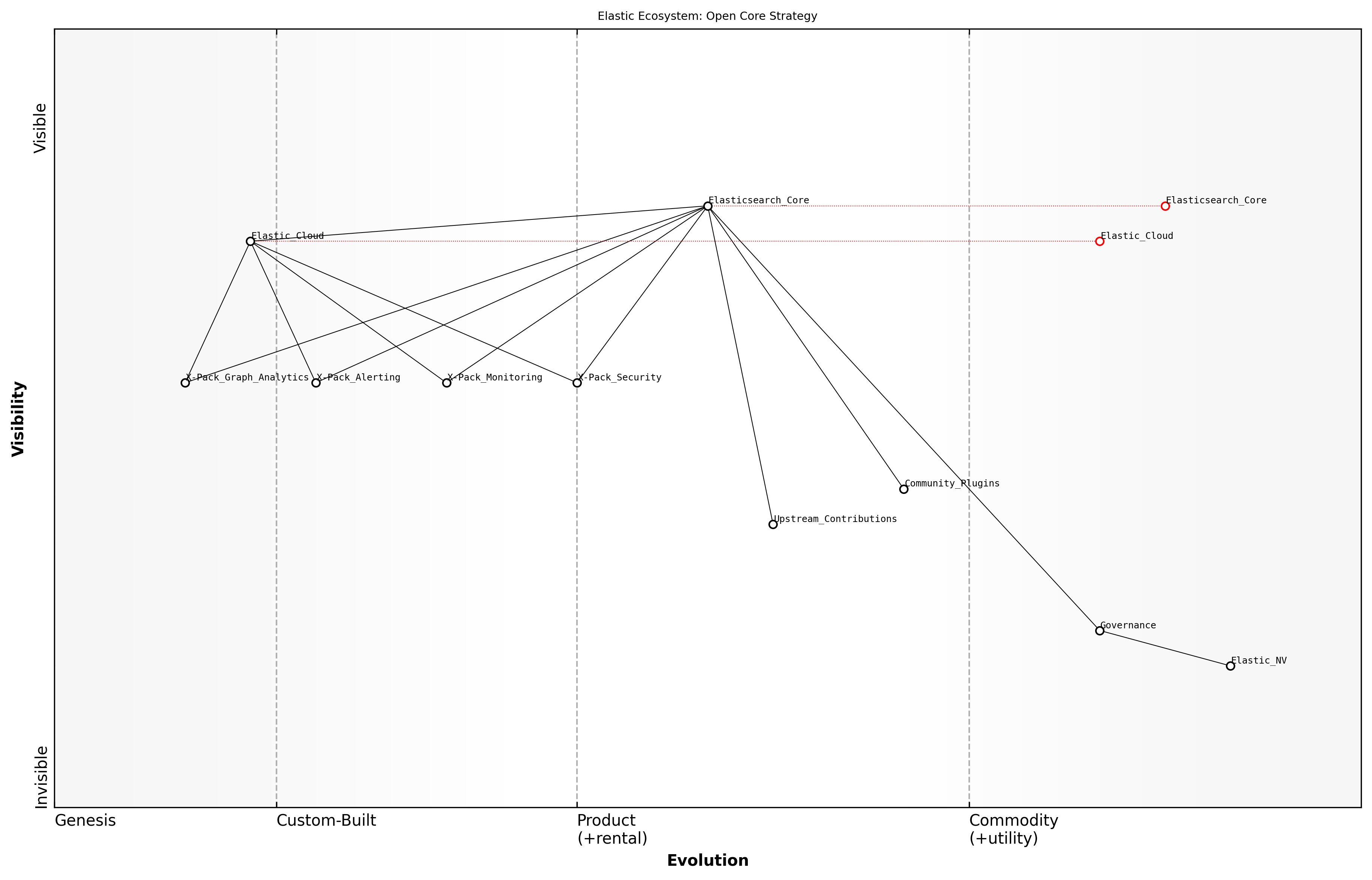

- Open core offerings that differentiate proprietary extensions from community editions

- Dual licensing strategies balancing copyleft and commercial licences

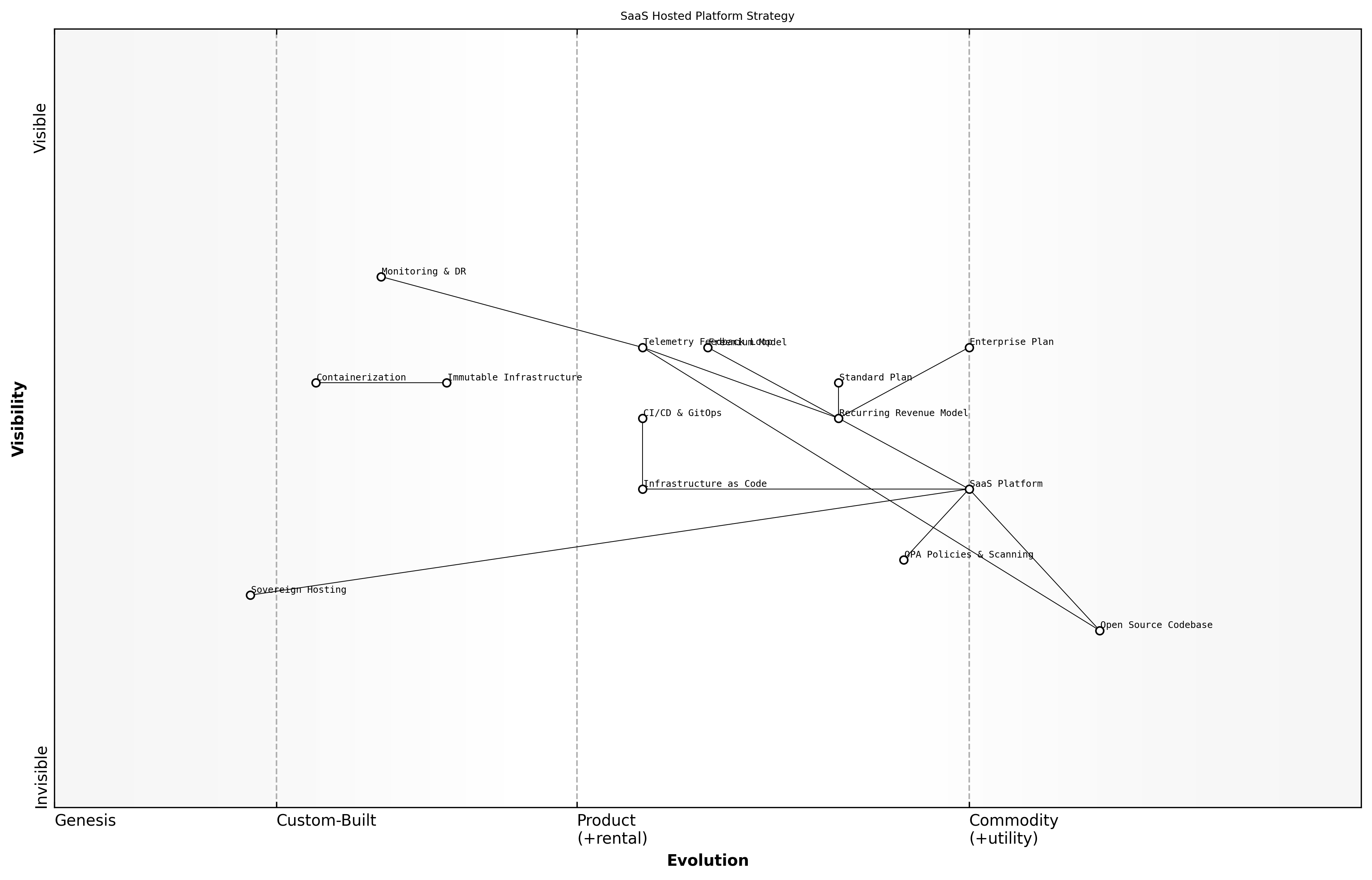

- SaaS and hosted platform plays with tiered feature access

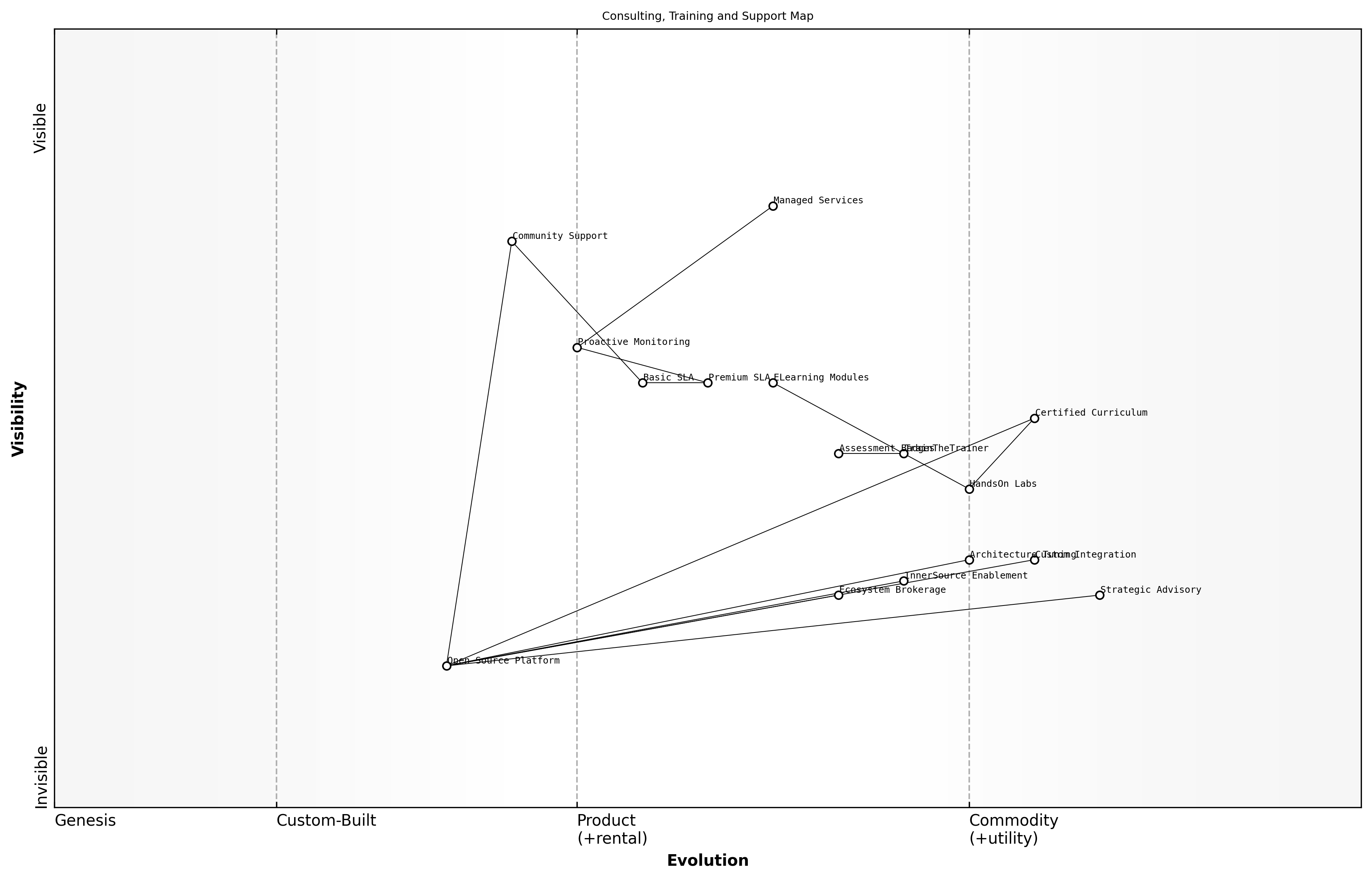

- Support, training and integration services as recurring revenue streams

- Subscription and maintenance models for predictable cash flow

- Ecosystem levies and partner programmes to capture network value

Each business model must align with IP management practices to safeguard compliance and maintain community trust. This involves mapping licence obligations, implementing contributor licence agreements (CLAs) or Developer Certificate of Origin (DCO) processes, and defining patent strategies that deter adversarial filings without stifling innovation.
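DCO processes are typically enforced by checking that every commit carries a `Signed-off-by:` trailer whose email matches the commit author. This is the line that `git commit -s` appends. The Python sketch below runs that check on commit data that has already been extracted. The function names and the `(sha, author_email, message)` tuple shape are assumptions. Production setups generally rely on an existing DCO bot or CI check rather than bespoke code.

```python
import re

# A DCO trailer looks like: "Signed-off-by: Jane Doe <jane@example.org>"
SIGNOFF = re.compile(
    r"^Signed-off-by: (?P<name>.+) <(?P<email>[^>]+)>[ \t]*$", re.MULTILINE
)


def has_valid_signoff(message: str, author_email: str) -> bool:
    """True if any sign-off trailer in the message matches the author's email."""
    return any(
        match.group("email").lower() == author_email.lower()
        for match in SIGNOFF.finditer(message)
    )


def unsigned_commits(commits: list) -> list:
    """Return SHAs of (sha, author_email, message) tuples lacking a valid sign-off."""
    return [sha for sha, email, message in commits if not has_valid_signoff(message, email)]


if __name__ == "__main__":
    commits = [
        ("a1b2c3", "jane@example.org",
         "Fix parser edge case\n\nSigned-off-by: Jane Doe <jane@example.org>"),
        ("d4e5f6", "raj@example.org", "Update docs"),
        ("0718ab", "li@example.org",
         "Add tests\n\nSigned-off-by: Someone Else <other@example.org>"),
    ]
    print(unsigned_commits(commits))  # ['d4e5f6', '0718ab']
```

Emails are compared case‑insensitively as a pragmatic simplification. Stricter policies may also require the signed‑off name to match the author.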

At the board level, dashboards should surface both financial and legal metrics. By integrating business performance indicators with IP risk indicators, leadership teams gain visibility into the health of their open source programme as both a revenue engine and a protected asset.

- Revenue by model (open core vs SaaS vs services)

- Licence compliance incidents and remediation time

- Patent filings and defensive publishing counts

- Contribution ROI (upstream pull requests vs internal investment)

- Community engagement index aligned to commercial uptake
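Several of these indicators become simple aggregations once the underlying records exist. The sketch below computes three of them: revenue by model, mean remediation time for licence incidents, and a crude contribution ratio (merged upstream pull requests per engineer‑day invested). The record shapes and the ratio are illustrative assumptions. A real contribution ROI would weigh the value of each change rather than simply count pull requests.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Optional


def revenue_by_model(deals: list) -> dict:
    """Sum revenue per business model from (model, amount) pairs."""
    totals = defaultdict(float)
    for model, amount in deals:
        totals[model] += amount
    return dict(totals)


def mean_remediation_days(incidents: list) -> Optional[float]:
    """Average days from detection to fix over (found, fixed) pairs; open incidents are skipped."""
    durations = [(fixed - found).days for found, fixed in incidents if fixed is not None]
    return mean(durations) if durations else None


def contribution_ratio(merged_upstream_prs: int, engineer_days: float) -> Optional[float]:
    """Merged upstream pull requests per engineer-day: a rough proxy, not a true ROI."""
    return merged_upstream_prs / engineer_days if engineer_days else None


if __name__ == "__main__":
    deals = [("open core", 100.0), ("SaaS", 250.0), ("services", 80.0), ("open core", 50.0)]
    incidents = [
        (date(2024, 3, 1), date(2024, 3, 11)),
        (date(2024, 3, 5), date(2024, 3, 9)),
        (date(2024, 3, 7), None),  # still open
    ]
    print(revenue_by_model(deals))
    print(mean_remediation_days(incidents))
    print(contribution_ratio(12, 60))
```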

A senior government official highlights the need for legal rigour to balance openness with protection and ensure open source delivers strategic value.

By combining tailored business models with robust IP frameworks, organisations transform open source from a cost‑saving tactic into a sustained competitive weapon. This synergy drives revenue, mitigates legal exposure and strengthens market influence.

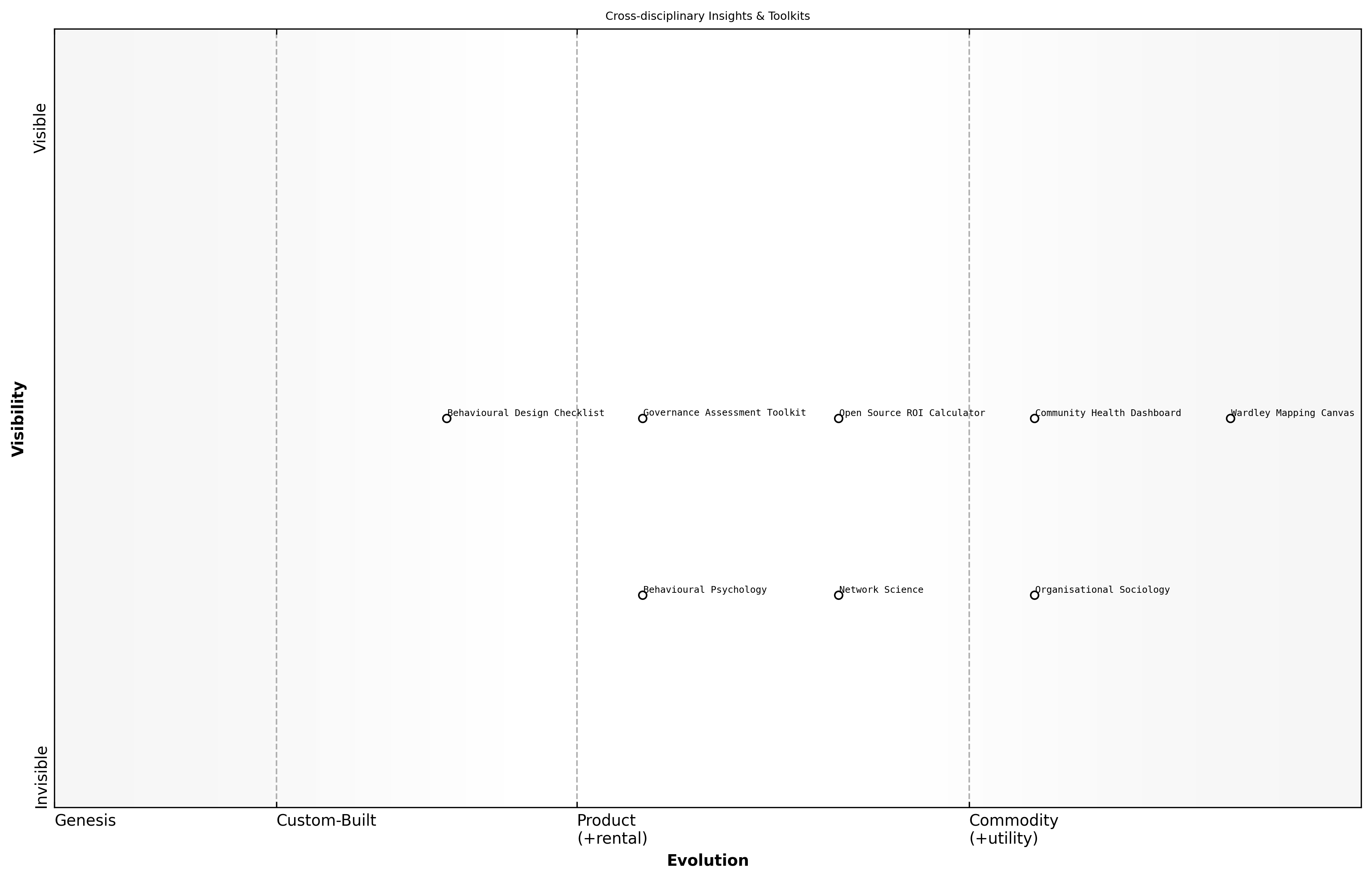

Cross‑disciplinary insights and toolkits

In order to wield open source as a competitive weapon, boards and leadership teams must draw on a diverse set of academic and practical disciplines. Cross‑disciplinary insights bridge high‑level strategy and operational execution, ensuring that governance, community dynamics and commercial models are informed by robust theory and evidence. Toolkits then translate these insights into actionable artefacts that drive consistency and repeatability across initiatives.

- Economic public‑goods theory and commons management for understanding incentives and resource allocation

- Organisational sociology to map power dynamics, roles and informal networks within communities

- Network science to visualise ecosystem topology, identify key hubs and assess resilience

- Behavioural psychology for designing contribution pathways, recognition systems and feedback loops that motivate participants

While theory shapes our understanding of how communities form and evolve, toolkits offer ready‑to‑use templates and methodologies. These artefacts enable executives and programme leads to operationalise cross‑disciplinary concepts quickly, reducing the time from insight to impact and ensuring that strategic intent is embedded in day‑to‑day practices.

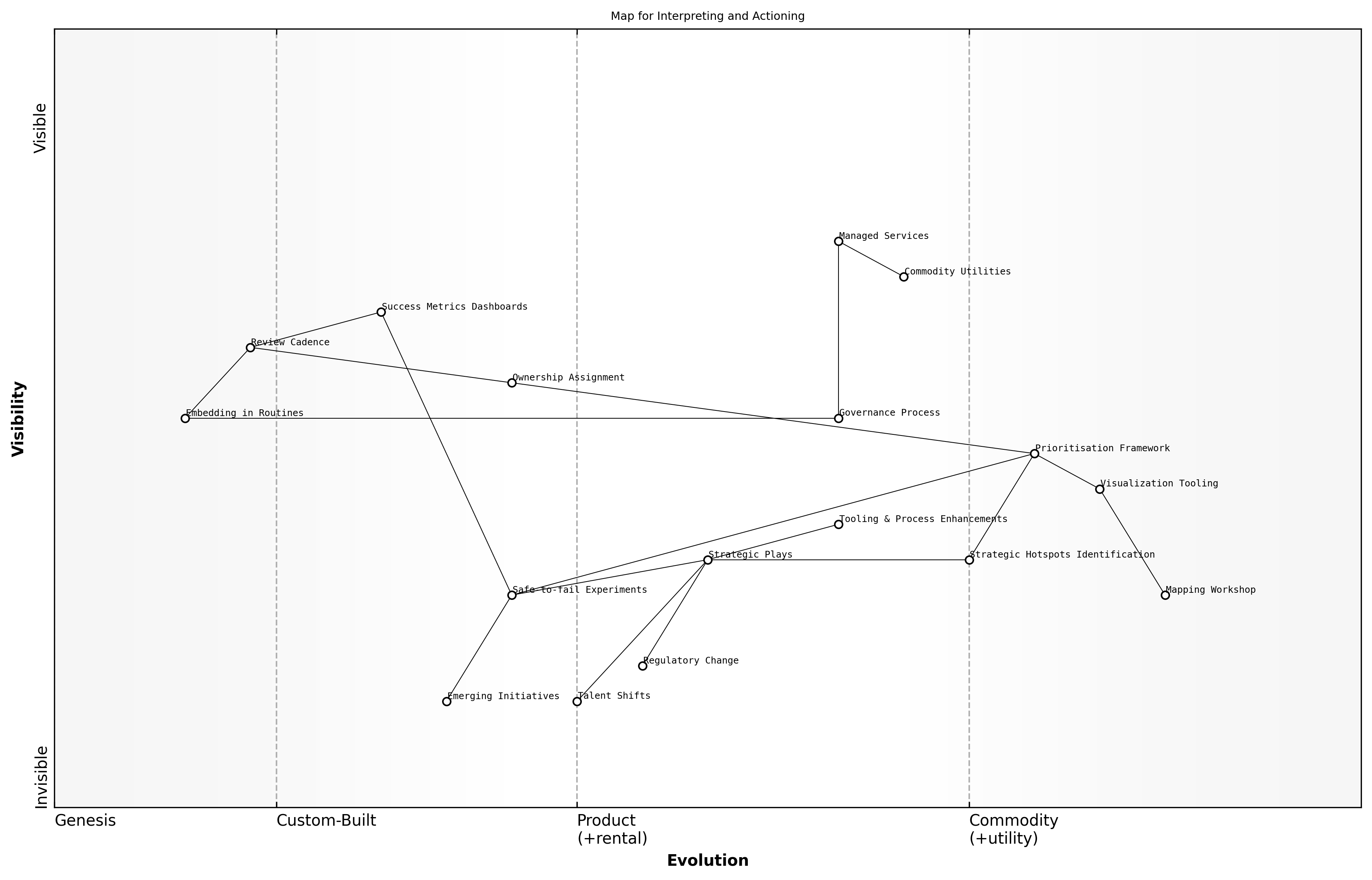

- Wardley Mapping Canvas with custom layers for community maturity and ecosystem influence

- Community Health Dashboard template combining sociological indicators and network metrics

- Open Source ROI Calculator that incorporates public‑goods spillover effects and licence‑related cost avoidance

- Governance Assessment Toolkit featuring decision matrices and legal compliance checklists

- Behavioural Design Checklist for onboarding flows, mentorship programmes and contributor recognition
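The core of an ROI calculator like the one listed above fits in a few lines. The sketch below assumes annual figures in a single currency and uses the conventional formula ROI = (benefit − cost) / cost. The field names are illustrative assumptions. So is the treatment of public‑goods spillover: it is entered as a single estimated value and discounted by a confidence factor, which is not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class OpenSourceInvestment:
    """Annual figures in one currency; all field names are illustrative."""
    licence_fees_avoided: float   # proprietary licence fees no longer paid
    maintenance_avoided: float    # internal maintenance now shared upstream
    spillover_value: float        # estimated value of community improvements received
    contribution_cost: float      # staff time spent on upstream work
    compliance_cost: float        # support contracts, licence review, scanning
    spillover_confidence: float = 0.5  # discount reflecting how uncertain the spillover estimate is

    def total_benefit(self) -> float:
        if not 0.0 <= self.spillover_confidence <= 1.0:
            raise ValueError("spillover_confidence must be between 0 and 1")
        return (self.licence_fees_avoided + self.maintenance_avoided
                + self.spillover_value * self.spillover_confidence)

    def total_cost(self) -> float:
        return self.contribution_cost + self.compliance_cost

    def roi(self) -> float:
        """Conventional ROI: (benefit - cost) / cost."""
        cost = self.total_cost()
        if cost == 0:
            raise ValueError("ROI is undefined when total cost is zero")
        return (self.total_benefit() - cost) / cost


if __name__ == "__main__":
    programme = OpenSourceInvestment(
        licence_fees_avoided=120_000,
        maintenance_avoided=80_000,
        spillover_value=80_000,
        contribution_cost=100_000,
        compliance_cost=50_000,
    )
    print(f"ROI: {programme.roi():.0%}")  # ROI: 60%
```

Exposing `spillover_confidence` as a separate input keeps the most speculative figure visible. A board can then see how much of the headline ROI depends on it.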

A senior government official remarks that combining academic rigour with practical templates transforms open source from an abstract strategy into a replicable and measurable asset.

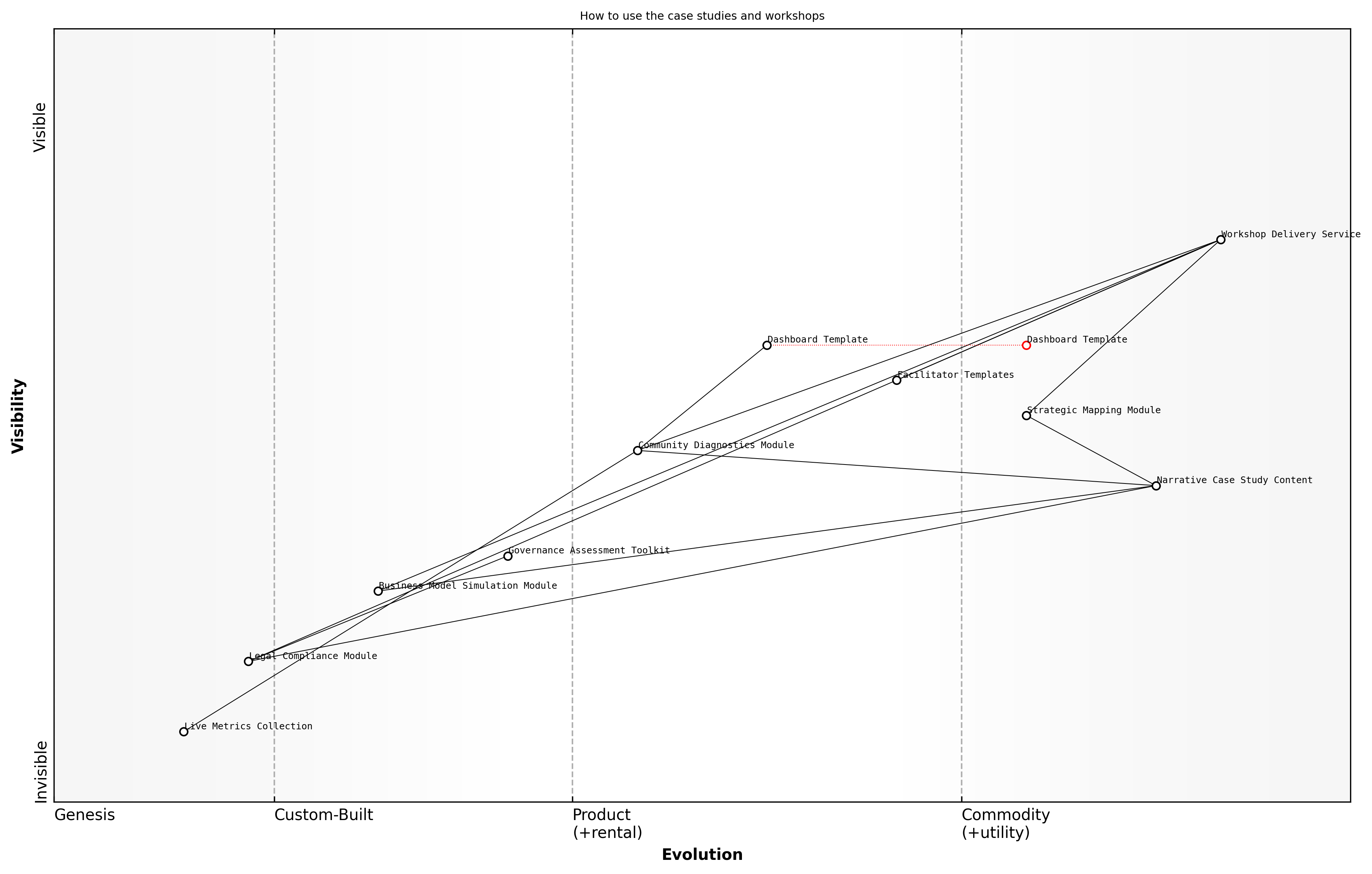

How to use the case studies and workshops

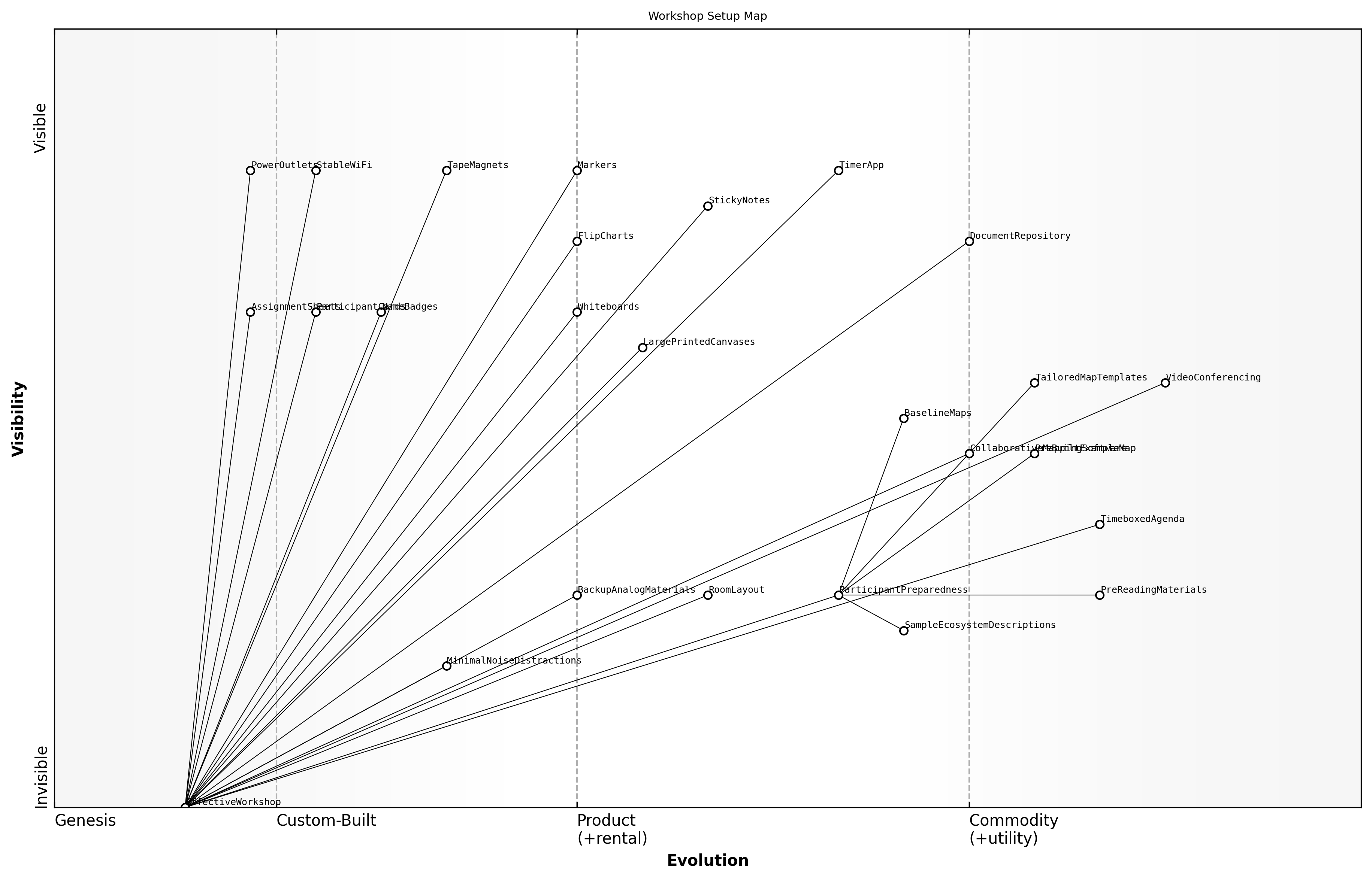

The case studies and workshop guides in this book are designed to bridge theory and practice, enabling executives and open source programme leads to internalise strategic frameworks and apply them to real‑world scenarios. By combining narrative analysis with hands‑on exercises, readers gain both contextual understanding and actionable insights.

Each case study follows a structured format: context and challenge, strategic framework application, outcomes and lessons learned. Workshops then translate these lessons into interactive exercises, reinforcing key principles such as ecosystem mapping, community health assessment and licence strategy design.

- Review the case background and identify the critical decision points where open source acted as a competitive lever

- Map the project’s evolution on a Wardley canvas to visualise component maturity and strategic plays

- Analyse community governance and health metrics to understand contributor dynamics and risk factors

- Evaluate business model choices and IP strategies, drawing on the comparative examples provided

- Facilitate a workshop session using the provided templates to adapt insights to your organisation’s context

Workshops are organised around modular toolkits that correspond to core themes: strategic mapping, community diagnostics, commercial modelling and legal compliance. Facilitators can mix and match exercises to suit time constraints and team composition, ensuring alignment with board‑level priorities and operational realities.

- Strategic Mapping Exercise: use the Wardley Mapping canvas to plot your organisation’s open source portfolio

- Community Health Drill‑Down: populate the dashboard template with live metrics from an active project

- Business Model Simulation: run a role‑play to negotiate open core and dual licensing scenarios

- Compliance Walkthrough: apply the governance assessment toolkit to a sample codebase and identify gaps

“Tailoring the workshop modules to your organisation’s maturity level ensures that strategic insights translate into pragmatic roadmaps,” says a leading expert in the field.

To maximise impact, we recommend running a multi‑day workshop that sequentially addresses mapping, community health and monetisation, punctuated by guided reflections on each case study. This approach nurtures cross‑functional alignment and embeds open source principles deeply into strategic decision‑making.

Chapter 1: Strategic Frameworks and Cross‑Disciplinary Foundations

Wardley Mapping for Open Source Advantage

Core concepts of Wardley Mapping

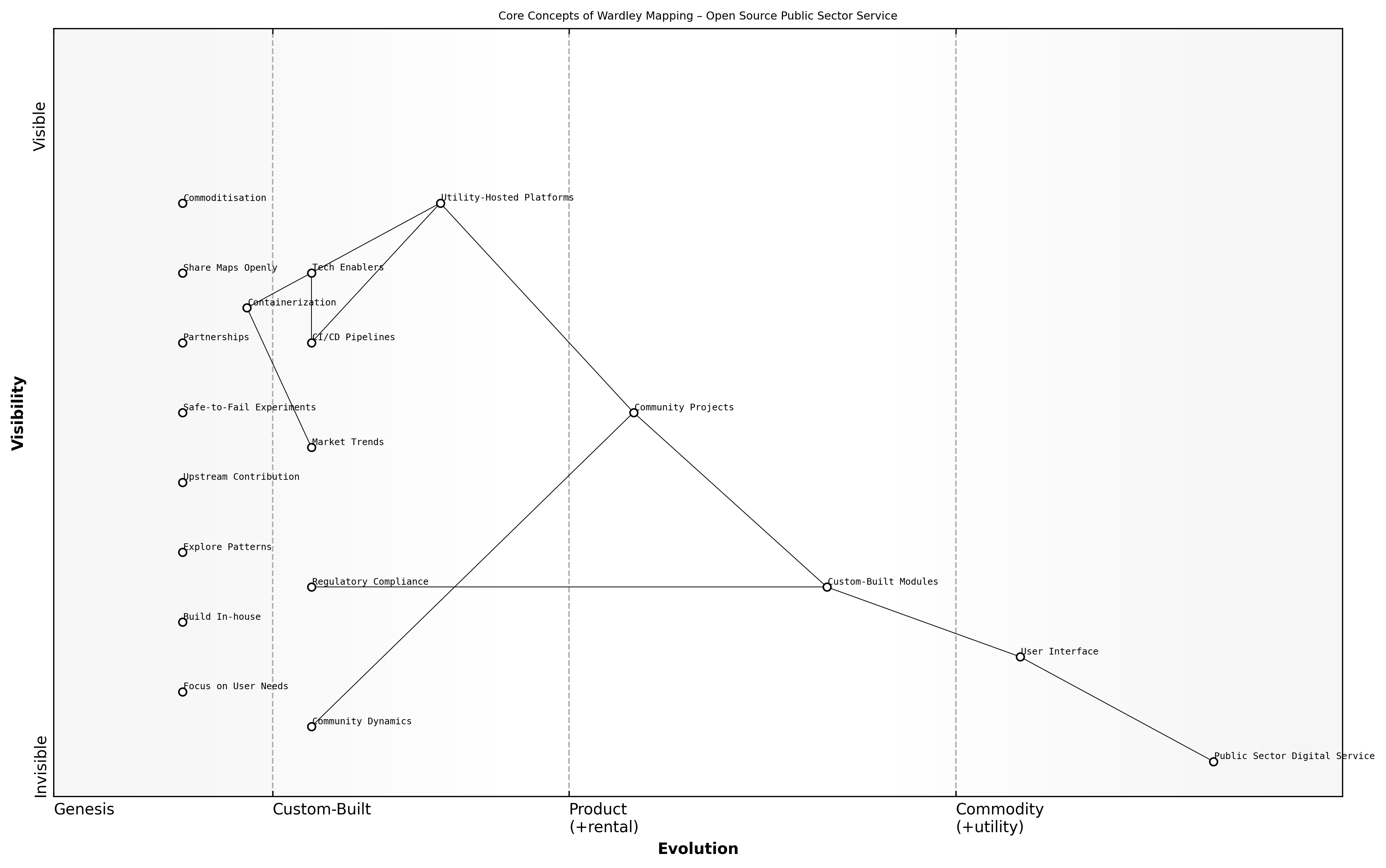

Wardley Mapping offers a dynamic visualisation of an organisation’s landscape, enabling leaders to identify strategic opportunities and risks. In the context of open source, mapping clarifies how components evolve from early innovation to ubiquitous commodity, guiding decisions on where to invest in upstream contributions or where to rely on established community projects.

A Wardley Map is composed of two axes: the value chain (vertical) and evolution (horizontal). The vertical axis orders components by their visibility to the user, with the user need at the top and the activities required to meet it beneath. The horizontal axis tracks maturity from Genesis through Custom Built and Product/Rental to Commodity/Utility.

- Genesis: novel or untested capabilities often found in research or R&D labs

- Custom Built: bespoke solutions tailored to specific user needs

- Product/Rental: standardised offerings available for purchase or lease

- Commodity/Utility: ubiquitous services with high automation and minimal differentiation

Mapping begins with decomposition: breaking down a high‑level user need into its constituent components. This process reveals dependencies and prioritises activities based on strategic importance and evolution stage.

- Identify the anchor user need that drives value

- List all activities required to satisfy that need

- Position each activity along the evolution axis

- Determine dependencies by drawing links between components
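The decomposition steps above map naturally onto a small data structure: components with a position on each axis and links to the components they depend on. The Python sketch below shows one hypothetical representation, with the evolution axis scaled from 0 (Genesis) to 1 (Commodity/Utility). The stage cut‑offs at 0.25, 0.5 and 0.75 are an assumption for illustration, since Wardley Mapping itself does not fix numeric boundaries. The open data portal components are invented.

```python
from dataclasses import dataclass, field

# Assumed cut-offs on a 0..1 evolution axis (not part of the method itself).
STAGE_BOUNDARIES = [(0.25, "Genesis"), (0.5, "Custom Built"), (0.75, "Product/Rental")]


def stage(evolution: float) -> str:
    """Translate an evolution coordinate into its named stage."""
    if not 0.0 <= evolution <= 1.0:
        raise ValueError("evolution must be between 0 and 1")
    for upper, name in STAGE_BOUNDARIES:
        if evolution < upper:
            return name
    return "Commodity/Utility"


@dataclass
class Component:
    name: str
    visibility: float  # value chain position: 1.0 = seen by the user, 0.0 = hidden
    evolution: float   # 0.0 = Genesis ... 1.0 = Commodity/Utility
    depends_on: list = field(default_factory=list)


def decompose(components: list, anchor: str) -> list:
    """Depth-first walk from the anchor, returning (component, stage) pairs.

    Raises KeyError if a dependency link points at a component not yet on the map.
    """
    by_name = {c.name: c for c in components}
    seen, order = set(), []

    def visit(name: str) -> None:
        if name in seen:
            return
        if name not in by_name:
            raise KeyError(f"unmapped dependency: {name}")
        seen.add(name)
        order.append((name, stage(by_name[name].evolution)))
        for dep in by_name[name].depends_on:
            visit(dep)

    visit(anchor)
    return order


if __name__ == "__main__":
    open_data = [
        Component("Open data portal", 0.95, 0.45, ["Search", "Data store"]),
        Component("Search", 0.6, 0.7, ["Compute"]),
        Component("Data store", 0.5, 0.8, ["Compute"]),
        Component("Compute", 0.1, 0.95),
    ]
    for name, named_stage in decompose(open_data, "Open data portal"):
        print(f"{name}: {named_stage}")
```

Raising an error on an unmapped dependency turns the final step into a check. The map is not complete until every link points at a component that has been positioned.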

The climate layer captures external factors that influence component evolution. In open source projects, climate includes community trends, regulatory mandates and technological shifts.

- Community dynamics such as contributor growth or attrition

- Regulatory changes affecting licence compliance or data sovereignty

- Market trends, for instance the rise of containerisation or AI

- Technological enablers like CI/CD pipelines or cloud‑native platforms

Doctrines are guiding principles and best practices that apply across all maps. They ensure consistency and focus, helping teams navigate complexity even when specifics differ.

- Focus on user needs not solutions

- Explore patterns and commonalities across maps

- Adopt safe‑to‑fail experiments to reduce risk

- Share maps openly to build communal understanding

Motion refers to the strategic plays that move components along the map. Organisations can decide to build in‑house, contribute upstream, form partnerships or commoditise offerings, depending on position and ambition.

- Migrating custom‑built capabilities into community‑driven projects

- Commoditising mature components via managed services

- Chaining components to create integrated value streams

- Exploiting locality advantage by hosting or contributing regionally

“Mapping the evolution of each component revealed where to shift development upstream and where to consume community offerings,” says a senior government official.

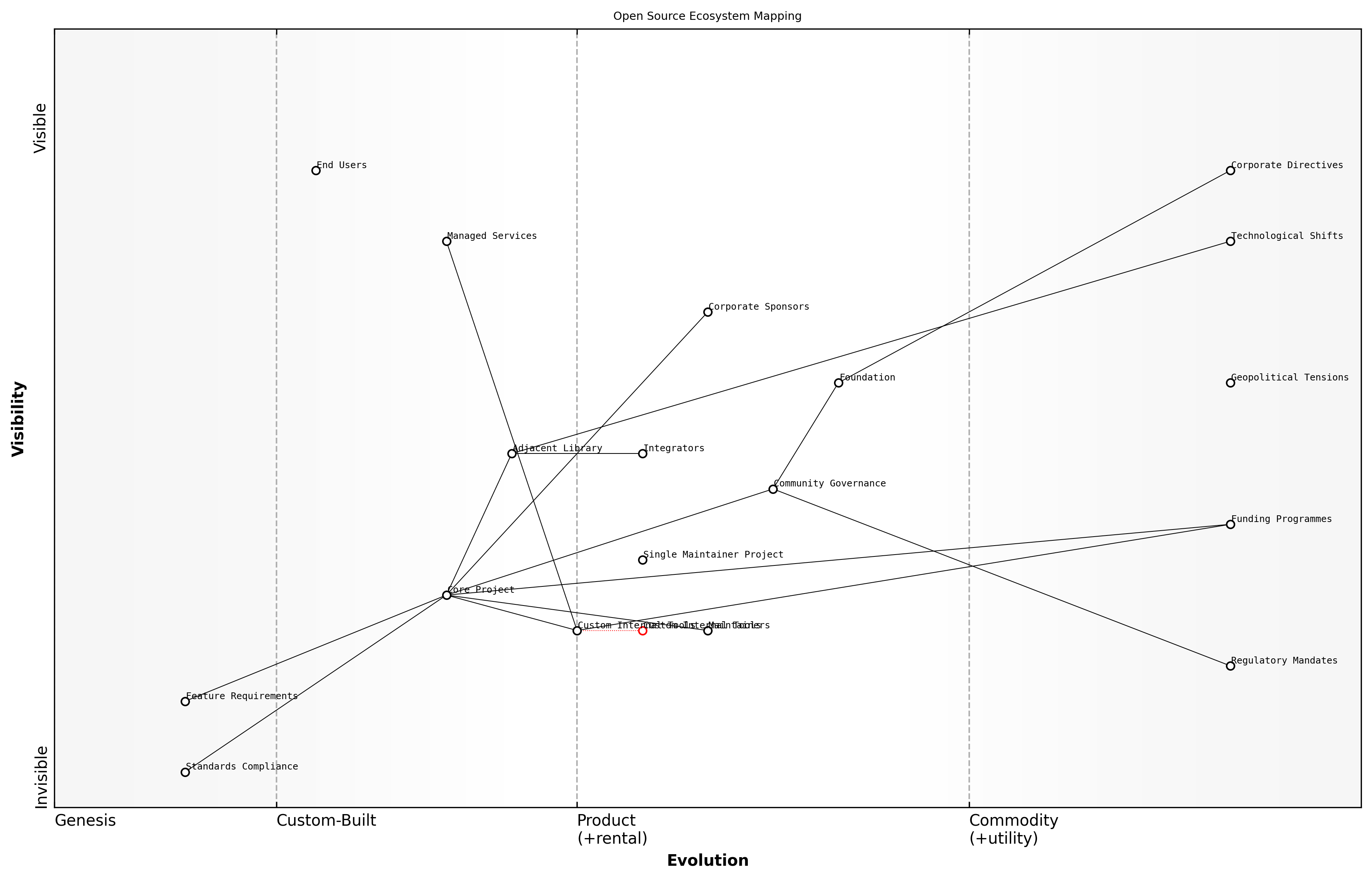

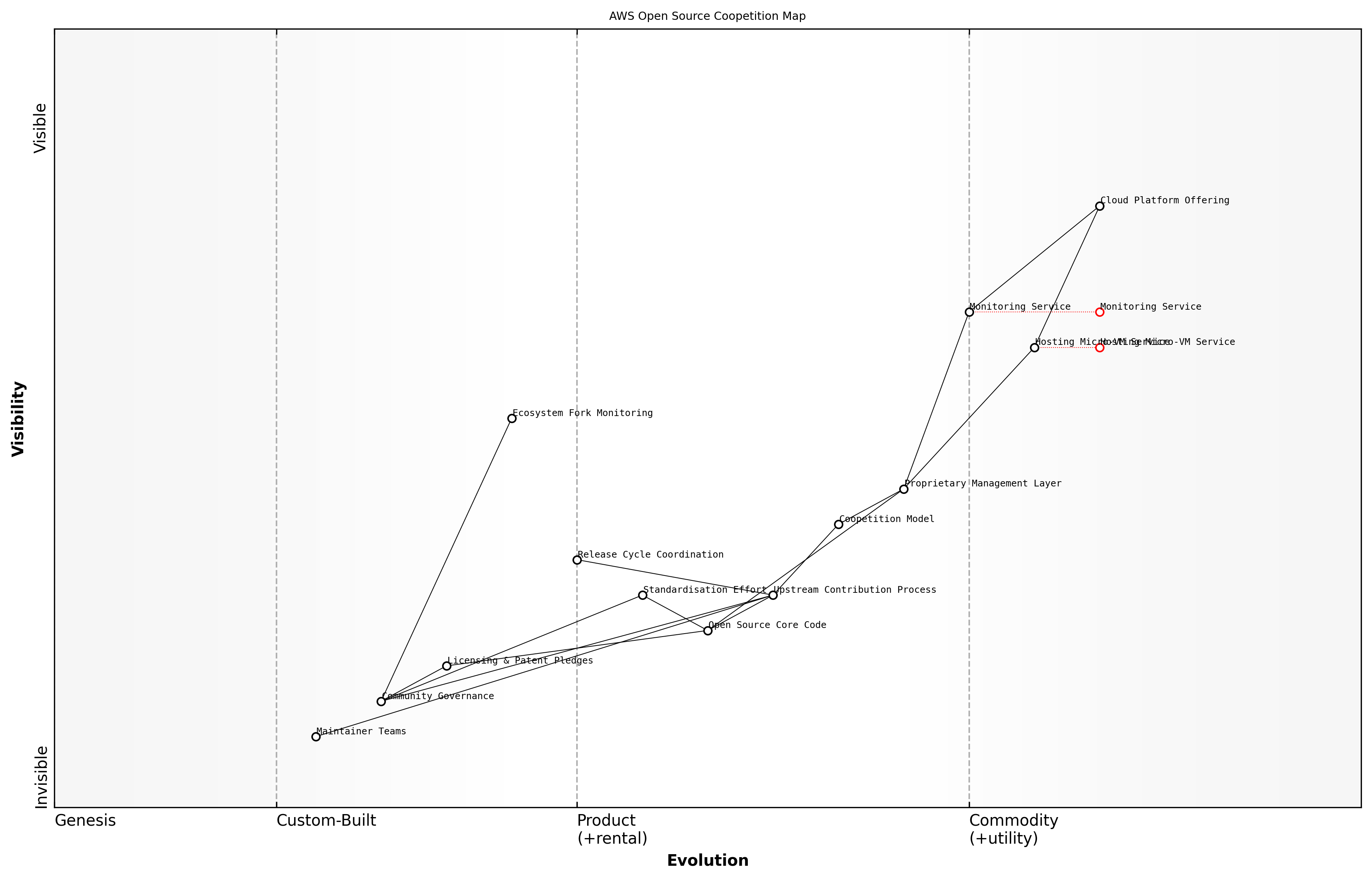

Mapping an open source ecosystem

Mapping an open source ecosystem extends the core principles of Wardley Mapping from individual components to the entire landscape of projects, contributors, governance bodies and downstream adopters. This holistic perspective enables leaders to visualise interdependencies and strategic dynamics across the ecosystem rather than isolated modules.

By decomposing the ecosystem into value chain activities—from project inception through to commoditised infrastructure—we reveal how community health, governance models and market forces interact. This approach directly supports open source as a competitive weapon by pinpointing where to invest in upstream contributions, where to commoditise services and where to cultivate partnerships.

- Define ecosystem scope, including core projects, adjacent libraries and hosting platforms

- Identify user needs and anchor points such as standards compliance or feature requirements

- List all ecosystem participants: maintainers, corporate sponsors, integrators and end users

- Position each participant and activity along the evolution axis from Genesis to Commodity

- Draw dependencies to illustrate flows of code, governance decisions and resource contributions

The climate layer in an ecosystem map captures external influences that shape community evolution. Regulatory mandates, funding programmes, geopolitical tensions and emerging technology trends all exert pressure on where projects move along the evolution axis and how contributors allocate effort.

- Regulatory changes impacting licence compliance and data sovereignty

- Funding and grant initiatives that seed new projects in Genesis

- Geopolitical considerations influencing hosting location or contributor access

- Technological shifts such as containerisation or AI that drive adoption

- Corporate strategic directives that prioritise certain repositories or foundations

Interpreting the ecosystem map reveals strategic plays such as migrating custom internal tools into community projects, commoditising mature components via managed services or forming foundations to steward mid‑stage technologies. The map guides resource allocation to high‑impact areas and highlights potential single points of failure.

“Visualising the entire open source ecosystem accelerates alignment between technical teams and boardroom strategists,” says a senior government official.

Practical considerations for ecosystem mapping include updating the map at regular intervals, involving cross‑functional stakeholders (legal, security, procurement) and aligning mapping workshops with release cadences and community governance meetings.

- Identify strategic gaps where ecosystem stewardship can yield competitive differentiation

- Mitigate risks by spotting dependencies on immature or single‑maintainer projects

- Prioritise upstream contribution investments to accelerate project maturity

- Inform partnership and funding decisions based on ecosystem topology

- Enhance cross‑team collaboration by providing a shared visual reference
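Once the ecosystem map is held as data, spotting dependencies on immature or single‑maintainer projects can be partly automated. The sketch below walks every transitive dependency of a root project. It flags any dependency with at most one active maintainer, or with an evolution position in the Genesis range. The `Project` fields, the 0.25 Genesis cut‑off and the sample ecosystem are hypothetical. In practice, maintainer counts would come from repository activity data.

```python
from dataclasses import dataclass, field

GENESIS_CUTOFF = 0.25  # assumed boundary between Genesis and Custom Built


@dataclass
class Project:
    name: str
    maintainers: int   # active maintainers over a recent window
    evolution: float   # 0.0 = Genesis ... 1.0 = Commodity/Utility
    depends_on: list = field(default_factory=list)


def transitive_dependencies(ecosystem: dict, root: str) -> set:
    """All projects reachable from root by following dependency links."""
    stack, seen = list(ecosystem[root].depends_on), set()
    while stack:
        name = stack.pop()
        if name not in seen:
            seen.add(name)
            stack.extend(ecosystem[name].depends_on)
    return seen


def risk_report(ecosystem: dict, root: str) -> dict:
    """Map each risky dependency to the reasons it was flagged."""
    flags = {}
    for name in sorted(transitive_dependencies(ecosystem, root)):
        project = ecosystem[name]
        reasons = []
        if project.maintainers <= 1:
            reasons.append("single maintainer")
        if project.evolution < GENESIS_CUTOFF:
            reasons.append("immature (Genesis)")
        if reasons:
            flags[name] = reasons
    return flags


if __name__ == "__main__":
    projects = [
        Project("platform", 6, 0.6, ["parser", "crypto"]),
        Project("parser", 1, 0.5, ["tokenizer"]),
        Project("crypto", 8, 0.9),
        Project("tokenizer", 2, 0.1),
    ]
    ecosystem = {p.name: p for p in projects}
    for name, reasons in risk_report(ecosystem, "platform").items():
        print(f"{name}: {', '.join(reasons)}")
```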

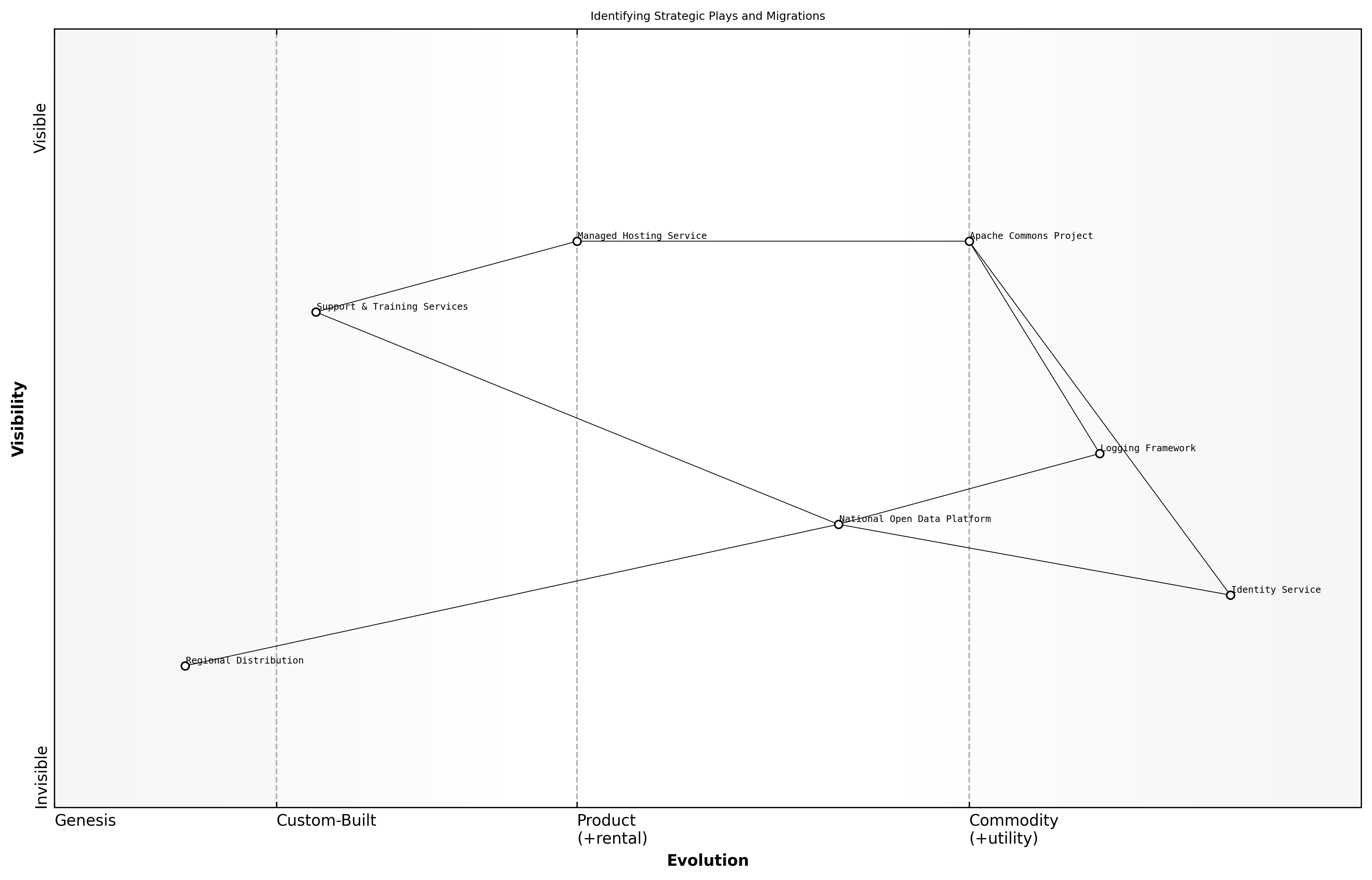

Identifying strategic plays and migrations

In the context of open source as a competitive weapon, identifying strategic plays and migrations is critical for leadership teams. Wardley Mapping reveals not only where components reside on the evolution axis but also the possible motions—known as plays—that organisations can execute to reshape their landscape. By consciously selecting plays, public sector bodies and enterprises align investment, community engagement and risk management towards strategic objectives.

- Contribute upstream to accelerate maturity of Custom Built components and reduce internal maintenance overhead

- Commoditise as a service by offering managed hosting for Commodity utilities, capturing recurring revenue and strengthening ecosystem influence

- Chain components into integrated platforms, bundling open source modules to deliver end‑to‑end solutions that competitors struggle to replicate

- Migrate in‑house builds into community‑driven projects, shifting maintenance burden and benefiting from collective innovation

- Localise or sovereignise critical utilities by forking or tailoring regional distributions to meet data sovereignty and security mandates

- Harvest value from mature components through tiered support and training services, balancing open access with premium offerings

Each play aligns with a specific phase on the Wardley Map evolution axis. For instance, a novel capability in Genesis may warrant in‑house development and targeted upstream contributions to seed a new community. Conversely, a Product/Rental offering can be commoditised as a hosted service when automation and scale make proprietary differentiation unsustainable.

Consider a national open data platform that migrated its bespoke logging framework into the Apache Commons project. By contributing extensively upstream, the agency reduced maintenance costs by 40 percent, benefited from a broader security review and laid the foundation for a new managed payroll integration service that generated revenue.

- Component maturity and user dependency profiles

- Health and governance of the target open source community

- Regulatory constraints around licence compatibility and data residency

- Internal capability to manage contributor relationships and code reviews

- Commercial impact of commoditising versus retaining proprietary control

- Alignment with broader interoperability and sovereign capability mandates
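A weighted decision matrix is one way to make these factors explicit when choosing between plays. Score each candidate play against each factor, weight the factors by importance, and rank the totals. The weights, the 1 to 5 scoring scale and the example scores below are hypothetical and would normally come out of a facilitated workshop. The matrix structures the debate; it does not replace judgement.

```python
# Hypothetical weights for the six factors listed above (they sum to 1.0).
WEIGHTS = {
    "component_maturity": 0.25,
    "community_health": 0.20,
    "regulatory_fit": 0.20,
    "internal_capability": 0.15,
    "commercial_impact": 0.10,
    "sovereignty_alignment": 0.10,
}


def weighted_score(scores: dict) -> float:
    """Combine 1-5 factor scores for one play into a single weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weight * scores[factor] for factor, weight in WEIGHTS.items())


def rank_plays(candidates: dict) -> list:
    """Return (play, score) pairs, best first."""
    ranked = [(play, round(weighted_score(scores), 2)) for play, scores in candidates.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    candidates = {
        "Contribute upstream": {
            "component_maturity": 5, "community_health": 4, "regulatory_fit": 4,
            "internal_capability": 3, "commercial_impact": 3, "sovereignty_alignment": 4,
        },
        "Sovereign fork": {
            "component_maturity": 3, "community_health": 2, "regulatory_fit": 5,
            "internal_capability": 2, "commercial_impact": 2, "sovereignty_alignment": 5,
        },
    }
    for play, score in rank_plays(candidates):
        print(f"{play}: {score}")
```

Because the weights are explicit, stakeholders can argue about them directly. Nudging one weight and re‑ranking is a quick way to test whether the preferred play is robust.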

“Open source migrations allow agencies to focus on value creation rather than reinventing the wheel,” says a senior government official.

Selecting the right play requires cross‑disciplinary insight. Economic public‑goods theory guides the understanding of upstream investment returns, while organisational sociology highlights power dynamics when forking or localising community projects. Network science can identify critical hubs for influence, and behavioural psychology informs recognition systems that motivate contributors during migration phases.

Porter’s Five Forces Meets Lean Startup

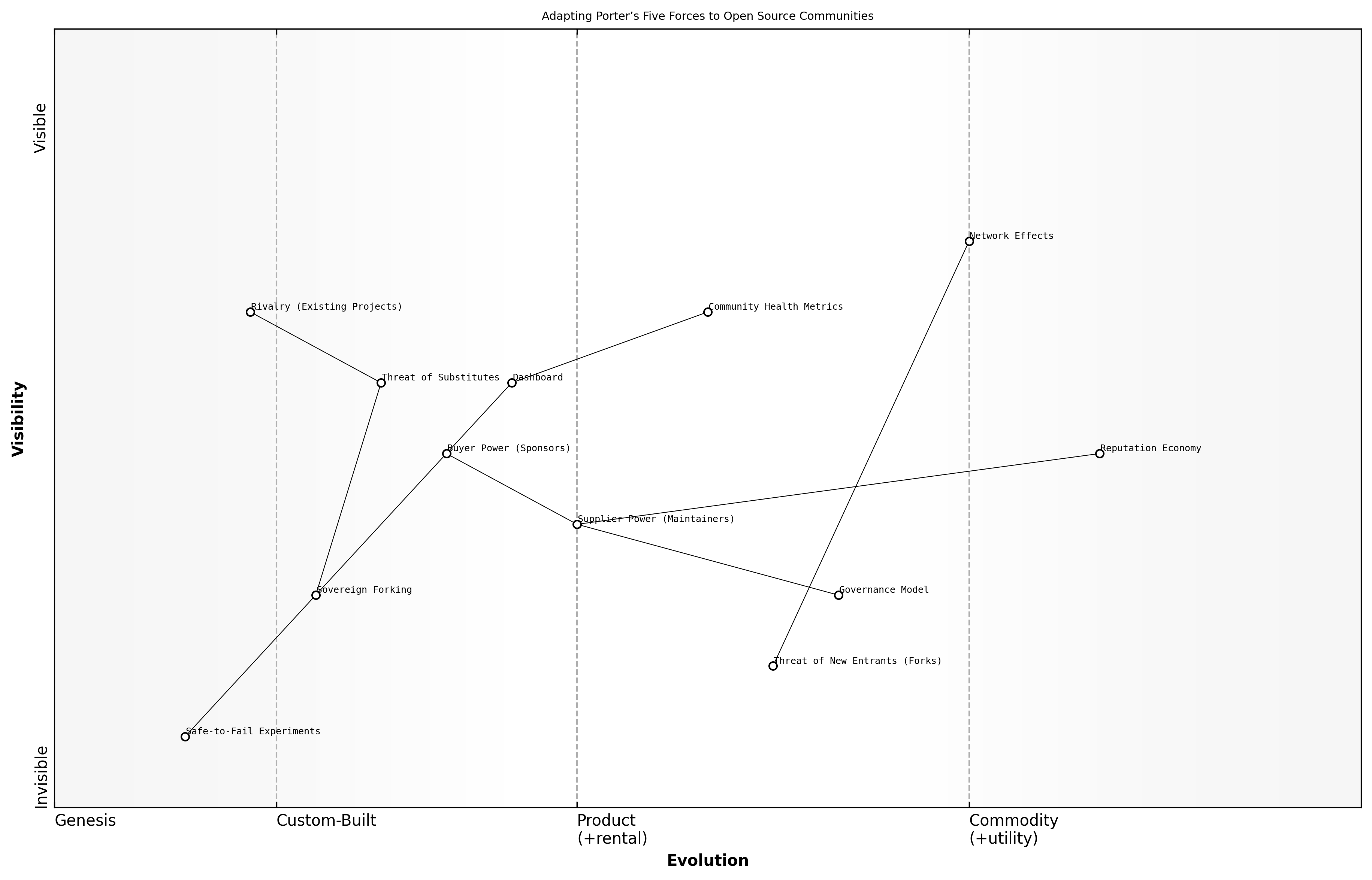

Adapting Porter’s model to communities

Open source communities are more than code repositories and mailing lists: they are dynamic ecosystems in which organisations, individual contributors and end users interact under shifting power relationships. Adapting Porter’s Five Forces to this context provides a structured way to analyse competitive pressures, collaboration opportunities and risks that shape community health and strategic outcomes.

Porter’s original model identifies five forces that determine industry profitability: threat of new entrants, bargaining power of suppliers, bargaining power of buyers, threat of substitute products or services, and intensity of competitive rivalry. In open source communities, these forces manifest differently, demanding a reinterpretation that accounts for the public‑goods nature of code, network effects and governance structures.

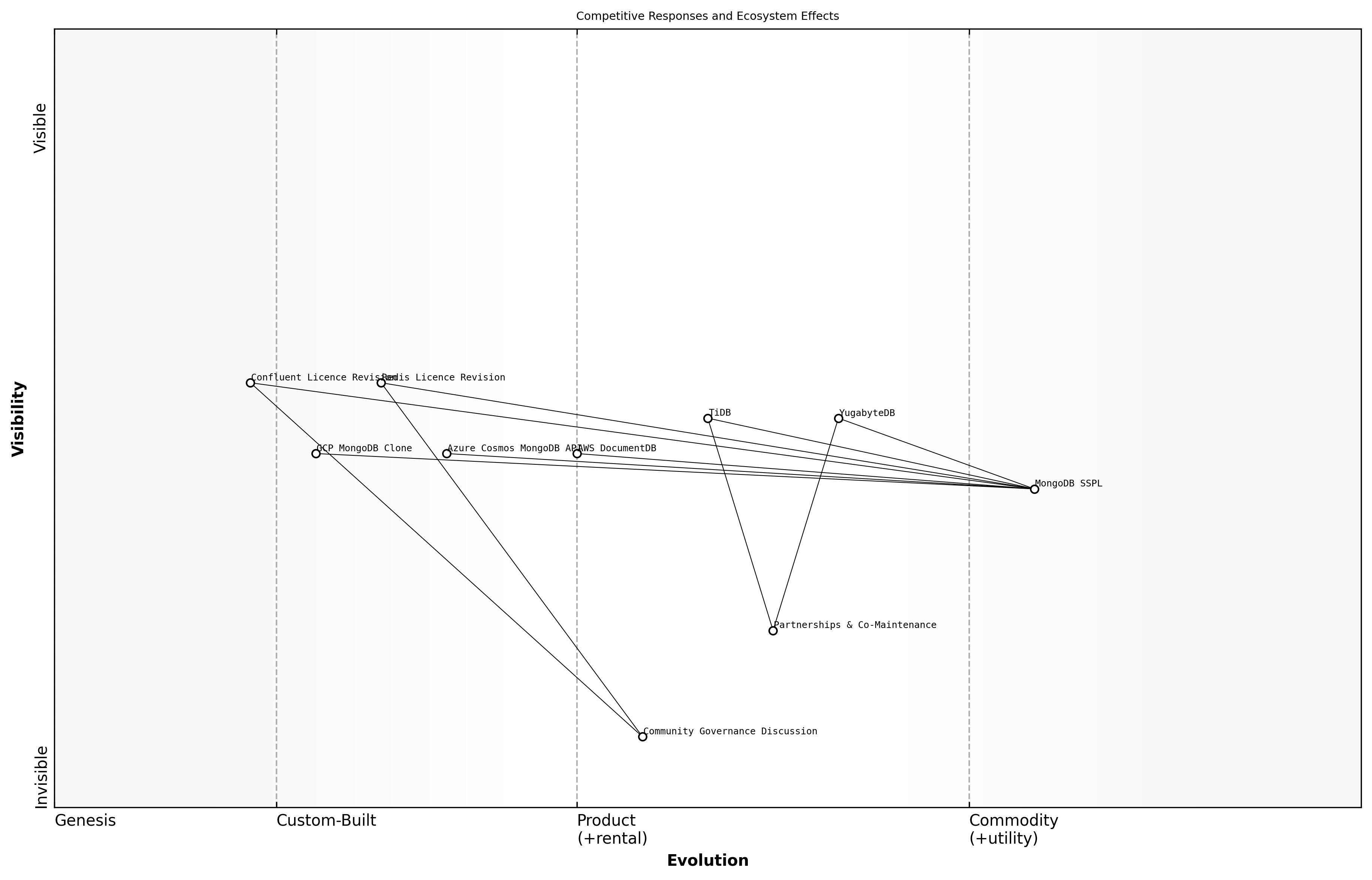

- Threat of new entrants becomes the risk of forked projects or new communities forming around adjacent technologies, potentially drawing away contributors and users.

- Bargaining power of suppliers maps to the influence of key maintainers and core contributors whose decisions on roadmap, licensing and governance can shape project direction.

- Bargaining power of buyers reflects downstream organisations and integrators that adopt, package or host the software, whose support commitments and funding can sway feature priorities.

- Threat of substitutes covers alternative libraries, frameworks or proprietary offerings that address similar use cases, affecting contributor retention and community growth.

- Rivalry among existing projects encapsulates competition for mindshare, funding, contributor time and ecosystem integrations across similar open source initiatives.

However, open source communities also introduce unique dynamics. Contributors are both suppliers and customers of code; they vote with pull requests, issue reporting and governance participation. Decision pathways are transparent, and forking acts as both a competitive incentive and a safety valve against stagnation or unfavourable governance changes.

- Network effects as a barrier to entry: large, active communities deter new forks by offering rich plugin ecosystems and rapid issue resolution.

- Reputation economy: individual contributors build social capital, influencing their bargaining power within governance bodies and sponsor organisations.

- Governance model as a force multiplier: foundation‑led or hybrid governance can stabilise supplier power by diluting single‑maintainer influence and opening formal voting rights.

- Community health metrics: visible indicators such as contributor growth, issue backlog age and merge times affect perceptions of rivalry and substitute risk.

- Sovereign forking: public sector bodies may fork projects to meet regulatory or data‑sovereignty mandates, reconfiguring threat of substitutes in regional markets.

To operationalise this adapted model, practitioners should define measurable indicators for each force and integrate them into a community dashboard. This enables continuous monitoring of shifts in contributor behaviour, adoption trends and governance disputes, providing an early‑warning system for strategic decision‑makers.

- Number of new forks and divergence in feature branches (threat of new entrants)

- Concentration ratio of code contributions among top n% of maintainers (supplier power)

- Volume and monetary value of corporate sponsorships or paid support contracts (buyer power)

- Rate of migration to alternative projects or proprietary replacements (substitute threat)

- Comparative commit activity and release cadence across similar open source initiatives (competitive rivalry)
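As a minimal sketch of one such indicator, the supplier-power concentration ratio can be computed from a plain list of commit authors (obtainable, for example, with `git log --format=%ae`). The function name, the input shape and the choice to round the "top n%" group up to at least one person are illustrative assumptions, not a standard definition.

```python
from collections import Counter
from math import ceil


def concentration_ratio(commit_authors: list[str], top_share: float = 0.1) -> float:
    """Share of all commits made by the top `top_share` fraction of contributors.

    `commit_authors` holds one author identifier per commit. Higher values
    suggest greater supplier power concentrated in a few maintainers.
    """
    if not 0 < top_share <= 1:
        raise ValueError("top_share must be in the interval (0, 1]")
    if not commit_authors:
        return 0.0
    counts = Counter(commit_authors)
    # Size of the "top n%" group, rounded up so it always holds at least one person.
    top_n = max(1, ceil(len(counts) * top_share))
    top_commits = sum(count for _, count in counts.most_common(top_n))
    return top_commits / len(commit_authors)


# Illustrative data: 100 commits spread across five contributors.
commits = ["alice"] * 60 + ["bob"] * 20 + ["carol"] * 10 + ["dan"] * 5 + ["erin"] * 5
print(concentration_ratio(commits, top_share=0.2))  # 0.6: the top 20% (one person) made 60 of 100 commits
```

Tracked release by release, a rising ratio would point to growing dependence on a few maintainers.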

“By reframing Porter’s forces through a community lens, organisations can identify where to invest in governance reforms, contributor incentives or integration partnerships to shift the balance of power in their favour,” says a leading strategist in open source.

This community‑centric interpretation of Porter’s model dovetails with Lean Startup principles by emphasising validated learning through continuous measurement. Running safe‑to‑fail experiments—such as trial governance changes or sponsored contributor programmes—allows teams to test hypotheses about force dynamics, iterate rapidly and refine their competitive positioning within the open source ecosystem.

Lean Startup principles in open source projects

Open source projects often navigate high uncertainty around feature viability, contributor engagement and downstream adoption. Lean Startup principles provide a structured approach to reduce risk through rapid experimentation, validated learning and iterative development cycles tailored to community dynamics.

- Build a minimal viable release by identifying the smallest set of features that address a core user need and publishing an alpha or beta version

- Measure community response through issue volume, pull request feedback, download counts and sentiment in discussion forums

- Learn by analysing data and qualitative feedback to refine the roadmap, pivot modules, or double down on promising features

Applying innovation accounting in an open source context means choosing metrics that reflect both technical progress and community health. By focusing on actionable indicators, project maintainers can demonstrate value to sponsors and adapt priorities based on real‑world evidence rather than assumptions.

- Cycle time from issue creation to merged pull request to measure development speed

- Number of active contributors per release to assess community engagement

- Download or installation metrics to gauge adoption and identify popular features

- Rate of new issues and feature requests to understand user pain points
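The first two indicators can be sketched in a few lines of Python, assuming issue-creation and merge timestamps have already been paired up and commits have been tagged with the release they shipped in. The input shapes and function names are hypothetical; the median is used because a few long-running issues can skew the mean.

```python
from datetime import datetime
from statistics import median


def cycle_times_days(pairs: list[tuple[datetime, datetime]]) -> list[float]:
    """Days from issue creation to the merge of the pull request that closed it."""
    return [
        (merged - created).total_seconds() / 86400
        for created, merged in pairs
        if merged >= created  # skip inconsistent records rather than report negative times
    ]


def active_contributors_per_release(commits: list[tuple[str, str]]) -> dict[str, int]:
    """Count distinct authors per release from (release_tag, author) pairs."""
    authors_by_release: dict[str, set[str]] = {}
    for release, author in commits:
        authors_by_release.setdefault(release, set()).add(author)
    return {release: len(authors) for release, authors in authors_by_release.items()}


pairs = [
    (datetime(2024, 1, 1), datetime(2024, 1, 4)),
    (datetime(2024, 1, 2), datetime(2024, 1, 3)),
    (datetime(2024, 1, 5), datetime(2024, 1, 15)),
]
print(median(cycle_times_days(pairs)))  # 3.0
print(active_contributors_per_release(
    [("v1.0", "alice"), ("v1.0", "bob"), ("v1.0", "alice"), ("v1.1", "carol")]
))  # {'v1.0': 2, 'v1.1': 1}
```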

“Adopting lean experiments in an open source project accelerates learning while keeping contributor motivation high,” says a leading strategist in open source.

Experimentation fosters a culture of safe‑to‑fail prototypes, encouraging contributors to propose novel ideas without fear of wasted effort. Over time, small validated wins compound into a robust roadmap aligned with both community needs and strategic objectives.

[Insert Lean Startup Experiment Canvas illustrating build‑measure‑learn cycles in an open source project]

Integrating both frameworks for maximum insight

By uniting Porter’s Five Forces with Lean Startup principles, organisations gain a dual lens that combines competitive analysis with rapid experimentation. This integrated approach helps uncover hidden risks in an open source community while iteratively testing interventions to shift the balance of power in your favour.

- Map each force to a set of testable hypotheses that address community dynamics and market pressures

- Design minimal viable experiments (MVEs) to probe supplier power, substitute threats and competitive rivalry

- Embed community health and performance metrics into build–measure–learn cycles

- Use validated learning to adapt governance, contribution pathways and licensing strategies

- Prioritise safe‑to‑fail pilots in areas with high impact and high uncertainty

A practical four‑step process brings these ideas to life:

- Select the force you wish to influence (for example, reduce the bargaining power of key maintainers).

- Frame a Lean experiment by defining an MVE (such as a streamlined mentorship programme).

- Measure outcomes with both force‑related indicators (contributor concentration ratio) and Lean metrics (cycle time from issue to merge).

- Learn and iterate by adjusting incentives, governance charters or tooling based on real‑world evidence.

[Insert Combined Framework Matrix illustrating how each of Porter’s forces aligns with build–measure–learn stages and corresponding community metrics]

Integrating both frameworks into a single dashboard enables board‑level oversight with aligned KPIs. For instance, tracking a supplier_power_index alongside average merge cycle time reveals whether core contributors are becoming bottlenecks or if community resilience is improving.

```yaml
metrics:
  supplier_power_index: top_5_contributor_percentage
  cycle_time: average_time_to_merge
experiments:
  - hypothesis: lifting entry barriers will reduce threat_of_substitutes
    mve: simplified contribution guide
    success_metric: 20% increase in new contributors per sprint
```
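The success criterion in this configuration can be checked with a simple relative-uplift comparison. This sketch assumes per-sprint counts of new contributors for a baseline period and for the pilot period; it compares means only and does not test statistical significance, so small samples should be read with caution. Function names and sample numbers are illustrative.

```python
from statistics import mean


def relative_uplift(baseline: list[float], trial: list[float]) -> float:
    """Relative change in the mean of `trial` compared with `baseline`."""
    if not baseline or not trial:
        raise ValueError("both periods need at least one observation")
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean is zero, so a relative uplift is undefined")
    return (mean(trial) - base) / base


def experiment_succeeded(baseline: list[float], trial: list[float], target: float = 0.20) -> bool:
    """True if the trial period beats the baseline by at least `target` (0.20 = 20%)."""
    return relative_uplift(baseline, trial) >= target


# New contributors per sprint before and during the simplified-guide pilot (illustrative numbers).
before = [5, 4, 6]
during = [7, 6, 8]
print(relative_uplift(before, during))       # 0.4
print(experiment_succeeded(before, during))  # True
```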

“Combining competitive forces analysis with lean experimentation delivers actionable insights that boards can trust,” says a leading strategist in open source.

Cross‑Disciplinary Lenses

Economic public‑goods theory and commons management

Economic public‑goods theory provides the conceptual foundation for understanding open source as a non‑rivalrous, non‑excludable resource. By treating code and documentation as public goods, organisations unlock network effects that drive innovation velocity and ecosystem resilience.

Traditional public‑goods economics identifies two core properties that apply directly to open source projects. Recognising these properties helps leaders design governance models and funding mechanisms that sustain healthy communities and guard against under‑provision or over‑use.

- Non‑rivalry: one user’s consumption of code does not diminish its availability to others

- Non‑excludability: projects licensed under permissive or copyleft terms remain accessible to all

- Positive network externalities: each additional contributor or adopter increases overall value

Commons management theory, pioneered by Elinor Ostrom, offers design principles for stewarding shared resources without centralised control or privatisation. Open source communities embody these principles by instituting transparent processes, defined roles and collective decision‑making.

- Clearly defined boundaries for contributor and user roles

- Congruence between rules and local conditions, for example a code of conduct aligned with project goals

- Collective decision‑making through meritocratic or democratic governance

- Graduated sanctions for rule violations, such as moderation steps before removal

- Conflict resolution mechanisms that preserve trust and minimise fragmentation

- Monitoring and accountability via public dashboards and transparent issue tracking

“Effective commons management transforms a software project from a collection of individual efforts into a self‑sustaining ecosystem,” says a leading expert in the field.

Integrating public‑goods theory with open source governance enables organisations to identify funding gaps, calibrate contribution incentives and measure the health of the commons. These insights inform strategic plays such as upstream contributions or localised forks to meet sovereign requirements.

In practice, government and public‑sector bodies can apply commons management by matching resource provisioning with contributor incentives and embedding governance charters into procurement contracts. This approach ensures long‑term sustainability and reduces reliance on proprietary vendors.

- Establish public funding pools to cover critical maintenance tasks

- Implement contributor licence agreements that respect community norms

- Integrate community health metrics into performance dashboards

- Offer mentorship stipends to lower barriers for new contributors

- Set up polycentric governance councils to distribute decision authority

- Conduct periodic audits of licence compliance and code quality

“By viewing open source contributions as investments in a shared public good, organisations shift from short‑term gains to enduring competitive advantage,” says a senior government official.

```yaml
commons_metrics:
  total_contributors: integer
  active_maintainers: integer
  funding_allocated: currency
  issues_closed_per_month: integer
  governance_meetings_held: integer
```
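One way to keep this schema honest in code is a typed record that rejects impossible values and exposes simple derived ratios. This is a sketch under assumptions: `Decimal` stands in for the schema's `currency` type (it carries no currency code), and both ratio methods are hypothetical additions rather than part of the schema.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class CommonsMetrics:
    total_contributors: int
    active_maintainers: int
    funding_allocated: Decimal  # "currency" in the schema; Decimal avoids binary rounding errors
    issues_closed_per_month: int
    governance_meetings_held: int

    def __post_init__(self) -> None:
        # Reject negative counts or funding, which would signal a broken data feed.
        for name in ("total_contributors", "active_maintainers", "funding_allocated",
                     "issues_closed_per_month", "governance_meetings_held"):
            if getattr(self, name) < 0:
                raise ValueError(f"{name} must not be negative")

    def contributors_per_maintainer(self) -> float:
        """Hypothetical derived ratio: contributors supported by each active maintainer."""
        if self.active_maintainers == 0:
            return float("inf")
        return self.total_contributors / self.active_maintainers

    def funding_per_maintainer(self) -> Decimal:
        """Hypothetical derived ratio: funding available per active maintainer."""
        if self.active_maintainers == 0:
            raise ValueError("no active maintainers to fund")
        return self.funding_allocated / self.active_maintainers


snapshot = CommonsMetrics(120, 4, Decimal("50000"), 35, 2)
print(snapshot.contributors_per_maintainer())  # 30.0
print(snapshot.funding_per_maintainer())       # 12500
```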

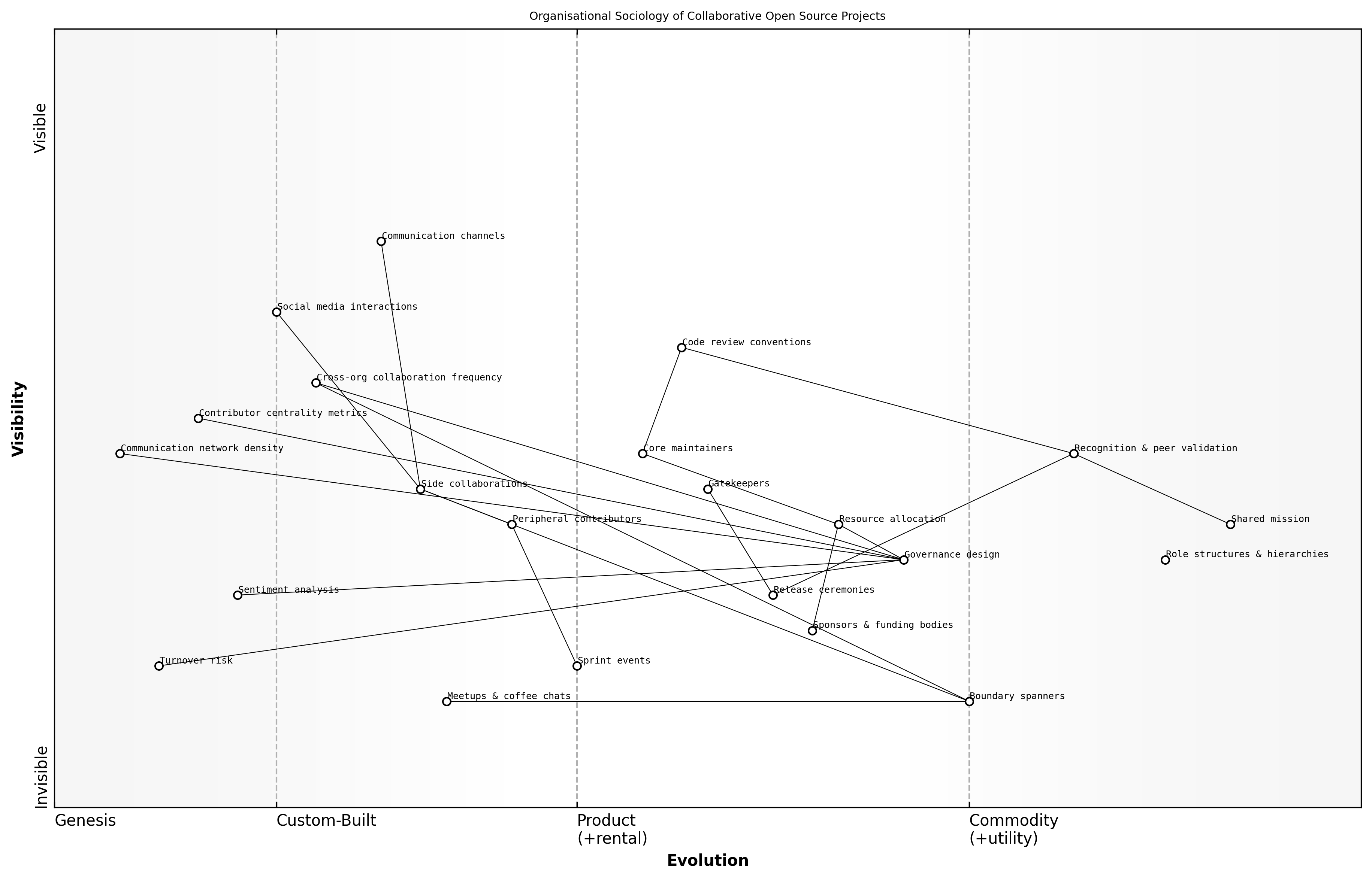

Organisational sociology of collaborative projects

Organisational sociology examines how social structures, roles and power relationships shape behaviour and outcomes within collaborative open source projects. By applying these lenses, leaders in the public sector can understand the hidden dynamics driving contributor engagement and strategic influence, ensuring their initiatives become sustained engines of innovation.

- Role structures and hierarchies that emerge organically

- Informal networks and boundary spanners connecting stakeholder groups

- Cultural norms, rituals and shared symbols that reinforce identity

- Motivational drivers and identity formation among contributors

- Impact of power relations on decision making and resource distribution

Social structures in open source communities often defy traditional organisational charts. Instead of rigid hierarchies, projects feature fluid constellations where influence is earned through merit and visible contributions. Understanding these emergent structures helps strategists identify who holds de facto authority, who acts as connectors and where potential bottlenecks lie.

- Core maintainers who set technical direction

- Peripheral contributors who offer sporadic patches

- Gatekeepers such as release managers and triage teams

- Sponsors and funding bodies influencing roadmap priorities

- Boundary spanners linking internal teams with the wider community

Informal networks underpin the flow of knowledge and resources across project boundaries. These networks often operate through direct messaging channels, social media interactions and side collaborations. Recognising boundary spanners—individuals who bridge corporate and community spheres—allows organisations to strengthen ties and accelerate knowledge transfer.

Cultural norms and rituals—such as code review conventions, release ceremonies and collaborative sprints—reinforce a shared sense of purpose and belonging. These symbolic practices sustain engagement, transmit values to newcomers and reduce coordination friction by establishing predictable patterns of collaboration.

- Code of Conduct announcements that set behavioural expectations

- Regular release retrospectives that celebrate achievements

- Mentorship programmes and sprint events to onboard new contributors

- Community stand‑ups and town halls for transparent decision making

- Informal gatherings like meetups and virtual coffee chats to build trust

Contributor identity and motivation emerge from a blend of personal, social and instrumental factors. Contributors find purpose through recognition, peer validation and alignment with project goals. For government bodies, fostering a sense of shared mission—such as delivering public good—can strengthen intrinsic motivation and loyalty.

“Organisational sociology reveals that trust is the currency of open source communities, and that understanding power dynamics is essential for effective stewardship,” says a senior strategist.

Integrating organisational sociology insights into governance design and strategic planning empowers leadership to anticipate community reactions, allocate resources effectively and design interventions that align social incentives with policy objectives. By mapping social roles alongside technical components, public sector leaders can deploy open source as a nuanced tool of statecraft and innovation.

- Contributor centrality metrics to identify key influencers

- Density of communication networks to gauge collaboration strength

- Turnover rates in core teams as risk indicators

- Sentiment analysis of discussion forums for cultural health

- Frequency of cross‑organisation collaborations
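Two of these indicators need nothing more than sets of names. The sketch below assumes core-team membership is available per period and that each person in a review interaction can be mapped to an organisation; the function names and inputs are hypothetical.

```python
def core_team_turnover(previous: set[str], current: set[str]) -> float:
    """Fraction of the previous period's core team that has left it."""
    if not previous:
        return 0.0
    return len(previous - current) / len(previous)


def cross_org_share(interactions: list[tuple[str, str]], org_of: dict[str, str]) -> float:
    """Share of (author, reviewer) interactions that cross an organisational boundary.

    Interactions involving people with no known organisation are ignored.
    """
    known = [(a, r) for a, r in interactions if a in org_of and r in org_of]
    if not known:
        return 0.0
    return sum(org_of[a] != org_of[r] for a, r in known) / len(known)


print(core_team_turnover({"alice", "bob", "carol", "dan"}, {"alice", "bob", "erin"}))  # 0.5
orgs = {"alice": "agency", "bob": "agency", "carol": "vendor"}
print(cross_org_share([("alice", "bob"), ("alice", "carol"), ("carol", "bob"), ("bob", "alice")], orgs))  # 0.5
```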

Network science: understanding ecosystem topology

Applying network science to open source ecosystems reveals the hidden architecture of connections between projects, contributors, governance bodies and adopters. By viewing the community as a graph of nodes and edges, public sector organisations can identify critical hubs, potential points of failure and opportunities to strengthen resilience and influence.

- Nodes representing actors such as maintainers, corporate sponsors, downstream integrators and user organisations

- Edges capturing interactions like code contributions, issue comments, sponsorship relationships and governance votes

- Topological patterns including centralisation, modular clusters and bridging nodes

- Dynamic metrics tracking growth, churn and information flow over time

At its core, ecosystem topology analysis decomposes the community into its structural components. Leaders in government and public sector contexts can use this lens to ensure that critical services are not overly dependent on a small number of maintainers, to spot emerging subcommunities working on strategic features, and to measure the robustness of governance networks.

Network metrics provide quantitative insight into community health and risk. By integrating these into a dashboard alongside Wardley maps and Porter–Lean experiments, boards gain a multi‑dimensional view of strategic posture and emergent threats.

- Degree centrality measuring the number of direct connections each actor has

- Betweenness centrality identifying nodes that bridge otherwise disconnected clusters

- Clustering coefficient revealing tight‑knit subcommunities and their cohesion

- Average path length indicating how quickly information diffuses across the network

- Network density quantifying overall connectivity relative to possible links

- Modularity scores to detect thematic or functional groupings within the ecosystem
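Most of these metrics can be computed with a breadth-first search over an adjacency map. The following standard-library sketch covers degree centrality, density, clustering coefficient and average path length for an unweighted, undirected contributor graph; it is illustrative rather than optimised, and its average path length only counts pairs that can reach each other, which is an assumption for disconnected graphs. For larger graphs, a dedicated library such as NetworkX provides these measures.

```python
from collections import deque
from itertools import combinations


def build_graph(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Undirected adjacency sets; self-loops and duplicate edges are dropped."""
    graph: dict[str, set[str]] = {}
    for u, v in edges:
        if u == v:
            continue
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def degree_centrality(graph):
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    # Direct connections as a fraction of the n - 1 possible partners.
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}


def density(graph) -> float:
    n = len(graph)
    if n < 2:
        return 0.0
    edges = sum(len(nbrs) for nbrs in graph.values()) / 2
    return edges / (n * (n - 1) / 2)


def average_clustering(graph) -> float:
    if not graph:
        return 0.0
    total = 0.0
    for node, nbrs in graph.items():
        k = len(nbrs)
        if k < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        links = sum(1 for a, b in combinations(nbrs, 2) if b in graph[a])
        total += links / (k * (k - 1) / 2)
    return total / len(graph)


def average_path_length(graph) -> float:
    """Mean BFS hop count over all ordered pairs that can reach each other."""
    total = pairs = 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in graph[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs if pairs else 0.0


g = build_graph([("alice", "bob"), ("alice", "carol"), ("bob", "carol"), ("carol", "dan")])
print(degree_centrality(g)["carol"])     # 1.0
print(round(density(g), 3))              # 0.667
print(round(average_clustering(g), 3))   # 0.583
print(round(average_path_length(g), 3))  # 1.333
```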

Practical applications include designing succession plans for key maintainers, targeting outreach to under‑connected user groups, and simulating the impact of contributor departures. For example, a ministry of defence might map dependencies in a secure communications stack, then proactively invest in training new maintainers to reduce single‑point‑of‑failure risk.
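Simulating contributor departures can start with a brute-force check: remove each person in turn and see whether the network splits into more connected components. The sketch below assumes an undirected interaction graph with hypothetical names; it runs one traversal per person, which suits small projects, while larger graphs would call for a linear-time articulation-point algorithm such as Hopcroft–Tarjan.

```python
def build_graph(edges):
    """Undirected adjacency sets from (person, person) pairs."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def components(graph, removed=frozenset()):
    """Connected components of the graph after deleting the `removed` nodes."""
    seen, result = set(), []
    for start in graph:
        if start in removed or start in seen:
            continue
        seen.add(start)
        component, stack = {start}, [start]
        while stack:
            node = stack.pop()
            for nbr in graph[node]:
                if nbr not in removed and nbr not in seen:
                    seen.add(nbr)
                    component.add(nbr)
                    stack.append(nbr)
        result.append(component)
    return result


def single_points_of_failure(graph):
    """People whose departure splits the network into more pieces (brute force)."""
    baseline = len(components(graph))
    return sorted(p for p in graph if len(components(graph, removed={p})) > baseline)


g = build_graph([("lead", "alice"), ("lead", "bob"), ("lead", "carol"), ("alice", "bob")])
print(single_points_of_failure(g))  # ['lead']
print([sorted(c) for c in components(g, removed={"lead"})])
```

In this toy network, losing `lead` would leave `carol` cut off, which is the kind of finding that motivates succession planning and training new maintainers.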

[Insert Network Map: detailed description of a force‑directed graph visualising contributor interactions, module dependencies and sponsorship flows, annotated with centrality heatmaps]

A step‑by‑step approach to ecosystem topology analysis empowers teams to move from raw data to strategic insight:

- Collect contribution and interaction data from repositories, mailing lists and governance platforms

- Construct a graph data model defining nodes, edges and attributes such as role or organisation

- Compute centrality, clustering and modularity metrics using network analysis libraries

- Visualise the graph to highlight critical nodes and clusters

- Interpret results in the context of strategic plays (for instance, strengthening weak bridges)

- Integrate findings into board‑level dashboards alongside financial and health KPIs
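Steps two and three, together with the bridge-finding idea in step five, can be sketched with Brandes' algorithm for betweenness centrality on an unweighted, undirected interaction graph. The record format (pairs of people who interacted, such as author and reviewer) and the helper names are assumptions; scores are unnormalised counts of shortest paths passing through each person.

```python
from collections import deque


def build_graph(records):
    """Undirected interaction graph from (person, person) records, e.g. author/reviewer pairs."""
    graph = {}
    for a, b in records:
        if a != b:
            graph.setdefault(a, set()).add(b)
            graph.setdefault(b, set()).add(a)
    return graph


def betweenness(graph):
    """Unnormalised betweenness centrality via Brandes' algorithm (unweighted, undirected)."""
    score = dict.fromkeys(graph, 0.0)
    for source in graph:
        # Breadth-first search counting shortest paths (sigma) and predecessors.
        order, preds = [], {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)
        dist = dict.fromkeys(graph, -1)
        sigma[source], dist[source] = 1, 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies in reverse BFS order.
        delta = dict.fromkeys(graph, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != source:
                score[w] += delta[w]
    # Each unordered pair was counted once from each endpoint.
    return {v: s / 2 for v, s in score.items()}


def top_bridges(scores, k=2):
    """Highest-scoring people, ties broken alphabetically."""
    return sorted(scores, key=lambda v: (-scores[v], v))[:k]


records = [("alice", "bob"), ("bob", "carol"), ("alice", "carol"),
           ("carol", "dan"), ("dan", "erin"), ("dan", "frank"), ("erin", "frank")]
scores = betweenness(build_graph(records))
print(top_bridges(scores))  # ['carol', 'dan']
```

Here two tight clusters are joined only through `carol` and `dan`, so strengthening that weak bridge (for example by encouraging direct reviews across the clusters) would reduce reliance on both.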

“A network view uncovers dependencies you cannot see in code alone,” says a senior government official.

```yaml
metrics:
  nodes:
    total: node_count
    key_hubs: top_10_percent_centrality
  edges:
    total: edge_count
    average_degree: avg_degree
  connectivity:
    density: edge_density
    clustering: average_clustering_coefficient
  information_flow:
    average_path_length: avg_path_length
    betweenness: top_5_betweenness
```

Behavioural psychology: motivating contributors

Behavioural psychology examines the mental models and stimuli that drive individuals to participate actively in collaborative projects. By understanding what motivates contributors, organisations can sculpt environments that foster sustained engagement and innovation in open source communities.

Motivation arises from a combination of intrinsic and extrinsic factors. Intrinsic drivers—such as mastery, purpose and autonomy—fuel long‑term commitment, while extrinsic elements—like recognition, rewards and social proof—kick‑start participation and reinforce positive behaviour.

- Mastery: the desire to learn new skills and solve challenging problems

- Autonomy: freedom to choose tasks, tools and working styles

- Purpose: meaningful mission that aligns personal and community goals

- Recognition: visible acknowledgement of contributions through badges, leaderboards or shout‑outs

- Belonging: sense of identity and social connection within the project

To translate these drivers into practice, projects should implement behavioural design patterns that guide contributors along a clear journey. Reducing friction at key stages and offering timely feedback creates a scaffold for sustained engagement.

- Clear onboarding flows with step‑by‑step guides and interactive tutorials

- Micro‑commit milestones that celebrate small wins and build confidence

- Mentorship pairings that connect newcomers with experienced contributors

- Gamification elements such as progress bars, badges and community challenges

- Regular feedback loops through code reviews, surveys and retrospective meetings

“Intrinsic motives such as purpose and mastery often outweigh external rewards in sustaining long‑term engagement,” says a leading expert in open source.

[Insert Behavioural Design Checklist: detailed table mapping motivational techniques to lifecycle stages from onboarding through stewardship]

Case Study: A public‑sector digital transformation unit introduced a badge system recognising first contributions, code reviews and documentation efforts. In six months, new contributor retention rose by 40% and average pull‑request completion time dropped by 25%, illustrating the power of timely recognition and feedback loops.

```yaml
metrics:
  autonomy_index: percentage_of_contributions_initiated_by_contributors
  mastery_score: average_number_of_reviewed_pull_requests_per_contributor
  recognition_count: badges_awarded_per_release_cycle
  engagement_rate: active_contributors_per_week
experiments:
  - hypothesis: introducing a mentorship programme will increase mastery_score by 20%
    mve: pilot mentor‑mentee pairing for ten newcomers
    success_metric: 20 percent uplift in average pull‑requests reviewed
```
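The first two metrics can be derived from pull-request records, as in the sketch below. It assumes each record carries a `self_initiated` flag and a list of reviewers, and it interprets `mastery_score` as reviews performed per contributor; both the record shape and that interpretation are assumptions rather than definitions taken from the configuration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    author: str
    self_initiated: bool  # assumed flag: the author chose the task rather than being assigned it
    reviewers: list[str] = field(default_factory=list)


def autonomy_index(prs: list[PullRequest]) -> float:
    """Percentage of pull requests initiated by contributors themselves."""
    if not prs:
        return 0.0
    return 100 * sum(pr.self_initiated for pr in prs) / len(prs)


def mastery_score(prs: list[PullRequest], contributors: set[str]) -> float:
    """Average number of pull requests each contributor has reviewed."""
    if not contributors:
        return 0.0
    # set() so a reviewer listed twice on one pull request is counted once.
    reviewed = Counter(r for pr in prs for r in set(pr.reviewers))
    return sum(reviewed[c] for c in contributors) / len(contributors)


prs = [
    PullRequest("alice", True, ["bob"]),
    PullRequest("bob", False, ["alice", "carol"]),
    PullRequest("carol", True, ["alice"]),
    PullRequest("dan", True),
]
print(autonomy_index(prs))                                   # 75.0
print(mastery_score(prs, {"alice", "bob", "carol", "dan"}))  # 1.0
```

Computing `mastery_score` for the ten mentees before and after the pilot gives the two values that the experiment's 20 percent success metric compares.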

Chapter 2: Building and Governing High‑Impact Communities

Selecting and Evolving Governance Models

Benevolent dictator vs foundation models

Selecting an appropriate governance model is foundational to building high‑impact open source communities. In government and public sector contexts, the choice between a benevolent dictator model and a foundation‑led approach carries significant implications for agility, risk management and ecosystem influence. This section examines both models, contrasts their benefits and limitations, and outlines criteria for evolving governance to meet strategic objectives.

The benevolent dictator model, sometimes referred to as a BDFL (Benevolent Dictator For Life), concentrates decision‑making authority in a single lead maintainer or small core team. This individual or group sets the technical direction, reviews contributions, and resolves disputes. While it can accelerate decision cycles and ensure coherent vision, it also introduces potential single‑point‑of‑failure risks.

Benefits:

- Clear technical vision driven by a single authority

- Rapid decision‑making and streamlined roadmap execution

- Strong personal accountability for project success

- Lower overhead compared with formal governance bodies

Limitations:

- Risk of bottlenecks if the lead maintainer lacks capacity

- Perceived lack of transparency in decision processes

- Difficulty in scaling contributor base and delegating responsibilities

- Potential for abrupt forks if personal disputes arise

“A benevolent dictator model can deliver speed and coherence but requires careful succession planning to avoid mission‑critical outages,” says a senior government official.

In contrast, a foundation model establishes a neutral legal and organisational entity to oversee project governance. Foundations typically define charters, boards, working groups and formal voting processes. They offer a robust framework for vendor neutrality, structured fundraising and regulatory compliance, which aligns well with public sector expectations around accountability and transparency.

Benefits:

- Impartial governance that mitigates individual bias

- Formal processes for decision‑making, dispute resolution and compliance

- Enhanced trust from corporate sponsors and regulatory bodies

- Scalable structure supporting multiple projects under one umbrella

Limitations:

- Increased administrative overhead and slower decision cycles

- Potential dilution of technical leadership and vision

- Complexity in aligning diverse stakeholder interests

- Need for sustained funding to maintain foundation operations

While benevolent dictator models excel in early‑stage projects requiring tight coordination, foundation models are ideal for mature ecosystems demanding neutrality and scalability. Public sector bodies often begin with a lead‑maintainer approach and later transition to a foundation as community size, compliance requirements and stakeholder diversity increase.

- Project maturity and contributor count

- Regulatory and compliance obligations

- Funding and sponsorship complexity

- Risk tolerance and succession planning

- Community expectations for transparency

Evolving governance demands a structured decision framework. Organisations should monitor key indicators such as contributor growth, decision latency and dispute frequency. When thresholds are crossed, a migration plan ensures continuity of operations, protection of intellectual property and community alignment.

- Contributor headcount exceeding maintainable ratio

- Average time to resolve critical issues surpassing service‑level targets

- Increase in third‑party sponsorships and legal agreements

- Recurring conflicts that cannot be resolved by individual maintainers

[Insert governance evolution decision matrix illustrating triggers, decision points and migration steps from benevolent dictator to foundation model]

“Governance must adapt as communities grow; static models create hidden risks,” says a leading strategist in open source.

Hybrid governance approaches

Hybrid governance combines the strengths of benevolent dictator and foundation models to balance rapid decision‑making with formal accountability in open source projects. This approach recognises that as communities grow and stakeholder diversity increases, neither extreme centralisation nor full decentralisation delivers optimal results on its own.

In a hybrid model, a core technical steering committee or lead maintainer coexists with a legal entity or advisory board. Day‑to‑day technical decisions remain agile under the guidance of experienced maintainers, while strategic, financial and compliance responsibilities are overseen by a structured governance body.

- A technical steering committee empowered to approve roadmaps and architectural changes

- A legal foundation or advisory board responsible for policy, fundraising and licence compliance

- Working groups or special interest teams for modules, localisation and security

- Defined escalation pathways between technical and strategic bodies

- Transparent charters that document roles, decision rights and conflict‑resolution processes

This design harnesses the agility of the benevolent dictator model for innovation velocity, while leveraging the neutral platform of a foundation to enhance trust, diversify sponsorship and mitigate single‑point‑of‑failure risks.

- Accelerated feature delivery through empowered maintainers

- Enhanced legal and financial stability via a formal entity

- Greater inclusivity by delegating module‑specific authority

- Clearer accountability for compliance and policy adherence

- Improved succession planning with distributed leadership

However, hybrid governance introduces complexity that must be managed carefully. Overlapping authorities can create ambiguity, so documentation and communication protocols are essential to prevent decision latency and governance friction.

- Risk of duplicated effort between technical and strategic bodies mitigated by clear charters

- Potential for slowed decisions without defined escalation rules

- Increased administrative overhead requiring dedicated secretariat support

- Necessity for ongoing alignment sessions to reconcile roadmap priorities

Organisation maturity and ecosystem factors guide the adoption of hybrid models. Projects typically transition when contributor numbers exceed single‑maintainer capacity, when sponsorship volumes require oversight, or when regulatory mandates demand formalised structures.

- Contributor count surpasses maintainable ratio under benevolent dictator model

- Complex funding and sponsorship agreements require fiduciary governance

- Community diversity spans multiple time zones, languages and sectors

- Heightened compliance or security requirements from public sector regulations

- Desire to decentralise module‑level authority without losing strategic coherence

“A well‑executed hybrid governance framework harnesses both centralised agility and decentralised inclusivity,” says a senior government official.

Criteria for model selection

Selecting an appropriate governance model requires a clear set of criteria that align organisational objectives, community dynamics and regulatory obligations. In public sector contexts, where transparency, accountability and risk management are paramount, these criteria serve as decision levers for choosing between benevolent dictator, hybrid or foundation models and guide the timing of any future evolution.

Each criterion should be mapped to measurable indicators drawn from community dashboards and governance assessments. For example, a contributors_per_maintainer ratio above a defined threshold may trigger consideration of a hybrid model, while an uptick in governance_dispute_frequency could prompt a move to a foundation structure with formal dispute‑resolution processes.
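A minimal sketch of such a trigger check follows, using the sample threshold values from this section's configuration and assuming each value is an upper limit that fires when exceeded. Only two mappings from trigger to model come from the text (contributor ratio to hybrid, disputes to foundation); the other two are illustrative assumptions. The output recommends a review rather than a migration, since the thresholds prompt consideration rather than decide it.

```python
# Sample thresholds matching the configuration in this section.
THRESHOLDS = {
    "contributors_per_maintainer": 30,
    "critical_issue_response_time_hours": 72,
    "sponsor_agreements_count": 5,
    "governance_dispute_frequency_per_month": 2,
}

# Which model each trigger points towards. The response-time and sponsorship
# mappings are illustrative assumptions, not rules stated in the text.
SUGGESTED_MODEL = {
    "contributors_per_maintainer": "hybrid",
    "critical_issue_response_time_hours": "hybrid",
    "sponsor_agreements_count": "hybrid",
    "governance_dispute_frequency_per_month": "foundation",
}


def fired_triggers(observed: dict[str, float], thresholds: dict[str, float] = THRESHOLDS) -> list[str]:
    """Indicators whose observed value is above its threshold (missing values never fire)."""
    return sorted(name for name, limit in thresholds.items()
                  if name in observed and observed[name] > limit)


def review_recommendation(observed: dict[str, float]) -> str:
    fired = fired_triggers(observed)
    if any(SUGGESTED_MODEL[name] == "foundation" for name in fired):
        return "review: consider a foundation structure"
    if fired:
        return "review: consider a hybrid model"
    return "no trigger fired: retain current model"


quarter = {"contributors_per_maintainer": 42, "critical_issue_response_time_hours": 60,
           "sponsor_agreements_count": 3, "governance_dispute_frequency_per_month": 1}
print(fired_triggers(quarter))         # ['contributors_per_maintainer']
print(review_recommendation(quarter))  # review: consider a hybrid model
```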

[Insert governance evolution decision matrix illustrating triggers, decision points and migration steps from benevolent dictator through hybrid to foundation]

```yaml
metrics:
  contributors_per_maintainer: 30
  critical_issue_response_time_hours: 72
  sponsor_agreements_count: 5
  governance_dispute_frequency_per_month: 2
```

> “Governance selection should reflect both current community dynamics and future strategic ambitions,” says a senior government advisor.

By embedding these criteria into a continuous review process, public sector bodies can ensure their governance model remains fit for purpose. Regularly revisiting thresholds, reviewing community feedback and aligning with evolving regulatory landscapes protects both innovation velocity and institutional integrity.

### <a id="contributor-onboarding-and-retention"></a>Contributor Onboarding and Retention

#### <a id="crafting-clear-contribution-pathways"></a>Crafting clear contribution pathways

A well‑defined contribution pathway is the backbone of any high‑impact open source community. By mapping the journey from first contact to sustained engagement, organisations reduce uncertainty, accelerate learning curves and align contributor efforts with strategic objectives. In government and public sector contexts, clear pathways not only foster inclusion but also ensure that mission‑critical projects benefit from reliable, diverse participation.

- Awareness: discoverability via documentation, websites and outreach

- Preparation: setting up development environments and granting access

- First Contribution: low‑risk tasks to build confidence

- Ramp‑up: deeper technical work and mentorship support

- Stewardship: assuming leadership roles and driving roadmap initiatives

Each phase serves a distinct purpose. In the Awareness stage, clear calls to action on project portals and social channels invite newcomers. Preparation involves automated scripts and containerised environments that eliminate setup errors. First Contribution tasks—such as typo fixes, documentation improvements or test coverage enhancements—are labelled accordingly, signalling safe entry points. As contributors gain familiarity, they progress to Ramp‑up tasks with structured mentorship. Finally, Stewardship recognises those ready to influence architecture, governance and community strategy.

- Comprehensive CONTRIBUTING.md guide with step‑by‑step screenshots

- Issue and pull request templates to standardise submissions

- Automated continuous integration checks with clear pass/fail feedback

- Dedicated chat channels or forums for real‑time support

- Badge systems or digital recognitions that celebrate milestones

Reducing friction requires both technical and social tooling. Automated scripts (`./setup.sh`), container images (`Dockerfile`), or virtual machine templates accelerate environment onboarding. Issue templates pre‑populate metadata, guiding contributors to include version information, test cases and compliance checklists. Real‑time communication channels—such as Slack, Mattermost or Matrix—ensure questions are answered promptly, preventing drop‑off at critical moments.

[Insert Contributor Journey Map: diagram illustrating phases from newcomer to maintainer with key touchpoints, resources and decision gates]

Inclusivity and accessibility are essential. Documentation should use plain language, adhere to accessibility guidelines (WCAG), and offer translations where possible. Interactive tutorials or browser‑based sandboxes allow contributors to experiment without local setup. Providing multiple pathways—text guides, video walkthroughs and paired programming sessions—caters to diverse learning styles and strengthens retention.

> “A well‑defined path transforms confusion into confidence,” says a senior government official.

Sample CONTRIBUTING.md

1. Get the code

```shell
git clone https://example.org/project.git
cd project
./setup.sh
```

2. Choose an issue

Look for issues labelled `good-first-issue` or `documentation` in the issue tracker, and follow ISSUE_TEMPLATE.md when opening a new issue.

3. Submit a pull request

Create a branch, commit with a clear message, and open a PR referencing the issue number.

4. Automated checks

Ensure all CI tests pass and fix any lint or formatting errors reported in the build logs.

5. Review and merge

Engage in the code review, address feedback, and celebrate your first merged contribution!

By combining structured pathways, automated tooling and inclusive practices, public sector projects can turn sporadic interest into sustained contributions. This structured approach not only uplifts community health metrics—reducing time‑to‑first‑merge and increasing contributor retention—but also embeds open source principles into organisational culture, reinforcing open source as a strategic asset.
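Time-to-first-merge and retention can both be tracked from basic pull-request and activity data. This sketch assumes records of `(contributor, opened_at, merged_at)` and sets of contributors active in consecutive periods; the names and the period definition are hypothetical.

```python
from datetime import datetime
from typing import Optional


def days_to_first_merge(prs: list[tuple[str, datetime, Optional[datetime]]]) -> dict[str, float]:
    """Days from each contributor's first opened PR to their first merged PR.

    Each record is (contributor, opened_at, merged_at), with merged_at None if unmerged.
    Contributors with no merged PR yet are omitted.
    """
    first_open: dict[str, datetime] = {}
    first_merge: dict[str, datetime] = {}
    for who, opened, merged in prs:
        if who not in first_open or opened < first_open[who]:
            first_open[who] = opened
        if merged is not None and (who not in first_merge or merged < first_merge[who]):
            first_merge[who] = merged
    return {who: (first_merge[who] - first_open[who]).total_seconds() / 86400 for who in first_merge}


def retention_rate(earlier: set[str], later: set[str]) -> float:
    """Share of contributors active in one period who are still active in the next."""
    if not earlier:
        return 0.0
    return len(earlier & later) / len(earlier)


prs = [
    ("alice", datetime(2024, 3, 1), datetime(2024, 3, 4)),
    ("alice", datetime(2024, 3, 10), datetime(2024, 3, 11)),
    ("bob", datetime(2024, 3, 2), None),
    ("bob", datetime(2024, 3, 5), datetime(2024, 3, 9)),
]
print(days_to_first_merge(prs))  # {'alice': 3.0, 'bob': 7.0}
print(retention_rate({"alice", "bob", "carol"}, {"alice", "dan"}))
```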

#### <a id="mentorship-and-documentation-best-practices"></a>Mentorship and documentation best practices

Effective mentorship and comprehensive documentation are twin pillars of contributor onboarding and retention. In high‑impact open source communities, a structured mentorship programme accelerates learning and fosters a sense of belonging, while living documentation ensures consistency, reduces dependency on direct support and embeds institutional knowledge.

- Clear pairing mechanisms for new contributors and experienced maintainers

- Defined mentorship goals and milestones aligned with project roadmap

- Regular check‑ins and feedback sessions to reinforce learning

- Access to paired programming or shadowing opportunities

- Recognition and support for mentors as well as mentees

Documentation must serve both as a reference and a teaching tool. Well‑structured docs reduce cognitive load for newcomers and provide experienced contributors with clear guidelines on code style, architecture decisions and testing requirements.

- Maintain a living CONTRIBUTING.md with step‑by‑step setup and contribution guidelines

- Use templates for issues and pull requests to standardise metadata and streamline reviews

- Provide inline code examples and tutorials for common workflows

- Implement clear navigation with labelled sections and a searchable index

- Offer translations and accessibility‑compliant formats where possible

- Update documentation as part of the definition of done in every release cycle

By integrating mentorship with documentation, communities create a self‑reinforcing ecosystem where mentors and novices alike rely on shared resources. Mentors guide contributors through docs, while feedback from mentoring sessions highlights gaps and drives continuous improvements in documentation.

[Insert Contributor Mentorship Flowchart: diagram showing the interplay between mentorship touchpoints and documentation resources]

Useful indicators of mentorship effectiveness include:

- Average time from initial query to mentor assignment

- First‑contribution completion time with mentor support versus without

- Frequency of documentation updates following mentorship feedback

- Contributor satisfaction scores from periodic surveys

- Mentor engagement rate and uptake of support incentives
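The first two measures can be calculated from timestamped records. The Python sketch below assumes a hypothetical record for each newcomer: when they first asked for help, when a mentor was assigned (if ever), and how many days their first contribution took. In practice these fields would come from an issue tracker or a mentorship sign‑up form. The names used here are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, median
from typing import Optional


@dataclass
class Newcomer:
    # Hypothetical record shape; field names are illustrative only.
    queried_at: datetime                    # first request for help
    mentor_assigned_at: Optional[datetime]  # None if no mentor was assigned
    days_to_first_contribution: float


def avg_hours_to_mentor(newcomers: list[Newcomer]) -> float:
    """Mean hours from initial query to mentor assignment (mentored newcomers only)."""
    waits = [
        (n.mentor_assigned_at - n.queried_at).total_seconds() / 3600
        for n in newcomers
        if n.mentor_assigned_at is not None
    ]
    return mean(waits) if waits else float("nan")


def first_contribution_medians(newcomers: list[Newcomer]) -> tuple[float, float]:
    """Median days to first contribution as (with mentor, without mentor)."""
    with_mentor = [n.days_to_first_contribution for n in newcomers
                   if n.mentor_assigned_at is not None]
    without = [n.days_to_first_contribution for n in newcomers
               if n.mentor_assigned_at is None]
    return (median(with_mentor) if with_mentor else float("nan"),
            median(without) if without else float("nan"))


# Usage with invented data
start = datetime(2024, 1, 1, 9, 0)
cohort = [
    Newcomer(start, datetime(2024, 1, 1, 15, 0), 4.0),  # mentor after 6 hours
    Newcomer(start, datetime(2024, 1, 2, 9, 0), 6.0),   # mentor after 24 hours
    Newcomer(start, None, 12.0),                        # never mentored
]
print(avg_hours_to_mentor(cohort))         # 15.0
print(first_contribution_medians(cohort))  # (5.0, 12.0)
```

The sketch uses medians for completion time because a few unusually slow first contributions would otherwise dominate the comparison.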

> "Effective mentorship and living documentation transform onboarding into a strategic advantage," says a senior government official.

#### <a id="incentives-recognition-and-longterm-engagement"></a>Incentives, recognition and long‑term engagement

Incentives and recognition are pivotal for converting one‑off contributors into long‑term community stewards. Projects that draw on behavioural psychology achieve this in two ways: they align reward structures with contributors' intrinsic motivations, and they give contributors' work fair visibility. This fosters sustained engagement and reduces contributor churn. Key motivators include:

- Mastery – opportunities to learn, mentor and solve complex problems

- Purpose – participation in a mission that aligns with public service goals

- Autonomy – freedom to select tasks and influence roadmaps

- Recognition – visible credit for work and community status

- Belonging – sense of identity within a diverse contributor network

Effective programmes balance intrinsic and extrinsic incentives. Intrinsic rewards nurture long‑term commitment, while targeted extrinsic benefits accelerate early participation and reinforce positive behaviour.

- Stewardship roles such as module maintainer or working group lead

- Skill development through sponsored training and conference passes

- Influence over feature prioritisation and governance charters

- Mission alignment via invitations to strategic planning workshops

- Swag and branded merchandise for community events

- Bounties or small grants for high‑value issues

- Certificates of contribution issued by a foundation or public body

- Access to specialist tooling or cloud credits

A transparent recognition framework sets clear criteria and ensures equity. Contributors understand how to progress and what behaviours earn visibility, creating a virtuous cycle of motivation and reward.

- Digital badges for milestones such as first PR, code review or documentation improvement

- Leaderboards tracked in community dashboards and newsletters

- Mention in release notes and blog posts highlighting key achievements

- Annual community awards voted on by peers

> "Sustained contribution thrives when individuals see their efforts valued and visible," says a leading expert in the field.

Long‑term engagement is cemented by clear progression pathways. From onboarding tasks to governance roles, each stage should build on prior achievements and open new avenues for influence and decision‑making.

- First contribution – fix a typo or write a test case

- Regular contributor – own a set of issues and mentor newcomers

- Module maintainer – approve PRs, manage releases and review roadmap proposals

- Technical steering group – set strategic direction and oversee governance

- Ambassador roles – represent the project at events and liaise with stakeholders

Aligning incentive programmes with community health metrics ensures that recognition efforts drive strategic outcomes. Dashboards should track retention rates, contributor growth and the impact of reward initiatives.

[Insert Mentorship Progression Map: diagram illustrating contributor progression and incentive touchpoints]

Continuous monitoring and iteration keep incentive structures relevant. Regular surveys, metric reviews and feedback loops enable adjustments that reflect evolving community needs and public sector priorities.

```yaml
engagement_metrics:
  retention_rate: contributors_active_over_6_months_percentage
  churn_rate: contributors_not_returning_percentage
  recognition_count: badges_awarded_per_quarter
  promotion_rate: contributors_advancing_stages_per_cycle
```
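The engagement metrics above can be computed from contributor activity data. The Python sketch below takes two hypothetical kinds of input:

- For retention and churn: sets of contributor identifiers active in consecutive six‑month windows. Retention is assumed to mean the share of the earlier window's contributors who are active again in the later window, and churn is its complement.
- For promotion rate: each contributor's stage at the start and end of a cycle. The stage names follow the progression pathway described earlier. Ambassador roles are left out on the assumption that they form a parallel track rather than a step up.

```python
# Stage order follows the progression pathway; ambassador roles are treated as
# a parallel track and omitted (an assumption of this sketch).
STAGES = ["first_contribution", "regular_contributor",
          "module_maintainer", "technical_steering_group"]


def retention_and_churn(earlier: set[str], later: set[str]) -> tuple[float, float]:
    """Return (retention_rate, churn_rate) as percentages of the earlier cohort.

    Retention: share of the earlier window's contributors also active later.
    Churn: share of that cohort who did not return (the complement).
    """
    if not earlier:
        raise ValueError("earlier cohort is empty")
    retention = 100 * len(earlier & later) / len(earlier)
    return retention, 100 - retention


def promotion_rate(start: dict[str, str], end: dict[str, str]) -> float:
    """Percentage of contributors at cycle start who moved up at least one stage."""
    if not start:
        raise ValueError("no contributors at cycle start")
    promoted = sum(
        1 for person, stage in start.items()
        if person in end and STAGES.index(end[person]) > STAGES.index(stage)
    )
    return 100 * promoted / len(start)


# Usage with invented data
jan_to_jun = {"ana", "ben", "chen", "dev"}
jul_to_dec = {"ana", "chen", "eli"}
print(retention_and_churn(jan_to_jun, jul_to_dec))  # (50.0, 50.0)

stage_start = {"ana": "first_contribution", "ben": "regular_contributor",
               "chen": "regular_contributor", "dev": "module_maintainer"}
stage_end = {"ana": "regular_contributor", "ben": "regular_contributor",
             "chen": "module_maintainer", "dev": "module_maintainer"}
print(promotion_rate(stage_start, stage_end))  # 50.0
```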

> "Well‑structured incentives turn casual volunteers into committed stewards," says a senior government official.

### <a id="measuring-community-health"></a>Measuring Community Health

#### <a id="key-health-metrics-and-indicators"></a>Key health metrics and indicators

Measuring community health requires a balanced set of metrics that capture both activity and vibrancy. In government and public sector contexts, these indicators help boards understand innovation velocity, risk exposure and strategic alignment with mission objectives.

Metrics can be classified as **quantitative** or **qualitative**, and as **leading** or **lagging** indicators. Leading metrics surface emerging trends, while lagging metrics confirm historical performance. Qualitative metrics reveal sentiment and governance dynamics, supplementing numeric data with human context.

- Contributor growth rate: percentage increase in new contributors per period

- Contributor retention rate: proportion of contributors active over multiple cycles

- Activity volume: number of commits, pull requests and issue interactions

- Response times: average time to triage issues and merge pull requests

- Backlog health: age and size of open issues and pull requests

- Bus factor: concentration ratio of contributions among top maintainers

- Community sentiment: tone and content of discussion forums and surveys

- Governance dispute frequency: number of conflicts requiring formal resolution

- Diversity and inclusion: representation across skill levels and demographics

- Mentorship effectiveness: satisfaction scores from mentee feedback

- Documentation quality: completeness and clarity scored by newcomer experience

Integrating these metrics into a dashboard provides boards with a real‑time view of community health, highlighting areas for intervention and investment. Setting thresholds for each indicator enables safe‑to‑fail experiments and timely governance adjustments.

```yaml
community_health_metrics:
  contributor_growth_rate: new_contributors_percentage
  retention_rate: contributors_active_over_6_months_percentage
  response_time_hours: avg_issue_triage_and_merge_time
  backlog_size: open_issues_count
  bus_factor: top_5_contributor_percentage
  sentiment_score: forum_sentiment_index
```
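The `bus_factor` entry above is defined as the share of work done by the top five contributors. The Python sketch below computes that figure from per‑author commit counts. For comparison, it also computes a widely used alternative: the smallest number of people who together account for half of all commits. The commit counts are invented for illustration.

```python
from collections import Counter


def top_n_share(commits: Counter, n: int = 5) -> float:
    """Percentage of all commits made by the n most active authors."""
    total = sum(commits.values())
    if total == 0:
        return 0.0
    return 100 * sum(count for _, count in commits.most_common(n)) / total


def people_for_half(commits: Counter) -> int:
    """Smallest number of authors who together made at least half of all commits."""
    total = sum(commits.values())
    running = 0
    for people, (_, count) in enumerate(commits.most_common(), start=1):
        running += count
        if 2 * running >= total:
            return people
    return 0  # no commits recorded


# Usage with invented commit counts for one period
commits = Counter({"ana": 50, "ben": 20, "chen": 10, "dev": 8,
                   "eli": 6, "fay": 4, "gus": 2})
print(top_n_share(commits))      # 94.0
print(people_for_half(commits))  # 1
```

The two views complement each other. A 94 percent top‑five share signals concentration. The second figure shows that here a single contributor accounts for half the work, which is the more direct measure of key‑person risk.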

[Insert Community Health Dashboard template illustrating metric trends and threshold alerts]

> "Robust metrics surface issues before they become critical and guide strategic investments," says a senior government official.

#### <a id="building-and-using-community-dashboards"></a>Building and using community dashboards

To translate community health metrics into actionable governance and strategic investment, organisations need a centralised dashboard that provides clarity, context and real‑time visibility. A well‑designed community dashboard bridges the gap between raw data and board‑level decision‑making. It highlights emerging risks, tracks progress against thresholds and aligns insights with the broader open source strategy.

- Clarity: use intuitive visualisations and concise labels to reduce cognitive load

- Real‑time data: automate metric collection and refresh intervals to surface early warnings

- Threshold alerts: define leading and lagging indicator thresholds to trigger interventions

- Visual layering: group related metrics into panels or tabs for role‑based views

- Accessibility: ensure dashboards conform to the Web Content Accessibility Guidelines (WCAG) and work across multiple devices

- Integration: embed links to underlying issue trackers, network maps and experiment logs

Selecting the right metrics is critical for a dashboard to serve as a competitive weapon. Metrics should balance technical progress, contributor dynamics and qualitative sentiment. By choosing a mix of leading indicators (for example, contributor growth rate) and lagging indicators (for example, bus factor), leaders can monitor both emerging trends and historical performance.

- Contributor growth chart with new versus returning contributors

- Retention heatmap showing active contributors per week

- Bus factor gauge tracking concentration of top maintainers

- Sentiment timeline from forums, issue comments and surveys

- Response time histogram for issue triage and pull‑request merges

- Backlog health gauge measuring age and size of open issues

- Network centrality panel highlighting key hubs and bridges

[Insert Community Dashboard Prototype: a wireframe showing metric panels with threshold alerts and trend lines]

To derive strategic insight, dashboards must integrate with frameworks such as Wardley Mapping and the combined Porter‑Lean model. By linking metric panels to strategic plays and experiment outcomes, organisations can visualise how community health impacts migration decisions, value chain evolution and competitive positioning.

- Align each metric with a strategic play or evolution stage on your Wardley Map

- Map response‑time improvements to Lean Startup build–measure–learn cycles

- Embed links from dashboard panels to issue or experiment reports

- Annotate map components with health thresholds to guide play selection

- Share annotated maps and dashboards in quarterly board briefings

> "A well‑designed dashboard turns raw data into strategic insight," says a chief open source strategist.

Building a community dashboard is an iterative process. Start with a minimum viable dashboard that surfaces core metrics. Solicit feedback from maintainers, contributors and executives, then refine visualisations, add qualitative sentiment panels and introduce advanced analytics such as anomaly detection or predictive modelling.

<a id="sample-dashboard-configuration"></a>

```yaml
# Sample dashboard configuration
metrics:
  contributor_growth: weekly_new_returning_ratio
  # retention_rate is defined here because the Contributor Dynamics panel uses it
  retention_rate: contributors_active_over_6_months_percentage
  bus_factor: top_5_contributor_percentage
  response_time: avg_hours_to_merge
  backlog_health: open_issues_age_distribution

alerts:
  - metric: response_time
    threshold: 48h
    severity: warning
  - metric: bus_factor
    threshold: 30%
    severity: critical

panels:
  - title: Contributor Dynamics
    metrics: [contributor_growth, retention_rate]
  - title: Technical Risk
    metrics: [bus_factor, backlog_health]
```
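As a configuration like this grows, a panel or alert can easily end up referencing a metric that was never defined. The Python sketch below shows a minimal consistency check. It works on the configuration as an already‑parsed dictionary. Loading the YAML itself would need a parser such as PyYAML's `yaml.safe_load`, which is not shown. In the usage example, `retention_rate` is deliberately left out of `metrics` so the check has something to report.

```python
def undefined_metrics(config: dict) -> set[str]:
    """Metric names used by alerts or panels but missing from config["metrics"]."""
    defined = set(config.get("metrics", {}))
    used = {alert["metric"] for alert in config.get("alerts", [])}
    for panel in config.get("panels", []):
        used.update(panel.get("metrics", []))
    return used - defined


# The sample configuration as a parsed dictionary, with retention_rate
# deliberately left out of "metrics" to show the check reporting it.
config = {
    "metrics": {
        "contributor_growth": "weekly_new_returning_ratio",
        "bus_factor": "top_5_contributor_percentage",
        "response_time": "avg_hours_to_merge",
        "backlog_health": "open_issues_age_distribution",
    },
    "alerts": [
        {"metric": "response_time", "threshold": "48h", "severity": "warning"},
        {"metric": "bus_factor", "threshold": "30%", "severity": "critical"},
    ],
    "panels": [
        {"title": "Contributor Dynamics", "metrics": ["contributor_growth", "retention_rate"]},
        {"title": "Technical Risk", "metrics": ["bus_factor", "backlog_health"]},
    ],
}
print(undefined_metrics(config))  # {'retention_rate'}
```

Running a check like this in CI alongside the dashboard definition would catch such mismatches before the dashboard is deployed.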

[Insert Community Dashboard Workshop Exercise: instructions for a hands‑on session to build a customised dashboard from sample data sources]

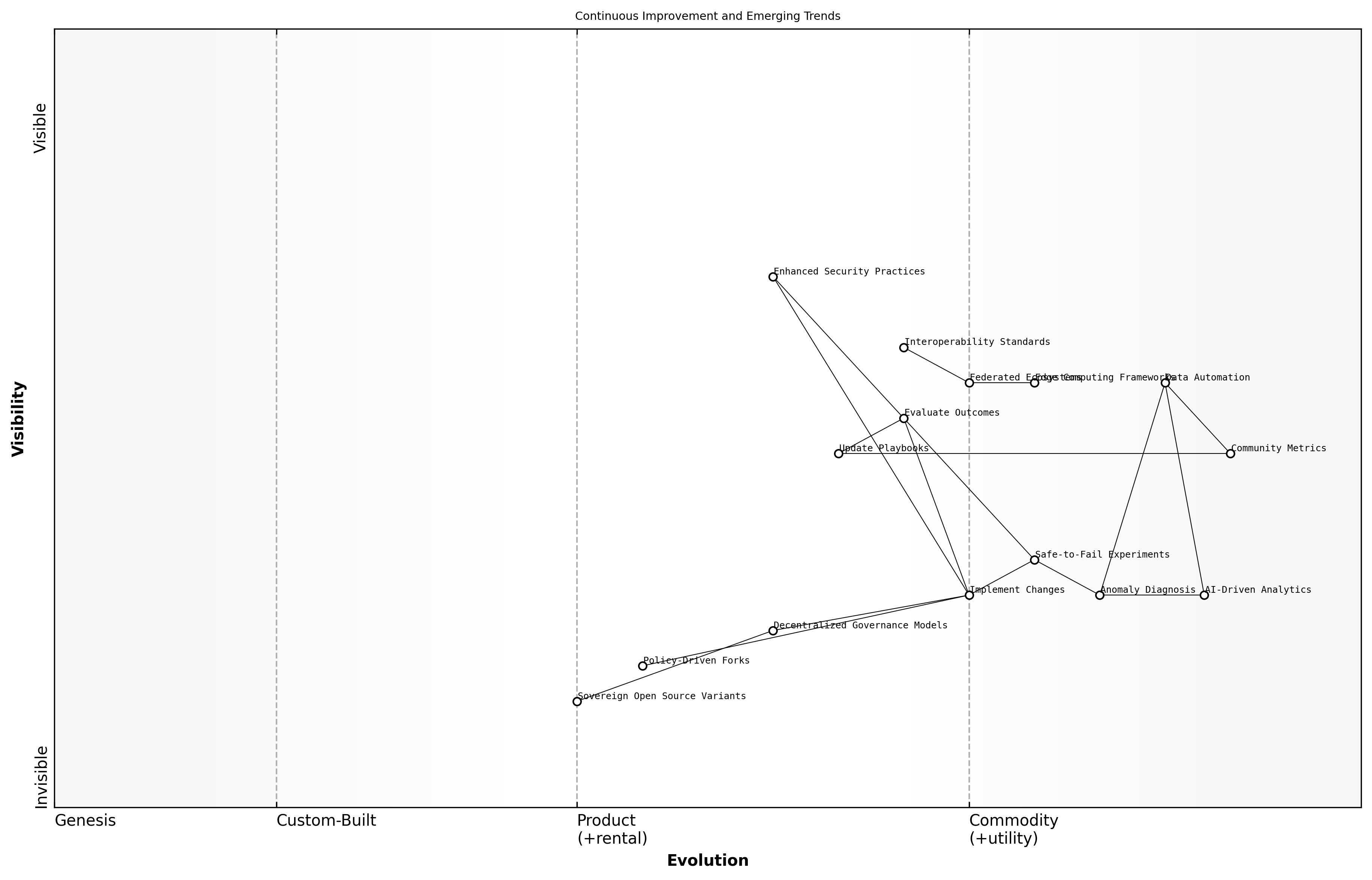

#### <a id="continuous-iteration-based-on-data"></a>Continuous iteration based on data

In high‑impact open source communities, continuous iteration based on data is the mechanism that turns raw metrics into targeted improvements. By establishing tight feedback loops between measurement and action, public sector projects can respond rapidly to emerging issues, adapt governance practices and enhance contributor experiences in line with strategic objectives.

- Define and prioritise key community health metrics aligned with user needs and strategic plays

- Automate data collection and dashboard updates to ensure real‑time visibility

- Diagnose trends and anomalies against leading and lagging indicators

- Design safe‑to‑fail experiments or process adjustments

- Implement changes in documentation, tooling or governance workflows

- Measure impact and refine hypotheses for the next cycle

Dashboards serve not only as reporting tools but as living instruments for driving iteration. When a metric crosses a predefined threshold—such as average merge time exceeding 48 hours or bus factor falling below 25 percent—teams can trigger reviews, convene working groups and allocate resources to root‑cause analysis.

```yaml
metrics:
  merge_time_hours:
    threshold: 48
    alert: warning
  bus_factor:
    threshold: 25
    alert: critical
actions:
  - metric: merge_time_hours
    on_alert: convene_triage_meeting
  - metric: bus_factor
    on_alert: recruit_backup_maintainers
```
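The threshold‑and‑action configuration can be evaluated with a few lines of Python. The YAML does not say whether an alert fires when a value rises above or falls below its threshold. This sketch therefore adds a hypothetical `direction` field that follows the prose above: merge time alerts when it exceeds 48 hours, and bus factor alerts when it falls below 25. The sketch returns action names rather than executing them; connecting them to real notifications is left to the project's own tooling.

```python
from operator import gt, lt

# Mirrors the YAML above. "direction" is a hypothetical field added by this
# sketch; the values follow the prose (merge time above 48, bus factor below 25).
CONFIG = {
    "metrics": {
        "merge_time_hours": {"threshold": 48, "alert": "warning", "direction": "above"},
        "bus_factor": {"threshold": 25, "alert": "critical", "direction": "below"},
    },
    "actions": [
        {"metric": "merge_time_hours", "on_alert": "convene_triage_meeting"},
        {"metric": "bus_factor", "on_alert": "recruit_backup_maintainers"},
    ],
}

COMPARE = {"above": gt, "below": lt}


def triggered_actions(config: dict, observed: dict[str, float]) -> list[tuple[str, str, str]]:
    """Return (metric, severity, action) for each metric that breaches its threshold."""
    action_for = {a["metric"]: a["on_alert"] for a in config["actions"]}
    fired = []
    for name, rule in config["metrics"].items():
        value = observed.get(name)
        if value is None:
            continue  # metric not collected this cycle
        if COMPARE[rule["direction"]](value, rule["threshold"]):
            fired.append((name, rule["alert"], action_for.get(name, "no_action_defined")))
    return fired


# Usage: merge time breaches its threshold, bus factor does not
print(triggered_actions(CONFIG, {"merge_time_hours": 60.5, "bus_factor": 31}))
# [('merge_time_hours', 'warning', 'convene_triage_meeting')]
```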

[Insert Iteration Cycle Diagram: a circular flowchart illustrating metric collection, diagnosis, experiment design, implementation, evaluation and adjustment]

Experimentation can target diverse aspects of community operations: updating CONTRIBUTING.md to reduce first‑time merge latency, tweaking CI tooling to surface test failures earlier, or revising governance charters to streamline decision escalation. Each change is framed as a hypothesis, tested in a controlled manner and evaluated with both quantitative and qualitative feedback.
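Framing a change as a hypothesis means stating the expected improvement before measuring it. An example hypothesis is "the revised CONTRIBUTING.md will cut median first‑time merge latency by at least 20 percent". The Python sketch below checks a hypothesis of that kind against before‑and‑after samples. The sample figures are invented, and the check is purely descriptive. With small samples, a proper statistical test or a longer observation window would be needed before drawing firm conclusions.

```python
from statistics import median


def evaluate_hypothesis(before: list[float], after: list[float],
                        target_reduction: float) -> dict:
    """Compare median first-time merge latency (hours) before and after a change.

    target_reduction is the predicted fractional improvement, e.g. 0.2 for
    "at least 20% faster". Descriptive only: not a significance test.
    """
    base, new = median(before), median(after)
    if base == 0:
        raise ValueError("baseline median is zero; reduction is undefined")
    reduction = (base - new) / base
    return {"median_before": base, "median_after": new,
            "reduction": reduction, "supported": reduction >= target_reduction}


# Invented latencies (hours) for first-time contributors' pull requests
before_change = [72, 96, 50, 120, 80]
after_change = [40, 66, 60, 90, 52]
result = evaluate_hypothesis(before_change, after_change, target_reduction=0.2)
print(result)
# {'median_before': 80, 'median_after': 60, 'reduction': 0.25, 'supported': True}
```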

> "Data‑driven iteration ensures that adjustments are timely and aligned with strategic objectives," says a senior government official.

Regular retrospectives—open to maintainers, contributors and stakeholders—close the loop by reviewing experiment outcomes, sharing lessons learned and updating the dashboard thresholds. Over successive cycles, this approach creates a resilient community culture where continuous improvement is embedded into everyday governance.