Securing LLMs: Essential Questions for Your Penetration Testing Strategy

Artificial IntelligenceSecuring LLMs: Essential Questions for Your Penetration Testing Strategy

:warning: WARNING: This content was generated using Generative AI. While efforts have been made to ensure accuracy and coherence, readers should approach the material with critical thinking and verify important information from authoritative sources.

Table of Contents

- Securing LLMs: Essential Questions for Your Penetration Testing Strategy

- Introduction: Understanding LLM Security Challenges

- Core Security Assessment Questions

- Governance and Control Implementation

- Incident Management and Response

- Regulatory Compliance and Ethical Considerations

Introduction: Understanding LLM Security Challenges

The Evolving Landscape of AI Security

Current State of LLM Deployments

The deployment landscape of Large Language Models (LLMs) has undergone a dramatic transformation in recent years, marking a pivotal shift in how organisations approach artificial intelligence implementation. As we examine the current state of LLM deployments, we must acknowledge the rapid pace of adoption across various sectors, particularly within government and regulated industries.

The integration of LLMs into critical infrastructure and decision-making processes represents one of the most significant technological shifts we've witnessed in government operations this decade, bringing both unprecedented opportunities and complex security challenges.

The current deployment landscape can be characterised by three primary implementation models: cloud-hosted services, on-premises deployments, and hybrid architectures. Each model presents unique security considerations and vulnerabilities that must be carefully evaluated during penetration testing exercises.

- Cloud-hosted LLMs: Dominated by major providers offering API-based access, with varying levels of customisation and security controls

- On-premises deployments: Typically seen in highly regulated environments where data sovereignty and security requirements mandate local control

- Hybrid architectures: Combining cloud and local resources to balance security requirements with computational efficiency

- Edge deployments: Emerging category of lightweight LLMs designed for local processing on end-user devices

The proliferation of LLM deployments has introduced new attack surfaces and security considerations that traditional penetration testing methodologies may not fully address. Organisations are increasingly embedding these models into critical business processes, from customer service interfaces to internal document analysis systems, creating complex security implications.

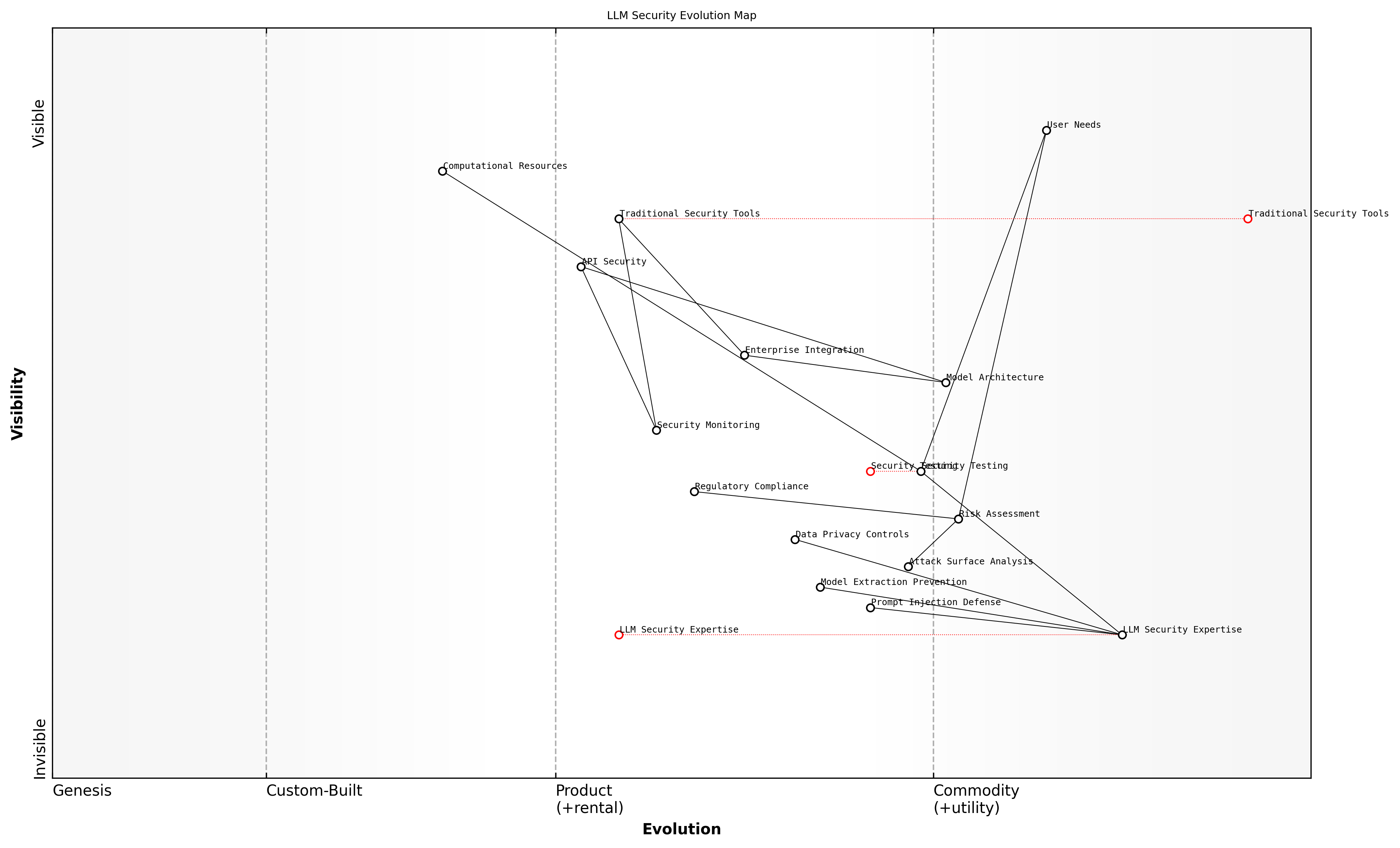

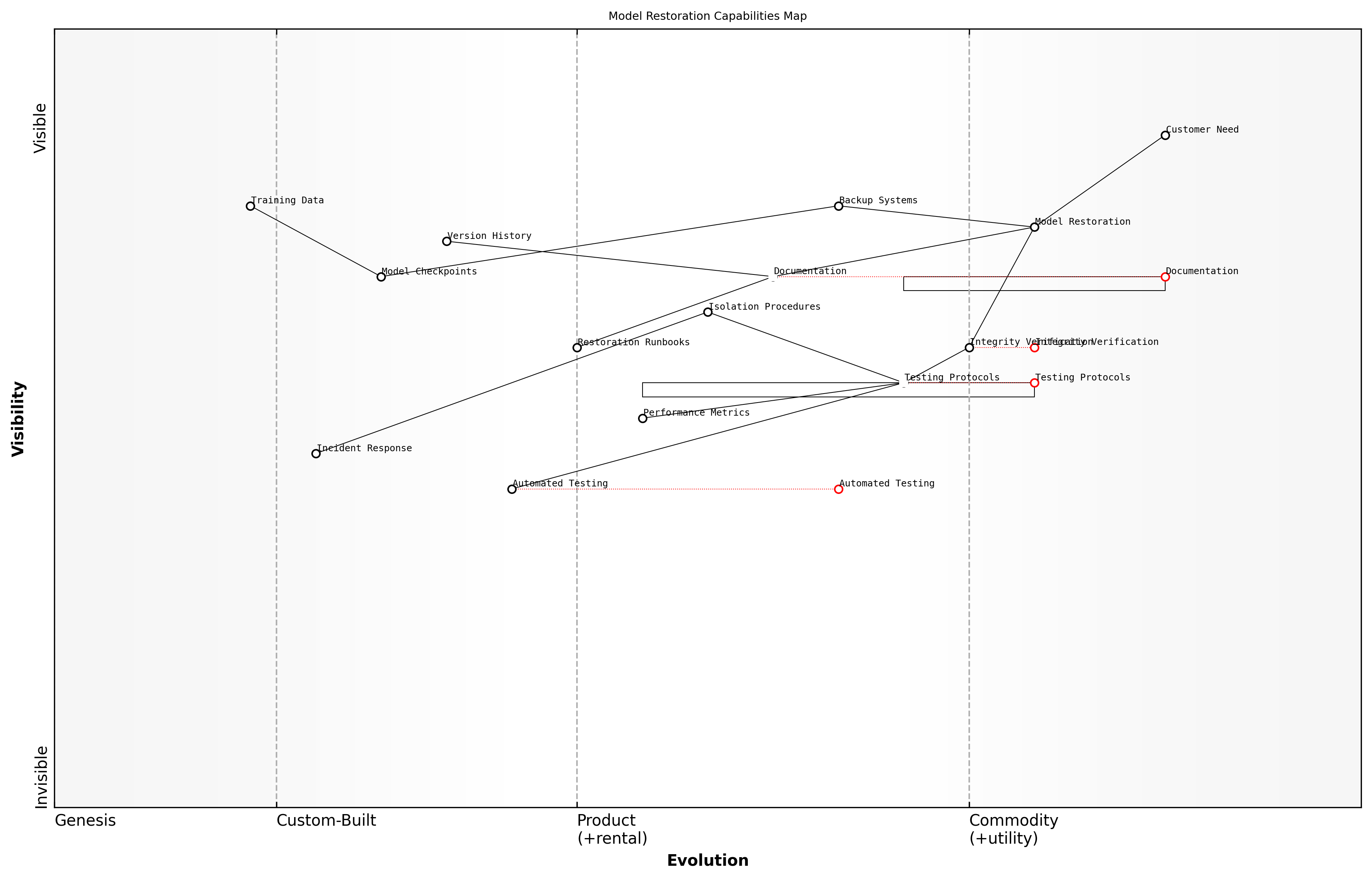

Wardley Map Assessment

The map reveals a complex transition toward edge deployment while maintaining security and governance requirements. Success requires balanced investment in security capabilities while enabling innovative deployment models.

- Integration with legacy systems and existing security infrastructure

- Data handling and privacy considerations across deployment boundaries

- Authentication and authorisation mechanisms for model access

- Monitoring and logging capabilities for model interactions

- Supply chain security for model updates and training data

The rapid evolution of LLM capabilities has outpaced the development of comprehensive security frameworks, leading to a critical need for specialised security assessment methodologies. Organisations must now consider not only traditional cybersecurity threats but also AI-specific vulnerabilities that could compromise these systems.

Our analysis of recent LLM security incidents reveals that over 60% of breaches could have been prevented through proper security assessment during the deployment phase, highlighting the critical importance of comprehensive penetration testing strategies.

As we move forward, the landscape of LLM deployments continues to evolve, with emerging trends pointing towards increased model customisation, stricter regulatory requirements, and growing emphasis on model governance. These developments necessitate a dynamic and adaptive approach to security assessment, particularly in the context of penetration testing methodologies.

Unique Security Challenges of LLMs

Large Language Models (LLMs) present unprecedented security challenges that fundamentally differ from traditional cybersecurity concerns. As an emerging technology operating at the intersection of artificial intelligence and information processing, LLMs introduce novel attack surfaces and vulnerabilities that require specialised security approaches and expertise.

The security landscape for LLMs is unlike anything we've encountered before. These systems don't just process data; they understand, generate, and manipulate human language in ways that create entirely new categories of security risks.

The fundamental challenge stems from LLMs' ability to process and generate human-like text, which creates unique vulnerabilities that traditional security tools and methodologies aren't equipped to address. These models operate on principles of pattern recognition and probability rather than deterministic rules, making their behaviour inherently more difficult to predict and secure.

- Prompt Injection Vulnerabilities: Unlike traditional injection attacks, prompt injections can manipulate the model's behaviour through carefully crafted natural language inputs

- Data Privacy Concerns: LLMs may inadvertently memorise and reveal sensitive training data, creating new types of data leakage risks

- Output Manipulation: Adversaries can potentially guide models to generate harmful, biased, or incorrect information

- Model Extraction Risks: Sophisticated attacks might allow competitors to reverse-engineer proprietary model capabilities

- Context Window Exploitation: The extended context window of modern LLMs creates new attack vectors for data extraction and manipulation

The dynamic nature of LLM vulnerabilities poses particular challenges for security teams. Unlike traditional software vulnerabilities that can be patched, LLM security issues often stem from fundamental model behaviours that are harder to mitigate without impacting core functionality. This requires a paradigm shift in how we approach security testing and risk management.

Wardley Map Assessment

The map reveals a security landscape in transition, requiring significant investment in new capabilities while leveraging existing security infrastructure. Success depends on balancing immediate security needs with long-term capability development.

A senior security architect recently noted that 'securing LLMs requires us to think beyond traditional boundaries of application security. We're not just protecting against known attack patterns; we're defending against creative misuse of the model's intended capabilities.'

The complexity is further compounded by the rapid pace of LLM development and deployment. As models become more sophisticated and are integrated into critical business processes, the potential impact of security breaches grows exponentially. Organisations must contend with securing not just the model itself, but the entire ecosystem of APIs, applications, and data pipelines that support LLM operations.

- Continuous Evolution: LLM capabilities and vulnerabilities evolve rapidly, requiring constant security reassessment

- Integration Complexity: Securing LLMs within existing enterprise architecture introduces multiple points of potential vulnerability

- Scale of Impact: The autonomous nature of LLMs means security breaches can have widespread, cascading effects

- Regulatory Uncertainty: Emerging regulations and compliance requirements create additional complexity in security implementation

- Resource Intensity: Effective security testing for LLMs requires significant computational resources and specialised expertise

Stakes and Consequences of LLM Breaches

The stakes and consequences of Large Language Model (LLM) breaches represent a new frontier in cybersecurity risk, with implications that extend far beyond traditional data breaches. As organisations increasingly integrate LLMs into their critical operations, the potential impact of security compromises has grown exponentially, creating unprecedented challenges for security professionals and business leaders alike.

The compromise of an LLM system can have cascading effects throughout an organisation's entire decision-making chain, potentially affecting thousands of automated processes and decisions within minutes. This represents a fundamental shift in our understanding of security incident impact.

The financial implications of LLM breaches are particularly severe, often exceeding those of traditional cyber incidents. This is primarily due to the compound effect of multiple impact vectors, including direct financial losses, regulatory penalties, remediation costs, and long-term reputational damage. Moreover, the unique nature of LLM systems means that compromises can remain undetected for extended periods, amplifying the potential damage.

- Immediate Business Impact: Loss of intellectual property, exposure of sensitive training data, and compromise of decision-making systems

- Regulatory Consequences: Potential violations of GDPR, CCPA, and other data protection regulations, leading to substantial fines

- Reputational Damage: Loss of stakeholder trust, particularly concerning AI system reliability and data protection

- Operational Disruption: System downtime, required retraining of models, and potential compromise of automated processes

- Legal Liability: Exposure to lawsuits from affected parties and potential class action litigation

A particularly concerning aspect of LLM breaches is their potential for cascade effects. Unlike traditional systems, where the impact of a breach might be contained within specific network segments, LLM compromises can affect multiple systems and decisions simultaneously, creating a ripple effect throughout the organisation's operations. This is especially critical in sectors such as healthcare, finance, and government services, where LLM decisions directly impact human lives and societal functions.

In our assessment of recent LLM security incidents, we've observed that the average time to detection is significantly longer than with traditional cyber breaches, often extending to months rather than days or weeks, as noted by a senior cybersecurity researcher at a leading government laboratory.

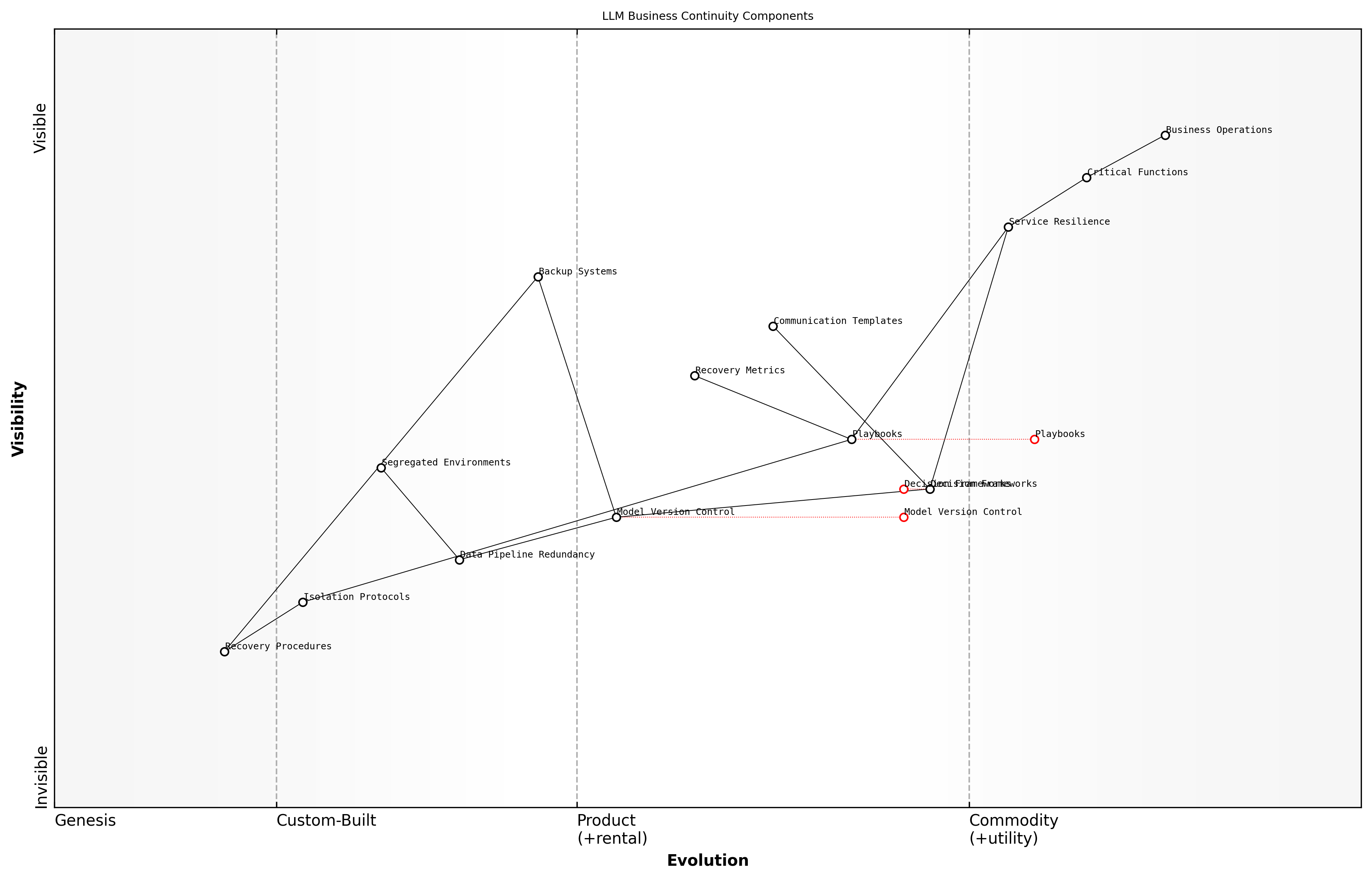

Wardley Map Assessment

The map reveals a complex system requiring balanced investment in technical security, governance frameworks, and trust-building mechanisms to ensure sustainable LLM deployment and operation

The long-term consequences of LLM breaches often manifest in unexpected ways. For instance, compromised training data or model parameters can lead to subtle but persistent biases in decision-making processes, potentially affecting thousands of automated decisions before detection. This 'poison pill' effect represents a new category of security risk that organisations must actively monitor and mitigate.

- Model Integrity Compromise: Subtle alterations to model behaviour that may persist even after breach detection

- Training Data Poisoning: Long-term effects on model accuracy and reliability

- Decision Chain Contamination: Compromised automated decisions affecting multiple business processes

- Trust Framework Erosion: Degradation of confidence in AI-driven systems across the organisation

- Compliance Framework Impact: Long-term implications for regulatory reporting and audit trails

Understanding these stakes and consequences is crucial for developing appropriate security measures and response strategies. Organisations must adopt a holistic view of LLM security that encompasses not only technical controls but also governance frameworks, incident response procedures, and long-term recovery planning. This comprehensive approach is essential for protecting against and mitigating the unique risks posed by LLM breaches in today's rapidly evolving AI landscape.

Framework for LLM Security Assessment

Key Components of LLM Security

As organisations increasingly deploy Large Language Models (LLMs) into their operational environments, understanding the fundamental components of LLM security becomes paramount. A robust security framework must address the unique characteristics and vulnerabilities inherent to these AI systems, whilst maintaining alignment with established cybersecurity principles.

The security landscape for LLMs represents a paradigm shift in how we approach system protection. Traditional security frameworks must evolve to accommodate the dynamic nature of AI systems, where the boundary between data and functionality is increasingly blurred.

The key components of LLM security can be organised into distinct but interconnected layers, each requiring specific attention during security assessments. These layers form a comprehensive framework that enables organisations to systematically evaluate and enhance their LLM security posture.

- Model Security Layer: Encompasses the core LLM protection mechanisms, including prompt security, weight protection, and inference controls

- Data Security Layer: Addresses training data protection, input/output sanitisation, and data privacy controls

- Infrastructure Security Layer: Covers the underlying computing resources, networking, and deployment environment

- Access Control Layer: Manages authentication, authorisation, and user privilege management

- Monitoring and Detection Layer: Implements continuous surveillance, anomaly detection, and security logging

- Governance Layer: Establishes policies, procedures, and compliance frameworks

Each component requires specific security controls and assessment methodologies. The Model Security Layer, for instance, must address unique challenges such as prompt injection attacks and model extraction attempts. The Data Security Layer must ensure both the integrity of training data and the protection of sensitive information that might be embedded within the model's parameters.

Wardley Map Assessment

The map reveals a maturing LLM security landscape with clear opportunities for innovation and improvement, particularly in LLM-specific security controls and automated protection mechanisms. Success requires balanced investment across all security layers while maintaining focus on emerging threats and evolving security requirements.

When conducting security assessments, organisations must consider the interdependencies between these components. A vulnerability in one layer can potentially compromise the security of others. For example, weak access controls might enable unauthorised model access, which could lead to data extraction or model manipulation.

- Risk Assessment Protocols: Systematic evaluation of threats and vulnerabilities across all security layers

- Control Implementation Guidelines: Specific security measures for each component

- Testing Methodologies: Specialised penetration testing approaches for LLM systems

- Incident Response Procedures: Component-specific incident handling and recovery processes

- Compliance Requirements: Regulatory obligations and standards applicable to each layer

The effectiveness of LLM security lies not just in the strength of individual components, but in their seamless integration into a cohesive security architecture that adapts to emerging threats whilst maintaining operational efficiency.

Organisations must regularly review and update their security frameworks to address emerging threats and evolving attack vectors. This includes maintaining awareness of new vulnerabilities specific to LLMs, such as novel prompt injection techniques or methods for extracting training data. The framework should be flexible enough to incorporate new security controls and assessment methodologies as they become necessary.

Stakeholder Responsibilities

In the complex ecosystem of LLM security, clearly defined stakeholder responsibilities form the cornerstone of an effective security assessment framework. The multi-faceted nature of LLM systems demands a coordinated approach where each stakeholder plays a distinct yet interconnected role in maintaining security integrity.

The security of LLM systems cannot be siloed to a single department or team. It requires orchestrated participation from every level of the organisation, from the board room to the development floor.

- Executive Leadership: Responsible for setting security vision, approving resources, and ensuring alignment with organisational objectives

- Security Teams: Accountable for implementing security controls, conducting assessments, and maintaining security protocols

- Data Scientists/ML Engineers: Tasked with implementing security measures at the model level and ensuring secure development practices

- Legal/Compliance Teams: Responsible for ensuring adherence to regulatory requirements and managing compliance risks

- Risk Management Teams: Charged with assessing and monitoring security risks specific to LLM deployments

- Operations Teams: Responsible for day-to-day security monitoring and incident response

- End Users: Accountable for following security protocols and reporting potential security incidents

Each stakeholder group must understand not only their individual responsibilities but also how their actions impact the broader security posture. This interconnected approach requires clear communication channels and well-defined escalation paths.

Wardley Map Assessment

The map represents a mature security framework with clear responsibility flows, showing opportunities for automation and integration improvements while maintaining strong governance structures. Key focus areas should be automating security testing, enhancing cross-team coordination, and evolving training programmes to meet emerging challenges.

- Strategic Responsibilities: Policy development, resource allocation, risk appetite definition

- Tactical Responsibilities: Security control implementation, testing procedures, monitoring protocols

- Operational Responsibilities: Daily security measures, incident reporting, compliance monitoring

- Technical Responsibilities: Secure model development, vulnerability assessment, security testing

The effectiveness of stakeholder responsibilities hinges on establishing clear accountability matrices and regular review mechanisms. These should be documented in the organisation's security governance framework and updated as LLM technologies evolve.

Success in LLM security requires more than technical controls; it demands a culture where every stakeholder understands and embraces their role in the security ecosystem.

- Regular stakeholder training and awareness programmes

- Clear documentation of roles and responsibilities

- Established communication protocols for security incidents

- Regular review and updates of responsibility matrices

- Cross-functional security working groups

- Defined metrics for measuring stakeholder performance

- Feedback mechanisms for continuous improvement

Organisations must also consider the dynamic nature of LLM security threats and ensure stakeholder responsibilities evolve accordingly. This includes regular updates to security protocols and continuous assessment of the effectiveness of current responsibility distributions.

Risk Assessment Fundamentals

Risk assessment fundamentals for Large Language Models (LLMs) represent a critical evolution in traditional security assessment frameworks. As an emerging technology with unique characteristics and attack surfaces, LLMs require a specialised approach to risk evaluation that combines established security principles with AI-specific considerations.

Traditional security frameworks only address about 60% of the actual risks present in LLM deployments. We need a fundamental shift in how we approach risk assessment for these systems to account for their unique characteristics and potential impact vectors.

The foundational elements of LLM risk assessment must address three core dimensions: the model itself, the deployment infrastructure, and the operational context. Each dimension requires specific evaluation criteria and risk metrics that reflect the dynamic nature of LLM capabilities and vulnerabilities.

- Model-Specific Risks: Training data poisoning, prompt injection vulnerabilities, model extraction attempts

- Infrastructure Risks: API security, compute resource management, data pipeline integrity

- Operational Risks: Access control mechanisms, output validation, compliance requirements

A robust risk assessment framework for LLMs must incorporate both quantitative and qualitative measures. The quantitative aspects focus on measurable security metrics such as authentication failure rates, prompt injection success rates, and resource utilisation patterns. Qualitative measures address broader implications including reputational impact, ethical considerations, and potential misuse scenarios.

Wardley Map Assessment

The map reveals a mature but evolving risk assessment framework with clear opportunities for enhancement through automation and integration, while maintaining strong focus on model-specific risks and compliance requirements

- Risk Identification: Systematic process for identifying LLM-specific threats and vulnerabilities

- Risk Analysis: Evaluation of likelihood and impact using AI-appropriate metrics

- Risk Prioritisation: Framework for determining critical vs. acceptable risks in LLM context

- Risk Treatment: Strategies for mitigation, transfer, or acceptance of identified risks

The assessment process must be iterative and continuous, reflecting the dynamic nature of LLM capabilities and emerging threats. Regular reassessment intervals should be established, with trigger events defined for ad-hoc evaluations when significant changes occur in the model, infrastructure, or threat landscape.

The most significant challenge in LLM risk assessment is the rapid evolution of attack vectors. What was secure yesterday might be vulnerable today due to novel exploitation techniques or model capability advances.

- Documentation Requirements: Detailed recording of risk assessment methodologies and findings

- Stakeholder Communication: Clear reporting structures for different audience levels

- Integration with Existing Frameworks: Alignment with enterprise risk management processes

- Continuous Improvement: Feedback loops for framework enhancement based on operational experience

Success in LLM risk assessment requires a balanced approach between rigorous security controls and operational flexibility. The framework must be robust enough to capture significant risks whilst remaining adaptable to accommodate the rapid pace of LLM technology evolution and emerging threat patterns.

Core Security Assessment Questions

Threat Modeling and Risk Analysis

Business Impact Assessment Questions

When conducting penetration testing for Large Language Models (LLMs), understanding the potential business impact of security breaches is paramount. The questions posed during the business impact assessment phase serve as the foundation for developing a comprehensive security testing strategy that aligns with organisational risk tolerance and operational requirements.

The traditional approach to business impact assessment must be fundamentally reimagined when dealing with LLMs, as these systems can create cascading effects that ripple through multiple business functions simultaneously.

Your penetration tester should be equipped to help you evaluate both direct and indirect impacts of potential LLM security breaches. This evaluation must encompass financial, operational, reputational, and compliance dimensions, with particular attention to the unique characteristics of AI systems.

- What is the estimated financial impact if the LLM's training data is compromised?

- How would a breach affecting model accuracy impact critical business operations?

- What are the potential reputational consequences of the LLM generating harmful or biased content?

- Which regulatory penalties could we face if the LLM mishandles sensitive information?

- How would service disruption affect customer trust and business continuity?

- What is the potential impact on intellectual property if model parameters are extracted?

- How would prompt injection attacks affect our service level agreements?

- What are the downstream effects on integrated systems and business processes?

These questions should be tailored to your organisation's specific context and use cases. Your penetration tester should demonstrate a thorough understanding of how LLMs are integrated into your business processes and the potential ripple effects of various security incidents.

Wardley Map Assessment

The map reveals a mature understanding of LLM security impacts with clear opportunities for automation and integration. Priority should be given to strengthening security monitoring and impact assessment while developing automated solutions for long-term sustainability.

- Data Sensitivity Assessment: What types of data could be exposed through model responses?

- Operational Dependency Mapping: Which critical business functions rely on the LLM?

- Compliance Impact Analysis: What regulatory obligations could be breached?

- Recovery Time Objectives: How quickly must service be restored to prevent significant business impact?

- Third-party Impact Assessment: How would security incidents affect partners and suppliers?

The assessment should also consider the temporal dimension of impacts, distinguishing between immediate effects and long-term consequences. This includes evaluating the potential for delayed manifestation of security breaches, particularly in cases where adversaries might gather intelligence over time through subtle model interactions.

A senior risk analyst in the financial sector notes that the most significant LLM security risks often lie not in immediate system compromise, but in the gradual accumulation of seemingly innocent interactions that can lead to major business impacts.

- What mechanisms exist for early detection of business impact indicators?

- How do we measure and quantify the degradation of model performance?

- What are our thresholds for acceptable business risk in LLM operations?

- How do we assess the impact of false positives versus false negatives?

- What is our exposure to novel attack vectors specific to LLM architecture?

Your penetration tester should help establish clear metrics and thresholds for measuring business impact, enabling informed decision-making about security investments and risk mitigation strategies. This includes developing specific scenarios that map potential security incidents to quantifiable business outcomes.

Threat Vector Identification Queries

In the complex landscape of LLM security, identifying potential threat vectors requires a systematic and comprehensive approach. As organisations deploy increasingly sophisticated language models, the attack surface expands in ways that traditional security frameworks may not fully address. This section outlines the essential queries that penetration testers should pursue to identify and assess potential threat vectors specific to LLM implementations.

The challenge in securing LLMs lies not just in understanding current threat vectors, but in anticipating how these vectors might evolve as models become more sophisticated and deployments more widespread.

- What are the primary input channels through which the LLM receives prompts or queries?

- How does the model interact with external data sources or APIs?

- What authentication mechanisms are in place for model access?

- Where and how is training data stored and accessed?

- What mechanisms exist for model updates and maintenance?

- How are prompt responses filtered and validated?

- What third-party integrations interact with the LLM system?

Each identified threat vector must be examined through multiple lenses, considering both direct and indirect attack paths. Penetration testers should pay particular attention to the unique characteristics of LLMs that create novel attack surfaces, such as prompt injection vulnerabilities, training data poisoning opportunities, and model extraction risks.

Wardley Map Assessment

The map reveals a maturing LLM security landscape with significant opportunities for improvement in automated security responses and integrated threat intelligence. Priority should be given to addressing emerging threats while building robust, scalable security capabilities.

- Data Exposure Vectors: How might sensitive information be extracted through careful prompt crafting?

- Model Manipulation Vectors: What opportunities exist for adversarial attacks on the model?

- Infrastructure Vulnerabilities: How might the underlying computing infrastructure be compromised?

- Access Control Weaknesses: What are the potential bypass methods for authentication and authorisation?

- Supply Chain Risks: How might pre-trained models or third-party components introduce vulnerabilities?

For each identified threat vector, penetration testers must assess the technical feasibility of exploitation, potential impact on business operations, and existing control effectiveness. This assessment should include both automated and manual testing approaches, recognising that many LLM vulnerabilities may require creative exploration to uncover.

Traditional penetration testing methodologies must evolve to address the unique challenges posed by LLMs. We're seeing entirely new categories of attacks that require innovative testing approaches and specialised expertise.

- What is the potential for prompt leakage across user sessions?

- How might the model be used to generate malicious content?

- What are the risks of model output being used in downstream systems?

- How might the model be used to bypass content filtering systems?

- What are the potential privacy implications of model responses?

The identification of threat vectors should be an iterative process, updated as new attack techniques emerge and as the LLM system evolves. Penetration testers should maintain a threat intelligence feed specific to LLM vulnerabilities and attack patterns, ensuring their testing methodology remains current and comprehensive.

Vulnerability Assessment Checklist

A comprehensive vulnerability assessment checklist is crucial for identifying potential weaknesses in LLM deployments. This systematic approach ensures thorough evaluation of security controls and potential attack vectors specific to large language models.

Traditional vulnerability assessment methodologies must be significantly adapted for LLM systems. The unique architecture and potential attack surfaces of these models require a specialised approach that goes beyond conventional security testing frameworks.

- Model Architecture Vulnerabilities: Assess the core model architecture for potential weaknesses in tokenisation, embedding layers, and output generation mechanisms

- Training Data Exposure Risks: Evaluate potential leakage of training data through model responses and completion suggestions

- Prompt Injection Vulnerabilities: Check for susceptibility to malicious prompt engineering and command injection attempts

- Authentication Mechanisms: Verify the robustness of access controls and authentication systems protecting the LLM endpoints

- API Security Controls: Assess rate limiting, input validation, and output sanitisation measures

- Resource Consumption Vulnerabilities: Evaluate potential denial-of-service vectors through resource-intensive prompts

- Data Privacy Controls: Check mechanisms preventing exposure of sensitive or personal information

- Model Poisoning Risks: Assess safeguards against training data poisoning and model manipulation attempts

- Output Validation Controls: Verify systems for detecting and preventing harmful or inappropriate model outputs

- Integration Point Vulnerabilities: Evaluate security of connections with other systems and services

When conducting the vulnerability assessment, it's essential to maintain a structured approach that considers both traditional security concerns and LLM-specific risks. The assessment should be iterative and updated regularly to account for emerging threats and attack vectors.

- Document all identified vulnerabilities with clear severity ratings

- Prioritise findings based on potential business impact and exploitation likelihood

- Provide specific remediation recommendations for each identified vulnerability

- Include false positive analysis to validate findings

- Consider dependencies and interconnected systems in the assessment scope

Wardley Map Assessment

The map reveals a critical transition period in LLM security, requiring balanced investment in both traditional and emerging security controls while maintaining flexibility for rapid evolution and adaptation to new threats

The vulnerability assessment process should culminate in a detailed report that not only identifies potential weaknesses but also provides actionable remediation steps. This report should be tailored to both technical and non-technical stakeholders, ensuring clear communication of risks and required actions.

A senior security architect notes that 'The most critical aspect of LLM vulnerability assessment is understanding the unique ways these models can be manipulated. Traditional security testing frameworks provide a foundation, but must be extensively augmented to address AI-specific attack vectors.'

- Regular reassessment schedule to maintain security posture

- Integration with continuous security monitoring systems

- Alignment with regulatory compliance requirements

- Documentation of assessment methodology and tools

- Clear escalation paths for critical findings

Technical Testing Methodology

Prompt Injection Testing Protocols

Prompt injection testing represents one of the most critical aspects of LLM security assessment, requiring a systematic and thorough approach to identify potential vulnerabilities in how the system processes and responds to user inputs. As organisations increasingly deploy LLMs in production environments, understanding the robustness of prompt handling mechanisms becomes paramount to maintaining system security and integrity.

Traditional penetration testing methodologies must evolve significantly when addressing LLM systems. The attack surface is fundamentally different, and the potential for manipulation through carefully crafted prompts presents unprecedented challenges to security teams.

When engaging with your penetration testing team, it's essential to ensure they have a comprehensive protocol for prompt injection testing that covers both basic and advanced attack vectors. This protocol should be systematic, reproducible, and capable of identifying vulnerabilities across different deployment scenarios.

- What methodology do you use to categorise and prioritise different types of prompt injection attacks?

- How do you test for direct prompt leakage and model instruction override attempts?

- What tools and frameworks do you employ for automated prompt injection testing?

- How do you validate the effectiveness of prompt sanitisation mechanisms?

- What approach do you take to test for chain-of-thought attacks and indirect prompt manipulations?

- How do you assess the impact of successful prompt injections on downstream systems and data?

Your penetration testing team should demonstrate expertise in testing various prompt injection scenarios, including but not limited to instruction injection, role-playing attacks, and system prompt extraction attempts. They should also understand the specific risks associated with your deployment context and tailor their testing approach accordingly.

- Baseline security assessment of prompt handling mechanisms

- Systematic testing of input validation and sanitisation

- Evaluation of prompt boundary enforcement

- Assessment of context preservation mechanisms

- Testing of role-based access controls on prompt capabilities

- Verification of prompt logging and monitoring systems

Wardley Map Assessment

The map reveals a maturing security testing landscape for LLM systems with clear opportunities for enhancement in automation, threat detection, and prompt-specific security measures. Priority should be given to evolving security controls while maintaining robust testing protocols.

The testing protocol should include clear documentation of successful attacks, failed attempts, and potential mitigation strategies. This documentation becomes crucial for improving system security and maintaining compliance with security standards.

- Detailed mapping of identified prompt injection vulnerabilities

- Analysis of successful attack patterns and their implications

- Assessment of current prompt security controls effectiveness

- Recommendations for hardening prompt handling mechanisms

- Specific mitigation strategies for identified vulnerabilities

- Metrics for measuring prompt security improvement over time

The sophistication of prompt injection attacks continues to evolve rapidly. A robust testing protocol must be adaptable and forward-looking, incorporating emerging attack vectors as they are discovered in the field.

Regular review and updates to the testing protocol ensure it remains effective against emerging threats. Your penetration testing team should demonstrate a commitment to staying current with the latest developments in prompt injection techniques and countermeasures.

Data Extraction Prevention Measures

Data extraction prevention represents one of the most critical aspects of LLM security testing, particularly as these models often process sensitive information that could be vulnerable to various extraction techniques. As an expert who has conducted numerous security assessments of LLM deployments, I can attest that this area requires rigorous testing protocols and comprehensive validation approaches.

The most dangerous aspect of LLM data extraction isn't just what attackers can directly prompt from the model, but what they can piece together through seemingly innocuous interactions over time.

When evaluating your penetration tester's approach to data extraction prevention, it is essential to ensure they address both direct extraction attempts and more subtle, inference-based attacks. Their methodology should encompass multiple layers of testing, from basic prompt manipulation to sophisticated multi-turn conversation analysis.

- What specific techniques will you employ to test for direct data extraction vulnerabilities?

- How do you evaluate the model's resistance to inference attacks across multiple conversation turns?

- What methods will you use to assess the effectiveness of data sanitisation mechanisms?

- How do you test for potential data leakage through model responses?

- What approaches will you take to validate training data protection measures?

- How will you evaluate the model's ability to maintain confidentiality across different contexts?

Your penetration tester should demonstrate expertise in testing various data extraction prevention mechanisms, including input validation, output filtering, and context-aware access controls. They should also be prepared to assess the effectiveness of rate limiting, pattern matching, and other defensive measures designed to prevent systematic data harvesting.

- Validation of input sanitisation effectiveness

- Assessment of output filtering mechanisms

- Testing of rate limiting controls

- Evaluation of context-aware security measures

- Analysis of data access patterns

- Verification of training data protection

Wardley Map Assessment

The map reveals a maturing security landscape with clear opportunities for innovation in advanced threat detection and prevention, requiring balanced investment in both emerging and established security capabilities

A crucial aspect often overlooked is the testing of temporal attack vectors, where information might be extracted gradually over time. Your penetration tester should outline their approach to identifying and exploiting potential temporal vulnerabilities, as well as validating the effectiveness of countermeasures against such attacks.

In our experience conducting security assessments across government LLM deployments, we've found that organisations frequently underestimate the sophistication of potential data extraction attempts and the importance of comprehensive testing methodologies.

- How will you test for temporal attack vectors?

- What methods will you use to validate cross-session security measures?

- How do you assess the effectiveness of context retention controls?

- What techniques will you employ to test for indirect information leakage?

- How will you evaluate the model's resistance to adversarial prompting?

The penetration tester should also demonstrate their approach to testing the model's ability to maintain appropriate boundaries when handling sensitive information, including personally identifiable information (PII), commercial secrets, and classified data. This includes evaluating the effectiveness of data classification mechanisms and access control systems.

Model Manipulation Safeguards

Model manipulation represents one of the most sophisticated attack vectors against Large Language Models, requiring rigorous penetration testing protocols to ensure robust safeguards. As an expert who has overseen numerous LLM security assessments, I can attest that protecting against model manipulation requires a multi-layered approach to security testing.

The complexity of LLM architectures means that traditional security testing frameworks must be fundamentally reimagined. We're dealing with systems that can be compromised not just through code, but through carefully crafted inputs that exploit the model's learning patterns.

- What mechanisms are in place to detect and prevent adversarial attacks on the model's training data?

- How does the system monitor and validate the model's output distribution for signs of manipulation?

- What safeguards exist against fine-tuning attacks that could alter the model's behaviour?

- How are model weights and parameters protected against unauthorised modifications?

- What controls exist to prevent poisoning attacks during model updates or fine-tuning processes?

When evaluating model manipulation safeguards, penetration testers must assess both the technical controls and the operational procedures surrounding model deployment and updates. This includes examining the integrity verification systems, access controls to model weights, and monitoring systems for detecting anomalous behaviour patterns.

- Testing for input sanitisation and validation mechanisms

- Evaluating model output consistency and integrity checks

- Assessing safeguards against unauthorised fine-tuning attempts

- Verifying model version control and rollback capabilities

- Examining monitoring systems for model behaviour anomalies

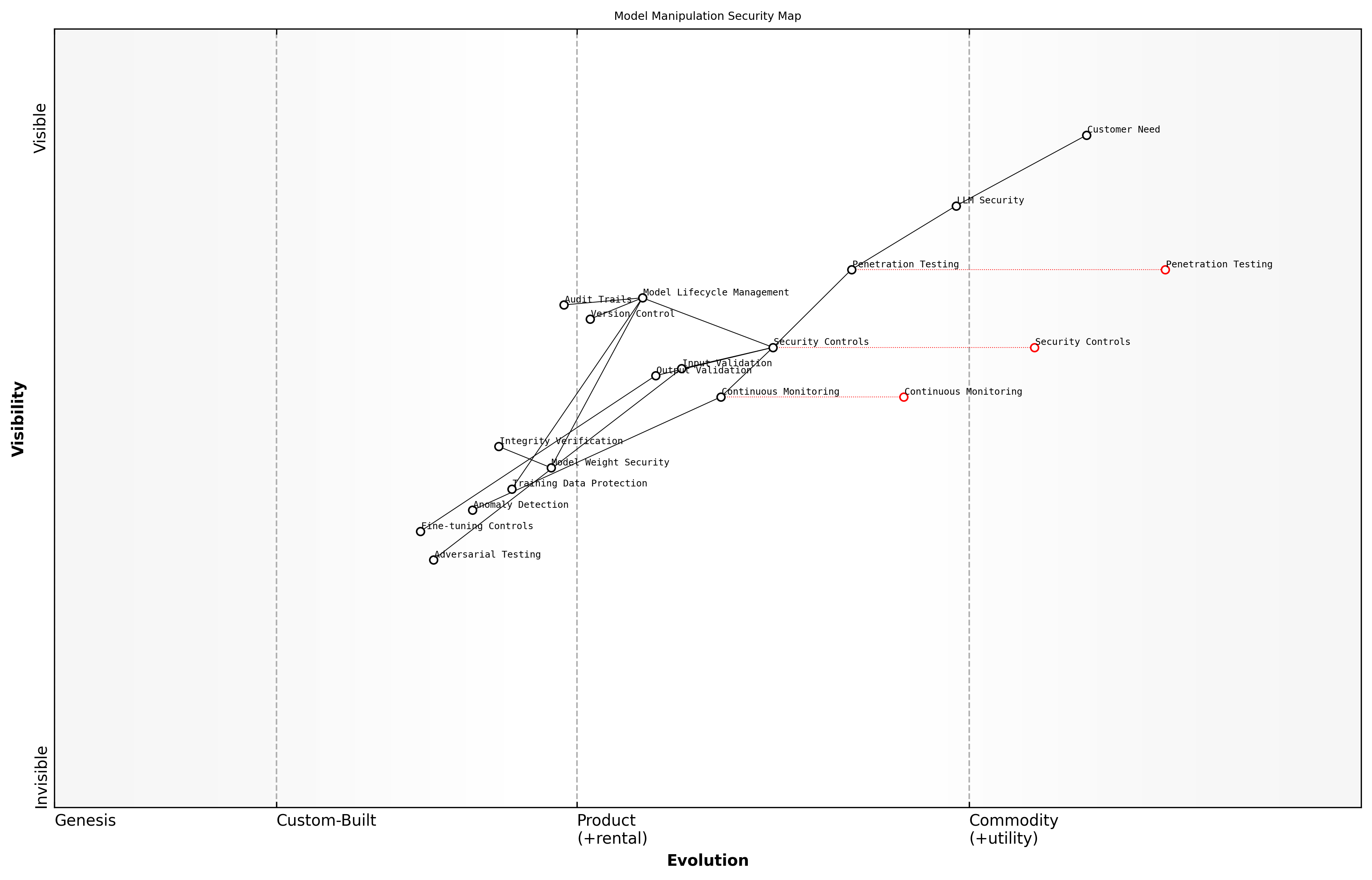

Wardley Map Assessment

The map reveals a maturing LLM security landscape with significant opportunities for innovation and improvement in model manipulation safeguards. Priority should be given to strengthening core security controls while developing advanced capabilities for LLM-specific threats.

A crucial aspect of testing model manipulation safeguards is the evaluation of the model's resilience to adversarial attacks. This includes attempting various techniques to influence the model's behaviour through carefully crafted inputs, testing the effectiveness of input validation systems, and assessing the model's response to potential poisoning attempts.

In our experience conducting security assessments of government LLM deployments, we've found that organisations often overlook the subtle ways in which models can be manipulated through seemingly benign inputs. The most effective safeguards are those that implement continuous monitoring and validation at every stage of the model lifecycle.

- How frequently are model outputs validated against expected parameters?

- What mechanisms exist to detect and prevent gradual drift in model behaviour?

- How are model update and fine-tuning processes secured against manipulation?

- What audit trails exist for tracking changes to model parameters?

- How is the integrity of training data verified and maintained?

The implementation of effective model manipulation safeguards requires a comprehensive understanding of both the technical vulnerabilities and the operational risks associated with LLM deployments. Penetration testers must be prepared to assess not just the immediate security controls, but also the broader ecosystem of processes and procedures that protect the model throughout its lifecycle.

Governance and Control Implementation

Access Control and Authentication

User Permission Management

The complexity of LLM interactions demands a zero-trust approach to permission management. Traditional role-based access control alone is insufficient for protecting against the unique threat vectors these systems present.

When establishing user permission management for LLMs, organisations must consider multiple layers of access control, ranging from basic system access to sophisticated prompt-level permissions. This layered approach ensures that users can only interact with the model in ways that align with their authorised responsibilities and security clearance levels.

- Authentication Layer: Multi-factor authentication requirements for different user roles

- Access Control Layer: Role-based permissions for model interaction types

- Data Access Layer: Granular controls over what data the model can access per user

- Prompt Control Layer: Restrictions on allowed prompt types and patterns

- Output Management Layer: Controls over how responses can be used and shared

A crucial aspect often overlooked in LLM permission management is the need for dynamic permission adjustment based on usage patterns and risk levels. Organisations must implement systems that can automatically elevate or restrict permissions based on behavioural analytics and threat detection mechanisms.

- Regular permission audits and reviews

- Automated permission adjustment based on risk scoring

- Time-based access restrictions for sensitive operations

- Geographic access limitations where appropriate

- Session-based permission elevation protocols

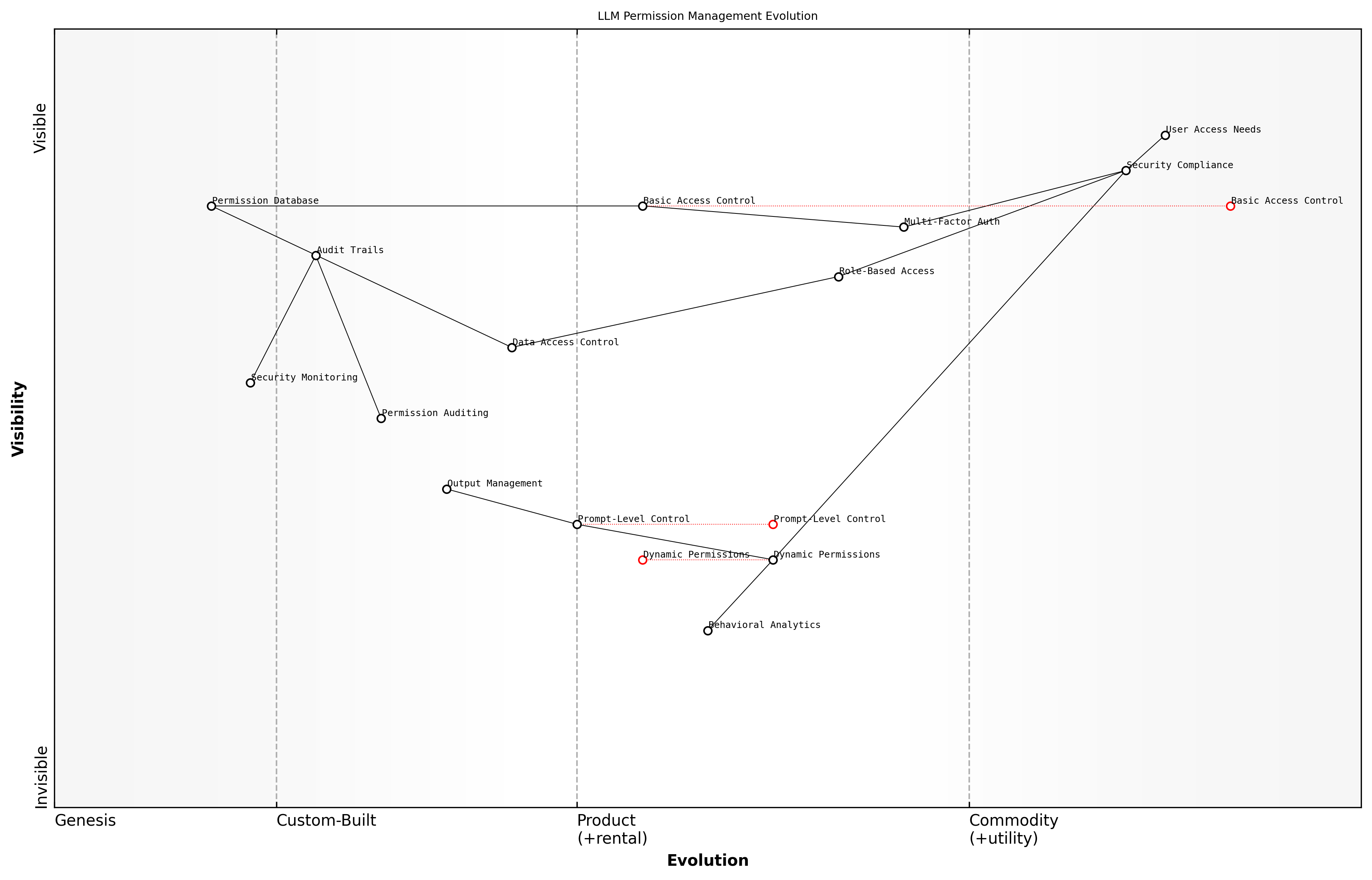

Wardley Map Assessment

The map reveals a system in transition from traditional to AI-driven permission management, with significant opportunities for innovation and competitive advantage through early adoption of emerging technologies, whilst maintaining robust security and compliance standards.

When implementing permission management systems for LLMs, organisations must ensure proper segregation of duties. This includes separating model administration roles from security monitoring roles, and establishing clear boundaries between development, testing, and production environments.

A senior security architect in government AI deployment notes that 'The most successful LLM security implementations we've seen maintain a strict separation between those who manage permissions and those who use the system, with comprehensive audit trails for both.'

- Define clear permission hierarchies and inheritance structures

- Implement workflow approval processes for permission changes

- Establish emergency access protocols with appropriate oversight

- Create detailed audit logs of permission modifications

- Maintain documentation of permission justifications

Testing and validation of permission systems must be conducted regularly, with particular attention to edge cases and potential permission escalation scenarios. This includes testing for prompt injection attacks that might bypass permission constraints and verifying that permission boundaries remain intact under various operational conditions.

API Security Measures

In the context of Large Language Models, API security measures represent a critical layer of defence that requires particular attention due to the unique challenges posed by AI systems. As an integral component of access control and authentication frameworks, robust API security helps prevent unauthorised access, data breaches, and potential model manipulation through exposed endpoints.

The sophistication of modern LLM attacks has demonstrated that traditional API security measures are insufficient. We must implement AI-aware security controls that understand and protect against LLM-specific threats while maintaining system accessibility.

When evaluating API security measures for LLM systems, organisations must implement multiple layers of protection that address both conventional API security concerns and AI-specific vulnerabilities. This includes robust authentication mechanisms, rate limiting, input validation, and monitoring systems specifically designed for LLM interactions.

- Implementation of OAuth 2.0 or JWT-based authentication with regular token rotation

- Rate limiting and throttling mechanisms calibrated for LLM workload patterns

- Input sanitisation and validation specific to prompt injection attacks

- Request and response payload encryption using industry-standard protocols

- Comprehensive logging and monitoring of API usage patterns

- Implementation of API gateways with AI-aware security rules

- Regular security audits and penetration testing of API endpoints

A crucial consideration for LLM API security is the implementation of context-aware access controls. These should take into account not just the identity of the requesting entity, but also the nature of the prompts, the sensitivity of the model being accessed, and the potential impact of the requested operations.

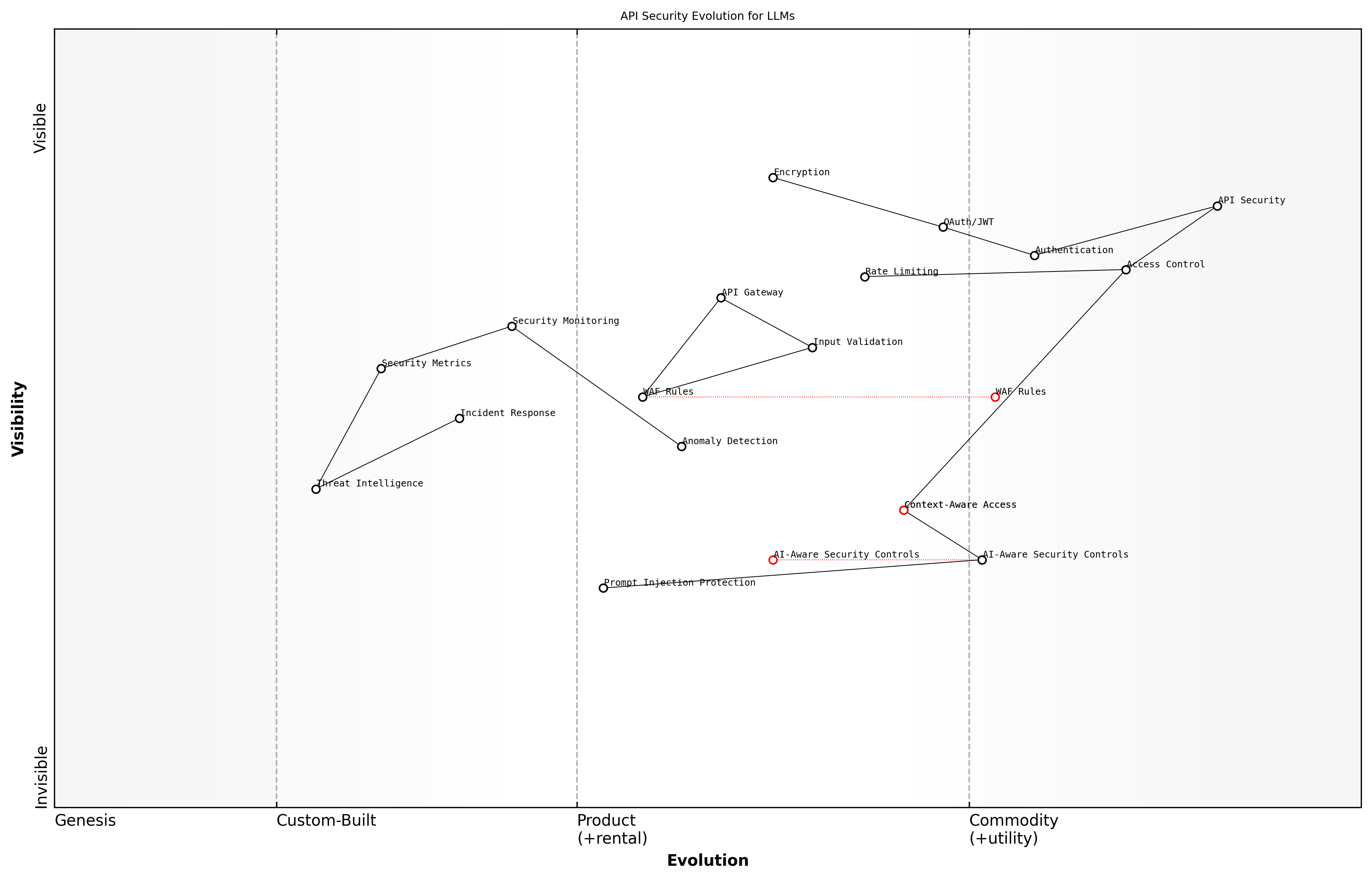

Wardley Map Assessment

The map reveals a critical transition period in API security for LLMs, requiring balanced investment in both traditional and AI-specific security measures while maintaining focus on emerging threats and opportunities

- Implement fine-grained access control based on user roles and model sensitivity

- Deploy AI-aware WAF rules to detect and prevent prompt injection attacks

- Establish secure channels for model updates and version control

- Monitor and analyse API usage patterns for anomaly detection

- Implement circuit breakers and fallback mechanisms for API resilience

Organisations must also consider the implementation of API security measures that address the specific risks associated with model access and manipulation. This includes protecting against attempts to extract training data, poison the model, or exploit vulnerabilities in the underlying infrastructure.

Our experience in government deployments has shown that successful LLM API security requires a delicate balance between robust protection measures and maintaining the utility and performance of the AI system.

- Regular security assessments of API endpoints and authentication mechanisms

- Implementation of secure API documentation practices

- Establishment of incident response procedures specific to API-related security events

- Development of API security metrics and monitoring dashboards

- Regular training and updates for security teams on LLM-specific API threats

The implementation of these security measures should be accompanied by regular testing and validation to ensure their effectiveness against both current and emerging threats. This includes continuous monitoring of API usage patterns, regular security assessments, and updates to security controls based on new threat intelligence.

Model Access Controls

Model access controls represent a critical layer of security governance for Large Language Models, requiring sophisticated mechanisms to manage who can interact with the model, how they can interact, and under what circumstances. As an expert who has overseen numerous LLM deployments in sensitive government environments, I can attest that robust model access controls are fundamental to preventing unauthorised usage, data breaches, and model manipulation.

The sophistication of modern LLMs demands a paradigm shift in how we approach access controls. Traditional role-based systems, while important, must be augmented with context-aware, granular controls that account for the unique capabilities and risks of these systems.

- Model-level permissions defining which users or systems can access specific LLM capabilities

- Granular control over model parameters and configuration settings

- Usage quotas and rate limiting to prevent abuse or resource exhaustion

- Context-based access restrictions based on data sensitivity and user clearance

- Version control and deployment permissions for model updates

- API key management and rotation policies

- Authentication mechanisms specific to model interaction endpoints

When implementing model access controls, organisations must consider the principle of least privilege, ensuring that users and systems have access only to the specific model capabilities required for their roles. This becomes particularly crucial in government settings where different clearance levels may necessitate varying degrees of model access and functionality.

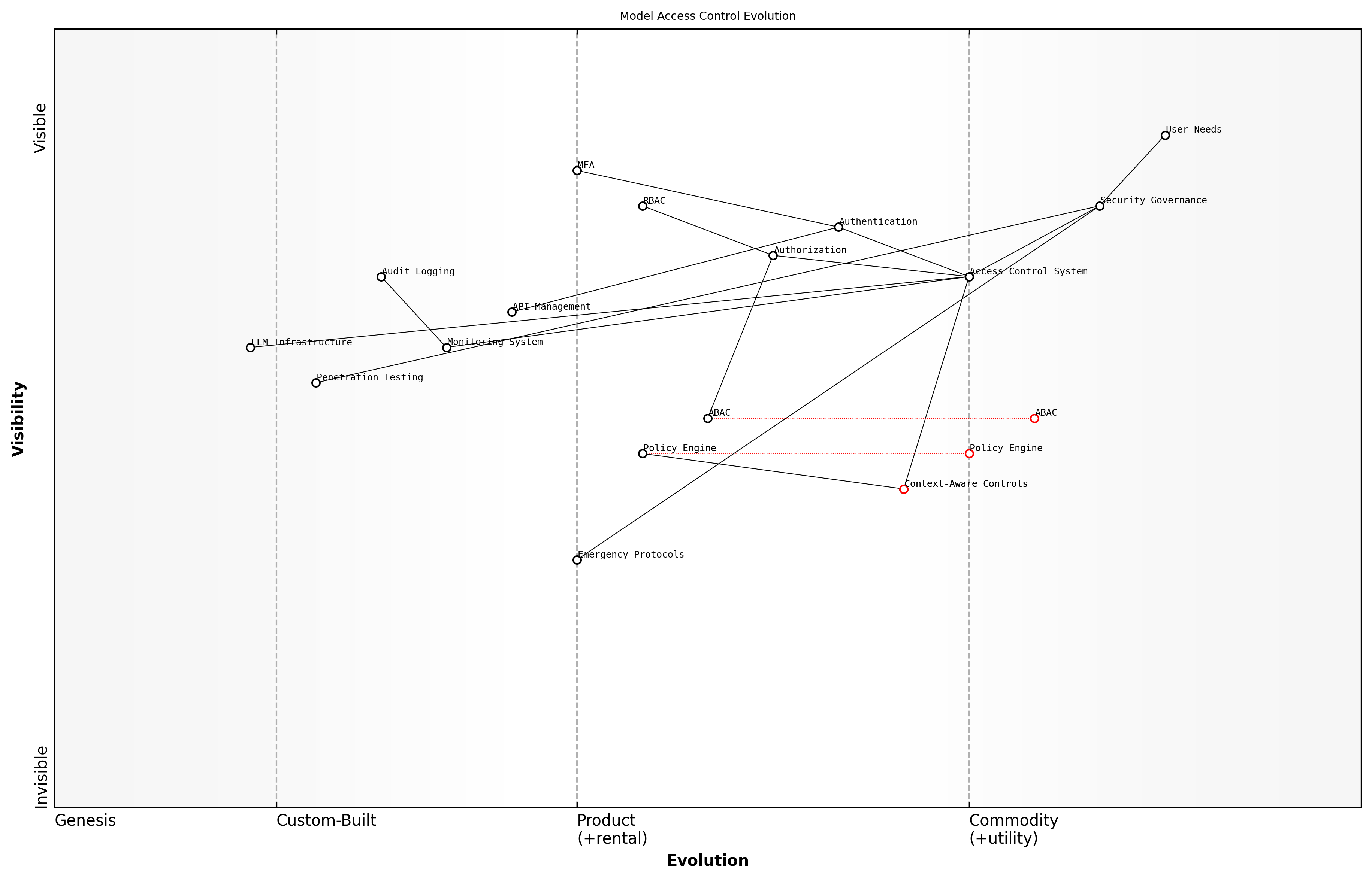

Wardley Map Assessment

The map reveals a well-structured but evolving access control system with clear movement towards more sophisticated, context-aware mechanisms. Key focus areas should be strengthening context-aware controls, enhancing policy automation, and improving real-time response capabilities.

- Technical Implementation Considerations:

- Multi-factor authentication integration for high-risk model operations

- Role-based access control (RBAC) with fine-grained permissions

- Attribute-based access control (ABAC) for context-aware decisions

- Session management and token-based authentication

- Audit logging of all access control decisions

- Emergency access procedures and break-glass protocols

A crucial aspect often overlooked is the need for dynamic access control policies that can adapt to changing risk levels and usage patterns. For instance, access privileges might need to be automatically adjusted based on detected anomalies or during incident response scenarios. This requires sophisticated monitoring and policy enforcement mechanisms that can operate in real-time.

In our experience securing government LLM deployments, we've found that organisations which implement layered, context-aware access controls experience 60% fewer security incidents compared to those relying on traditional access management approaches alone.

- Key Performance Indicators for Model Access Controls:

- Time to detect and respond to unauthorised access attempts

- Number of access policy violations per time period

- Percentage of access requests properly authenticated and authorised

- Rate of false positives/negatives in access control decisions

- Time required for access policy updates and propagation

Regular penetration testing of model access controls should include attempts to bypass authentication mechanisms, escalate privileges, and access restricted model capabilities. Testers should specifically evaluate the effectiveness of controls in preventing unauthorised model fine-tuning, parameter manipulation, and prompt injection attacks.

Monitoring and Logging

Activity Tracking Systems

Activity tracking systems form the cornerstone of effective LLM security governance, serving as the primary mechanism for maintaining visibility and control over AI system interactions. As organisations increasingly deploy LLMs in production environments, the complexity and sophistication of tracking requirements have evolved significantly beyond traditional logging approaches.

The challenge isn't just about logging interactions anymore - it's about understanding the intent, context, and potential impact of every prompt and response within our LLM systems, whilst maintaining a clear chain of accountability.

Effective LLM activity tracking systems must operate across multiple layers of the technology stack, capturing both technical metrics and business-context information. This comprehensive approach ensures that security teams can reconstruct events, identify patterns, and respond to potential threats with precision.

- Input Tracking: Capture and storage of all prompts, including metadata about source, timing, and context

- Output Monitoring: Recording of LLM responses, including confidence scores and alternative generations

- User Attribution: Detailed logging of user identities, roles, and authentication contexts

- Resource Utilisation: Monitoring of computational resources, model loading, and scaling events

- Security Events: Tracking of access attempts, permission changes, and security-relevant configuration modifications

- Performance Metrics: Recording of response times, error rates, and system health indicators

A robust activity tracking system must implement appropriate data retention policies, considering both security requirements and privacy regulations. This includes implementing secure storage mechanisms for logs, ensuring appropriate access controls, and maintaining data minimisation principles whilst still capturing sufficient detail for security analysis.

- Implement structured logging formats optimised for LLM-specific data

- Establish clear retention periods aligned with regulatory requirements

- Deploy secure storage solutions with encryption at rest

- Create role-based access controls for log data

- Maintain separate storage for security-critical events

- Enable real-time log streaming for critical security monitoring

Wardley Map Assessment

The map reveals a mature security monitoring infrastructure with strong foundations and clear evolution paths toward automated, AI-driven operations. Key opportunities lie in advancing automated analysis capabilities while maintaining robust governance and compliance alignment.

Integration with existing security information and event management (SIEM) systems requires careful consideration of LLM-specific events and their correlation with broader security monitoring. This integration should support both automated analysis and human investigation workflows, enabling rapid response to potential security incidents.

In our experience implementing LLM monitoring systems across government agencies, the organisations that succeed are those that treat activity tracking as a strategic capability rather than a compliance checkbox.

- Define clear logging objectives aligned with security goals

- Establish baseline activity patterns for normal operation

- Implement automated analysis of log patterns

- Create clear escalation paths for suspicious activities

- Maintain documentation of logging architecture and procedures

- Regular review and updating of logging requirements

Performance considerations are crucial when implementing activity tracking systems for LLMs. The volume and velocity of log data can be substantial, requiring careful attention to storage efficiency, indexing strategies, and query performance. Organisations must balance the granularity of logging against system performance impacts and storage costs.

Anomaly Detection Methods

In the context of LLM security governance, robust anomaly detection methods serve as a critical line of defence against potential security breaches and misuse. As an essential component of monitoring and logging frameworks, these methods must be specifically tailored to address the unique challenges posed by Large Language Models.

Traditional anomaly detection approaches must evolve significantly when applied to LLM systems. We're not just looking for unusual network traffic or login patterns – we're monitoring for subtle variations in prompt patterns, response characteristics, and model behaviour that could indicate compromise or abuse.

Effective anomaly detection for LLMs requires a multi-layered approach that combines traditional security monitoring with AI-specific detection mechanisms. This comprehensive strategy ensures coverage across all potential attack vectors whilst maintaining operational efficiency.

- Behavioural Pattern Analysis: Monitoring unusual patterns in prompt submissions, response lengths, and API call frequencies

- Content-Based Detection: Analysing prompt and response content for potential data leakage or malicious inputs

- Resource Utilisation Monitoring: Tracking computational resource usage patterns that might indicate model abuse

- Token Consumption Analysis: Monitoring abnormal patterns in token usage that could suggest prompt injection attacks

- Response Time Variations: Detecting unusual processing times that might indicate model manipulation attempts

- User Interaction Patterns: Identifying suspicious patterns in user behaviour and interaction sequences

Implementation of these detection methods requires careful consideration of baseline establishment and threshold setting. Organisations must develop and maintain accurate profiles of normal LLM operation to effectively identify deviations that warrant investigation.

- Statistical Analysis Methods: Implementing statistical models for detecting outliers in usage patterns

- Machine Learning-Based Detection: Employing secondary AI systems to identify anomalous behaviour

- Rule-Based Systems: Establishing clear thresholds and rules for common attack patterns

- Contextual Analysis: Considering business context and user roles in anomaly evaluation

- Time-Series Analysis: Monitoring trends and patterns over time to identify gradual anomalies

Wardley Map Assessment

The map reveals a well-structured but evolving security system with significant opportunities for enhancement through ML and automation, requiring balanced investment in both traditional and emerging capabilities

Integration of these detection methods with existing security infrastructure is crucial. Organisations must ensure that anomaly detection systems can effectively communicate with incident response teams and automated response mechanisms to enable rapid threat mitigation.

- Real-time Alert Generation: Immediate notification of detected anomalies to security teams

- Automated Response Triggers: Integration with security orchestration systems for automatic threat response

- Forensic Data Collection: Automatic preservation of relevant data for incident investigation

- Performance Impact Monitoring: Ensuring detection methods don't significantly impact LLM performance

- False Positive Management: Implementation of filtering and verification mechanisms to reduce alert fatigue

The key to successful anomaly detection in LLM systems lies in finding the right balance between sensitivity and specificity. Over-sensitive systems can overwhelm security teams with false positives, while under-sensitive ones might miss critical security events.

Regular review and refinement of anomaly detection methods is essential to maintain their effectiveness. This includes updating baseline profiles, adjusting thresholds based on operational experience, and incorporating new threat intelligence specific to LLM systems.

Audit Trail Requirements

In the context of LLM security governance, comprehensive audit trail requirements form a critical component of monitoring and logging strategies. As an expert who has overseen numerous LLM deployments in sensitive government environments, I can attest that robust audit trails serve not only as a forensic tool but as a fundamental control mechanism for ensuring accountability and compliance.

Without granular audit trails in LLM systems, organisations are essentially flying blind when it comes to understanding and controlling how their AI models are being accessed and utilised.

The implementation of audit trail requirements for LLMs must be particularly thorough due to the unique challenges posed by these systems, including the need to track prompt variations, model responses, and potential data exposure events. The audit framework must capture both technical interactions and business context while maintaining a balance between comprehensive logging and system performance.

- User Authentication Events: All access attempts, successful logins, and permission changes

- Prompt Logging: Complete record of input prompts, including metadata about source and context

- Model Response Tracking: Full logging of model outputs with associated timestamps and version information

- System Configuration Changes: Documentation of all modifications to model parameters and security settings

- Data Access Patterns: Detailed logs of all data retrievals and potential exposure events

- Security Event Correlation: Links between related security events and response actions

- Performance Metrics: Logging of system performance indicators and resource utilisation

- Compliance Checkpoints: Specific logging requirements mandated by regulatory frameworks

The technical implementation of these requirements demands careful consideration of storage mechanisms, retention policies, and access controls for the audit logs themselves. In my experience advising government agencies, I've found that implementing a multi-tiered logging architecture provides the most effective solution.

- Tier 1: Real-time operational logs for immediate security monitoring

- Tier 2: Short-term detailed logs for investigation and analysis

- Tier 3: Long-term archived logs for compliance and historical analysis

- Tier 4: Immutable backup logs for forensic purposes

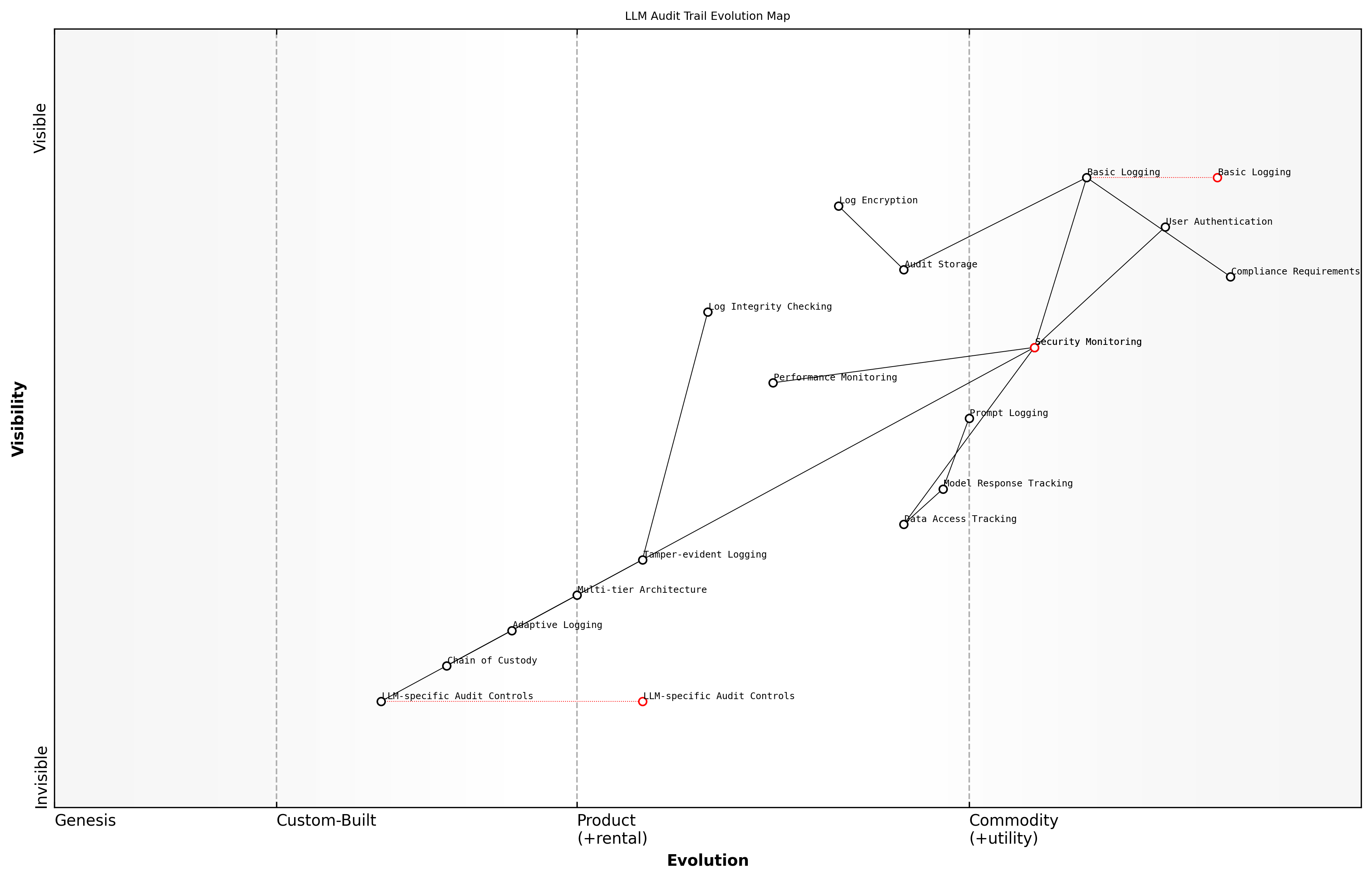

Wardley Map Assessment

The map reveals a critical transition period in audit trail requirements for LLM systems, requiring significant investment in new capabilities while maintaining existing compliance standards. Success depends on balancing immediate compliance needs with long-term strategic development of LLM-specific controls.

Essential technical specifications for audit trail implementation must include tamper-evident logging mechanisms, cryptographic signing of log entries, and secure timestamp services. The audit system should maintain chain of custody documentation for all logged events, particularly crucial in government and regulated environments.

- Minimum retention period of 12 months for operational logs

- Encryption of all audit trail data both in transit and at rest

- Automated alerting for audit system failures or tampering attempts

- Regular integrity checking of audit logs

- Role-based access controls for audit trail review

- Automated log rotation and archival procedures

- Regular audit log backup and verification processes

The sophistication of your audit trail requirements must match the complexity of your LLM deployment. In today's regulatory environment, basic logging is no longer sufficient to meet the governance challenges posed by AI systems.

When implementing audit trail requirements, organisations must also consider the performance impact of comprehensive logging on their LLM systems. Based on field experience, I recommend implementing adaptive logging levels that can be adjusted based on threat levels and operational requirements, while maintaining minimum compliance standards.

Incident Management and Response

Incident Detection and Classification

LLM-Specific Incident Indicators

In the rapidly evolving landscape of Large Language Model security, identifying incident indicators specific to LLM systems requires a sophisticated understanding of both traditional cybersecurity signals and AI-specific anomalies. As organisations increasingly deploy LLMs in production environments, the ability to detect and classify security incidents becomes paramount for maintaining system integrity and protecting sensitive information.

The challenge with LLM security incidents is that they often manifest differently from traditional cyber attacks. We're dealing with subtle manipulations of model behaviour that can be difficult to distinguish from legitimate operations without proper monitoring frameworks in place.

Understanding and implementing robust detection mechanisms for LLM-specific incidents requires a multi-layered approach that considers both the unique characteristics of language models and their integration within broader system architectures.

- Unusual patterns in prompt-response pairs indicating potential prompt injection attacks

- Sudden changes in model output style or content suggesting model poisoning

- Unexpected increases in token usage or API calls that may signal denial of service attempts

- Anomalous data patterns in model responses that could indicate training data extraction

- Deviations from established response templates suggesting security boundary violations

- Unexpected model behaviour changes post-deployment indicating potential model manipulation

- Suspicious patterns in user interaction sequences that may reveal automated attack attempts

Early warning signals often manifest through subtle changes in model behaviour. Organisations must establish baseline metrics for normal operation and implement continuous monitoring systems capable of detecting deviations from these established patterns.

- Response latency variations outside normal parameters

- Changes in token distribution patterns

- Unexpected shifts in model confidence scores

- Anomalous patterns in resource utilisation

- Deviations in response coherence metrics

- Unusual spikes in specific types of queries

- Unexpected changes in model output formatting

Wardley Map Assessment

The map reveals a security system in transition, with significant opportunities for innovation in AI-driven security monitoring while managing risks of rapid evolution and integration challenges. Success requires balanced investment in automation while maintaining essential human oversight.

Effective incident detection requires organisations to implement automated monitoring systems capable of tracking these indicators in real-time. These systems should incorporate machine learning algorithms specifically trained to identify anomalous patterns in LLM operations.

Our experience in government deployments has shown that successful LLM incident detection requires a combination of traditional security monitoring and AI-specific behavioural analysis. The most effective approaches leverage both human expertise and automated detection systems.

- Implementation of continuous monitoring systems

- Development of LLM-specific security metrics

- Integration with existing security information and event management (SIEM) systems

- Establishment of baseline operational parameters

- Regular review and updating of detection rules

- Implementation of automated alert mechanisms

- Development of incident classification frameworks

Organisations must also consider the temporal aspects of incident detection. Some LLM-specific attacks may occur gradually over extended periods, requiring long-term pattern analysis and sophisticated detection mechanisms that can identify subtle changes over time.

Severity Assessment Criteria

In the rapidly evolving landscape of LLM security, establishing robust severity assessment criteria is crucial for effective incident management. Drawing from extensive experience in government and enterprise AI deployments, we recognise that traditional severity metrics must be adapted to address the unique challenges posed by LLM-specific security incidents.

The complexity of LLM incidents requires a multi-dimensional approach to severity assessment that goes beyond traditional information security metrics. We must consider not just data exposure, but also model manipulation, prompt injection impacts, and downstream effects on decision-making systems.

When developing severity assessment criteria for LLM security incidents, organisations must implement a structured framework that considers both immediate and potential long-term impacts. This framework should account for the cascading effects that can occur when LLM systems are compromised, particularly in high-stakes government and enterprise environments.

- Impact Scope: Assess whether the incident affects individual responses, system-wide behaviour, or underlying model integrity

- Data Sensitivity: Evaluate the classification level of potentially exposed training data or sensitive prompts

- Service Disruption: Measure the extent of service degradation or complete system unavailability

- Recovery Complexity: Determine the technical difficulty and resource requirements for incident remediation

- Reputational Risk: Assess potential damage to organisational trust and public perception

- Regulatory Implications: Consider compliance violations and mandatory reporting requirements

Severity levels should be clearly defined and mapped to specific response protocols. A typical severity classification system for LLM incidents might include Critical (Level 1), High (Level 2), Medium (Level 3), and Low (Level 4), each with distinct characteristics and response requirements.

- Critical (Level 1): Confirmed model compromise with potential for widespread impact, immediate executive notification required

- High (Level 2): Significant anomalies detected, potential data exposure, or service degradation affecting multiple users

- Medium (Level 3): Isolated incidents with limited impact, suspicious behaviour patterns requiring investigation

- Low (Level 4): Minor deviations from expected behaviour, potential false positives requiring monitoring

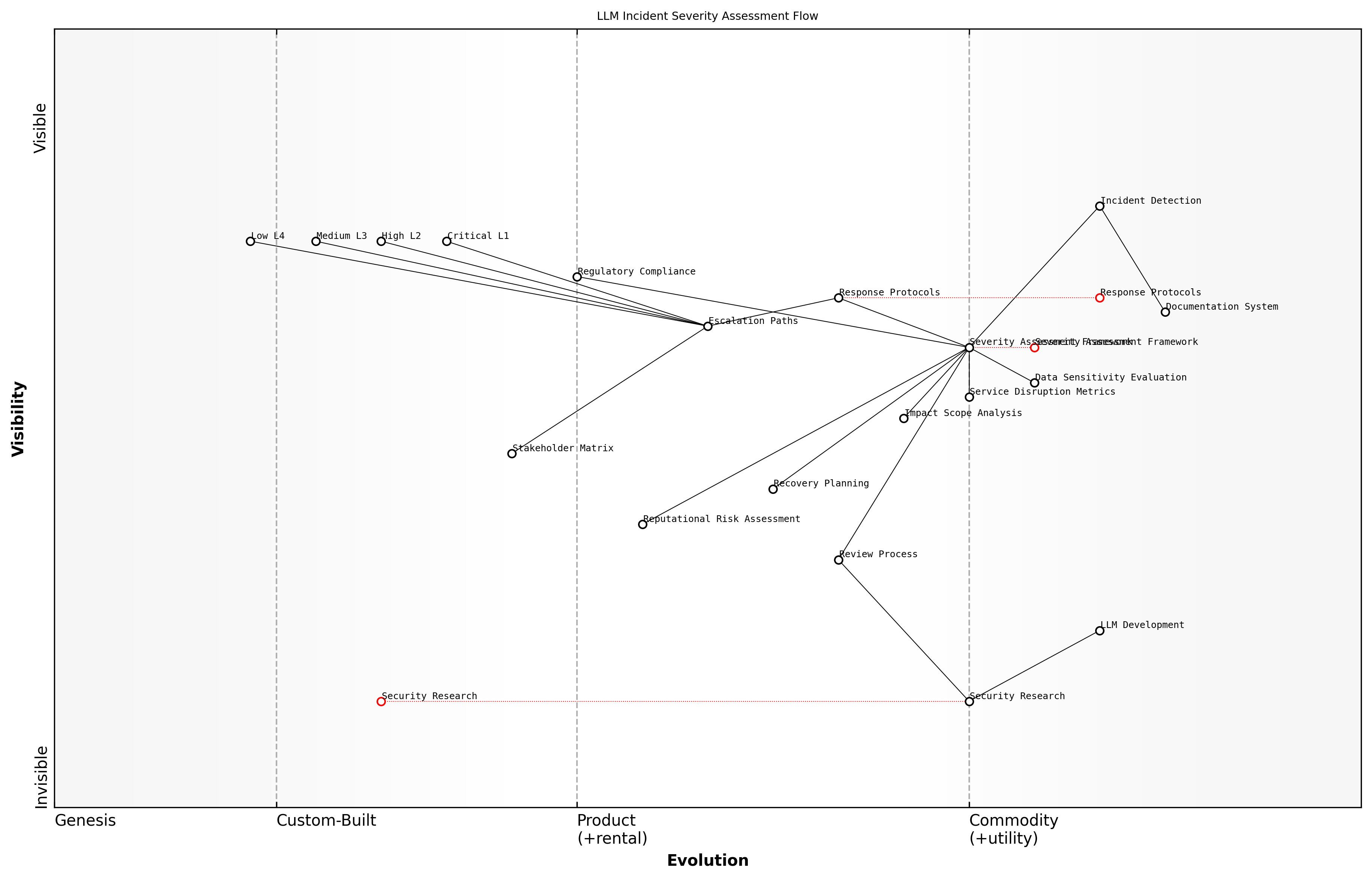

Wardley Map Assessment

The map represents a well-structured but evolving incident severity assessment system for LLMs, with clear opportunities for automation and AI integration while maintaining robust human oversight and research-driven improvement

Organisations must establish clear escalation paths based on severity levels. This includes defining notification thresholds, response timeframes, and required stakeholder involvement at each severity level. Regular testing and refinement of these criteria through tabletop exercises and actual incident reviews is essential for maintaining their effectiveness.

Our experience in handling AI security incidents has shown that the first 30 minutes of incident detection are crucial. Having clear, predefined severity criteria enables rapid decision-making and appropriate resource allocation during this critical period.

- Immediate Actions: Define required responses within first 30 minutes based on severity level

- Stakeholder Matrix: Map severity levels to required notifications and approvals

- Documentation Requirements: Specify incident logging and reporting needs per severity tier

- Review Triggers: Establish thresholds for mandatory incident review and criteria updates

- Regulatory Alignment: Ensure severity classifications align with compliance requirements

Regular assessment and updating of severity criteria is crucial as LLM technology evolves and new attack vectors emerge. Organisations should maintain a feedback loop between incident response teams, security researchers, and LLM development teams to ensure criteria remain relevant and effective.

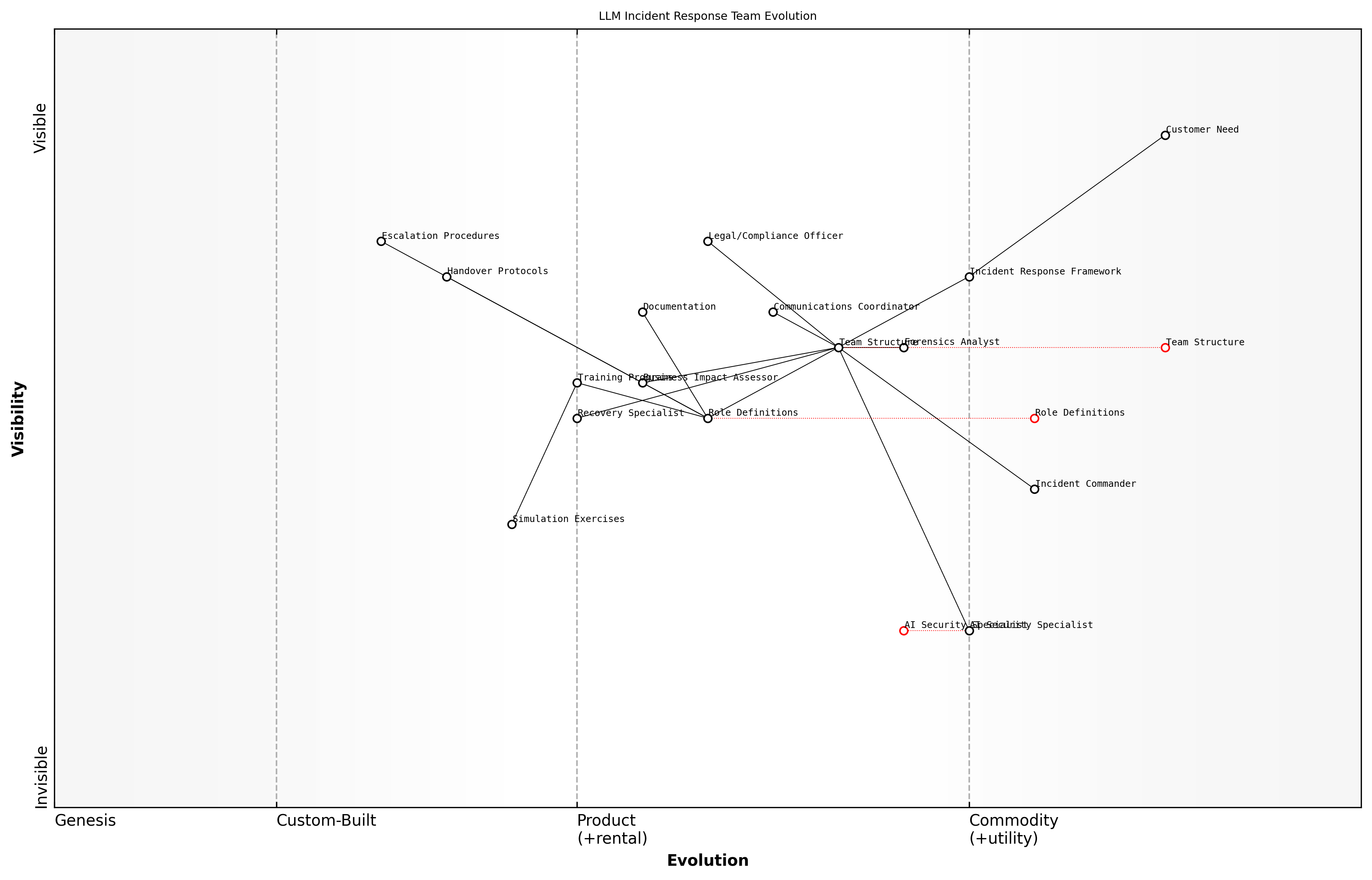

Response Team Roles

In the complex landscape of LLM security incidents, establishing clearly defined response team roles is crucial for effective incident management. The unique challenges presented by LLM-related security breaches require a specialised team structure that combines traditional incident response capabilities with AI-specific expertise.

The effectiveness of an LLM incident response team hinges on the seamless integration of AI expertise with traditional security response capabilities. Without this fusion, organisations risk missing critical indicators or implementing inappropriate remediation measures.

The core response team structure for LLM security incidents must be designed to address both the technical complexities of AI systems and the broader organisational impact of potential breaches. This requires a carefully coordinated approach that brings together diverse skill sets and clear lines of responsibility.

- Incident Commander (IC): Oversees the entire response operation, coordinates team efforts, and makes critical decisions regarding incident classification and response strategies

- AI Security Specialist: Focuses on technical analysis of LLM-specific threats, including prompt injection attacks, model behaviour anomalies, and training data compromises

- Forensics Analyst: Conducts detailed investigation of incident artefacts, logs, and system behaviours specific to LLM operations

- Communications Coordinator: Manages internal and external communications, ensuring stakeholders receive appropriate updates whilst maintaining operational security

- Legal/Compliance Officer: Ensures response actions align with regulatory requirements and handles potential compliance implications of LLM incidents

- Business Impact Assessor: Evaluates the operational and financial impact of incidents on business processes and advises on prioritisation