The Planet Information Platform: Mapping Earth with Satellites, AI, and Big Data

WARNING: This content was generated using Generative AI. While efforts have been made to ensure accuracy and coherence, readers should approach the material with critical thinking and verify important information from authoritative sources.

Table of Contents

- The Planet Information Platform: Mapping Earth with Satellites, AI, and Big Data

- Introduction: A New Era of Planetary Observation

- Fundamentals of Earth Observation Technologies

- AI and Machine Learning for Satellite Data Analysis

- The Planet Information Platform: Architecture and Implementation

- Applications and Impact

- Ethical Considerations and Policy Implications

- Conclusion: The Future of Planetary Intelligence

Introduction: A New Era of Planetary Observation

The Vision of a Global Information Platform

Defining the Planet Information Platform

The Planet Information Platform represents a paradigm shift in our ability to observe, analyse, and understand the Earth on a global scale. As we embark on this new era of planetary observation, it is crucial to establish a clear definition of what this platform entails and its potential to revolutionise our approach to global challenges.

At its core, the Planet Information Platform is an integrated system that combines cutting-edge Earth observation satellites, advanced machine learning algorithms, big data analytics, and generative AI to create a comprehensive, real-time digital representation of our planet. This platform aims to identify, categorise, and monitor everything on Earth's surface and in its atmosphere, providing unprecedented insights into global processes, patterns, and changes.

The Planet Information Platform is not just a technological marvel; it's a transformative tool that will reshape how we understand and interact with our world. It has the potential to become the most comprehensive and dynamic atlas of Earth ever created.

To fully grasp the concept of the Planet Information Platform, it is essential to break it down into its key components and understand how they synergise to create a powerful global monitoring system:

- Earth Observation Satellites: A constellation of advanced satellites equipped with various sensors, including optical, radar, and multispectral instruments, continuously orbiting and imaging the Earth's surface.

- Data Collection and Transmission: A robust infrastructure for acquiring, downlinking, and storing vast amounts of raw satellite data from multiple sources.

- Machine Learning and AI Algorithms: Sophisticated algorithms capable of processing and analysing satellite imagery to extract meaningful information, detect patterns, and identify objects and phenomena on a global scale.

- Big Data Analytics: Advanced data processing capabilities to handle the enormous volume, velocity, and variety of data generated by Earth observation satellites and other sources.

- Generative AI: Cutting-edge AI models that can enhance image quality, fill data gaps, and even generate predictive scenarios based on historical and real-time data.

- Integration Platform: A unified system that combines data from satellites with other sources, such as ground-based sensors, social media, and historical records, to create a comprehensive view of the planet.

- User Interface and Visualisation Tools: Intuitive and powerful tools that allow users to interact with the platform, query data, and visualise complex information in accessible formats.

The Planet Information Platform goes beyond mere data collection; it is designed to transform raw satellite imagery and sensor data into actionable intelligence. By leveraging the power of AI and machine learning, the platform can automatically identify and classify features on the Earth's surface, from individual buildings and vehicles to large-scale phenomena like deforestation or urban expansion.

One of the key attributes that sets the Planet Information Platform apart is its ability to provide near real-time monitoring of global events and changes. This capability is crucial for applications such as disaster response, where timely information can save lives and resources. For instance, the platform could detect and alert authorities to the early signs of a wildfire, allowing for rapid response and containment.
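The wildfire example can be made concrete with a deliberately simple detector that flags pixels whose mid-infrared brightness temperature exceeds a fixed threshold. Operational fire products use more elaborate contextual tests that compare each pixel with its neighbours. The 360 K default, the sample grid, and the function name `detect_hotspots` below are illustrative assumptions rather than parameters of any real system.

```python
from typing import List, Tuple

Grid = List[List[float]]


def detect_hotspots(brightness_temp_k: Grid, threshold_k: float = 360.0) -> List[Tuple[int, int]]:
    """Return (row, col) indices of pixels hotter than a fixed threshold.

    brightness_temp_k holds mid-infrared brightness temperatures in kelvin.
    The default threshold is an illustrative assumption, not an operational value.
    """
    hits: List[Tuple[int, int]] = []
    for r, row in enumerate(brightness_temp_k):
        for c, value in enumerate(row):
            if value > threshold_k:
                hits.append((r, c))
    return hits


# Hypothetical 3x3 scene: two adjacent pixels are far hotter than their surroundings.
scene = [
    [300.0, 302.5, 301.0],
    [299.0, 372.4, 365.1],
    [298.7, 303.3, 300.2],
]
print(detect_hotspots(scene))  # [(1, 1), (1, 2)]
```

In practice, detections like these would typically be converted to geographic coordinates and checked against known persistent heat sources before any alert is raised.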

The real power of the Planet Information Platform lies in its ability to turn the vast amounts of data we collect about our planet into knowledge that can drive informed decision-making at all levels, from local communities to global governance.

Another defining feature of the Planet Information Platform is its scalability and adaptability. As new satellites are launched and sensor technologies improve, the platform can seamlessly integrate these additional data sources, continuously enhancing its capabilities and resolution. This scalability ensures that the platform remains at the cutting edge of Earth observation technology, providing ever more detailed and accurate information about our planet.

The platform's use of generative AI introduces a new dimension to Earth observation. These advanced models can not only enhance the quality and resolution of satellite imagery but also generate synthetic data to fill gaps in coverage or predict future scenarios. This capability is particularly valuable for modelling complex systems like climate patterns or urban development, allowing policymakers and researchers to explore potential outcomes and make more informed decisions.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution and dependencies of key components in the Planet Information Platform]](https://images.wardleymaps.ai/wardleymaps/map_0d264afc-cacb-438d-8155-b209fd3e393b.png)

It is important to note that the Planet Information Platform is not just a technological solution; it represents a new approach to global governance and decision-making. By providing a shared, objective view of the Earth, the platform has the potential to foster international cooperation on global challenges such as climate change, resource management, and disaster mitigation.

However, with great power comes great responsibility. The development and deployment of such a comprehensive global monitoring system raise significant ethical and privacy concerns. Striking the right balance between the platform's capabilities and the protection of individual and national privacy rights will be crucial to its acceptance and success.

As we develop the Planet Information Platform, we must remain vigilant about its ethical implications. Our goal should be to create a tool that empowers humanity to better understand and protect our planet, not one that infringes on fundamental rights or exacerbates existing inequalities.

In conclusion, the Planet Information Platform represents a revolutionary approach to Earth observation and global monitoring. By combining advanced satellite technology, AI, and big data analytics, it promises to provide unprecedented insights into our planet's systems and processes. As we continue to refine and expand this platform, it has the potential to become an invaluable tool for addressing some of the most pressing challenges facing humanity, from climate change to sustainable development. The journey towards a truly comprehensive Planet Information Platform is just beginning, and its full impact on our understanding and stewardship of Earth is yet to be realised.

Historical Context and Technological Evolution

The vision of a global information platform, capable of identifying and monitoring everything on planet Earth, has its roots in the early days of space exploration and remote sensing. This ambitious concept has evolved dramatically over the decades, driven by technological advancements in satellite technology, data processing capabilities, and artificial intelligence. To fully appreciate the transformative potential of the Planet Information Platform, it is crucial to understand its historical context and the technological evolution that has made it possible.

The journey towards a comprehensive planetary observation system began in the 1960s with the first weather satellites, such as TIROS-1 (1960), and gathered pace with the Landsat programme, planned in the late 1960s by NASA and the U.S. Department of the Interior, which marked the beginning of a new era in Earth science and environmental monitoring. However, the limitations of these early systems in terms of resolution, coverage, and data processing capabilities meant that the dream of a truly global information platform remained out of reach.

The launch of the first Landsat satellite in 1972 was a watershed moment in Earth observation. It opened our eyes to the possibility of monitoring our planet from space, but we could scarcely imagine the comprehensive global monitoring systems we're developing today.

As satellite technology advanced, so did the vision of what could be achieved. The 1980s and 1990s saw the launch of more sophisticated Earth observation satellites, including those with synthetic aperture radar (SAR) capabilities, which could penetrate cloud cover and operate at night. This period also witnessed the emergence of commercial satellite operators, broadening the scope and availability of Earth observation data.

The turn of the millennium brought about a paradigm shift in Earth observation with the advent of high-resolution commercial satellites. These systems, capable of capturing images with sub-metre resolution, revolutionised the field and opened up new possibilities for detailed planetary monitoring. Concurrently, advances in computing power and data storage technologies began to address the challenge of handling the vast amounts of data generated by these satellite systems.

- 1960s-1970s: First-generation Earth observation satellites (e.g., Landsat)

- 1980s-1990s: Advanced sensors and SAR satellites

- 2000s: High-resolution commercial satellites and improved data processing

- 2010s: Big data analytics and machine learning applications

- 2020s: AI-driven analysis and integration of multiple data sources

The rapid advancement of artificial intelligence and machine learning in the 2010s marked another crucial milestone in the evolution of planetary observation. These technologies enabled the automated analysis of satellite imagery at unprecedented scales, allowing for the detection and classification of objects, monitoring of changes over time, and prediction of future trends. The integration of AI with Earth observation data has been a game-changer, bringing us closer to the vision of a comprehensive Planet Information Platform.

The convergence of big data analytics and artificial intelligence with Earth observation technologies has fundamentally altered our ability to monitor and understand our planet. We are now on the cusp of realising a truly global information platform that can provide insights at scales and speeds previously unimaginable.

In recent years, the concept of the Planet Information Platform has been further enhanced by the integration of multiple data sources beyond satellite imagery. Ground-based sensors, social media feeds, and other forms of crowdsourced data are now being combined with satellite observations to create a more comprehensive and nuanced understanding of global phenomena. This fusion of data sources, coupled with advanced AI algorithms, is enabling near-real-time monitoring and analysis of complex environmental and social systems.
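One of the simplest ways to combine a satellite estimate with a ground measurement of the same quantity is inverse-variance weighting, which assumes that the two errors are independent and unbiased. The sketch below uses hypothetical soil-moisture values and uncertainties; real fusion pipelines must also reconcile differences in spatial footprint and timing, which are ignored here.

```python
from typing import Sequence, Tuple


def inverse_variance_fuse(values: Sequence[float], variances: Sequence[float]) -> Tuple[float, float]:
    """Fuse independent, unbiased estimates of one quantity.

    Each estimate is weighted by 1/variance, so more precise sources count more.
    Returns (fused_value, fused_variance).
    """
    if len(values) != len(variances) or not values:
        raise ValueError("need one variance per value, and at least one value")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, values)) / total
    return fused, 1.0 / total


# Hypothetical volumetric soil moisture (m3/m3): satellite retrieval vs. in-situ probe.
value, variance = inverse_variance_fuse([0.25, 0.30], [0.04 ** 2, 0.02 ** 2])
print(f"{value:.3f} +/- {variance ** 0.5:.3f}")  # 0.290 +/- 0.018
```

The fused uncertainty is smaller than that of either input, which is the practical payoff of fusion when the independence assumption holds.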

The emergence of small satellite constellations and CubeSats has also played a significant role in the evolution of global Earth observation capabilities. These systems, often deployed in large numbers, offer short revisit times and can provide continuous monitoring of specific areas of interest. The democratisation of space technology has allowed smaller nations and even private companies to contribute to the global Earth observation effort, further expanding the scope and diversity of available data.
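The effect of constellation size on revisit time can be approximated with simple arithmetic: for N identical satellites evenly phased in one orbit, the revisit interval at a given location is roughly the single-satellite interval divided by N. Sentinel-2 illustrates this, with a 10-day revisit at the equator for one satellite and 5 days for the two-satellite pair. The function below is an idealised sketch that ignores swath overlap at high latitudes, off-nadir pointing, and cloud cover; the 40-satellite case is hypothetical.

```python
def constellation_revisit_days(single_satellite_revisit_days: float, n_satellites: int) -> float:
    """Approximate revisit interval for n satellites evenly phased in one orbital plane.

    Idealised: ignores overlapping swaths at high latitudes, pointing, and clouds.
    """
    if n_satellites < 1:
        raise ValueError("need at least one satellite")
    return single_satellite_revisit_days / n_satellites


print(constellation_revisit_days(10, 2))   # 5.0  (Sentinel-2 pair at the equator)
print(constellation_revisit_days(10, 40))  # 0.25 (hypothetical 40-satellite constellation)
```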

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution of Earth observation technologies and their components, from satellite hardware to data analytics and AI applications]](https://images.wardleymaps.ai/wardleymaps/map_a24d443e-c36b-4ea1-8edc-5690192ef895.png)

Looking ahead, the vision of the Planet Information Platform continues to evolve. The integration of quantum computing, edge processing capabilities on satellites, and advanced generative AI models promises to further enhance our ability to monitor and understand Earth systems. As we move towards this future, it is crucial to consider the ethical implications and potential societal impacts of such a comprehensive global monitoring system.

The historical context and technological evolution of the Planet Information Platform underscore the remarkable progress we have made in our ability to observe and understand our planet. From the early days of grainy satellite images to today's AI-driven analysis of multi-source data, we have come a long way in realising the vision of a global information platform. As we continue to push the boundaries of what is possible, it is clear that the Planet Information Platform will play an increasingly critical role in addressing global challenges, from climate change and environmental conservation to disaster response and sustainable development.

Potential Impact on Global Challenges

The Planet Information Platform, which leverages Earth observation satellites, machine learning algorithms, and generative AI to identify and analyse everything on our planet, holds immense potential to address some of the most pressing global challenges of our time. This revolutionary approach to planetary observation and data analysis promises to transform our understanding of Earth's systems and our ability to respond to complex issues ranging from climate change to resource management.

As we delve into the potential impact of this platform, it's crucial to recognise the unprecedented scale and scope of information it aims to provide. By integrating diverse data sources and applying advanced analytics, the Planet Information Platform has the capacity to offer near-real-time, comprehensive insights into the state of our planet, enabling more informed decision-making and targeted interventions across various sectors.

The Planet Information Platform represents a paradigm shift in how we observe, understand, and interact with our planet. It's not just about collecting data; it's about creating a living, breathing digital twin of Earth that can guide us towards a more sustainable future.

Let's explore the key areas where this platform could have a transformative impact:

- Climate Change Mitigation and Adaptation

- Environmental Conservation and Biodiversity

- Disaster Risk Reduction and Response

- Sustainable Urban Development

- Global Food Security and Agriculture

- Water Resource Management

- Energy Transition and Renewable Resources

- Public Health and Disease Surveillance

Climate Change Mitigation and Adaptation: The Planet Information Platform offers unprecedented capabilities for monitoring and predicting climate change impacts. By integrating satellite data with ground-based sensors and advanced climate models, the platform can provide timely, high-resolution insights into greenhouse gas emissions, deforestation rates, sea level rise, and extreme weather patterns. This comprehensive view enables policymakers to develop more effective mitigation strategies and adapt to changing environmental conditions with greater agility.

Environmental Conservation and Biodiversity: The platform's ability to map and monitor ecosystems at a global scale can revolutionise conservation efforts. By tracking changes in habitat extent, species distribution, and ecosystem health, conservationists can identify critical areas for protection, detect illegal activities such as poaching or logging, and assess the effectiveness of conservation measures. AI and machine learning can also help researchers understand complex ecological relationships and prioritise field surveys of poorly studied habitats, which may in turn lead to the discovery of new species.

Disaster Risk Reduction and Response: With its capacity for near-real-time monitoring and predictive analytics, the Planet Information Platform can significantly enhance disaster preparedness and response. Early warning systems for natural disasters such as hurricanes, floods, and wildfires can be greatly improved, allowing for more timely evacuations and resource allocation. In the aftermath of disasters, the platform can provide rapid damage assessments, guiding recovery efforts and informing long-term resilience planning.

The integration of Earth observation data with AI-driven predictive models is transforming our ability to anticipate and respond to natural disasters. We're moving from reactive to proactive disaster management, potentially saving countless lives and averting billions in economic losses.

Sustainable Urban Development: As urbanisation continues to accelerate globally, the platform can play a crucial role in promoting sustainable city planning and management. By analysing urban growth patterns, traffic flows, energy consumption, and air quality, city planners can make data-driven decisions to optimise infrastructure, reduce pollution, and improve quality of life for residents. The platform's ability to simulate future scenarios can also help in designing more resilient and sustainable urban environments.

Global Food Security and Agriculture: The Planet Information Platform has the potential to revolutionise agriculture and bolster food security worldwide. Through precise monitoring of crop health, soil moisture, and weather patterns, farmers can optimise irrigation, fertiliser use, and pest control, leading to increased yields and reduced environmental impact. On a larger scale, the platform can help predict and mitigate food shortages, inform agricultural policy, and support sustainable farming practices.
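Crop-health monitoring often starts from a vegetation index such as the Normalised Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), which rises with green leaf cover because healthy vegetation reflects strongly in the near-infrared and absorbs red light. The sketch below computes it per pixel; the reflectance values are hypothetical, and a real pipeline would work on full rasters with clouds masked out first.

```python
from typing import List, Optional


def ndvi(nir: List[float], red: List[float]) -> List[Optional[float]]:
    """Normalised Difference Vegetation Index per pixel: (NIR - Red) / (NIR + Red).

    Inputs are surface reflectances (0-1). Pixels where NIR + Red is zero are
    returned as None instead of raising ZeroDivisionError.
    """
    out: List[Optional[float]] = []
    for n, r in zip(nir, red):
        total = n + r
        out.append(None if total == 0 else (n - r) / total)
    return out


# Hypothetical reflectances for three pixels: healthy crop, stressed crop, bare soil.
nir_band = [0.50, 0.30, 0.25]
red_band = [0.05, 0.10, 0.20]
print([round(v, 2) for v in ndvi(nir_band, red_band)])  # [0.82, 0.5, 0.11]
```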

Water Resource Management: With water scarcity becoming an increasingly critical issue, the platform's ability to monitor global water resources is invaluable. By tracking surface water levels, groundwater depletion, and water quality, policymakers can develop more effective strategies for water conservation and equitable distribution. The platform can also help in identifying and addressing sources of water pollution, protecting this vital resource for future generations.
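Surface water can be mapped in a similar way with McFeeters' Normalised Difference Water Index, NDWI = (Green - NIR) / (Green + NIR), where positive values typically indicate open water. The sketch below counts water pixels and converts them to an area; the 10 m pixel size and the threshold of 0 are illustrative choices, and the small input grids are hypothetical.

```python
from typing import List


def water_area_km2(green: List[List[float]], nir: List[List[float]],
                   pixel_size_m: float = 10.0, threshold: float = 0.0) -> float:
    """Estimate open-water area from NDWI = (Green - NIR) / (Green + NIR).

    Pixels with NDWI above `threshold` are counted as water. A fixed threshold
    of 0 is a common starting point but usually needs local tuning.
    """
    water_pixels = 0
    for g_row, n_row in zip(green, nir):
        for g, n in zip(g_row, n_row):
            total = g + n
            if total > 0 and (g - n) / total > threshold:
                water_pixels += 1
    return water_pixels * pixel_size_m ** 2 / 1e6


# Hypothetical 2x2 reflectance grids: the top row is water, the bottom row is land.
green = [[0.08, 0.07], [0.10, 0.06]]
nir = [[0.02, 0.03], [0.30, 0.25]]
print(water_area_km2(green, nir))  # 0.0002
```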

Energy Transition and Renewable Resources: The Planet Information Platform can accelerate the transition to renewable energy by identifying optimal locations for solar, wind, and hydroelectric installations. By analysing factors such as solar irradiance, wind patterns, and land use, the platform can guide investment in renewable energy infrastructure. Additionally, it can monitor the performance and environmental impact of existing energy systems, supporting the optimisation of the global energy mix.
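Site selection of this kind is often framed as a weighted overlay: each candidate location receives scores for factors such as irradiance, terrain, and distance to the grid, and a weighted sum ranks the candidates. The factor names, weights, and site values below are hypothetical, and the factors are assumed to be pre-scaled to the range 0 to 1.

```python
from typing import Dict, List, Tuple

# Hypothetical weights: how much each normalised factor (0-1) counts towards suitability.
WEIGHTS: Dict[str, float] = {"irradiance": 0.5, "flatness": 0.2, "grid_proximity": 0.3}


def suitability(factors: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted linear combination of factor scores already scaled to 0-1."""
    return sum(weights[name] * factors[name] for name in weights)


def rank_sites(sites: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (site, score) pairs sorted from most to least suitable."""
    scored = [(name, suitability(f)) for name, f in sites.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


candidate_sites = {
    "site_a": {"irradiance": 0.9, "flatness": 1.0, "grid_proximity": 0.2},
    "site_b": {"irradiance": 0.7, "flatness": 0.8, "grid_proximity": 0.9},
    "site_c": {"irradiance": 0.4, "flatness": 1.0, "grid_proximity": 1.0},
}
for name, score in rank_sites(candidate_sites):
    print(f"{name}: {score:.2f}")
```

With these weights the sunniest site does not win outright, because its distance from the grid pulls its score down; the choice of weights is a policy decision as much as a technical one.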

Public Health and Disease Surveillance: While not traditionally associated with Earth observation, the platform's capabilities can significantly contribute to public health efforts. By monitoring environmental factors that influence disease spread, such as temperature, humidity, and land use changes, health authorities can better predict and respond to disease outbreaks. The platform can also help in assessing air and water quality, identifying pollution hotspots that may impact public health.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution of global challenge response capabilities enabled by the Global Information Platform]](https://images.wardleymaps.ai/wardleymaps/map_7904047b-b6b0-4e7f-89d2-75d6a37b0ad0.png)

The potential impact of the Planet Information Platform on these global challenges is profound. However, realising this potential requires overcoming significant technical, ethical, and governance challenges. Issues of data privacy, equitable access to information, and the responsible use of AI must be carefully addressed to ensure that the benefits of this powerful tool are shared globally and ethically.

The Planet Information Platform is not just a technological achievement; it's a call to action for global cooperation. To truly address our planet's challenges, we must ensure that this wealth of information is accessible to all and used for the common good.

As we move forward, the development and implementation of the Planet Information Platform must be guided by a commitment to transparency, collaboration, and sustainable development. By harnessing the power of Earth observation, AI, and big data analytics, we have an unprecedented opportunity to create a more resilient, equitable, and sustainable world. The vision of a comprehensive planetary information system is within our grasp; the challenge now lies in translating this vision into meaningful action and lasting positive impact on a global scale.

Overview of Key Technologies

Earth Observation Satellites

Earth Observation Satellites (EOS) form the cornerstone of the Planet Information Platform, serving as the primary data collection mechanism for global-scale monitoring and analysis. These sophisticated space-based systems have revolutionised our ability to observe, measure, and understand Earth's complex systems with unprecedented detail and frequency. As we embark on this new era of planetary observation, it is crucial to comprehend the fundamental technologies that underpin these remarkable tools.

EOS can be broadly categorised into several types, each designed to capture specific aspects of our planet's surface, atmosphere, and oceans. These categories include:

- Optical imaging satellites

- Synthetic Aperture Radar (SAR) satellites

- Atmospheric and meteorological satellites

- Oceanographic satellites

- Hyperspectral imaging satellites

- Gravity and magnetic field measurement satellites

Optical imaging satellites, such as the Landsat series and Sentinel-2, capture multispectral imagery in the visible, near-infrared, and shortwave-infrared bands at 10 to 30 metre resolution. These satellites are instrumental in monitoring land use changes, urban development, and vegetation health. SAR satellites, like Sentinel-1 and RADARSAT, transmit their own microwave pulses and record the echoes, so they can image through cloud cover and darkness, providing all-weather, day-and-night Earth observation capabilities crucial for disaster monitoring and maritime surveillance.

Atmospheric and meteorological satellites, including the GOES and Meteosat series, play a vital role in weather forecasting and climate monitoring. They provide real-time data on atmospheric conditions, cloud formations, and severe weather events. Oceanographic satellites, such as Jason-3 and Sentinel-3, measure sea surface height, temperature, and ocean colour, contributing to our understanding of ocean dynamics and climate change impacts.

Hyperspectral imaging satellites, like EnMAP and PRISMA, offer detailed spectral information across hundreds of narrow wavelength bands, enabling precise identification of Earth surface materials and subtle environmental changes. Gravity and magnetic field measurement satellites, such as GRACE and Swarm, provide crucial data for understanding Earth's internal structure, water distribution, and geodynamics.

The diversity and sophistication of Earth Observation Satellites have transformed our planet into a living laboratory, allowing us to monitor and analyse global phenomena with unprecedented precision and timeliness.

The technological advancements in EOS have been remarkable, with improvements in several key areas:

- Spatial resolution: Modern satellites can achieve sub-metre resolution, enabling detailed mapping and monitoring of small-scale features.

- Temporal resolution: Large constellations now image much of Earth's land surface daily, and some can revisit a given site within a few hours.

- Spectral resolution: Advanced sensors divide the spectrum into more and narrower bands, and together they span a wide range of the electromagnetic spectrum, from visible light to thermal infrared and microwave.

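- Radiometric resolution: Finer quantisation and improved sensor sensitivity allow more accurate measurement of subtle variations in Earth's features; a 12-bit sensor, for example, distinguishes 4,096 brightness levels, compared with 256 for an 8-bit sensor.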

- Data transmission and processing: High-bandwidth communications and on-board processing capabilities enable rapid data delivery and analysis.
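These resolution gains have a direct cost in data volume. The sketch below estimates the uncompressed size of a hypothetical 100 km by 100 km scene with 10 m pixels, 13 spectral bands, and 12-bit samples stored in 16-bit words, and shows how bit depth sets the number of brightness levels a sensor can record. All scene parameters are illustrative assumptions.

```python
def scene_size_bytes(swath_km: float, gsd_m: float, bands: int, bits_per_sample: int) -> int:
    """Uncompressed size of a square scene; pixels per side = swath / ground sample distance.

    Samples are assumed to be stored in whole bytes (e.g. 12-bit data in 16-bit words).
    """
    pixels_per_side = round(swath_km * 1000 / gsd_m)
    bytes_per_sample = -(-bits_per_sample // 8)  # ceiling division
    return pixels_per_side ** 2 * bands * bytes_per_sample


def radiometric_levels(bits_per_sample: int) -> int:
    """Number of distinct brightness values a sensor with this bit depth can record."""
    return 2 ** bits_per_sample


# Hypothetical 100 km scene, 10 m pixels, 13 bands, 12-bit samples.
size = scene_size_bytes(100, 10, 13, 12)
print(size / 1e9, "GB")        # 2.6 GB
print(radiometric_levels(12))  # 4096
```

Halving the pixel size to 5 m quadruples the volume to roughly 10.4 GB, since the pixel count grows with the square of the linear resolution.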

The integration of these advanced EOS technologies with machine learning, artificial intelligence, and big data analytics forms the backbone of the Planet Information Platform. This synergy enables the extraction of meaningful insights from the vast amounts of data collected by these satellites, driving applications across various domains such as environmental monitoring, urban planning, and disaster management.

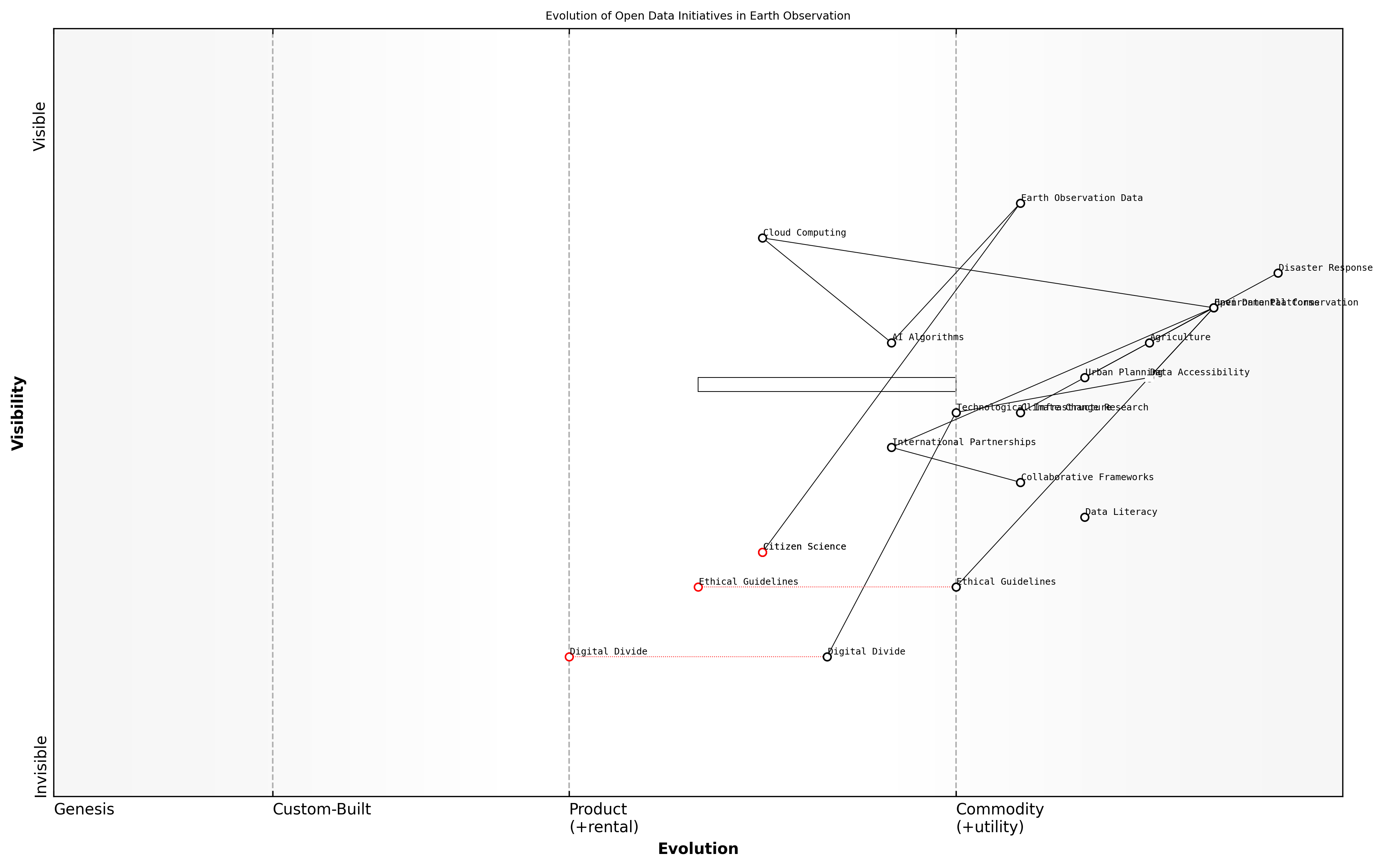

One of the most significant developments in recent years has been the democratisation of access to Earth observation data. Many space agencies and organisations now provide open access to satellite imagery and derived products, fostering innovation and enabling a wide range of applications. For instance, the European Space Agency's Copernicus programme offers free and open access to data from the Sentinel satellites, empowering researchers, businesses, and policymakers worldwide.

The open data policies adopted by major space agencies have catalysed a new era of global collaboration and innovation in Earth observation, transforming how we address planetary-scale challenges.

However, the proliferation of Earth Observation Satellites also presents challenges. The increasing volume of data generated by these systems requires sophisticated data management and analysis techniques. Moreover, the growing number of satellites in orbit raises concerns about space debris and the sustainable use of Earth's orbital environment.

Looking ahead, the future of Earth Observation Satellites is poised for further innovation. Emerging trends include:

- Miniaturisation: The development of smaller, more cost-effective satellites (e.g., CubeSats) is enabling more frequent launches and denser satellite constellations.

- Advanced sensors: Next-generation sensors will offer even higher resolutions and novel measurement capabilities, such as greenhouse gas monitoring at the level of individual facilities.

- On-board AI: Satellites equipped with artificial intelligence will be able to process data in orbit, reducing the volume of information transmitted to Earth and enabling faster response times for critical applications.

- Inter-satellite communications: Advanced laser communication systems will enable satellites to form networked constellations, improving data relay capabilities and global coverage.

- Quantum sensors: The integration of quantum technologies promises to revolutionise the precision and sensitivity of Earth observation measurements.
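The downlink saving from on-board processing can be sketched with a simple filter that discards tiles an on-board classifier judges too cloudy to be useful. The classifier itself is assumed here (its cloud fractions are given as inputs), and the tile identifiers, sizes, and 0.6 threshold are hypothetical.

```python
from typing import List, Tuple

Tile = Tuple[str, float, int]  # (tile_id, estimated_cloud_fraction, size_bytes)


def select_for_downlink(tiles: List[Tile], max_cloud: float = 0.6) -> Tuple[List[str], float]:
    """Keep tiles whose on-board cloud estimate is at or below max_cloud.

    The cloud fractions are assumed to come from a hypothetical on-board classifier.
    Returns the kept tile ids and the fraction of bytes that no longer need downlinking.
    """
    kept = [t for t in tiles if t[1] <= max_cloud]
    total = sum(t[2] for t in tiles)
    kept_bytes = sum(t[2] for t in kept)
    saved = 1 - kept_bytes / total if total else 0.0
    return [t[0] for t in kept], saved


tiles = [("T1", 0.05, 400), ("T2", 0.95, 400), ("T3", 0.30, 400), ("T4", 0.80, 400)]
ids, saved = select_for_downlink(tiles)
print(ids, f"{saved:.0%} of downlink saved")  # ['T1', 'T3'] 50% of downlink saved
```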

As we continue to push the boundaries of Earth observation technology, it is crucial to consider the ethical implications and ensure responsible development and use of these powerful tools. The Planet Information Platform must balance the immense potential for global benefit with concerns about privacy, security, and equitable access to information.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution and dependencies of Earth Observation Satellite technologies within the Planet Information Platform ecosystem]](https://images.wardleymaps.ai/wardleymaps/map_1f457ddb-1cb4-4cbb-b57d-36f1323146d4.png)

In conclusion, Earth Observation Satellites represent a cornerstone technology in our quest to build a comprehensive Planet Information Platform. By harnessing the power of these space-based sensors in conjunction with advanced data analytics and AI, we are entering a new era of planetary awareness. This technological convergence promises to revolutionise our understanding of Earth systems and our ability to address global challenges, from climate change to sustainable development, with unprecedented insight and precision.

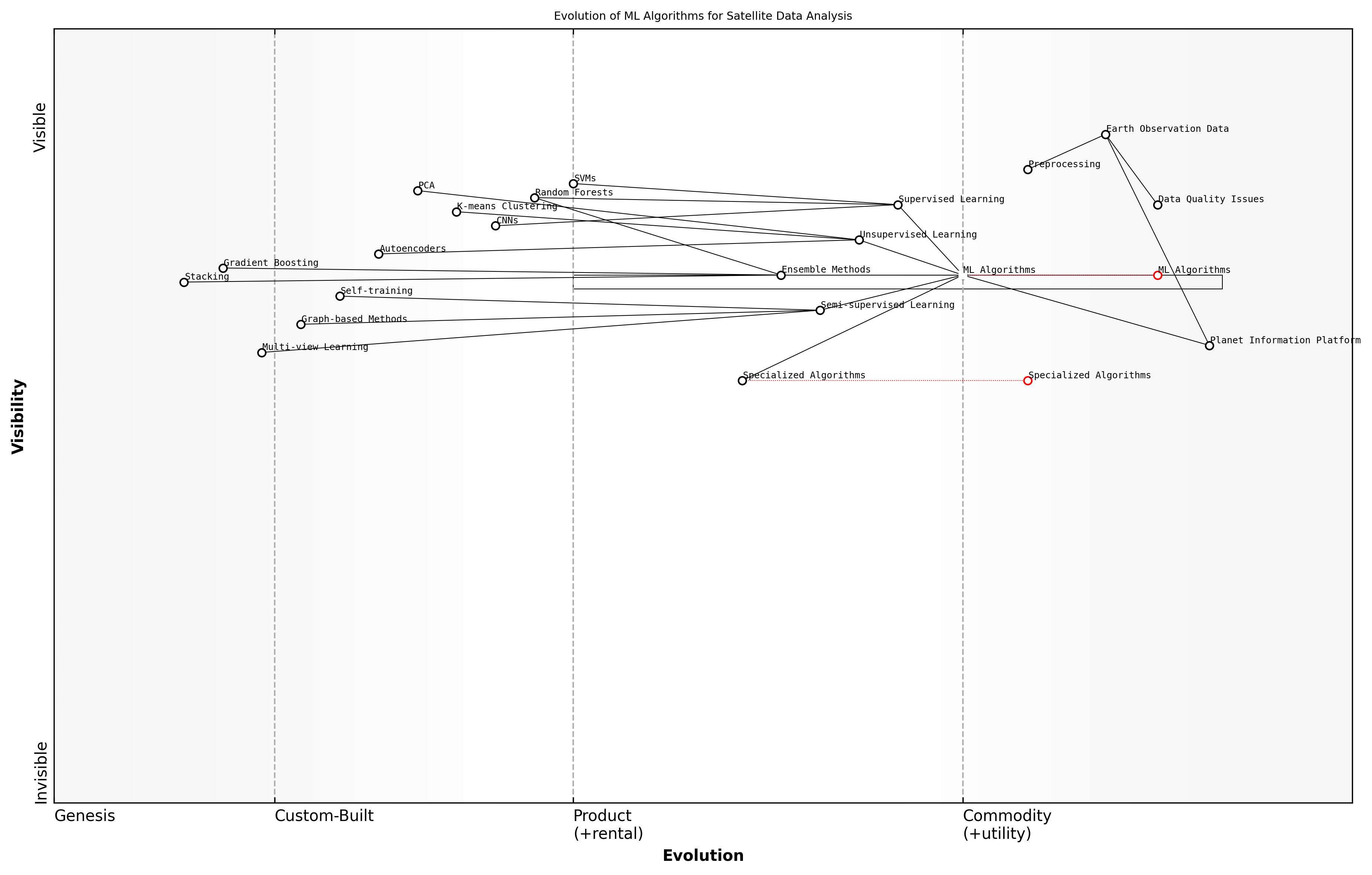

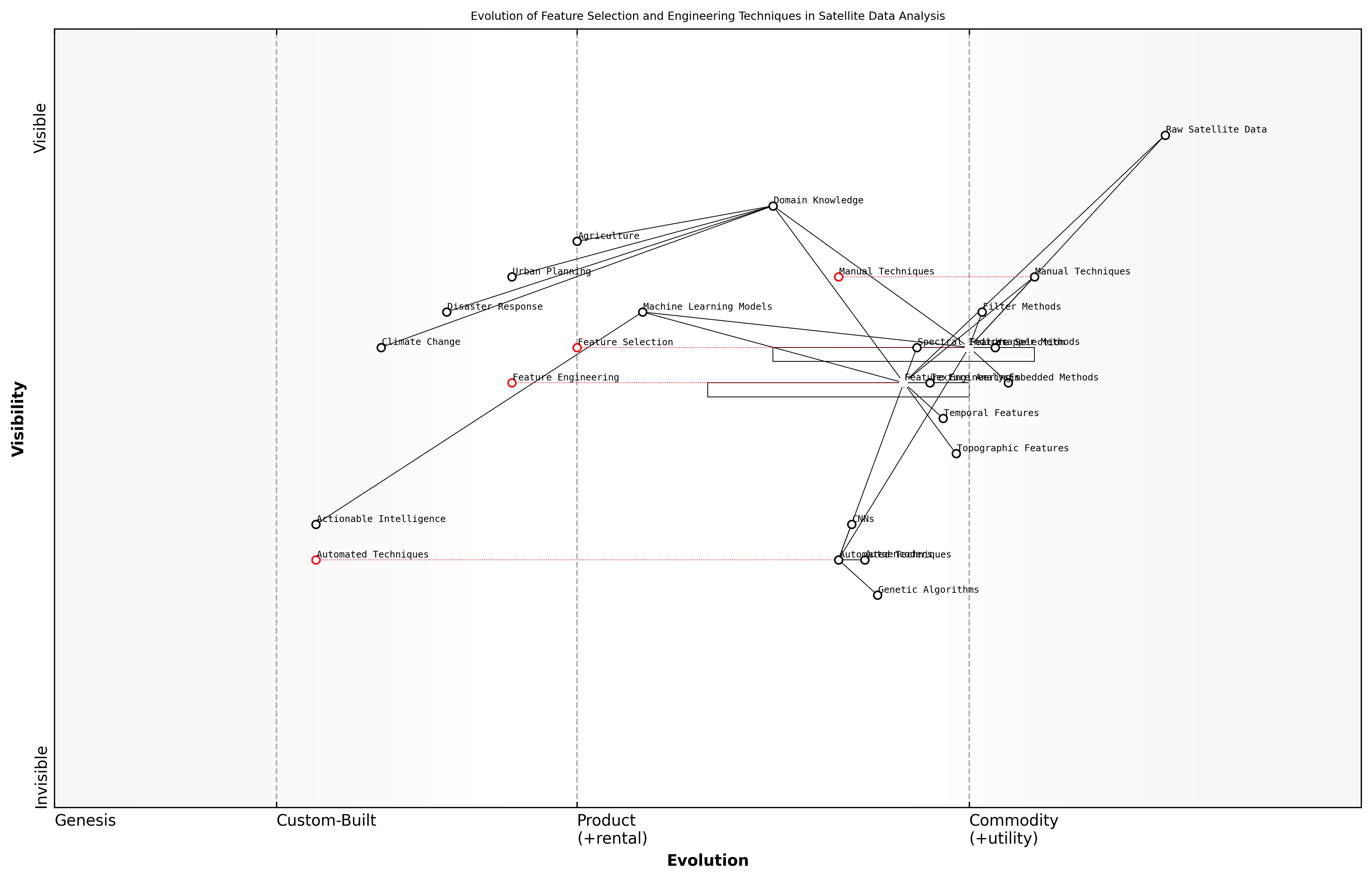

Machine Learning and AI

Machine Learning (ML) and Artificial Intelligence (AI) form the cognitive backbone of the Planet Information Platform, enabling the transformation of vast quantities of raw satellite data into actionable insights. As we embark on this new era of planetary observation, these technologies are pivotal in unlocking the full potential of Earth observation satellites, big data analytics, and generative AI to comprehensively map and understand our planet.

The integration of ML and AI within the Planet Information Platform represents a paradigm shift in how we process, analyse, and interpret Earth observation data. These technologies enable us to detect patterns, anomalies, and trends that would be impossible to discern through human analysis alone, given the sheer volume and complexity of the data involved.

The synergy between satellite technology and AI is not just an incremental improvement; it's a revolutionary leap that allows us to see our planet in ways we never thought possible. We're not just observing the Earth; we're understanding it in real-time.

Let's delve into the key components and applications of ML and AI within the context of the Planet Information Platform:

- Deep Learning and Neural Networks

- Computer Vision

- Natural Language Processing (NLP)

- Reinforcement Learning

- Generative AI

Deep Learning and Neural Networks form the foundation of many AI applications in Earth observation. These sophisticated algorithms, loosely inspired by the neural structure of the brain, excel at processing and analysing complex, high-dimensional data such as satellite imagery. Convolutional Neural Networks (CNNs), in particular, have revolutionised image analysis, enabling tasks such as land cover classification, object detection, and change detection with unprecedented accuracy and efficiency.
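The core operation of a CNN layer can be shown without any deep-learning library: a small kernel slides over the image, each output value is a weighted sum of the pixels beneath it, and a non-linearity such as ReLU is applied afterwards. In a trained network the kernel weights are learned from labelled data; here a hand-set vertical-edge kernel and a hypothetical single-band patch are used purely for illustration.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D cross-correlation (what deep-learning libraries call convolution).

    The kernel slides over the image without padding; each output value is the sum
    of element-wise products between the kernel and the window beneath it.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise ReLU: negative responses become zero."""
    return [[v if v > 0 else 0 for v in row] for row in feature_map]


# Hypothetical 4x5 single-band patch: bright soil, a dark channel, then bright soil again.
patch = [[9, 9, 0, 0, 9]] * 4
# Hand-set kernel responding to dark-to-bright transitions from left to right;
# in a CNN these weights would be learned during training.
vertical_edge = [[-1, 0, 1]] * 3
print(conv2d_valid(patch, vertical_edge))        # [[-27, -27, 27], [-27, -27, 27]]
print(relu(conv2d_valid(patch, vertical_edge)))  # [[0, 0, 27], [0, 0, 27]]
```

Only the dark-to-bright edge survives the ReLU; a kernel with flipped signs would be needed to respond to the opposite edge, which is one reason CNN layers learn many kernels in parallel.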

Computer Vision techniques, powered by deep learning, allow the Platform to 'see' and interpret visual data from satellites. This capability is crucial for applications such as monitoring deforestation, urban development, and agricultural productivity. Advanced computer vision algorithms can detect subtle changes in landscapes over time, identify specific objects or structures, and even estimate quantitative parameters like crop yield or building height from imagery.
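A long-standing baseline for change detection, which deep-learning detectors are often compared against, is to difference two co-registered images of the same area and threshold the result. The sketch below applies that idea to hypothetical NDVI grids from two dates; it assumes the images are already aligned and radiometrically comparable, and the 0.3 threshold is illustrative.

```python
from typing import List

Grid = List[List[float]]


def change_mask(before: Grid, after: Grid, threshold: float) -> List[List[bool]]:
    """Flag pixels whose absolute change between two co-registered dates exceeds a threshold."""
    return [
        [abs(a - b) > threshold for b, a in zip(row_before, row_after)]
        for row_before, row_after in zip(before, after)
    ]


def changed_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as changed."""
    cells = [flag for row in mask for flag in row]
    return sum(cells) / len(cells)


# Hypothetical NDVI grids for the same area on two dates (e.g. before and after clearing).
ndvi_2020 = [[0.80, 0.82], [0.78, 0.81]]
ndvi_2024 = [[0.79, 0.20], [0.15, 0.80]]
mask = change_mask(ndvi_2020, ndvi_2024, threshold=0.3)
print(mask, changed_fraction(mask))  # [[False, True], [True, False]] 0.5
```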

While perhaps less obvious, Natural Language Processing (NLP) plays a vital role in the Planet Information Platform. NLP algorithms help in processing and analysing textual data from various sources, including satellite metadata, scientific reports, and social media. This capability allows for the integration of human-generated information with satellite data, providing context and enriching the overall analysis.
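A minimal form of this integration is matching place names in text against a gazetteer so that a report can be linked to a location on the map. Production systems would use named-entity recognition and place-name disambiguation instead; the two-entry gazetteer, its approximate coordinates, and the function name `geotag` are assumptions for illustration.

```python
import re
from typing import Dict, List, Tuple

Coords = Tuple[float, float]

# Hypothetical gazetteer mapping place names to approximate (latitude, longitude).
GAZETTEER: Dict[str, Coords] = {
    "Valencia": (39.47, -0.38),
    "Lisbon": (38.72, -9.14),
}


def geotag(text: str, gazetteer: Dict[str, Coords] = GAZETTEER) -> List[Tuple[str, Coords]]:
    """Return gazetteer entries mentioned in the text as whole words, in order of appearance."""
    hits = []
    for name, coords in gazetteer.items():
        match = re.search(r"\b" + re.escape(name) + r"\b", text)
        if match:
            hits.append((match.start(), name, coords))
    return [(name, coords) for _, name, coords in sorted(hits)]


report = "Residents report flooding near Valencia; roads to Lisbon remain open."
print(geotag(report))
```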

Reinforcement Learning, though still in its early stages of application in Earth observation, shows promise for optimising satellite operations and data collection strategies. These algorithms can learn to make decisions about where to point sensors or how to prioritise data collection based on the potential value of the information gathered.
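The tasking idea can be sketched with a multi-armed bandit, the simplest reinforcement-learning setting, in which an agent repeatedly chooses an action and learns from the reward it receives. Here an epsilon-greedy policy decides which of three hypothetical regions to image on each pass, and the reward is whether the acquisition turned out cloud-free. The region names and clear-sky probabilities are invented for the simulation.

```python
import random
from typing import Callable, Dict, List

RewardFn = Callable[[str, random.Random], float]


def epsilon_greedy_tasking(regions: List[str], reward_fn: RewardFn, rounds: int,
                           epsilon: float = 0.1, seed: int = 0) -> Dict[str, float]:
    """Choose one region to image per pass with an epsilon-greedy bandit.

    With probability epsilon a random region is explored; otherwise the region with
    the highest average reward observed so far is exploited.
    Returns the learned average reward per region.
    """
    rng = random.Random(seed)
    counts = {r: 0 for r in regions}
    means = {r: 0.0 for r in regions}
    for _ in range(rounds):
        if rng.random() < epsilon:
            region = rng.choice(regions)  # explore
        else:
            region = max(regions, key=lambda r: means[r])  # exploit best estimate so far
        reward = reward_fn(region, rng)
        counts[region] += 1
        means[region] += (reward - means[region]) / counts[region]  # running average
    return means


# Hypothetical probability that an acquisition over each region is cloud-free.
CLEAR_SKY_PROB = {"coast": 0.3, "desert": 0.9, "rainforest": 0.1}


def simulated_reward(region: str, rng: random.Random) -> float:
    """Reward 1.0 for a cloud-free (usable) image, 0.0 otherwise."""
    return 1.0 if rng.random() < CLEAR_SKY_PROB[region] else 0.0


estimates = epsilon_greedy_tasking(list(CLEAR_SKY_PROB), simulated_reward, rounds=2000)
print({region: round(value, 2) for region, value in estimates.items()})
```

With these settings the learned averages should single out the desert region as the most reliable source of usable images, while the occasional random choices keep the estimates for the other regions up to date.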

Generative AI, including techniques like Generative Adversarial Networks (GANs), represents the cutting edge of AI applications in the Platform. These models can generate synthetic satellite imagery, fill in gaps in data coverage, and even predict future scenarios. For instance, GANs can be used to enhance the resolution of low-quality satellite images or to simulate the potential impacts of climate change on landscapes. Because generated pixels are statistical estimates rather than measurements, such outputs need to be clearly labelled and validated against real observations.

Generative AI is not just about creating data; it's about understanding the underlying patterns and structures in our world. It allows us to see beyond what our satellites can directly observe, opening up new frontiers in predictive modelling and scenario planning.

The application of these ML and AI technologies within the Planet Information Platform faces several challenges and considerations:

- Data Quality and Quantity: AI models require vast amounts of high-quality, labelled data for training. Ensuring consistent data quality across diverse satellite sources and developing efficient labelling methods are ongoing challenges.

- Interpretability and Explainability: As AI models become more complex, ensuring their decisions are interpretable and explainable to policymakers and the public becomes crucial, especially for applications with significant societal impact.

- Computational Resources: Processing global-scale satellite data with advanced AI models requires substantial computational power. Optimising algorithms and leveraging cloud computing infrastructure are key to making these technologies scalable and accessible.

- Ethical Considerations: The use of AI for global monitoring raises important ethical questions about privacy, surveillance, and the potential for misuse. Developing robust governance frameworks and ethical guidelines is essential.

Despite these challenges, the integration of ML and AI into the Planet Information Platform offers unprecedented opportunities for global monitoring, environmental protection, and sustainable development. As these technologies continue to evolve, we can expect even more sophisticated applications, from real-time global change detection to AI-driven predictive models that can forecast environmental trends and support evidence-based policymaking.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution and dependencies of ML/AI technologies within the Planet Information Platform ecosystem]](https://images.wardleymaps.ai/wardleymaps/map_49b730b9-d7f8-4512-93d3-0857b877fbd9.png)

In conclusion, Machine Learning and AI are not merely tools within the Planet Information Platform; they are transformative technologies that fundamentally reshape our ability to observe, understand, and manage our planet. As we continue to push the boundaries of these technologies, we move closer to realising the vision of a truly comprehensive, real-time global information system that can help address some of the most pressing challenges facing our world today.

Big Data Analytics

Big Data Analytics forms a cornerstone of the Planet Information Platform, enabling the processing and interpretation of vast quantities of Earth observation data. As we enter a new era of planetary observation, the ability to harness and extract meaningful insights from the deluge of satellite imagery and sensor data has become paramount. This section explores the critical role of Big Data Analytics in transforming raw satellite data into actionable intelligence for global decision-making.

The scale of data generated by Earth observation satellites is staggering. A single high-resolution satellite can produce terabytes of data daily, and with the proliferation of satellite constellations, we are now dealing with petabytes of data on a regular basis. Traditional data processing methods are simply inadequate for handling this volume, velocity, and variety of information. Big Data Analytics provides the tools and techniques necessary to process, analyse, and derive insights from these massive datasets in a timely and efficient manner.
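The "terabytes per day" figure follows from simple arithmetic on a sensor's geometry. The function below gives a back-of-envelope estimate for a push-broom imager. The parameter values in the example are hypothetical. The estimate also ignores overheads such as metadata, calibration data and differing band resolutions.

```python
def daily_data_volume_tb(swath_km, gsd_m, n_bands, bits_per_sample,
                         imaged_track_km_per_day, compression_ratio=1.0):
    """Back-of-envelope raw data volume of a push-broom imager, in terabytes (1e12 bytes) per day."""
    pixels_across_track = swath_km * 1000 / gsd_m
    lines_per_day = imaged_track_km_per_day * 1000 / gsd_m  # one image line per GSD of ground track
    bits_per_day = pixels_across_track * lines_per_day * n_bands * bits_per_sample
    return bits_per_day / 8 / compression_ratio / 1e12

# Hypothetical sensor: 15 km swath, 0.5 m GSD, 4 bands, 12-bit samples,
# imaging 20,000 km of ground track per day, before any compression.
print(f"{daily_data_volume_tb(15, 0.5, 4, 12, 20_000):.1f} TB/day")
```

With these assumed inputs the function returns 7.2 TB per day. That is the same order of magnitude as the figure quoted above.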

The challenge is not just about storing and processing vast amounts of data, but about extracting meaningful patterns and insights that can drive action on a global scale.

Key components of Big Data Analytics in the context of the Planet Information Platform include:

- Distributed Computing Frameworks: Technologies like Apache Hadoop and Apache Spark enable the processing of large datasets across clusters of computers, allowing for parallel processing and significantly reducing computation time.

- Stream Processing: Real-time analysis of data streams from satellites and ground-based sensors using platforms such as Apache Kafka or Apache Flink, enabling rapid response to emerging events or changes.

- Data Lakes and Cloud Storage: Scalable storage solutions that can accommodate the ever-growing volume of Earth observation data, providing flexible access and integration capabilities.

- Advanced Analytics and Machine Learning: Utilising sophisticated algorithms to detect patterns, anomalies, and trends in satellite imagery and sensor data, often leveraging GPU acceleration for complex computations.

- Visualisation Tools: Powerful software for rendering and interacting with geospatial data, allowing users to explore and understand complex datasets intuitively.
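Distributed frameworks such as Hadoop and Spark rely on a split-apply-combine pattern:

- a large raster is cut into independent tiles,
- each tile is processed in parallel (map),
- the partial results are merged (reduce).

The sketch below shows that pattern on a single machine, using Python's standard `concurrent.futures` thread pool. It is not Spark's API. The brightness threshold standing in for a cloud mask is a hypothetical simplification. For CPU-bound pure-Python work, threads give little speed-up because of the GIL, so real deployments would use processes or a cluster framework.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_tiles(width, height, tile):
    """Yield (x0, y0, x1, y1) windows that together cover a width x height raster."""
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            yield x0, y0, min(x0 + tile, width), min(y0 + tile, height)

def count_bright_pixels(raster, window, threshold):
    """Map step: count pixels above a brightness threshold inside one tile."""
    x0, y0, x1, y1 = window
    return sum(1 for row in raster[y0:y1] for value in row[x0:x1] if value > threshold)

def bright_fraction(raster, tile=256, threshold=0.6, workers=4):
    """Fraction of pixels above `threshold` (a crude, hypothetical cloud proxy)."""
    height, width = len(raster), len(raster[0])
    windows = list(split_into_tiles(width, height, tile))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Tiles are independent, so they can be processed in any order and in parallel.
        partial_counts = pool.map(lambda w: count_bright_pixels(raster, w, threshold), windows)
        total = sum(partial_counts)  # reduce step: combine per-tile results
    return total / (width * height)

# Synthetic 600 x 500 scene whose left quarter is bright.
scene = [[1.0 if x < 150 else 0.0 for x in range(600)] for _ in range(500)]
print(bright_fraction(scene))
```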

The application of Big Data Analytics in Earth observation has revolutionised our ability to monitor and understand global phenomena. For instance, in the realm of deforestation monitoring, we can now process daily satellite imagery of vast forest regions, using change detection algorithms to identify areas of concern almost in real-time. This capability has transformed the way governments and conservation organisations approach forest management and protection.
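One simple change-detection technique for the deforestation case is to difference a vegetation index between two dates. The sketch below computes the Normalised Difference Vegetation Index (NDVI) from red and near-infrared reflectance. It then flags pixels whose NDVI dropped by more than a threshold. The 0.3 threshold and the sample reflectances are illustrative assumptions. Operational systems typically add cloud masking, co-registration and more robust statistics.

```python
import numpy as np

def ndvi(red, nir):
    """Normalised Difference Vegetation Index, (NIR - Red) / (NIR + Red), per pixel."""
    red = np.asarray(red, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    denom = nir + red
    safe = np.where(denom > 0, denom, 1.0)  # avoid dividing by zero on empty pixels
    return np.where(denom > 0, (nir - red) / safe, 0.0)

def flag_vegetation_loss(red_t0, nir_t0, red_t1, nir_t1, drop=0.3):
    """Boolean mask of pixels whose NDVI fell by more than `drop` between two dates."""
    return (ndvi(red_t0, nir_t0) - ndvi(red_t1, nir_t1)) > drop

# Illustrative 2 x 2 reflectances: only the top-left pixel is cleared at t1.
red_t0 = np.array([[0.05, 0.05], [0.05, 0.05]])
nir_t0 = np.array([[0.45, 0.45], [0.45, 0.45]])
red_t1 = np.array([[0.15, 0.05], [0.05, 0.05]])
nir_t1 = np.array([[0.20, 0.45], [0.45, 0.45]])
print(flag_vegetation_loss(red_t0, nir_t0, red_t1, nir_t1))
```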

Similarly, in urban planning, Big Data Analytics enables the integration of multiple data sources – from high-resolution satellite imagery to IoT sensor networks – to create comprehensive models of urban environments. These models can be used to optimise traffic flow, plan infrastructure development, and improve energy efficiency on a city-wide scale.

The integration of Big Data Analytics with Earth observation technologies is not just enhancing our understanding of the planet; it's fundamentally changing how we interact with and manage our global resources.

However, the implementation of Big Data Analytics in the Planet Information Platform is not without challenges. Key considerations include:

- Data Quality and Consistency: Ensuring the accuracy and reliability of insights derived from diverse data sources with varying levels of quality and resolution.

- Scalability: Designing systems that can grow to accommodate the exponential increase in data volume and complexity expected in the coming years.

- Interoperability: Developing standards and protocols for data sharing and integration across different platforms and organisations.

- Privacy and Security: Balancing the need for detailed Earth observation data with concerns about privacy and potential misuse of high-resolution imagery.

- Skill Gap: Addressing the shortage of professionals with the necessary expertise in both geospatial technologies and advanced data analytics.

As we look to the future, the role of Big Data Analytics in the Planet Information Platform will only grow in importance. Emerging technologies such as edge computing and 5G networks will enable more distributed and real-time analytics capabilities, pushing the boundaries of what's possible in global monitoring and decision-making.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution of Big Data Analytics technologies in the context of Earth observation and their strategic importance to the Planet Information Platform]](https://images.wardleymaps.ai/wardleymaps/map_2131cbe3-3464-4a41-a523-0f0f99765b9f.png)

In conclusion, Big Data Analytics serves as a crucial enabler for the Planet Information Platform, transforming the vast quantities of Earth observation data into actionable insights. As we continue to refine and expand our analytical capabilities, we move closer to realising the vision of a truly comprehensive and responsive global information system, capable of addressing the most pressing challenges facing our planet.

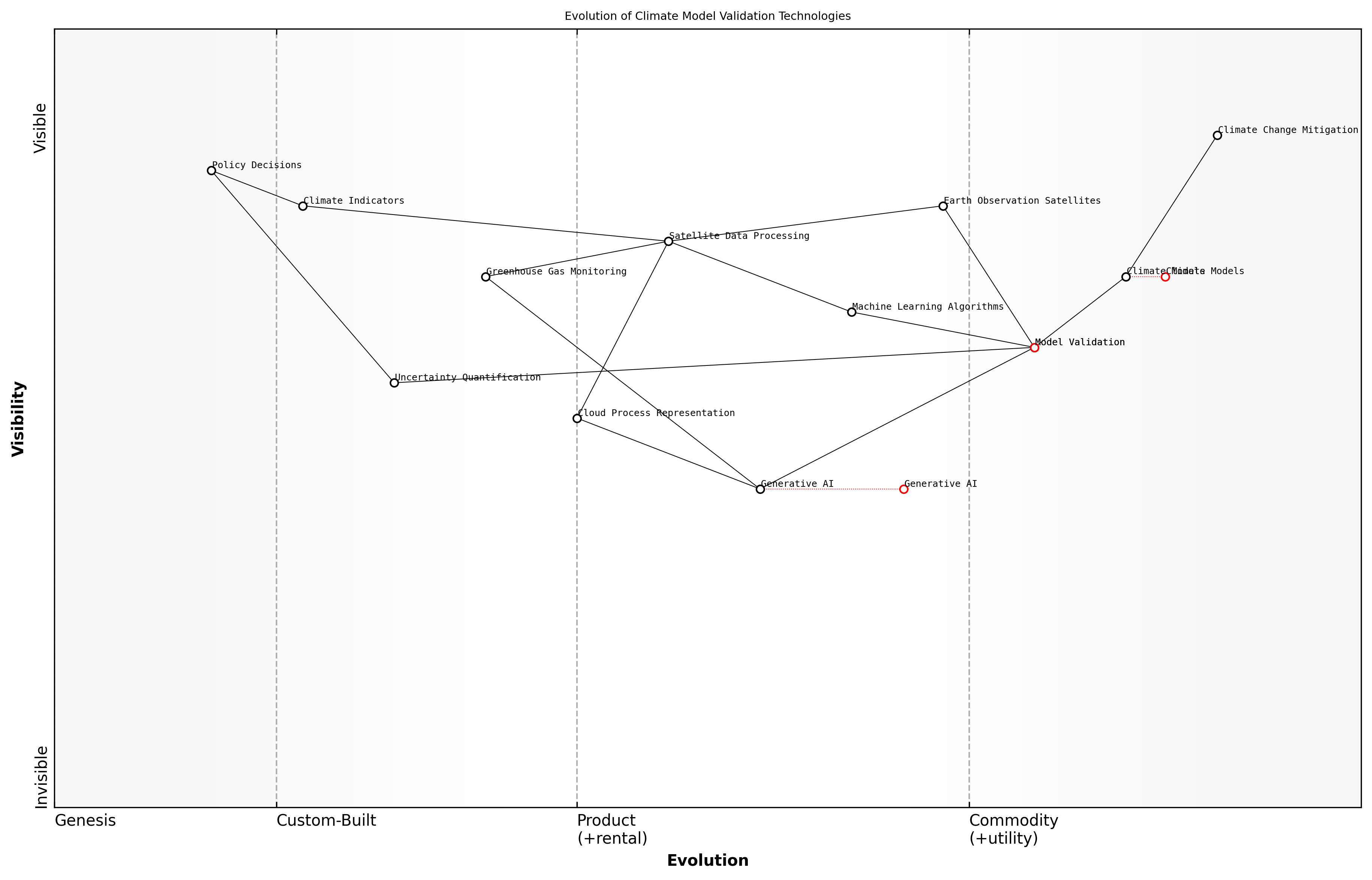

Generative AI

Generative AI represents a transformative force in the realm of Earth observation and the Planet Information Platform. As we embark on this new era of planetary observation, generative AI emerges as a pivotal technology, offering unprecedented capabilities in data analysis, image enhancement, and predictive modelling. This section explores the fundamental concepts, applications, and implications of generative AI within the context of global Earth monitoring systems.

At its core, generative AI refers to artificial intelligence systems capable of creating new, original content based on patterns and information learned from existing data. In the context of Earth observation, this technology opens up a wealth of possibilities for enhancing our understanding of the planet and addressing complex global challenges.

Generative AI is not just a tool; it's a paradigm shift in how we perceive and interact with Earth observation data. It allows us to see beyond the limitations of our current sensing capabilities, filling gaps and creating new insights that were previously unattainable.

The applications of generative AI in Earth observation can be broadly categorised into three main areas:

- Image Enhancement and Super-resolution

- Data Interpolation and Gap Filling

- Predictive Modelling and Scenario Generation

Image Enhancement and Super-resolution: One of the most promising applications of generative AI in Earth observation is the ability to enhance image quality and resolution. Traditional satellite imagery often suffers from limitations in spatial resolution, cloud cover, or atmospheric distortions. Generative AI models, particularly Generative Adversarial Networks (GANs), can be trained to upscale low-resolution images or reconstruct missing details, effectively increasing the usable data from existing Earth observation systems.

For instance, a GAN trained on high-resolution satellite imagery can learn to generate realistic details when presented with a lower-resolution input. This capability is particularly valuable for historical satellite data. It allows researchers to 'upgrade' older imagery towards the quality of modern sensors, extending the temporal range of high-quality Earth observation data. Because the added details are learned estimates rather than measurements, such enhanced imagery needs validation before it is used for quantitative analysis.

Data Interpolation and Gap Filling: Earth observation data often suffers from gaps due to various factors such as cloud cover, sensor malfunctions, or orbital limitations. Generative AI can be employed to fill these gaps by learning the underlying patterns and structures in the available data. This approach goes beyond simple interpolation, as the AI can generate plausible and context-aware data for missing regions.
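Simple interpolation, the baseline that generative approaches aim to improve on, fits in a few lines. The sketch below fills cloud-masked gaps (stored as NaN) in a single pixel's time series by linear interpolation in time. A generative model would replace the `np.interp` call with a learned model conditioned on neighbouring pixels, other sensors and seasonal patterns. The sample values are hypothetical.

```python
import numpy as np

def fill_gaps_linear(times, values):
    """Fill NaN gaps in one pixel's time series by linear interpolation in time.

    times must be increasing. Gaps before the first or after the last valid
    observation are set to that nearest valid value (np.interp's edge behaviour).
    """
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    valid = ~np.isnan(values)
    filled = values.copy()
    if valid.sum() < 2:
        return filled  # too few observations to interpolate; leave gaps as NaN
    filled[~valid] = np.interp(times[~valid], times[valid], values[valid])
    return filled

# Hypothetical NDVI series sampled every 10 days, with two cloud-masked dates.
print(fill_gaps_linear([0, 10, 20, 30], [0.6, np.nan, np.nan, 0.9]))
```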

The ability of generative AI to fill data gaps is not just about completing the picture; it's about maintaining the continuity of our planetary monitoring systems. This ensures that decision-makers have access to comprehensive, uninterrupted data streams for critical applications such as climate modelling or disaster response.

Predictive Modelling and Scenario Generation: Perhaps the most powerful application of generative AI in the Planet Information Platform is its capacity for predictive modelling and scenario generation. By learning from historical data and current trends, generative models can simulate plausible future scenarios in fine spatial and temporal detail. Their reliability, however, is bounded by the quality and coverage of the data they were trained on. This capability is invaluable for climate change studies, urban planning, and environmental impact assessments.

For example, a generative model trained on decades of land use data, climate patterns, and human activity can generate detailed projections of how a specific region might evolve under various climate change scenarios or policy interventions. These AI-generated scenarios provide policymakers and researchers with a powerful tool for exploring potential futures and informing decision-making processes.

However, the integration of generative AI into Earth observation systems also presents challenges and ethical considerations that must be carefully addressed:

- Data Integrity and Trustworthiness: As generative AI creates new data, ensuring the integrity and trustworthiness of this synthetic information becomes crucial. Robust validation mechanisms and clear communication of AI-generated content are essential.

- Bias and Representation: Generative models can inadvertently perpetuate or amplify biases present in training data. Ensuring diverse and representative training datasets is critical for fair and accurate Earth observation applications.

- Computational Resources: Training and deploying large-scale generative AI models requires significant computational resources, which can have environmental implications. Balancing the benefits of these technologies with their energy consumption is an important consideration.

- Interpretability and Explainability: As generative AI models become more complex, ensuring their decisions and outputs are interpretable and explainable to human users becomes increasingly challenging but essential for building trust and accountability.

Despite these challenges, the potential of generative AI to revolutionise Earth observation and the Planet Information Platform is immense. As we continue to refine these technologies and address their ethical implications, generative AI promises to provide us with unprecedented insights into our planet's systems, enabling more informed decision-making and effective responses to global challenges.

Generative AI is not just enhancing our view of the Earth; it's expanding our capacity to understand, predict, and positively shape the future of our planet. As we harness this technology responsibly, we open new frontiers in planetary stewardship and sustainable development.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution and strategic positioning of generative AI technologies within the Earth observation value chain]](https://images.wardleymaps.ai/wardleymaps/map_cab7e236-f64d-419d-a5a3-9976fb248cb2.png)

As we move forward, the integration of generative AI into the Planet Information Platform will undoubtedly continue to evolve, offering new possibilities and challenges. The key to harnessing its full potential lies in fostering interdisciplinary collaboration, maintaining ethical vigilance, and continuously aligning technological advancements with the broader goals of sustainable development and global environmental stewardship.

Fundamentals of Earth Observation Technologies

Satellite Systems and Sensors

Types of Earth Observation Satellites

Earth observation satellites are the cornerstone of the Planet Information Platform, providing the raw data that fuels our understanding of global phenomena. As we delve into the various types of these satellites, it's crucial to recognise their pivotal role in creating a comprehensive, real-time view of our planet. This section explores the diverse array of Earth observation satellites, their unique capabilities, and how they contribute to the broader goals of planetary monitoring and analysis.

Earth observation satellites can be broadly categorised based on their orbital characteristics, sensor types, and primary applications. Understanding these categories is essential for leveraging the full potential of satellite data in the Planet Information Platform.

- Low Earth Orbit (LEO) Satellites

- Geostationary Satellites

- Medium Earth Orbit (MEO) Satellites

- Polar Orbiting Satellites

- Sun-Synchronous Orbit (SSO) Satellites

Low Earth Orbit (LEO) Satellites operate at altitudes between 160 and 2,000 kilometres above the Earth's surface. These satellites are particularly valuable for high-resolution imagery and detailed Earth observation due to their proximity to the planet. LEO satellites are often used for applications requiring frequent revisits and high spatial resolution, such as urban planning, precision agriculture, and disaster response.

LEO satellites have revolutionised our ability to monitor Earth's systems with unprecedented detail and frequency. Their low altitude allows for the capture of high-resolution imagery that is crucial for a wide range of applications, from environmental monitoring to infrastructure assessment.

Geostationary Satellites, positioned approximately 35,786 kilometres above the Earth's equator, maintain a fixed position relative to the Earth's surface. These satellites are invaluable for continuous monitoring of large geographical areas, making them ideal for weather forecasting, climate studies, and telecommunications. Their ability to provide constant coverage of a specific region is particularly useful for tracking dynamic phenomena such as severe weather events or large-scale environmental changes.

Medium Earth Orbit (MEO) Satellites occupy the region between LEO and geostationary orbits, typically at altitudes of 2,000 to 35,786 kilometres. These satellites offer a balance between the high-resolution capabilities of LEO satellites and the broad coverage of geostationary satellites. MEO satellites are commonly used for navigation systems like GPS and for communication networks.

Polar Orbiting Satellites pass over the Earth's polar regions on each revolution, providing global coverage as the Earth rotates beneath them. These satellites are crucial for applications requiring consistent, global data collection, such as climate monitoring, sea ice mapping, and atmospheric studies. Their ability to observe nearly every part of the Earth's surface makes them indispensable for comprehensive planetary monitoring.

Polar orbiting satellites are the workhorses of global Earth observation. Their unique orbital characteristics allow for consistent, worldwide data collection, which is essential for understanding large-scale environmental processes and climate patterns.

Sun-Synchronous Orbit (SSO) Satellites are a special type of polar-orbiting satellite that passes over any given point on the Earth's surface at the same local solar time. This consistent lighting condition is particularly valuable for long-term monitoring and change detection, as it minimises variations in illumination between observations. SSO satellites are extensively used for environmental monitoring, land-use mapping, and agricultural applications.
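The same-local-solar-time property comes from Earth's equatorial bulge. The J2 term of the gravity field makes an inclined orbit's plane precess. A sun-synchronous orbit uses the inclination at which that precession matches the Sun's apparent motion of about one degree per day. The sketch below applies the standard first-order J2 formula for a circular orbit. It neglects higher-order gravity terms and atmospheric drag.

```python
import math

MU_EARTH = 398_600.4418  # km^3/s^2, Earth's standard gravitational parameter
R_EARTH = 6_378.137      # km, equatorial radius
J2 = 1.08263e-3          # Earth's oblateness coefficient
# Required nodal precession: one full turn (2*pi rad) per tropical year, eastward.
OMEGA_DOT_SSO = 2 * math.pi / (365.2422 * 86_400)  # rad/s

def sun_synchronous_inclination_deg(altitude_km):
    """Inclination (degrees) at which a circular orbit's J2-driven nodal precession
    matches the Sun's apparent motion, making the orbit sun-synchronous."""
    a = R_EARTH + altitude_km
    n = math.sqrt(MU_EARTH / a**3)  # mean motion, rad/s
    # First-order J2 nodal precession for a circular orbit:
    #   dOmega/dt = -1.5 * J2 * (R/a)^2 * n * cos(i)
    cos_i = -OMEGA_DOT_SSO / (1.5 * J2 * (R_EARTH / a) ** 2 * n)
    # math.acos raises ValueError if |cos_i| > 1, i.e. when the altitude is too high
    # for any sun-synchronous orbit to exist.
    return math.degrees(math.acos(cos_i))

for altitude in (500, 700, 800):
    print(altitude, "km:", round(sun_synchronous_inclination_deg(altitude), 2), "deg")
```

The resulting inclinations are slightly greater than 90 degrees: the orbit is retrograde and near-polar, which is why SSO satellites are described as a special type of polar-orbiting satellite.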

In addition to these orbital classifications, Earth observation satellites can be further categorised based on their primary sensor types and applications:

- Optical Imaging Satellites

- Radar Satellites

- Hyperspectral Imaging Satellites

- Atmospheric and Climate Monitoring Satellites

- Ocean Observation Satellites

Optical Imaging Satellites capture visible and near-infrared light reflected from the Earth's surface, providing high-resolution imagery similar to traditional aerial photography. These satellites are essential for applications such as land-use mapping, urban planning, and natural resource management. Advanced optical satellites can achieve sub-metre resolution, enabling detailed analysis of small-scale features and changes.

Radar Satellites, particularly those equipped with Synthetic Aperture Radar (SAR), use microwave signals to image the Earth's surface. These satellites can operate day or night and can penetrate cloud cover, making them invaluable for applications in regions with frequent cloud coverage or for monitoring nighttime activities. SAR satellites are particularly useful for monitoring sea ice, oil spills, and subtle ground deformations associated with geological processes.

Hyperspectral Imaging Satellites capture data across a wide range of the electromagnetic spectrum, often in hundreds of narrow spectral bands. This detailed spectral information allows for precise identification and analysis of Earth surface materials, including minerals, vegetation types, and water quality parameters. Hyperspectral data is particularly valuable for applications in geology, agriculture, and environmental monitoring.

The advent of hyperspectral imaging satellites has opened up new frontiers in Earth observation. Their ability to capture detailed spectral signatures allows us to 'see' the unseen, revealing information about our planet that was previously inaccessible through traditional remote sensing methods.

Atmospheric and Climate Monitoring Satellites are specifically designed to measure various atmospheric parameters such as temperature, humidity, greenhouse gas concentrations, and aerosol distributions. These satellites play a crucial role in climate research, weather forecasting, and monitoring air quality. Examples include NASA's Orbiting Carbon Observatory (OCO) series and ESA's Sentinel-5P, which focuses on atmospheric composition.

Ocean Observation Satellites are tailored to monitor oceanic conditions, including sea surface temperature, ocean colour, sea level, and wave heights. These satellites are essential for understanding ocean dynamics, monitoring marine ecosystems, and supporting maritime activities. Missions like the Jason series for sea level monitoring and Sentinel-3 for ocean colour and sea surface temperature have significantly advanced our understanding of Earth's oceans.

The diversity of Earth observation satellites reflects the complexity of our planet and the multifaceted approach required to monitor and understand it comprehensively. As we continue to develop and launch new satellite missions, the synergy between different types of Earth observation satellites becomes increasingly important. The Planet Information Platform leverages this diverse array of satellite data, combining it with advanced AI and machine learning algorithms to extract meaningful insights and create a holistic view of our changing planet.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution and strategic importance of different types of Earth observation satellites in the context of the Planet Information Platform]](https://images.wardleymaps.ai/wardleymaps/map_cb5e99cb-4753-470d-adcd-849261d15956.png)

As we move forward, the integration of data from these various satellite types, combined with ground-based observations and other data sources, will be crucial in addressing global challenges such as climate change, resource management, and disaster response. The Planet Information Platform serves as the nexus for this integration, transforming raw satellite data into actionable intelligence for decision-makers across the public and private sectors.

Sensor Technologies and Capabilities

In the realm of Earth observation satellites, sensor technologies and capabilities form the cornerstone of our ability to gather comprehensive data about our planet. These advanced instruments are the eyes and ears of the Planet Information Platform, enabling us to capture a wide array of information from the vantage point of space. As we delve into this critical subsection, we'll explore the diverse range of sensors employed in satellite systems, their unique capabilities, and how they contribute to our understanding of Earth's complex systems.

Earth observation satellites employ a variety of sensor types, each designed to capture specific types of data. These sensors can be broadly categorised into two main groups: passive and active sensors.

- Passive Sensors: These sensors detect natural radiation emitted or reflected by the Earth and its atmosphere. Examples include optical sensors and radiometers.

- Active Sensors: These sensors emit their own energy and measure the portion returned (reflected or backscattered) by the target. Examples include radar and LiDAR systems.

Let's examine some of the key sensor technologies in more detail:

- Optical Sensors: These are perhaps the most familiar type of satellite sensors. They capture visible light reflected from the Earth's surface, much like a digital camera. However, satellite optical sensors are far more sophisticated, often capturing data across multiple spectral bands.

  - Panchromatic sensors: Capture high-resolution images in a single, broad wavelength band

  - Multispectral sensors: Capture data in several spectral bands, typically 3-10

  - Hyperspectral sensors: Capture data in hundreds of narrow spectral bands, allowing for detailed spectral analysis

- Synthetic Aperture Radar (SAR): This active sensor technology uses microwave signals to create high-resolution images of the Earth's surface. SAR has the unique ability to penetrate cloud cover and operate in darkness, making it invaluable for continuous monitoring.

- LiDAR (Light Detection and Ranging): While more commonly used in airborne platforms, LiDAR is increasingly being deployed on satellites. It uses laser pulses to measure distances and create detailed 3D maps of the Earth's surface.

- Thermal Infrared Sensors: These sensors detect heat emitted from the Earth's surface, allowing for temperature mapping and thermal anomaly detection. They are crucial for applications such as urban heat island monitoring and volcanic activity tracking.

- Atmospheric Sensors: A range of specialised sensors are used to measure various atmospheric parameters, including:

  - Spectrometers for measuring atmospheric composition

  - Radiometers for detecting atmospheric radiation

  - Scatterometers for measuring wind speed and direction over oceans
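The active sensors above, SAR and LiDAR, share a simple ranging principle: distance is half the pulse's round-trip travel time multiplied by the speed of light. The sketch below encodes that relation together with two textbook idealisations for SAR:

- slant-range resolution, set by the signal bandwidth;
- stripmap azimuth resolution, roughly half the antenna length.

Real systems differ because of window weighting, processing choices and projection from slant range to ground range. The bandwidth and antenna length in the example are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def range_from_round_trip(delay_s):
    """Distance to the target from the two-way travel time of a radar or laser pulse."""
    return C * delay_s / 2

def slant_range_resolution_m(bandwidth_hz):
    """Idealised slant-range resolution of a pulse-compressed radar: c / (2B)."""
    return C / (2 * bandwidth_hz)

def stripmap_azimuth_resolution_m(antenna_length_m):
    """Idealised stripmap SAR azimuth resolution: about half the antenna length,
    independent of range, which is what synthetic aperture processing buys."""
    return antenna_length_m / 2

# Hypothetical radar: 100 MHz chirp bandwidth, 10 m antenna.
print(f"slant-range resolution: {slant_range_resolution_m(100e6):.2f} m")
print(f"azimuth resolution:     {stripmap_azimuth_resolution_m(10.0):.1f} m")
```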

The capabilities of these sensors are continually evolving, driven by advancements in technology and the growing demand for more detailed and frequent Earth observation data. Some key trends in sensor capabilities include:

- Improved spatial resolution: Modern optical sensors can achieve sub-metre resolution, allowing for incredibly detailed imaging of the Earth's surface.

- Enhanced spectral resolution: Hyperspectral sensors can now capture data in hundreds of narrow bands, enabling precise material identification and analysis.

- Increased temporal resolution: Constellations of small satellites are providing more frequent revisit times, allowing for near-real-time monitoring of rapidly changing phenomena.

- Greater radiometric sensitivity: Advances in sensor technology are allowing for the detection of ever-fainter signals, improving our ability to measure subtle changes in the Earth system.
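The Spectral Angle Mapper shows how finer spectral resolution supports material identification. It compares the shape of a pixel's spectrum with reference spectra, using the angle between them as vectors. The sketch below is a minimal version. The five-band library spectra and the 0.1 radian match threshold are illustrative assumptions, not measured signatures.

```python
import numpy as np

def spectral_angle(pixel, reference):
    """Angle in radians between two spectra treated as vectors.

    Small angles mean similar spectral shape; scaling a spectrum by a constant
    (e.g. brighter illumination) does not change the angle.
    """
    p = np.asarray(pixel, dtype=float)
    r = np.asarray(reference, dtype=float)
    cos_theta = p @ r / (np.linalg.norm(p) * np.linalg.norm(r))
    return float(np.arccos(np.clip(cos_theta, -1.0, 1.0)))  # clip guards rounding errors

def classify_sam(pixel, library, max_angle=0.1):
    """Name of the library spectrum with the smallest angle, or None if none is within max_angle."""
    name, angle = min(((n, spectral_angle(pixel, s)) for n, s in library.items()),
                      key=lambda item: item[1])
    return name if angle <= max_angle else None

# Illustrative five-band reflectance spectra (not measured library values).
LIBRARY = {
    "vegetation": [0.04, 0.08, 0.05, 0.45, 0.30],
    "water": [0.06, 0.05, 0.03, 0.01, 0.005],
}
# A shaded vegetation pixel: same spectral shape, half the brightness.
print(classify_sam([0.02, 0.04, 0.025, 0.225, 0.15], LIBRARY))
```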

The rapid evolution of satellite sensor technologies is revolutionising our ability to observe and understand our planet. We are now able to detect and measure phenomena that were previously invisible to us, opening up new frontiers in Earth science and environmental monitoring.

The integration of these diverse sensor technologies within the Planet Information Platform presents both opportunities and challenges. On one hand, the wealth of data from multiple sensor types allows for unprecedented insights into Earth's systems. On the other hand, it requires sophisticated data fusion techniques and powerful computing resources to effectively combine and analyse these diverse data streams.

As we look to the future, emerging sensor technologies promise to further expand our observational capabilities. Quantum sensors, for instance, may offer unprecedented sensitivity and precision in measuring gravity fields, magnetic fields, and other fundamental properties of the Earth system.

In conclusion, the diverse array of sensor technologies and their ever-expanding capabilities form the foundation of the Planet Information Platform. By harnessing these technologies and integrating their data streams, we are building a comprehensive, near-real-time understanding of our planet. This knowledge is crucial for addressing global challenges, from climate change and environmental degradation to disaster response and sustainable development.

The true power of the Planet Information Platform lies not just in the individual capabilities of each sensor, but in our ability to integrate and analyse data from multiple sensors to gain a holistic view of the Earth system. This synergistic approach is key to unlocking new insights and driving informed decision-making on a global scale.

Orbital Considerations and Coverage

In the context of the Planet Information Platform, understanding orbital considerations and coverage is crucial for optimising the collection of Earth observation data. This subsection delves into the intricate relationship between satellite orbits, Earth's surface coverage, and the temporal and spatial resolution of data acquisition. As we strive to map and monitor our planet with unprecedented detail, the strategic placement and movement of satellites become paramount in achieving comprehensive and timely observations.

Satellite orbits are fundamentally categorised based on their altitude, inclination, and eccentricity. Each type of orbit offers distinct advantages and limitations for Earth observation missions, influencing factors such as revisit time, swath width, and spatial resolution. Let's explore the primary orbital configurations and their implications for global coverage:

- Low Earth Orbit (LEO): Typically ranging from 160 to 2,000 km above Earth's surface, LEO satellites offer high spatial resolution and low latency. They are ideal for detailed mapping and rapid response applications but require larger constellations for frequent global coverage.

- Medium Earth Orbit (MEO): Positioned between 2,000 and 35,786 km, MEO satellites provide a balance between coverage area and resolution. They are often used for navigation systems and some Earth observation missions requiring intermediate revisit times.

- Geostationary Orbit (GEO): At approximately 35,786 km above the equator, GEO satellites maintain a fixed position relative to Earth's surface. While offering continuous coverage of a specific region, they are limited in spatial resolution and polar coverage.

- Sun-Synchronous Orbit (SSO): A special type of LEO, SSO ensures that a satellite passes over any given point of the Earth's surface at the same local solar time. This consistency in lighting conditions is crucial for long-term monitoring and change detection.
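Through Kepler's third law, the altitudes above translate directly into orbital periods. Satellites a few hundred kilometres up complete an orbit in roughly 90 to 100 minutes, while a geostationary satellite takes one sidereal day. The sketch below assumes circular orbits and a spherical Earth with standard constants.

```python
import math

MU_EARTH = 398_600.4418  # km^3/s^2, Earth's standard gravitational parameter
R_EARTH = 6_378.137      # km, equatorial radius

def circular_orbit_period_s(altitude_km):
    """Orbital period from Kepler's third law, T = 2*pi*sqrt(a^3 / mu), for a circular orbit."""
    a = R_EARTH + altitude_km  # semi-major axis equals the orbit radius for a circular orbit
    return 2 * math.pi * math.sqrt(a**3 / MU_EARTH)

for name, altitude in [("LEO", 500), ("MEO (GPS-like)", 20_200), ("GEO", 35_786)]:
    print(f"{name:15s} {circular_orbit_period_s(altitude) / 3600:6.2f} h")
```

The geostationary result comes out at about 23.93 hours, one sidereal day. That period is what keeps such a satellite fixed over the same point on the equator.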

The choice of orbit significantly impacts the coverage patterns and data collection strategies for Earth observation missions. For instance, LEO satellites in polar orbits can achieve global coverage by utilising the Earth's rotation, while a constellation of satellites can reduce revisit times and increase temporal resolution. The Planet Information Platform must integrate data from various orbital configurations to ensure comprehensive and timely global coverage.

The synergy between diverse orbital configurations is the key to achieving a truly global and responsive Earth observation system. By leveraging the strengths of each orbit type, we can create a multi-layered view of our planet that is both detailed and dynamic.

Optimising coverage also involves considering the swath width of satellite sensors. The swath width, the width of the strip of the Earth's surface imaged during a satellite pass, is determined by the sensor's field of view and the satellite's altitude. For a given sensor, a higher altitude produces a wider swath but a coarser spatial resolution. The Planet Information Platform must balance these trade-offs to meet diverse user requirements for both broad coverage and high-resolution imagery.
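Two idealised relations for a nadir-pointing imager make the altitude trade-off explicit:

- swath width, from the field of view;
- ground sample distance (GSD), from the detector pixel pitch and focal length.

The sketch below ignores Earth curvature and off-nadir viewing. The optics in the example (2 degree field of view, 8 micrometre pixels, 5 m focal length) are hypothetical.

```python
import math

def swath_width_km(altitude_km, fov_deg):
    """Approximate swath width of a nadir-pointing sensor, ignoring Earth curvature:
    w = 2 * h * tan(FOV / 2)."""
    return 2 * altitude_km * math.tan(math.radians(fov_deg) / 2)

def nadir_gsd_m(altitude_m, pixel_pitch_m, focal_length_m):
    """Ground sample distance at nadir from similar triangles: GSD = h * p / f."""
    return altitude_m * pixel_pitch_m / focal_length_m

# The same optics flown higher: swath and GSD both grow in proportion to altitude.
for h_km in (500, 700):
    print(h_km, "km:", round(swath_width_km(h_km, 2.0), 1), "km swath,",
          round(nadir_gsd_m(h_km * 1000, 8e-6, 5.0), 2), "m GSD")
```

Because both quantities scale linearly with altitude for fixed optics, a wider swath cannot be obtained by climbing higher without a matching loss of ground detail.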

Another critical aspect of orbital considerations is the management of satellite constellations. By carefully orchestrating the placement and phasing of multiple satellites, we can achieve more frequent revisits and reduce the time between observations of any given location. This is particularly important for applications such as disaster response, where timely data is crucial.
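One widely used scheme for this kind of orchestration is the Walker Delta pattern, written i:t/p/f. It places t satellites in p equally spaced orbital planes with phasing factor f. The sketch below computes each satellite's slot: its plane, its right ascension of the ascending node (RAAN) and its mean anomaly. It illustrates constellation phasing and does not describe the design of any particular constellation. The shared inclination i and the altitude are left to the designer.

```python
def walker_delta(total, planes, phasing):
    """Slot assignments (plane, RAAN_deg, mean_anomaly_deg) for a Walker Delta t/p/f pattern.

    Planes are spread evenly in RAAN, satellites are spread evenly within each plane,
    and each successive plane is offset in mean anomaly by f * 360 / t degrees.
    """
    if total % planes != 0:
        raise ValueError("total satellites must be divisible by the number of planes")
    if not 0 <= phasing < planes:
        raise ValueError("phasing factor f must satisfy 0 <= f < planes")
    per_plane = total // planes
    slots = []
    for j in range(planes):
        raan = 360.0 * j / planes
        for k in range(per_plane):
            anomaly = (360.0 * k / per_plane + 360.0 * phasing * j / total) % 360.0
            slots.append((j, raan, anomaly))
    return slots

for slot in walker_delta(total=6, planes=3, phasing=1):
    print(slot)
```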

The integration of machine learning and AI algorithms within the Planet Information Platform adds another dimension to orbital considerations. These technologies can optimise tasking and data acquisition strategies, predicting areas of interest and dynamically adjusting satellite pointing to maximise the value of each orbital pass. This intelligent orchestration of satellite resources ensures that we capture the most relevant and timely data for our global monitoring efforts.
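Part of this orchestration can be posed as a classic optimisation problem. The sketch below rests on three assumptions:

- each candidate target offers an imaging window during a pass;
- each target has a predicted value (for instance, scored by a machine learning model);
- the sensor can image only one target at a time.

Under these assumptions, choosing the best set of targets is weighted interval scheduling, which dynamic programming solves exactly. The window times, values and target names below are hypothetical. The sketch ignores slew time, power and onboard storage.

```python
from bisect import bisect_right

def best_imaging_plan(windows):
    """Pick non-overlapping imaging windows within one pass to maximise total value.

    windows: list of (start_s, end_s, value, target_id). This is weighted interval
    scheduling, solved exactly by dynamic programming in O(n log n).
    """
    ws = sorted(windows, key=lambda w: w[1])  # order by end time
    ends = [w[1] for w in ws]
    best = [0.0] * (len(ws) + 1)  # best[i]: optimal value using the first i windows
    take = [False] * (len(ws) + 1)
    prev = [0] * (len(ws) + 1)    # prev[i]: how many earlier windows end before window i starts
    for i, (start, _end, value, _target) in enumerate(ws, start=1):
        prev[i] = bisect_right(ends, start, 0, i - 1)  # windows ending at or before `start`
        if value + best[prev[i]] > best[i - 1]:
            best[i], take[i] = value + best[prev[i]], True
        else:
            best[i] = best[i - 1]
    # Walk back through the table to recover which windows were chosen.
    plan, i = [], len(ws)
    while i > 0:
        if take[i]:
            plan.append(ws[i - 1][3])
            i = prev[i]
        else:
            i -= 1
    return best[-1], plan[::-1]

# Hypothetical access windows (seconds into the pass) with model-predicted values.
candidates = [(0, 10, 5.0, "A"), (5, 15, 7.0, "B"), (12, 20, 4.0, "C"), (16, 25, 6.0, "D")]
print(best_imaging_plan(candidates))
```

Slew time between targets could be approximated by extending each window's end time by the manoeuvre duration before solving.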

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution of satellite orbital strategies and their impact on global coverage capabilities]](https://images.wardleymaps.ai/wardleymaps/map_85a06e17-4f75-4ce5-ada6-bc6b2b4392ed.png)

As we look to the future, emerging technologies such as small satellite constellations and adaptive orbit control systems promise to revolutionise our approach to global coverage. These innovations will enable more flexible and responsive Earth observation capabilities, allowing the Planet Information Platform to adapt to changing global priorities and emerging environmental challenges.

The future of Earth observation lies not just in the number of satellites we deploy, but in our ability to orchestrate their movements and capabilities in harmony with the dynamic nature of our planet. It's about creating a responsive, intelligent network that can focus our observational power where and when it's needed most.

In conclusion, orbital considerations and coverage strategies are fundamental to the success of the Planet Information Platform. By leveraging a diverse array of orbital configurations, optimising constellation designs, and integrating intelligent tasking algorithms, we can achieve unprecedented global coverage and responsiveness in Earth observation. This comprehensive approach ensures that we can monitor and understand our planet's systems with the depth and agility required to address the complex challenges of the 21st century.

Data Collection and Transmission

Raw Data Acquisition

Raw data acquisition is a critical first step in the Earth observation process, serving as the foundation for the Planet Information Platform. This stage involves the collection of vast amounts of data from various satellite sensors, forming the basis for all subsequent analysis and insights. As we delve into this crucial aspect of Earth observation technologies, we'll explore the intricacies of data capture, the challenges faced, and the cutting-edge technologies employed to ensure high-quality, comprehensive data collection.

The process of raw data acquisition in Earth observation can be broadly categorised into three main components: sensor operation, data capture, and onboard processing. Each of these components plays a vital role in ensuring the quality and usability of the data collected.

- Sensor Operation: The activation and management of various satellite-based sensors

- Data Capture: The actual recording of Earth observation data

- Onboard Processing: Initial data handling and compression before transmission

Sensor Operation: Earth observation satellites are equipped with a variety of sensors, each designed to capture specific types of data. These may include optical sensors for visible light imagery, infrared sensors for heat detection, radar systems for all-weather imaging, and spectrometers for detailed atmospheric analysis. The operation of these sensors is carefully orchestrated to maximise data collection while managing power consumption and satellite resources.

The art of sensor operation lies in striking a balance between data quality, coverage, and satellite longevity. Each mission requires a bespoke approach to sensor management, tailored to its specific objectives and constraints.

Data Capture: Once sensors are activated, they begin the process of data capture. This involves converting physical phenomena – such as reflected light, heat signatures, or radar echoes – into digital signals that can be stored and processed. The resolution and frequency of data capture can vary widely depending on the sensor type and mission objectives. For instance, high-resolution optical imagery may be captured at specific intervals, while atmospheric sensors might collect data continuously.
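The conversion from a physical signal to a digital value can be illustrated with a linear quantiser and its inverse. Many missions publish per-band gain and offset coefficients so that users can convert digital numbers (DNs) back to radiance; the sketch below assumes that linear model, a known radiance range, and a fixed bit depth, all of which are illustrative rather than taken from any particular sensor.

```python
def quantise(radiance: float, l_min: float, l_max: float, bits: int) -> int:
    """Map a radiance in [l_min, l_max] onto an unsigned digital number (DN)."""
    levels = 2 ** bits - 1
    clipped = min(max(radiance, l_min), l_max)  # model detector floor and saturation
    scaled = (clipped - l_min) / (l_max - l_min) * levels
    return int(scaled + 0.5)  # round half up (scaled is never negative)

def dn_to_radiance(dn: int, l_min: float, l_max: float, bits: int) -> float:
    """Invert the linear model: radiance = gain * DN + offset."""
    gain = (l_max - l_min) / (2 ** bits - 1)
    offset = l_min
    return gain * dn + offset

if __name__ == "__main__":
    # Illustrative 12-bit sensor with a 0-100 radiance range (arbitrary units).
    for true_radiance in (-5.0, 50.0, 150.0):
        dn = quantise(true_radiance, 0.0, 100.0, 12)
        print(true_radiance, "->", dn, "->", round(dn_to_radiance(dn, 0.0, 100.0, 12), 3))
```

With 12 bits over a 0-100 range, one DN step is about 0.024 units, so in-range values round-trip with an error of at most half a step; out-of-range signals saturate at 0 or 4095. A higher bit depth buys finer radiometric resolution at the cost of more data per sample.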

One of the key challenges in data capture is managing the vast volumes of information generated. Modern Earth observation satellites can produce terabytes of raw data per day, necessitating sophisticated onboard storage systems and efficient data management protocols.
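A back-of-the-envelope estimate shows how quickly these volumes accumulate. The sketch assumes a push-broom imager whose along-track line rate equals ground-track speed divided by GSD; every input value (100 km swath, 5 m GSD, 7 km/s ground speed, 4 bands, 12 bits, imaging 20% of the day) is hypothetical and chosen only to illustrate the arithmetic.

```python
def raw_data_rate_bps(swath_km: float, gsd_m: float, ground_speed_km_s: float,
                      bands: int, bits_per_sample: int) -> float:
    """Uncompressed data rate of a push-broom imager, in bits per second."""
    pixels_per_line = swath_km * 1_000.0 / gsd_m            # cross-track samples
    lines_per_second = ground_speed_km_s * 1_000.0 / gsd_m  # along-track lines
    return pixels_per_line * lines_per_second * bands * bits_per_sample

def daily_volume_tb(rate_bps: float, imaging_fraction: float) -> float:
    """Raw volume per day in decimal terabytes, if imaging for `imaging_fraction` of the day."""
    return rate_bps * 86_400.0 * imaging_fraction / 8.0 / 1e12

if __name__ == "__main__":
    rate = raw_data_rate_bps(swath_km=100.0, gsd_m=5.0, ground_speed_km_s=7.0,
                             bands=4, bits_per_sample=12)
    print(f"raw rate: {rate / 1e9:.2f} Gbit/s")
    print(f"raw volume: {daily_volume_tb(rate, imaging_fraction=0.2):.2f} TB/day")
```

With these inputs the raw rate is about 1.34 Gbit/s, or roughly 2.9 TB per day before compression, for a single modest imager.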

Onboard Processing: To manage the enormous data volumes and prepare for transmission to ground stations, satellites perform initial onboard processing. This typically involves data compression, error checking, and preliminary formatting. Advanced satellites may also conduct some level of data filtering or prioritisation to ensure the most critical or time-sensitive information is transmitted first.
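The onboard steps named above (compression, error checking, formatting, and prioritisation) can be sketched with the Python standard library. Here `zlib` stands in for the dedicated compression schemes that flight systems typically use (for example those standardised by CCSDS), and the packet layout (sequence number, length, data, CRC-32) is a hypothetical format for illustration only.

```python
import heapq
import struct
import zlib

def make_packet(payload: bytes, sequence: int) -> bytes:
    """Compress a payload and frame it as: sequence (4 B) | length (4 B) | data | CRC-32 (4 B)."""
    data = zlib.compress(payload, level=6)
    header = struct.pack(">II", sequence, len(data))
    crc = zlib.crc32(header + data)  # lets the ground segment detect corrupted packets
    return header + data + struct.pack(">I", crc)

class DownlinkQueue:
    """Releases packets highest priority first; equal priorities go out in sequence order."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, bytes]] = []

    def push(self, packet: bytes, priority: int, sequence: int) -> None:
        # heapq is a min-heap, so the priority is negated to pop the most urgent packet first.
        heapq.heappush(self._heap, (-priority, sequence, packet))

    def pop(self) -> bytes:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

if __name__ == "__main__":
    queue = DownlinkQueue()
    routine = make_packet(b"\x00" * 4096, sequence=0)  # highly compressible test scene
    alert = make_packet(b"fire detected at tile 17", sequence=1)  # hypothetical alert message
    queue.push(routine, priority=1, sequence=0)
    queue.push(alert, priority=9, sequence=1)
    print("alert sent first:", queue.pop() == alert, "| routine packet bytes:", len(routine))
```

The time-sensitive alert leaves the queue before the older routine packet, which is the kind of prioritisation the paragraph describes.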

Onboard processing is the unsung hero of Earth observation. It's the critical link that transforms raw sensor data into manageable, transmittable information packages, setting the stage for all subsequent analysis and insights.

The raw data acquisition process is continually evolving, driven by advancements in sensor technology, onboard computing power, and data storage capabilities. Recent innovations include:

- Adaptive sensor operation algorithms that optimise data collection based on real-time conditions and mission priorities

- Multi-sensor fusion techniques that combine data from different sensors in real time, enhancing the richness and utility of the captured information

- Edge computing implementations that enable more sophisticated onboard processing, including preliminary AI-driven analysis and data prioritisation

- Advanced data compression algorithms that significantly reduce the volume of data requiring transmission with little or no loss of quality
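As a deliberately simple stand-in for the AI-driven prioritisation in the list above, the sketch below scores image tiles by the fraction of bright pixels (a crude cloud proxy) and queues the clearest tiles for downlink first. The thresholds and the idea that brightness alone indicates cloud are assumptions for illustration; operational cloud screening relies on multispectral tests or trained models.

```python
def cloud_fraction(tile: list[list[float]], brightness_threshold: float) -> float:
    """Share of pixels brighter than the threshold: a crude cloud-cover proxy."""
    pixels = [value for row in tile for value in row]
    if not pixels:
        return 0.0
    return sum(value > brightness_threshold for value in pixels) / len(pixels)

def prioritise_tiles(tiles: dict[str, list[list[float]]],
                     brightness_threshold: float = 0.6,
                     max_cloud: float = 0.5) -> list[str]:
    """Drop tiles above the cloud limit and order the rest clearest first."""
    scores = {name: cloud_fraction(tile, brightness_threshold) for name, tile in tiles.items()}
    kept = [name for name, score in scores.items() if score <= max_cloud]
    return sorted(kept, key=lambda name: scores[name])

if __name__ == "__main__":
    tiles = {  # hypothetical reflectance values in [0, 1]
        "tile_a": [[0.1, 0.2], [0.3, 0.9]],
        "tile_b": [[0.9, 0.95], [0.8, 0.7]],
        "tile_c": [[0.1, 0.1], [0.1, 0.1]],
    }
    print(prioritise_tiles(tiles))
```

This prints `['tile_c', 'tile_a']`: the fully bright `tile_b` is withheld, saving downlink capacity for tiles more likely to be useful.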

These advancements are crucial for the Planet Information Platform, as they directly impact the quality, quantity, and timeliness of the data available for analysis. By improving raw data acquisition capabilities, we enhance our ability to monitor and understand Earth systems with unprecedented detail and accuracy.

However, raw data acquisition also presents significant challenges that must be addressed to fully realise the potential of the Planet Information Platform. These include:

- Power management: Balancing the energy demands of high-performance sensors and onboard processing systems with the limited power available on satellites

- Data integrity: Ensuring the accuracy and reliability of captured data in the harsh space environment

- Bandwidth limitations: Optimising data transmission within the constraints of available communication channels

- Sensor calibration: Maintaining the accuracy and consistency of sensor measurements over extended periods

- Data security: Protecting sensitive Earth observation data from unauthorised access or interference
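Power management can be checked with a simplified orbit-average budget. The sketch assumes the solar array delivers constant power in sunlight and nothing in eclipse, that each load draws its duty-cycle-averaged power throughout the orbit, and that eclipse energy passes through a battery with a single round-trip efficiency. All load values are hypothetical.

```python
def orbit_average_load_w(loads: dict[str, tuple[float, float]]) -> float:
    """Sum of (power in watts x duty cycle) over all loads."""
    return sum(power_w * duty for power_w, duty in loads.values())

def power_margin_w(array_power_w: float, sunlit_fraction: float,
                   battery_efficiency: float, loads: dict[str, tuple[float, float]]) -> float:
    """Orbit-average generation minus orbit-average requirement, in watts.

    Sunlit loads run directly from the array; eclipse loads run from the battery,
    so the array must supply eclipse energy divided by the battery efficiency.
    """
    load_w = orbit_average_load_w(loads)
    generated = array_power_w * sunlit_fraction
    required = load_w * (sunlit_fraction + (1.0 - sunlit_fraction) / battery_efficiency)
    return generated - required

if __name__ == "__main__":
    loads = {  # name: (power when on in W, duty cycle), all hypothetical
        "bus": (80.0, 1.0),
        "onboard_computer": (40.0, 1.0),
        "imager": (150.0, 0.2),
        "x_band_transmitter": (120.0, 0.1),
    }
    print(f"orbit-average load: {orbit_average_load_w(loads):.0f} W")
    print(f"margin: {power_margin_w(400.0, 0.62, 0.9, loads):.1f} W")
```

A negative margin would mean the imager or transmitter duty cycles must be cut, which is the balance between data collection and limited onboard power described in the list.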

Addressing these challenges requires a multidisciplinary approach, combining expertise in satellite engineering, data science, and Earth system sciences. It also necessitates close collaboration between space agencies, private sector innovators, and the scientific community to drive continuous improvement in raw data acquisition capabilities.

The future of Earth observation lies not just in launching more satellites, but in revolutionising how we capture, process, and transmit data from space. Every advancement in raw data acquisition ripples through the entire Earth observation value chain, amplifying our ability to understand and protect our planet.

As we look to the future, emerging technologies such as quantum sensors, artificial intelligence-driven adaptive sampling, and inter-satellite data relay systems promise to further enhance our raw data acquisition capabilities. These advancements will enable the Planet Information Platform to provide even more comprehensive, timely, and actionable insights into the state of our planet, supporting critical decision-making across a wide range of domains, from climate change mitigation to disaster response and urban planning.

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution of raw data acquisition technologies and their position in the Earth observation value chain]](https://images.wardleymaps.ai/wardleymaps/map_8a99d173-a75d-45d6-9248-0e29a8985226.png)

In conclusion, raw data acquisition forms the bedrock of the Planet Information Platform, providing the essential inputs for all subsequent analysis and decision-making processes. By continually advancing our capabilities in this crucial area, we enhance our ability to monitor, understand, and ultimately protect our planet, paving the way for more informed and effective global stewardship.

Data Downlink and Ground Stations

In the realm of Earth observation and the Planet Information Platform, the process of data downlink and the role of ground stations are critical components in the journey from raw satellite data to actionable intelligence. This subsection delves into the intricate mechanisms and infrastructure that enable the seamless transmission of vast quantities of Earth observation data from orbiting satellites to terrestrial processing centres.

The importance of efficient and reliable data downlink systems cannot be overstated. As a senior consultant in this field once remarked, 'The most sophisticated Earth observation satellite is only as good as its ability to transmit data back to Earth.' This sentiment encapsulates the pivotal role that data downlink and ground stations play in the broader ecosystem of planetary monitoring and analysis.

Let us explore the key aspects of data downlink and ground stations in detail:

- Satellite-to-Ground Communication Protocols

- Ground Station Network Architecture

- Data Reception and Initial Processing

- Challenges and Innovations in Data Downlink

Satellite-to-Ground Communication Protocols:

The foundation of effective data downlink lies in robust communication protocols between satellites and ground stations. These protocols must account for the unique challenges of space-to-Earth transmission, including signal attenuation, atmospheric interference, and the Doppler effect due to satellite motion.
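The Doppler effect mentioned here is straightforward to quantify to first order: the received carrier shifts by the carrier frequency multiplied by the radial velocity and divided by the speed of light. The 8.2 GHz carrier and 6 km/s radial velocity below are illustrative values for an X-band pass from low Earth orbit; ground receivers must track this shift, and its rate of change, as the satellite rises and sets.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def doppler_shift_hz(carrier_hz: float, radial_velocity_m_s: float) -> float:
    """First-order (non-relativistic) Doppler shift.

    Positive radial velocity means the satellite is approaching, raising the received frequency.
    """
    return carrier_hz * radial_velocity_m_s / SPEED_OF_LIGHT_M_S

if __name__ == "__main__":
    carrier = 8.2e9  # illustrative X-band carrier
    for v in (6_000.0, 0.0, -6_000.0):  # approaching, closest approach, receding
        print(f"radial velocity {v:+.0f} m/s -> shift {doppler_shift_hz(carrier, v) / 1e3:+.1f} kHz")
```

The shift swings from about +164 kHz to about -164 kHz across the pass, passing through zero at closest approach.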

Modern Earth observation satellites typically transmit data in the X-band (nominally 8-12 GHz, with Earth observation downlinks concentrated in the 8.025-8.4 GHz allocation) or the Ka-band (nominally 26.5-40 GHz, although Earth observation downlinks use an allocation around 25.5-27 GHz). These bands offer wide bandwidths, allowing large volumes of data to be downlinked during brief overhead passes. However, they are also susceptible to atmospheric attenuation, particularly in adverse weather conditions.
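Two quick calculations help frame this paragraph: free-space path loss grows with both distance and frequency, and the data a pass can deliver is simply link rate multiplied by contact time. The sketch below shows both. The slant ranges, 800 Mbit/s rate, and 10-minute pass are illustrative assumptions, and the calculation deliberately omits the atmospheric and rain attenuation the paragraph mentions, which a full link budget would add on top.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss between isotropic antennas: 20*log10(4*pi*d*f/c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S)

def data_per_pass_gb(rate_mbps: float, pass_duration_s: float) -> float:
    """Data delivered during one contact, in decimal gigabytes."""
    return rate_mbps * 1e6 * pass_duration_s / 8.0 / 1e9

if __name__ == "__main__":
    carrier_hz = 8.2e9  # illustrative X-band carrier
    for label, slant_range_m in (("near zenith", 500e3), ("near horizon", 2_000e3)):
        loss = free_space_path_loss_db(slant_range_m, carrier_hz)
        print(f"{label}: FSPL {loss:.1f} dB")
    print(f"one 10-minute pass at 800 Mbit/s: {data_per_pass_gb(800.0, 600.0):.0f} GB")
```

Quadrupling the range adds about 12 dB of loss, and in this formula the loss rises by 20 dB per decade of frequency; in practice, higher-frequency links recover much of that through greater antenna gain for the same dish size.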

To mitigate these challenges, advanced error correction algorithms and adaptive coding and modulation techniques are employed. These ensure data integrity and optimise transmission rates based on real-time link conditions. As one leading expert in satellite communications noted, 'The evolution of these protocols has been a game-changer, enabling us to achieve near-lossless data transmission even in challenging environments.'
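Adaptive coding and modulation can be sketched as a table lookup: the link reports its current signal quality (here the symbol-energy-to-noise-density ratio, Es/N0), and the transmitter switches to the most efficient modulation and coding combination whose threshold, plus a safety margin, is still met. The table entries are illustrative; a real system would take its thresholds from the relevant standard (such as the CCSDS or DVB-S2 specifications) and its own link testing.

```python
from typing import NamedTuple, Optional

class ModCod(NamedTuple):
    name: str
    spectral_efficiency: float  # information bits per transmitted symbol
    required_esn0_db: float     # minimum Es/N0 for acceptable error rate (illustrative)

# Illustrative table, ordered from most robust to most efficient.
MODCOD_TABLE = [
    ModCod("QPSK 1/2", 1.0, 1.0),
    ModCod("QPSK 3/4", 1.5, 4.0),
    ModCod("8PSK 2/3", 2.0, 6.6),
    ModCod("16APSK 3/4", 3.0, 10.2),
]

def select_modcod(measured_esn0_db: float, margin_db: float = 1.0) -> Optional[ModCod]:
    """Return the most efficient ModCod whose threshold is met with `margin_db` to spare."""
    usable = [m for m in MODCOD_TABLE if measured_esn0_db - margin_db >= m.required_esn0_db]
    if not usable:
        return None  # link too poor even for the most robust mode (one policy: defer downlink)
    return max(usable, key=lambda m: m.spectral_efficiency)

if __name__ == "__main__":
    for esn0 in (12.0, 6.0, 1.5):  # e.g. clear sky, moderate fade, deep fade
        choice = select_modcod(esn0)
        print(esn0, "dB ->", choice.name if choice else "no usable mode")
```

Because bit rate equals symbol rate times spectral efficiency, moving from QPSK 1/2 to 16APSK 3/4 in clear weather triples the throughput at the same symbol rate, and the link steps back down automatically as rain fade lowers Es/N0.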

Ground Station Network Architecture:

The global network of ground stations forms the terrestrial backbone of the Planet Information Platform. This network is strategically designed to maximise coverage and minimise latency in data reception. Key considerations in ground station network architecture include:

- Geographical distribution to ensure global coverage

- Redundancy and load balancing capabilities

- Integration with high-speed terrestrial data networks

- Scalability to accommodate increasing data volumes
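Geographical distribution matters because a single station can see a satellite only within a limited circle. Under a spherical-Earth assumption, the Earth central angle λ from the station to the edge of that circle, for a satellite at altitude h and a minimum usable elevation ε, is λ = arccos(R cos ε / (R + h)) - ε, where R is the Earth's radius. The 500 km altitude and 10° elevation mask below are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical-Earth assumption

def visibility_central_angle_rad(altitude_km: float, min_elevation_deg: float) -> float:
    """Earth central angle from a station to the edge of its visibility circle."""
    eps = math.radians(min_elevation_deg)
    ratio = EARTH_RADIUS_KM * math.cos(eps) / (EARTH_RADIUS_KM + altitude_km)
    return math.acos(ratio) - eps

def visibility_radius_km(altitude_km: float, min_elevation_deg: float) -> float:
    """Ground distance from the station to the edge of its visibility circle."""
    return EARTH_RADIUS_KM * visibility_central_angle_rad(altitude_km, min_elevation_deg)

def surface_fraction(altitude_km: float, min_elevation_deg: float) -> float:
    """Share of Earth's surface inside the circle (spherical-cap area / sphere area)."""
    lam = visibility_central_angle_rad(altitude_km, min_elevation_deg)
    return (1.0 - math.cos(lam)) / 2.0

if __name__ == "__main__":
    for mask in (0.0, 10.0):
        print(f"mask {mask:>4.1f} deg: radius {visibility_radius_km(500.0, mask):.0f} km, "
              f"{surface_fraction(500.0, mask):.2%} of Earth's surface")
```

Under these assumptions, one station's circle covers only about 1.5% of the Earth's surface with a 10° mask, which is why global networks need many widely spaced sites.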

Modern ground station networks often leverage cloud computing infrastructure to enhance flexibility and scalability. This approach, known as 'Ground Station as a Service' (GSaaS), allows for more efficient resource allocation and enables smaller organisations to access advanced Earth observation capabilities without significant infrastructure investments.

The shift towards cloud-based ground station networks is democratising access to Earth observation data, opening up new possibilities for innovation and global collaboration.

Data Reception and Initial Processing:

Upon reception at the ground station, satellite data undergoes initial processing to prepare it for further analysis and distribution. This stage typically involves:

- Demodulation and decoding of the received signal

- Error checking and correction

- Decompression of data (if applicable)

- Metadata extraction and cataloguing

- Preliminary quality assessment
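Demodulation and decoding happen in the receiver hardware or software-defined radio, but the remaining steps in this list can be sketched as one function: verify the checksum, unpack the metadata header, decompress, and compute a simple quality indicator. The frame layout, the use of `zlib` and CRC-32, and the saturation check are hypothetical choices for illustration; operational systems follow formats such as the CCSDS packet and frame standards.

```python
import struct
import zlib
from dataclasses import dataclass

HEADER = struct.Struct(">HIdI")  # hypothetical: source id, sequence, UTC seconds, payload length
CRC = struct.Struct(">I")

@dataclass
class ReceivedProduct:
    source_id: int
    sequence: int
    timestamp_s: float
    samples: bytes
    saturated_fraction: float  # share of samples at the 8-bit maximum

def build_frame(source_id: int, sequence: int, timestamp_s: float, samples: bytes) -> bytes:
    """Test helper: build a frame in the hypothetical layout above."""
    payload = zlib.compress(samples)
    header = HEADER.pack(source_id, sequence, timestamp_s, len(payload))
    return header + payload + CRC.pack(zlib.crc32(header + payload))

def process_frame(frame: bytes) -> ReceivedProduct:
    """Error check, metadata extraction, decompression, and a basic quality metric."""
    if len(frame) < HEADER.size + CRC.size:
        raise ValueError("frame too short")
    body, trailer = frame[:-CRC.size], frame[-CRC.size:]
    (expected_crc,) = CRC.unpack(trailer)
    if zlib.crc32(body) != expected_crc:
        raise ValueError("CRC mismatch: frame corrupted")
    source_id, sequence, timestamp_s, length = HEADER.unpack_from(body)
    payload = body[HEADER.size:]
    if len(payload) != length:
        raise ValueError("payload length mismatch")
    samples = zlib.decompress(payload)
    saturated = samples.count(255) / len(samples) if samples else 0.0
    return ReceivedProduct(source_id, sequence, timestamp_s, samples, saturated)

if __name__ == "__main__":
    frame = build_frame(3, 42, 1.7e9, bytes([0, 255, 128, 255]))
    product = process_frame(frame)
    print(product.source_id, product.sequence, product.saturated_fraction)
```

Frames that fail the checksum are rejected before decompression, so corrupted data never reaches the catalogue; a real pipeline would log such frames and request retransmission or fill from another pass.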

The efficiency of this initial processing stage is crucial for minimising latency in the overall data pipeline. Advanced ground stations employ high-performance computing systems and parallel processing techniques to handle the incoming data streams in real time.

Challenges and Innovations in Data Downlink:

As Earth observation satellites become more sophisticated and generate increasingly large volumes of data, the field of data downlink faces ongoing challenges. These include:

- Bandwidth limitations in satellite-to-ground links

- Increasing demand for near-real-time data access

- Cybersecurity concerns in data transmission and reception

- Energy efficiency in both space and ground segments

To address these challenges, several innovative approaches are being explored and implemented:

- Optical communication links using laser technology for higher bandwidth

- On-board processing and data compression to reduce downlink requirements

- Inter-satellite links to create mesh networks for more flexible data routing

- Artificial Intelligence for adaptive resource allocation in ground station networks
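Routing over inter-satellite links can be illustrated with Dijkstra's shortest-path algorithm, treating each link's latency as its cost. This is a sketch over a single hypothetical snapshot of the network: real constellations change topology continuously as satellites move, so operational routing has to handle time-varying links, an area addressed by delay-tolerant networking approaches such as contact graph routing.

```python
import heapq

def lowest_latency_route(links: dict[str, dict[str, float]], source: str,
                         target: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm over link latencies (ms). Returns (total_ms, path) or (inf, [])."""
    best = {source: 0.0}
    previous: dict[str, str] = {}
    queue = [(0.0, source)]
    visited: set[str] = set()
    while queue:
        cost, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry; a cheaper route was already settled
        visited.add(node)
        if node == target:
            path = [node]
            while path[-1] != source:
                path.append(previous[path[-1]])
            return cost, path[::-1]
        for neighbour, latency in links.get(node, {}).items():
            new_cost = cost + latency
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    return float("inf"), []

if __name__ == "__main__":
    links = {  # hypothetical snapshot: node -> {neighbour: latency in ms}
        "SAT-A": {"SAT-B": 5.0, "GS-1": 40.0},
        "SAT-B": {"SAT-C": 5.0, "GS-1": 30.0},
        "SAT-C": {"GS-2": 8.0},
    }
    print(lowest_latency_route(links, "SAT-A", "GS-2"))
    print(lowest_latency_route(links, "SAT-A", "GS-1"))
```

In this snapshot, relaying through SAT-B reaches GS-1 faster than SAT-A's own direct link, which illustrates how a mesh can offer routes a single satellite could not.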

These innovations are pushing the boundaries of what's possible in Earth observation, enabling more frequent and detailed monitoring of our planet. As one senior government official involved in Earth observation programmes remarked, 'The advancements in data downlink technologies are not just incremental improvements – they're revolutionising our ability to understand and respond to global challenges in near-real-time.'

![Draft Wardley Map: [Insert Wardley Map illustrating the evolution of data downlink technologies and their position in the value chain of Earth observation systems]](https://images.wardleymaps.ai/wardleymaps/map_d3a9ad9b-f5c5-4a3b-9a48-47fb807aebbf.png)

In conclusion, the field of data downlink and ground stations represents a critical juncture in the Earth observation data pipeline. It bridges the gap between space-based sensors and terrestrial analysis systems, enabling the creation of a comprehensive Planet Information Platform. As we continue to push the boundaries of Earth observation technologies, innovations in this domain will play a pivotal role in enhancing our ability to monitor, understand, and sustainably manage our planet's resources.

Initial Processing and Storage